Michael Oberst

@MichaelOberst

Followers: 2K

Following: 5K

Media: 19

Statuses: 244

Assistant Professor of CS at @JohnsHopkins, Part-time Visiting Scientist @AbridgeHQ. Previously: Postdoc at @CarnegieMellon. PhD from @MIT_CSAIL.

Cambridge, MA

Joined August 2011

RT @niloofar_mire: 🧵 Academic job market season is almost here! There's so much rarely discussed—nutrition, mental and physical health, unc….

0

38

0

RT @MonicaNAgrawal: Excited to be here at #ICML2025 to present our paper on 'pragmatic misalignment' in (deployed!) RAG systems: narrowly "….

0

7

0

RT @matthewherper: Layoffs hit FDA’s Center for Devices and Radiological Health

statnews.com

Layoffs at the FDA appear to have hit the AI and digital health staff particularly hard. It also has a strained relationship with Musk’s company Neuralink.

0

7

0

An example of some recent work (my first last-author paper!) on rigorous re-evaluation of popular approaches to adapt LLMs and VLMs to the medical domain.

🧵 Are "medical" LLMs/VLMs *adapted* from general-domain models, always better at answering medical questions than the original models? In our oral presentation at #EMNLP2024 today (2:30pm in Tuttle), we'll show that surprisingly, the answer is "no".

0

0

7

Application Link: More information on my website:

michaelkoberst.com

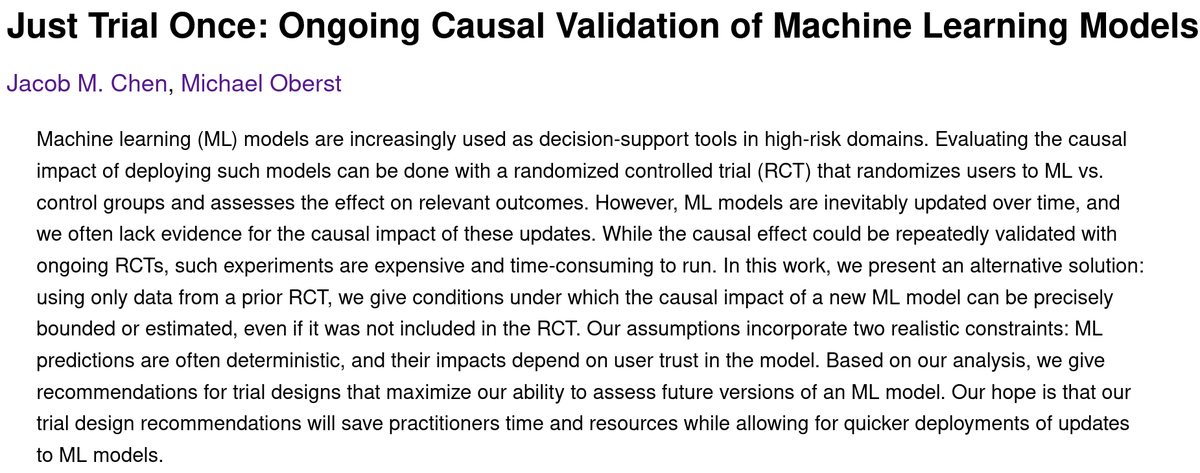

Computer Science, Statistics, Causality, and Healthcare

1

0

7

RT @danielpjeong: 🧵 Are "medical" LLMs/VLMs *adapted* from general-domain models, always better at answering medical questions than the ori….

arxiv.org

Several recent works seek to develop foundation models specifically for medical applications, adapting general-purpose large language models (LLMs) and vision-language models (VLMs) via continued...

0

36

0

RT @yisongyue: Just updated my Tips for CS Faculty Applications. Best of luck to everyone applying!

yisongyue.medium.com

This article is a collection of tips for improving your faculty application package (tailored to computer science). Most of the time…

0

72

0

RT @mdredze: The early 🦜 gets the 🪱. @JHUCompSci has a great opportunity for faculty hiring. Apply early and you could interview early and….

0

18

0

RT @anjalie_f: As application season rolls around again, here's your reminder that materials from my successful applications are available….

0

156

0

RT @mdredze: 🚨 Johns Hopkins @JHUCompSci is hiring faculty at all ranks! 1) Data Science and AI 🤖; 2) All other areas of CS 💻. We will doub….

0

45

0

RT @DanielKhashabi: Computer Science @ JHU is hiring in ALL areas: 🔑 Apply early for flexible scheduling + potenti….

0

17

0