Phillip Isola

@phillip_isola

Followers: 17K

Following: 5K

Media: 107

Statuses: 650

Associate Professor in EECS at MIT, trying to understand intelligence.

Joined December 2016

#BigGAN is so much fun. I stumbled upon a (circular) direction in latent space that makes party parrots, as well as other party animals:

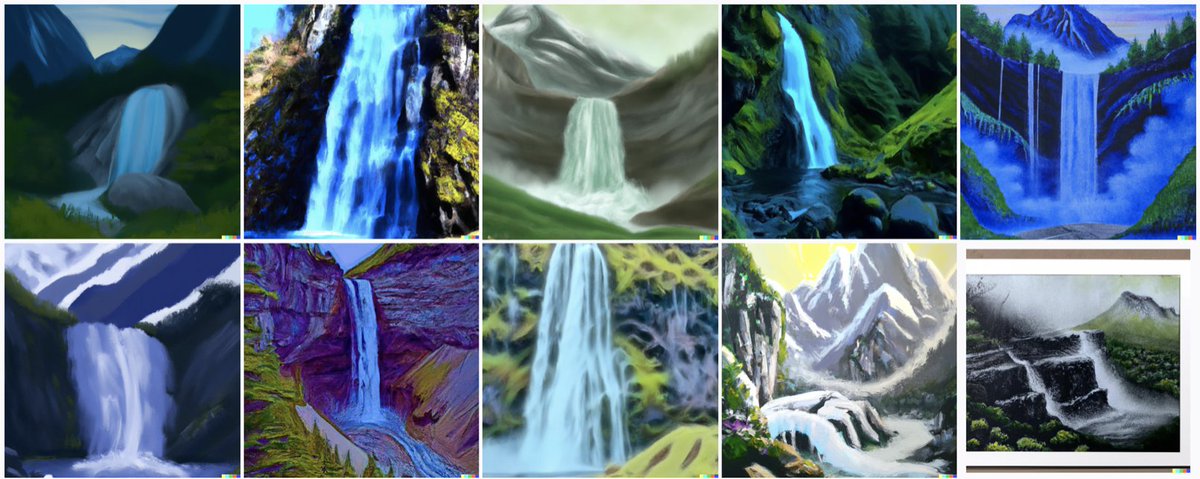
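
One way to read a "circular" direction is as a closed loop in latent space. If you walk around a circle spanned by two orthonormal directions, every frame stays the same distance from the starting latent, so the animation loops seamlessly. Below is a minimal NumPy sketch. It assumes a 128-dimensional Gaussian latent and randomly chosen directions `u` and `v`; the actual direction from the tweet is not given. The BigGAN generator call is deliberately left out rather than guessing at an API.

```python
import numpy as np


def latent_loop(z0, u, v, radius, num_frames):
    """Latents on a circle of the given radius around z0, spanned by u and v.

    u and v are assumed to be orthonormal; the last frame stops one step short of
    returning to the first, so the sequence can be played on repeat.
    """
    thetas = np.linspace(0.0, 2.0 * np.pi, num_frames, endpoint=False)
    # Row k is z0 + radius * (cos(theta_k) * u + sin(theta_k) * v).
    return z0 + radius * (np.cos(thetas)[:, None] * u + np.sin(thetas)[:, None] * v)


rng = np.random.default_rng(0)
dim = 128  # assumed latent size, for illustration only
z0 = rng.standard_normal(dim)
# QR of a random (dim x 2) matrix gives two orthonormal columns.
q, _ = np.linalg.qr(rng.standard_normal((dim, 2)))
u, v = q[:, 0], q[:, 1]
frames = latent_loop(z0, u, v, radius=2.0, num_frames=24)
# Each row of `frames` would be passed to the generator to render one frame.
```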

Language-conditional models can act a bit like decision transformers, in that you can prompt them with a desired level of "reward". E.g., want prettier #dalle creations? "Just ask" by adding "[very]^n beautiful". n=0: "A beautiful painting of a mountain next to a waterfall."

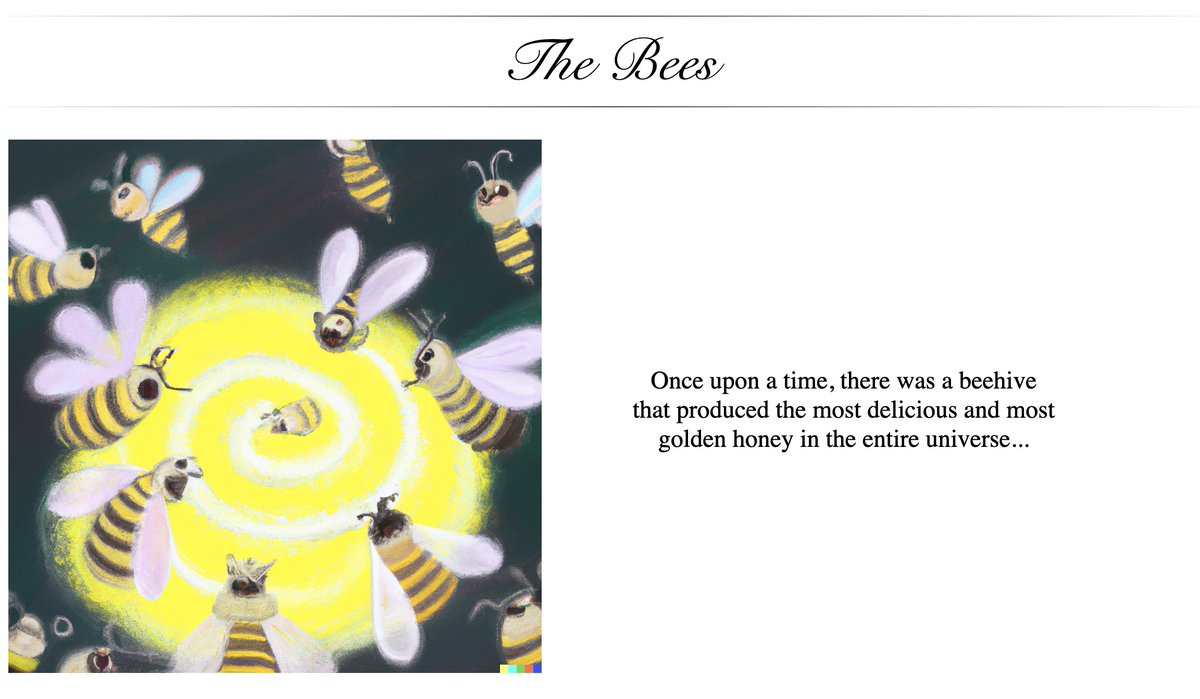
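
The prompt family is easy to generate programmatically. Below is a minimal sketch. The tweet only shows the n=0 prompt, so the wording used for larger n ("very very", separated by spaces and without commas) is an assumption.

```python
def very_n_prompt(n: int, subject: str = "painting of a mountain next to a waterfall") -> str:
    """Return 'A [very]^n beautiful <subject>.' with n repetitions of 'very'."""
    if n < 0:
        raise ValueError("n must be non-negative")
    adjective = "very " * n + "beautiful"
    return f"A {adjective} {subject}."


for n in range(3):
    print(n, very_n_prompt(n))
```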

Back in 2018 at OpenAI, a few of us wrote a story with gpt as an AI "co-author". We didn't have an AI illustrator back then, but now we sort of do, so I tried plugging the text into #dalle. Here is the result! “The Bees”, a short story by humans & AIs:

Sharing two new preprints on the science and theory of contrastive learning:
pdf: code: w/ @YonglongT, Chen Sun, @poolio, @dilipkay, Cordelia Schmid.
pdf: code: w/ @TongzhouWang.
1/n
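
The preprints themselves aren't summarized in the tweet. For readers new to the area, though, most of this line of work builds on an InfoNCE-style objective, in which each example's two views must pick each other out of a batch. Below is a minimal NumPy sketch of that generic loss. The temperature and the toy data are assumptions, not settings from either paper.

```python
import numpy as np


def info_nce(z1, z2, temperature=0.1):
    """InfoNCE loss: z1[i] and z2[i] are two views of example i (the positive pair);
    every other row of z2 acts as a negative for z1[i]."""
    # L2-normalize so dot products are cosine similarities.
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature  # (N, N) similarity matrix
    # Numerically stable row-wise log-softmax.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # The positive for row i sits on the diagonal.
    return -np.mean(np.diag(log_probs))


rng = np.random.default_rng(0)
z = rng.standard_normal((8, 16))
z_view2 = z + 0.05 * rng.standard_normal((8, 16))  # a slightly perturbed "second view"
aligned = info_nce(z, z_view2)
shuffled = info_nce(z, z_view2[::-1])  # reversing the rows breaks every positive pair
```

Because matched views have nearly identical embeddings, `aligned` should come out far lower than `shuffled`.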

More new work at ICCV: Training a GAN to explain a classifier. Idea: visualize how an image would have to change in order for the predicted class to change. Can reveal attributes and biases underlying deep net decisions. w/ big team @GoogleAI.—>

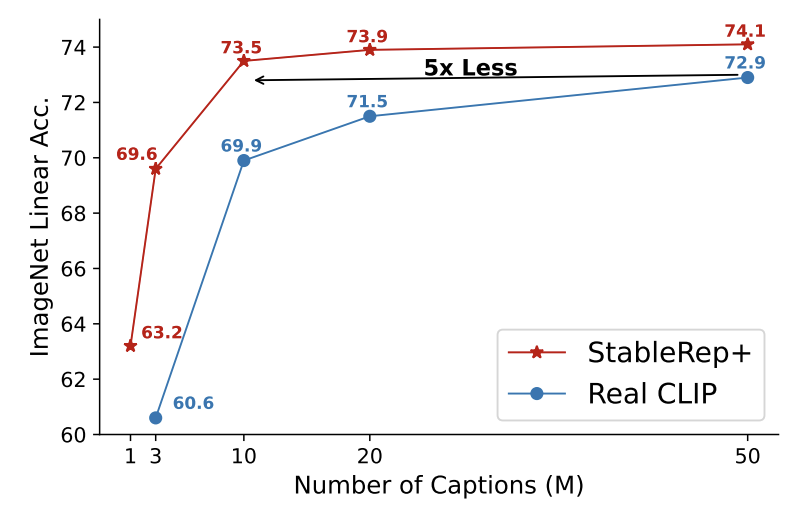

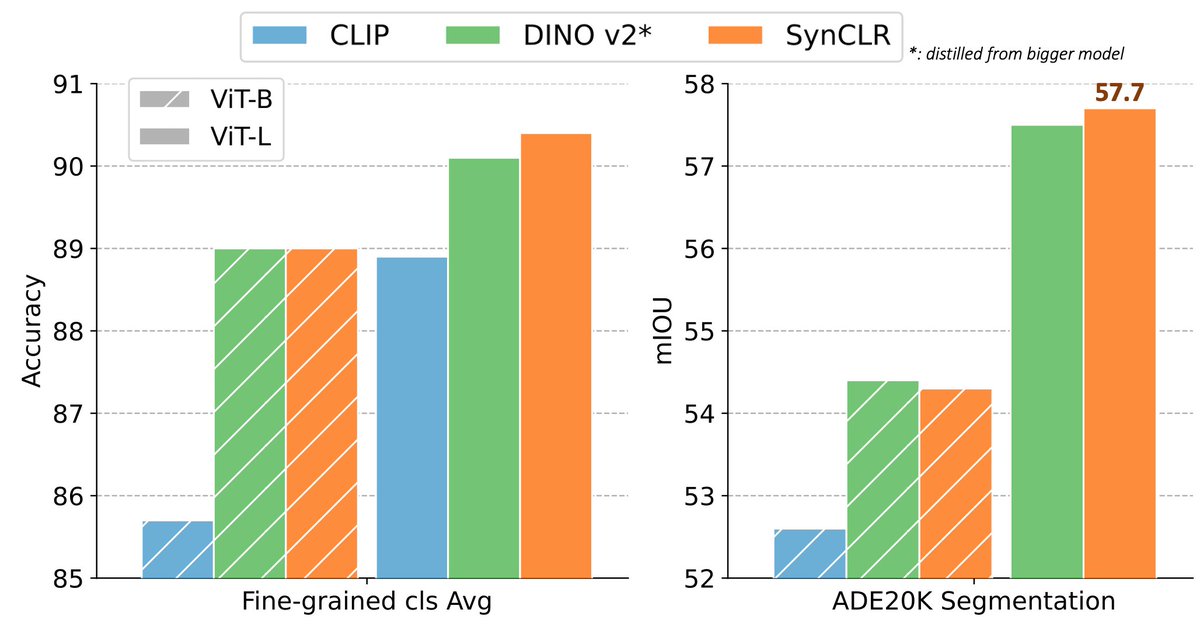

Should you train your vision system on real images or synthetic? In the era of Stable Diffusion, the answer seems to be: synthetic! One Stable Diffusion sample can be worth more than one real image. paper link:

New paper!! We show that pre-training language-image models *solely* on synthetic images from Stable Diffusion can outperform training on real images!! Work done with @YonglongT (Google), Huiwen Chang (Google), @phillip_isola (MIT) and Lijie Fan (MIT)!!

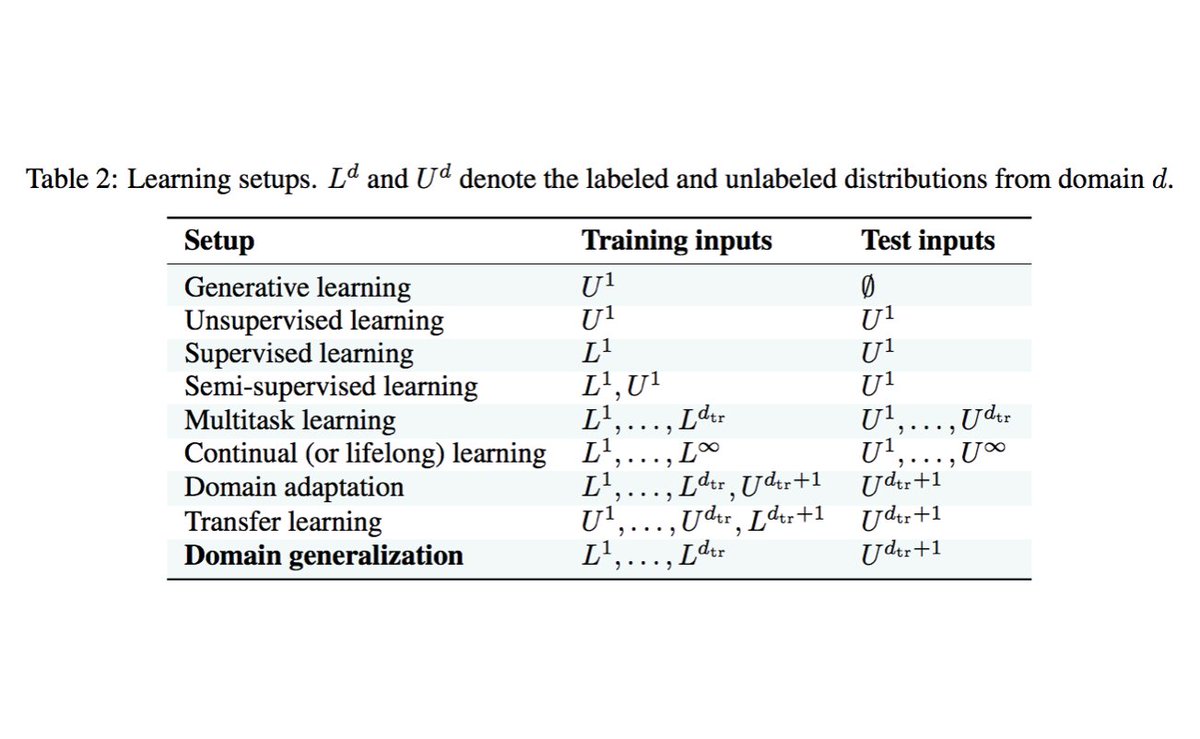

Beautiful summary of different learning problems from a new paper by @__ishaan and David Lopez-Paz (the rest of the paper also looks interesting).

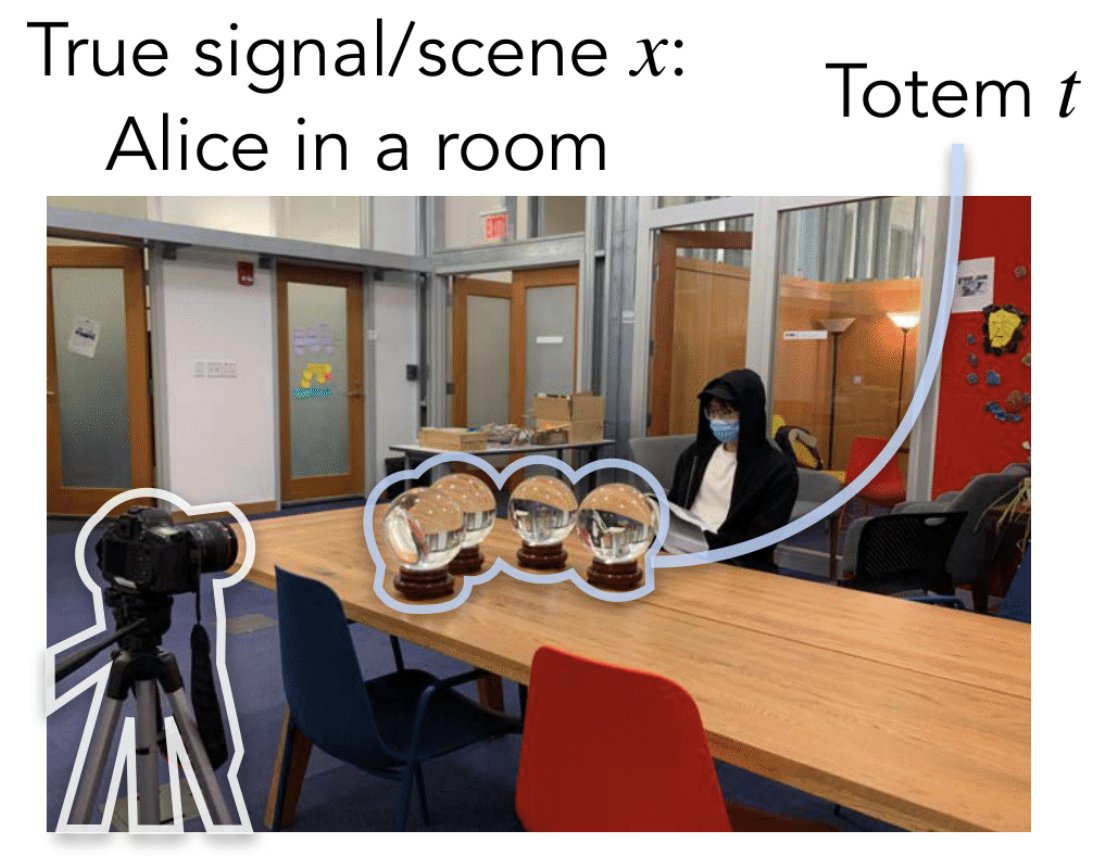

As a kid I was fascinated by the Search for Extraterrestrial Intelligence, SETI. Now we live in an era when it's becoming meaningful to search for "extraterrestrial life" not just in our universe but in simulated universes as well. This project provides new tools toward that dream:

Introducing ASAL: Automating the Search for Artificial Life with Foundation Models. Artificial Life (ALife) research holds key insights that can transform and accelerate progress in AI. By speeding up ALife discovery with AI, we accelerate our

New paper at #CoRL2023! "Distilled Feature Fields Enable Few-Shot Language-Guided Manipulation". How should robots represent the world around them? This paper's answer: as a field of foundation model features localized in 3D space. paper+code: 1/n

Very interesting work on a question I've been thinking a lot about: when can training a system on X' ~ G outperform training directly on X (where G is a gen model of X). They find that retrieving task-relevant images from X outperforms sampling task-relevant images from G. 1/n.

Will training on AI-generated synthetic data lead to the next frontier of vision models?🤔. Our new paper suggests NO—for now. Synthetic data doesn't magically enable generalization beyond the generator's original training set. 📜: Details below🧵(1/n).

Impressive results! This paper incorporates so many of my favorite things: representational convergence, GANs, cycle-consistency, unpaired translation, etc.

excited to finally share on arxiv what we've known for a while now: All Embedding Models Learn The Same Thing. embeddings from different models are SO similar that we can map between them based on structure alone. without *any* paired data. feels like magic, but it's real: 🧵

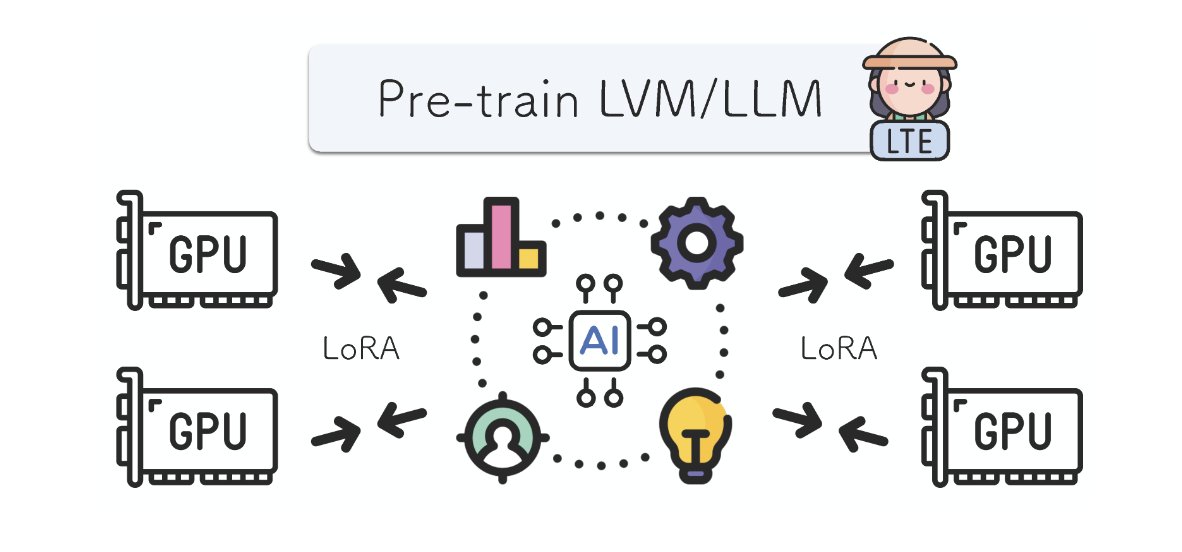

Distributed training using parallel LoRAs, infrequently synced. My fav part is the analogy to git, where lots of coders can work together on a project, coordinated by simple operators like pull, commit, merge. Potential implications toward community training of big models.

Presenting a method for training models from SCRATCH using LoRA:
💡 20x reduction in communication
💡 3x savings in memory
- Find out more:
- Code available to try out
- Scaling to larger models ongoing
- led by Jacob Huh!
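
The git analogy maps onto a simple structure. A layer keeps a base weight W plus a low-rank update B @ A, and only the small factors need to be communicated between workers before being merged into W. Below is a minimal NumPy sketch of one such layer. The initialization, the alpha/r scaling, and the `merge` step are common LoRA conventions used here as assumptions; they are not the quoted paper's exact algorithm. The 20x and 3x figures come from the paper, not from this toy.

```python
import numpy as np


class LoRALinear:
    """y = x @ (W + (alpha / r) * B @ A).T, where A and B are the small, trainable factors."""

    def __init__(self, d_in, d_out, r, alpha=1.0, seed=0):
        rng = np.random.default_rng(seed)
        self.W = rng.standard_normal((d_out, d_in)) / np.sqrt(d_in)  # base weight
        self.A = rng.standard_normal((r, d_in)) / np.sqrt(d_in)      # down-projection
        self.B = np.zeros((d_out, r))  # zero init, so the layer starts out equal to W
        self.scale = alpha / r

    def __call__(self, x):
        # x: (batch, d_in) -> (batch, d_out)
        return x @ (self.W + self.scale * self.B @ self.A).T

    def merge(self):
        """Fold the low-rank update into W and reset B (loosely, a 'commit')."""
        self.W = self.W + self.scale * self.B @ self.A
        self.B = np.zeros_like(self.B)


layer = LoRALinear(d_in=1024, d_out=1024, r=16)
full_params = layer.W.size                 # 1024 * 1024 = 1,048,576
lora_params = layer.A.size + layer.B.size  # 16 * 1024 + 1024 * 16 = 32,768
print(full_params / lora_params)           # 32.0
```

Merging leaves the layer's output unchanged, which is what lets workers sync only the small factors between merges.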

A big dream in AI is to create world models of sufficient quality that you can train agents within them. Classic simulators lack visual diversity and realism. GenAI lacks physical accuracy. But combining the two can work pretty well! paper:

For roboticists, one challenge towers above the others: there isn’t enough data. To accelerate the deployment of intelligent robots in the real world, MIT CSAIL’s "LucidSim" uses genAI & physics engines to create diverse & realistic virtual training grounds for robots. W/o any

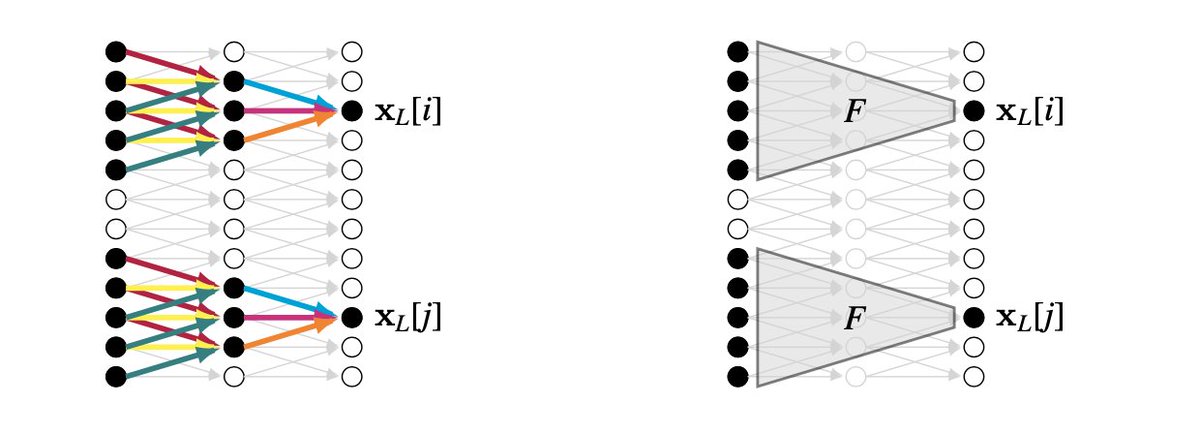

At ICLR this week, we are presenting our work on learning contrastive representations from generative models: . w/ @Ali_Design_1 @xavierpuigf @YonglongT. 1/n.

Slides of the talk I gave today at the #iccv19 synthesis workshop, on "Generative Models as Data Visualization": (covers and .

Great to see GANs becoming competitive on text-to-image. GANs are still my favorite kind of generative model :).

StyleGAN-T: Unlocking the Power of GANs for Fast Large-Scale Text-to-Image Synthesis. Significantly improves over previous GANs and outperforms distilled diffusion models in terms of sample quality and speed. abs: project page:

If you’re interested in learning about the theory behind Muon (a new optimizer), Jeremy has a great explainer in this thread. Also check out all his work leading to this (modula, modular duality, etc): It’s a beautiful theory and seems to work too!

It's been wild to see our work on Muon and the anthology start to get scaled up by the big labs. After @Kimi_Moonshot released Moonlight, people have asked whether Muon is compatible with muP. I wanted to write up an explainer, as there is something deeper going on here!. (1/8)
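
Muon's core step, as publicly described, replaces each weight matrix's momentum-averaged gradient with an orthogonalized version, roughly U V^T from its SVD. Below is a minimal sketch of that idea using the classic cubic Newton-Schulz iteration. The released Muon implementation uses a tuned higher-order polynomial and other details, so treat this as an illustration of the orthogonalization step, not as Muon itself.

```python
import numpy as np


def orthogonalize(G, steps=30):
    """Approximate U @ V.T, where G = U @ diag(S) @ V.T, via cubic Newton-Schulz.

    Dividing by the Frobenius norm puts every singular value in (0, 1]; the map
    s -> 1.5 s - 0.5 s**3 then pushes each nonzero singular value toward 1
    while leaving the singular vectors unchanged.
    """
    X = G / np.linalg.norm(G)  # Frobenius norm for a 2-D array
    for _ in range(steps):
        X = 1.5 * X - 0.5 * X @ X.T @ X
    return X


rng = np.random.default_rng(0)
Q1, _ = np.linalg.qr(rng.standard_normal((4, 4)))
Q2, _ = np.linalg.qr(rng.standard_normal((4, 4)))
G = Q1 @ np.diag([3.0, 2.0, 1.0, 0.5]) @ Q2.T  # a stand-in "gradient" with known SVD
O = orthogonalize(G)
```

`O` should match `Q1 @ Q2.T` to numerical precision: the update keeps the gradient's singular directions but sets all of its singular values to 1.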

If you are interested in GANs, and around Boston on 5/31, please come check out this workshop we are organizing: Talks on theory and practice, arts and applications. Also accepting poster submissions here:

SAVE THE DATE! We’re co-hosting a GANs workshop @MIT on Friday 5/31 with @MITIBMLab. Tutorials, talks and posters on one of the hottest topics in AI.

This post prompted some interesting reactions :) so let me quickly respond to a few:
1. 'but you gave it the answer' -- yes, partially, and that's the point: the hypothesis space gives it the _form_ of the answer.

A simple, fun example to refute the common story that ML can interpolate but not extrapolate: Black dots are training points. Green curve is true data generating function. Blue curve is best fit. Notice how it correctly predicts far outside the training distribution! 1/3
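
The original plot isn't recoverable from the text, so the function below is an assumption. It is a toy version of the same point: when the hypothesis space has the right *form* (here, a sine plus an offset), a least-squares fit on a narrow training range predicts correctly far outside it. A loosely constrained degree-5 polynomial is included for contrast.

```python
import numpy as np


def true_fn(x):
    # Assumed data-generating function for this toy (the tweet's actual curve is not given).
    return 2.0 * np.sin(x) + 0.5


x_train = np.linspace(0.0, 3.0, 10)  # training inputs only cover [0, 3]
y_train = true_fn(x_train)

# Constrained hypothesis space: y = a * sin(x) + b, fit by least squares.
features = np.stack([np.sin(x_train), np.ones_like(x_train)], axis=1)
(a, b), *_ = np.linalg.lstsq(features, y_train, rcond=None)

# A much less constrained hypothesis space for contrast: a degree-5 polynomial.
poly = np.polyfit(x_train, y_train, deg=5)

x_test = np.linspace(10.0, 50.0, 5)  # far outside the training range
sine_error = np.max(np.abs(a * np.sin(x_test) + b - true_fn(x_test)))
poly_error = np.max(np.abs(np.polyval(poly, x_test) - true_fn(x_test)))
```

The sine model recovers a ≈ 2 and b ≈ 0.5 and extrapolates essentially exactly. The polynomial also fits the training points closely, but its error explodes away from them.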

On my way to CVPR: Antonio, Bill, and I will be at the MIT Press booth on Thursday, 4-4:30pm. We will be happy to sign books if you want to bring yours! We will also raffle off a few copies.

Our computer vision textbook is released! Foundations of Computer Vision, with Antonio Torralba and Bill Freeman. It’s been in the works for >10 years. Covers everything from linear filters and camera optics to diffusion models and radiance fields. 1/4

Here's a tool that I think many may find useful: a *variable length* image tokenizer. 𝚕𝚎𝚗(𝚝𝚘𝚔𝚎𝚗𝚒𝚣𝚎(𝚒𝚖𝚊𝚐𝚎)) should depend on image complexity and task needs, and this tool supports both.

Current vision systems use fixed-length representations for all images. In contrast, human intelligence or LLMs (eg: OpenAI o1) adjust compute budgets based on the input. Since different images demand diff. processing & memory, how can we enable vision systems to be adaptive? 🧵

Ever wonder if all the fancy new contrastive objectives would be useful for regular old *supervised* learning? Turns out they can be!

New paper on *Supervised Contrastive Learning*: A new loss function to train supervised deep networks, based on contrastive learning! Our new loss performs significantly better than cross-entropy across a range of architectures and data augmentations.
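
The tweet doesn't spell out the loss. The sketch below assumes the commonly cited supervised-contrastive form: for each anchor, every other sample with the same label counts as a positive, all remaining samples act as negatives, and log-probabilities are averaged over each anchor's positives. It uses NumPy only, and the temperature and toy data are illustrative.

```python
import numpy as np


def supcon_loss(z, labels, temperature=0.1):
    """Supervised contrastive loss: other samples sharing the anchor's label are positives."""
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    n = z.shape[0]
    self_mask = np.eye(n, dtype=bool)
    # Exclude each anchor's similarity with itself from the softmax denominator.
    logits = np.where(self_mask, -np.inf, z @ z.T / temperature)
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    pos_mask = (labels[:, None] == labels[None, :]) & ~self_mask
    pos_counts = pos_mask.sum(axis=1)
    valid = pos_counts > 0  # anchors with no positives are skipped
    # Average log-probability over each anchor's positives.
    mean_log_prob_pos = np.where(pos_mask, log_probs, 0.0).sum(axis=1)[valid] / pos_counts[valid]
    return -np.mean(mean_log_prob_pos)


rng = np.random.default_rng(0)
labels = np.repeat(np.arange(3), 4)  # 3 classes, 4 samples each
centers = 3.0 * rng.standard_normal((3, 16))
z = centers[labels] + 0.1 * rng.standard_normal((12, 16))  # embeddings clustered by class
matched = supcon_loss(z, labels)
mismatched = supcon_loss(z, np.tile(np.arange(3), 4))  # labels that cut across the clusters
```

Embeddings that cluster by class give a lower loss than the same embeddings paired with labels that ignore the clusters.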

How much does #dalle know about 3D? Let's see by asking it to render stereo pairs. "An anaglyph photo of a cute lego elephant."
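
A red/cyan anaglyph packs a stereo pair into one RGB image: the red channel carries the left-eye view, and green/blue carry the right-eye view. Below is a minimal NumPy sketch of both directions, assuming that standard red/cyan convention. Splitting a generated image this way is one rough way to check whether its two "views" actually differ by a plausible disparity. The helper names are illustrative.

```python
import numpy as np


def compose_red_cyan(left, right):
    """Red channel from the left view, green and blue (cyan) from the right view."""
    if left.shape != right.shape or left.shape[-1] != 3:
        raise ValueError("expected two H x W x 3 images of the same shape")
    out = right.copy()
    out[..., 0] = left[..., 0]
    return out


def split_red_cyan(anaglyph):
    """Approximate grayscale left/right views from a red/cyan anaglyph."""
    left = anaglyph[..., 0].astype(float)
    right = anaglyph[..., 1:].astype(float).mean(axis=-1)
    return left, right


rng = np.random.default_rng(0)
left = rng.integers(0, 256, size=(4, 5, 3), dtype=np.uint8)
right = rng.integers(0, 256, size=(4, 5, 3), dtype=np.uint8)
ana = compose_red_cyan(left, right)
left_est, right_est = split_red_cyan(ana)
```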

More of our work on learning vision from synthetic data from generative models. This time both the images and the text are synthetic!.

🚀 Is the future of vision models Synthetic? Introducing SynCLR: our new pipeline leveraging LLMs & Text-to-image models to train vision models with only synthetic data! 🔥 Outperforming SOTAs like DinoV2 & CLIP on real images! SynCLR excels in fine-grained classification &

(1/2) New work! Contrastive Multiview Coding. Paper+Code: Different views of the world capture different info, but important factors are shared. Learning to capture the shared info --> SOTA reps. Saturday @ ICML self-sup workshop. w/ @YonglongT + @dilipkay.

Human imagination is compositional: e.g., you can picture the Notre Dame on a grassy field, surrounded by oaks. Turns out GANs can too, in their latent space! We study to what extent GANs can compose parts, and provide some fun tools for doing so in the paper+code below:

Excited to share our ICLR 2021 paper on image composition in GAN latent space! joint with @jswulff @phillip_isola. paper+code+colab: it's interactive and super fun to play with :)

Nice to see more theory on this. Paraphrasing: the only way to correctly colorize Pikachu yellow is to first implicitly recognize that you are looking at a picture of Pikachu!

Predicting What You Already Know Helps: Provable Self-Supervised Learning. We analyze how predicting parts of the input from other parts (missing patch, missing word, etc.) helps to learn a representation that linearly separates the downstream task. 1/2

How can we learn good visual reps from *environments*, rather than datasets? Requires exploring env to collect data to train rep. We study this as an adversarial game (curiosity) between an explorer and a contrastive learner. At ICCV! w/ @du_yilun @gan_chuang -->

"Google search" for generative models: with all the gazillions of models being trained now, I think tools like this will become more and more essential -- very exciting work!

Introducing Modelverse, an online model sharing and search platform, with the mission to help everyone share, discover, and study deep generative models more easily. Please share your models on Modelverse today. [1/4]

Different generative models (not just CNNs) tend to all make similar mistakes, especially at the patch level. This means you can train a fake detector on one kind of fake and it generalizes decently well to detecting fakes from held out models too! We analyze this ability here:

Just released our new project on using small patches to classify and visualize where artifacts occur in synthetic facial images, joint with @davidbau, Ser-Nam Lim, @phillip_isola . code+paper available at:

I found this to be a really nice perspective for understanding recent DL optimizers. My favorite kind of science is when someone finds a unifying theory that makes knowledge simpler than it was before, and I think this is in that category.

Laker and I wrote this anthology to show how if you disable the EMA in three of our best optimizers---Adam, Shampoo and Prodigy---then they are actually steepest descent methods under particular operator norms applied to layers. (1/3).
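
For two of the three optimizers, the claim is concrete enough to check numerically. Below is a minimal NumPy sketch. It assumes a single step with the EMA switched off (β1 = β2 = 0 for Adam, no accumulated preconditioner for Shampoo, ε = 0) and a square, full-rank gradient matrix; Prodigy is omitted. Under those assumptions, Adam's update reduces to sign(g), the steepest-descent direction under the max (ℓ∞) norm. Shampoo's update reduces to U V^T, the steepest-descent direction under the spectral norm.

```python
import numpy as np


def adam_direction_no_ema(g, eps=0.0):
    """Adam with beta1 = beta2 = 0: m = g and v = g**2, so m / sqrt(v) = sign(g)."""
    m, v = g, g ** 2  # no moving averages, hence no bias correction needed
    return m / (np.sqrt(v) + eps)


def inv_fourth_root(M):
    """M^(-1/4) for a symmetric positive-definite matrix M, via eigendecomposition."""
    w, Q = np.linalg.eigh(M)
    return Q @ np.diag(w ** -0.25) @ Q.T


def shampoo_direction_no_ema(G):
    """Shampoo with no accumulation: (G G^T)^(-1/4) G (G^T G)^(-1/4), which equals U V^T."""
    return inv_fourth_root(G @ G.T) @ G @ inv_fourth_root(G.T @ G)


g = np.array([0.3, -2.0, 1e-3])
adam_dir = adam_direction_no_ema(g)  # elementwise +/-1, matching np.sign(g)

rng = np.random.default_rng(0)
Q1, _ = np.linalg.qr(rng.standard_normal((4, 4)))
Q2, _ = np.linalg.qr(rng.standard_normal((4, 4)))
G = Q1 @ np.diag([3.0, 2.0, 1.0, 0.5]) @ Q2.T
U, S, Vt = np.linalg.svd(G)
shampoo_dir = shampoo_direction_no_ema(G)  # matches U @ Vt up to floating-point error
```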

Work we did on visualizing memorability, using GANs!.

What makes some images stick in the mind while others fade? Ask a GAN. via @MIT #icccv2019 @phillip_isola @AudeOliva @alexjandonian @L_Goetschalckx.

My favorite thing here is we are not just supervising our way to this result by imitating artist examples. Rather, good sketches emerge, in part, as a consequence of what may be the _objective_ of line drawings: to communicate geometry and meaning. Demo:

Our new work (with fun demo!) on making better line drawings by making them informative, as assessed by a neural network's ability to infer depth and semantics. With Caroline Chan and @phillip_isola

@docmilanfar I agree this is one mode for success. But I think you can also be successful in other modes. I think my own career, which has had some success, is more often in the mode of jumping around from topic to topic.

Revisiting this idea with GPT-4! Prompt: "When I type “eli[N,M] X”, please explain X like I’m age N, using M references to movies. Respond with one sentence." Now maybe it can help us all understand how GPT-4 works. 🧵

Now trying out parameterized natural language commands in ChatGPT. a bit like defining a function F(X;n) where n is a parameter. Here is the prompt: 1/n

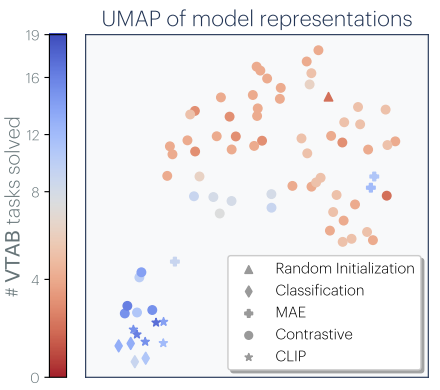
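
In the tweet, the model itself is told how to interpret the command. The same "function with parameters" idea can also be made explicit on the client side, by expanding the command into a plain instruction before sending it. Below is a minimal sketch. The regex, the `expand_eli` name, and the exact expanded wording are illustrative assumptions adapted from the quoted prompt.

```python
import re

# Wording adapted from the quoted prompt; N is the age, M the number of movie references.
TEMPLATE = (
    "Explain {topic} like I'm age {age}, using {refs} references to movies. "
    "Respond with one sentence."
)
COMMAND = re.compile(r"^eli\[(\d+),\s*(\d+)\]\s+(.+)$")


def expand_eli(command: str) -> str:
    """Expand 'eli[N,M] X' into an explicit instruction, like evaluating F(X; N, M)."""
    match = COMMAND.match(command.strip())
    if match is None:
        raise ValueError(f"not an eli[N,M] command: {command!r}")
    age, refs, topic = match.groups()
    return TEMPLATE.format(topic=topic, age=int(age), refs=int(refs))


print(expand_eli("eli[5,2] how GPT-4 works"))
```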

Super cool results on generative representation learning; thought-provoking about generative versus contrastive. Generative tries to model all info, which may make it less efficient, but perhaps sufficient in the end:

Transformers trained to predict pixels generate plausible completions of images and learn excellent unsupervised image representations!. To compensate for their lack of 2d prior knowledge, they are more expensive to train.

This is very intriguing, suggestive of the underlying sameness of so many problems. Reminds me of the Feynman quote: "Nature uses only the longest threads to weave her patterns, so each small piece of her fabric reveals the organization of the entire tapestry."

What are the limits to the generalization of large pretrained transformer models? We find minimal fine-tuning (~0.1% of params) performs as well as training from scratch on a completely new modality! with @_kevinlu, @adityagrover_, @pabbeel. paper: 1/8

@RRKRahul96 perhaps but I think similar principles help explain the extrapolation properties of modern neural nets. CNNs and transformers extrapolate in part due to the extreme constraints they place on the hypothesis space.

Really cool new high-res + editable version of #pix2pix from nvidia and my colleague @junyanz89:

Really thought-provoking work! In determining what makes a good representation, it might be the journey that matters not the destination.

Excited to share our position paper on the Fractured Entangled Representation (FER) Hypothesis! We hypothesize that the standard paradigm of training networks today — while producing impressive benchmark results — is still failing to create a well-organized internal.

New work to appear at NeurIPS: How can we get agents to communicate meaningfully with each other? Simple idea: just have them broadcast compressed reps of their obs. Makes decentralized coordination much easier! --> w/ @ToruO_O J Huh C Stauffer S Lim

Super cool new paper/framework from @jxbz Tim Large et al. (in which I had a small role). Enables tuning lr on small model, then using same value on big model. Hopefully helps eliminate wasteful lr sweeps on big models.

New paper and pip package: modula: "Scalable Optimization in the Modular Norm" 📦 📝 We re-wrote the @pytorch module tree so that training automatically scales across width and depth.

These are beautiful. It's interesting how the objects seem to have their own unique style, a bit distinct from other CLIP/NeRF styles. I'd like to play a video game rendered in this style.

Zero-Shot Text-Guided Object Generation with Dream Fields. abs: project page: We combine neural rendering with multi-modal image and text representations to synthesize diverse 3D objects solely from natural language descriptions
