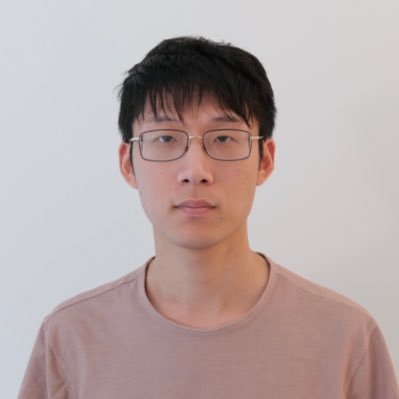

Taishi Nakamura

@Setuna7777_2

Followers

2K

Following

8K

Media

19

Statuses

2K

On the job market | Working on scalable and efficient LLM (MoE pretraining, RL, reasoning). CS MS at @sciencetokyo_en Intern @SakanaAILabs

Joined October 2017

I won’t make it to ICML this year, but our work will be presented at the 2nd AI for Math Workshop @ ICML 2025 (@ai4mathworkshop). Huge thanks to my co‑author @SisForCollege for presenting on my behalf. Please drop by if you’re around!

1

8

48

ICLR authors, want to check if your reviews are likely AI generated? ICLR reviewers, want to check if your paper is likely AI generated? Here are AI detection results for every ICLR paper and review from @pangramlabs! It seems that ~21% of reviews may be AI?

14

58

300

seems like the new MoEs by @arcee_ai are coming soon, super excited for this release lfg
here is a recap of the modeling choice according to the transformers PR:
> MoE (2 shared experts, top-k=6, 64 total experts, sigmoid routing)
> GQA with gated attention
> NoPE on the global

github.com

Summary This PR adds support for the AFMoE (Arcee Foundational Mixture of Experts) model architecture for the upcoming Trinity-Mini and Trinity-Nano releases. AFMoE is a decoder-only transformer mo...
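The routing setup recapped in the post above (sigmoid routing, top-k=6, 64 experts, 2 shared experts) can be illustrated with a minimal sketch. This is not the AFMoE implementation from the PR: the function name `route_token`, the random logits, the reading of "64 total experts" as the routed pool, and the renormalization of the selected weights are all assumptions made for illustration.

```python
import math
import random

NUM_ROUTED_EXPERTS = 64  # "64 total experts" in the post; treated here as the routed pool (assumption)
TOP_K = 6                # "top-k=6" in the post
NUM_SHARED_EXPERTS = 2   # "2 shared experts" in the post; these run for every token and are not routed


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def route_token(router_logits: list[float], top_k: int = TOP_K) -> list[tuple[int, float]]:
    """Return (expert_index, weight) pairs for a single token (hypothetical helper)."""
    # Sigmoid routing: each expert gets an independent score in (0, 1),
    # rather than competing through a softmax over all experts.
    scores = [sigmoid(z) for z in router_logits]
    # Keep the top_k highest-scoring experts.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Assumption: renormalize the selected scores so the weights sum to 1.
    total = sum(scores[i] for i in top)
    return [(i, scores[i] / total) for i in top]


rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_ROUTED_EXPERTS)]
assignment = route_token(logits)
for expert, weight in assignment:
    print(f"routed expert {expert:2d}  weight {weight:.3f}")
print(f"plus {NUM_SHARED_EXPERTS} shared experts applied to every token")
```

Under these assumptions each token activates 6 routed experts plus the 2 shared ones, so only a small fraction of the expert parameters is used per token.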

10

11

123

SIMA 2 is our most capable AI agent for virtual 3D worlds. 👾🌐 Powered by Gemini, it goes beyond following basic instructions to think, understand, and take actions in interactive environments – meaning you can talk to it through text, voice, or even images. Here’s how 🧵

388

1K

6K

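I turned part of the debugging work I did in early October into a blog post! I hope it gives you a feel for the work that goes on behind the scenes of LLM development. Debugging work behind the scenes of LLM development: PyTorch DCP | Kazuki Fujii https://t.co/S30aNeBdbg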

#zenn

zenn.dev

1

41

220

In my experiments as well, I was able to confirm that FP16 has very small differences in logits between rollout and training.

🚀 Excited to share our new work!
💊 Problem: The BF16 precision causes a large training-inference mismatch, leading to unstable RL training.
💡 Solution: Just switch to FP16.
🎯 That's it.
📰 Paper: https://t.co/AjCjtWquEq
⭐️ Code: https://t.co/hJWSlch4VN
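One way to see why the number format matters here: BF16 keeps 7 mantissa bits while FP16 keeps 10, so BF16 rounds the same value more coarsely. The sketch below is an illustration only, not code from the linked paper or repository. It emulates both formats with the standard library, and the helper names and sample values are made up for the example.

```python
import struct


def round_to_fp16(x: float) -> float:
    """Round to IEEE half precision (5 exponent bits, 10 mantissa bits)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def round_to_bf16(x: float) -> float:
    """Round to bfloat16 (8 exponent bits, 7 mantissa bits).

    Emulated by keeping the top 16 bits of the float32 encoding with
    round-to-nearest-even. NaN and infinity are not handled in this sketch.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # rounding bias for the 16 dropped bits
    bits &= 0xFFFF0000                   # drop the low 16 bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


# Hypothetical sample values, chosen to sit well inside the FP16 range.
samples = [0.1234567, 1.001, 3.14159, -2.71828]
for x in samples:
    fp16_err = abs(round_to_fp16(x) - x)
    bf16_err = abs(round_to_bf16(x) - x)
    print(f"x={x:+.7f}  fp16 error={fp16_err:.2e}  bf16 error={bf16_err:.2e}")
```

The trade-off is range: FP16 has only 5 exponent bits, so values outside roughly ±65504 overflow, whereas BF16 shares the float32 exponent range.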

1

2

5

We've just finished some work on improving the sensitivity of Muon to the learning rate, and exploring a lot of design choices. If you want to see how we did this, follow me... 1/x (Work led by the amazing @CrichaelMawshaw)

6

23

188

I also worked on model evaluation, supported experimental design, and led visualization and analysis for this work! Looking forward to contributing to the next steps! I will stay involved in future development, so please keep an eye on what comes next!

We’re releasing SwallowCode-v2 & SwallowMath-v2 — two high-quality, Apache-2.0 licensed datasets for mid-stage pretraining. https://t.co/mPSfrbuwvc

https://t.co/LFWRGNzKUo Details in the thread 🧵

0

3

11

dllm is also on the way!

🚀 Introducing SGLang Diffusion: bringing SGLang’s high-performance serving to diffusion models.
⚡️ Up to 5.9× faster inference
🧩 Supports major open-source models: Wan, Hunyuan, Qwen-Image, Qwen-Image-Edit, Flux
🧰 Easy to use via OpenAI-compatible API, CLI & Python API

0

2

39

Thoughts on Kimi K2 Thinking
Congrats to the Moonshot AI team on the awesome open release. For close followers of Chinese AI models, this isn't shocking, but more inflection points are coming. Pressure is building on US labs with more expensive models. https://t.co/10yLWcxPld

17

70

537

Science is best shared! Tell us about what you’ve built or discovered with Tinker, so we can tell the world about it on our blog. More details at

thinkingmachines.ai

Announcing Tinker Community Projects

37

39

361

Kimi K2 Thinking just launched on Product Hunt! 🥳 Not chasing votes, just using PH as a clean milestone log for our model updates. :) Huge thanks to the helpful team from @ProductHunt

https://t.co/IlOB3WgI3i

producthunt.com

🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%)
🔹 Executes up to 200–300 sequential tool calls without human interference
🔹 Excels in reasoning, agentic search, and coding
🔹 256K context window

21

13

362

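We have released v2 of SwallowCode and SwallowMath. Using these datasets for mid-stage training gives performance in coding and math that matches or exceeds training on other datasets. The license is now Apache 2.0, which makes them easier to use. See the thread by Fujii (@okoge_kaz) for details.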

We’re releasing SwallowCode-v2 & SwallowMath-v2 — two high-quality, Apache-2.0 licensed datasets for mid-stage pretraining. https://t.co/mPSfrbuwvc

https://t.co/LFWRGNzKUo Details in the thread 🧵

0

19

109

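We have released the math and code datasets used to develop the latest SwallowProject models! It has been quite a while since our last model release, but we are continuing to develop strong bilingual (Japanese and English) LLMs. We expect to release the model once its performance reaches the required level. Please stay tuned.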

We’re releasing SwallowCode-v2 & SwallowMath-v2 — two high-quality, Apache-2.0 licensed datasets for mid-stage pretraining. https://t.co/mPSfrbuwvc

https://t.co/LFWRGNzKUo Details in the thread 🧵

0

5

23

We’re releasing SwallowCode-v2 & SwallowMath-v2 — two high-quality, Apache-2.0 licensed datasets for mid-stage pretraining. https://t.co/mPSfrbuwvc

https://t.co/LFWRGNzKUo Details in the thread 🧵

4

38

150

Leaving Meta and PyTorch
I'm stepping down from PyTorch and leaving Meta on November 17th. tl;dr: Didn't want to be doing PyTorch forever, seemed like the perfect time to transition right after I got back from a long leave and the project built itself around me. Eleven years

501

582

11K

to continue the PipelineRL glazing, @finbarrtimbers implemented PipelineRL for open-instruct a little bit ago and it ended up being probably the single biggest speedup to our overall pipeline. We went from 2-week long RL runs to 5-day runs, without sacrificing performance

Don't sleep on PipelineRL -- this is one of the biggest jumps in compute efficiency of RL setups that we found in the ScaleRL paper (also validated by Magistral & others before)! What's the problem PipelineRL solves? In RL for LLMs, we need to send weight updates from trainer to

6

35

229

1/3 🚬 Ready to smell your GPUs burning? Introducing MegaDLMs, the first production-level library for training diffusion language models, offering 3× faster training speed and up to 47% MFU. Empowered by Megatron-LM and Transformer-Engine, it offers near-perfect linear

5

42

149

Despite all the big funding rounds and flashy demos in US robotics, K-Scale’s inability to raise more money should worry us We're at risk of replaying the LLM story all over again in robotics: - Chinese companies are going open-source and collaborating across the value chain

K-scale cancels orders and refunds deposits for kbot. I thought all the VCs were excited about US-based robotics, what happened?

31

40

335