Arcee.ai

@arcee_ai

Followers: 4K

Following: 2K

Media: 283

Statuses: 655

Optimize cost & performance with AI platforms powered by our industry-leading SLMs: Arcee Conductor for model routing, & Arcee Orchestra for agentic workflows.

San Francisco

Joined September 2023

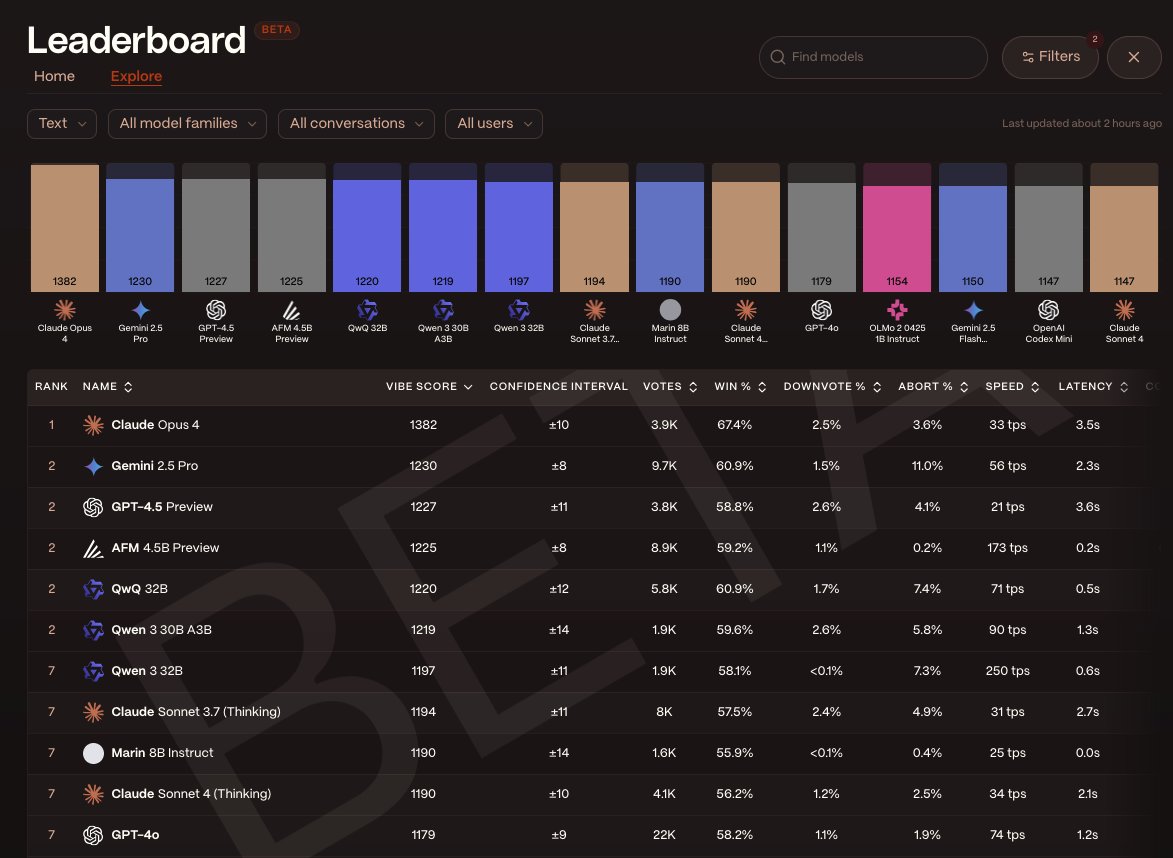

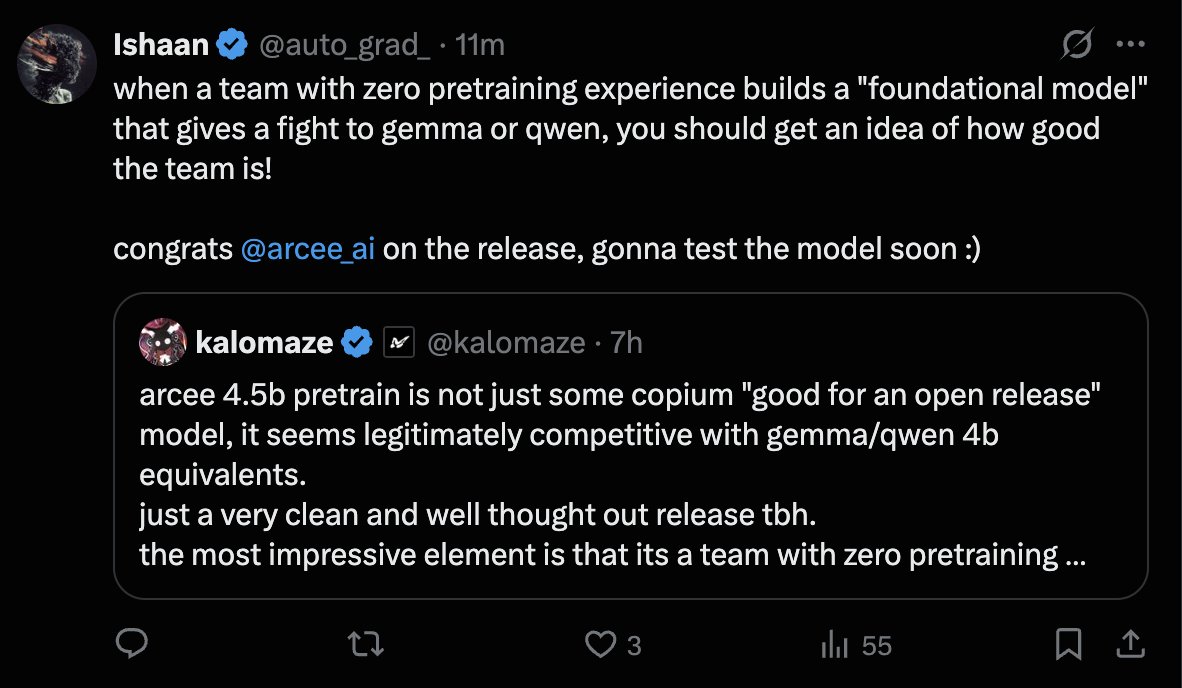

We knew it all along, but it's great to get user validation: through real-user usage and evaluation, several of our small models (Maestro, Coder, and AFM-4.5B-Preview) are topping the charts on @yupp_ai, the only platform that determines AI quality through real-user pairwise

1

3

27

As generative AI becomes increasingly central to business applications, the cost, complexity, and privacy concerns associated with language models are becoming significant. At @arcee_ai, we’ve been asking a critical question: Can CPUs actually handle the demands of language

1

3

21

RT @julsimon: In this new video, I introduce two new research-oriented models that @arcee_ai recently released on @huggingface. Homu…

0

1

0

Save the date! A week from now, please join us live to discover how @Zerve_AI is leveraging our model routing solution, Arcee Conductor, to improve its agentic platform for data science workflows. This should be a super interesting discussion, and of course, we'll do demos!

1

1

7

RT @sjoshi804: Congratulations to the @datologyai team on powering the data for AFM-4B by @arcee_ai - competitive with Qwen3 - using way wa…

0

1

0

RT @arcee_ai: Last week, we launched AFM-4.5B, our first foundation model. In this post by @chargoddard, you will learn how we extended t…

0

33

0

RT @teortaxesTex: You can't overstate how impressive this is. Arcee took one of the strongest base models, GLM-4, a product of many years o…

0

26

0

Last week, we launched AFM-4.5B, our first foundation model. In this post by @chargoddard, you will learn how we extended the context length of AFM-4.5B from 4k to 64k through aggressive experimentation, model merging, distillation, and a concerning amount of soup. Bon

6

33

190