elie

@eliebakouch

Followers

10K

Following

15K

Media

465

Statuses

4K

Training LLMs (now: @huggingface) anon feedback: https://t.co/JmMh7Sfvxd

Joined January 2024

Training LLMs end to end is hard. Very excited to share our new blog (book?) that covers the full pipeline: pre-training, post-training and infra. 200+ pages of what worked, what didn’t, and how to make it run reliably https://t.co/iN2JtWhn23

123

897

6K

introducing X Wrapped 2025: visualize your posts as a github contribution graph. roast your X persona. boast your stats! link below 👇🏻

45

13

145

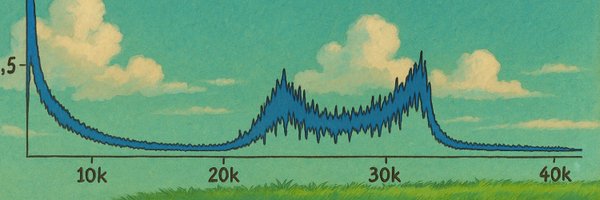

(someone told me there might not be 40k gpus available all the time, which makes sense)

0

0

2

just realizing that mistral cluster will be ~2x smaller than poolside cluster??? wtf

these were trained on ~3k h200s, a practice cluster, and yet, sota zone. mistral’s 18k gb200 cluster comes online soon. today’s releases are a warmup for the mistral 4 family. it will be an interesting few months for frontier open models

3

0

35

nanoGPT - the first LLM to train and inference in space 🥹. It begins.

We have just used the @Nvidia H100 onboard Starcloud-1 to train the first LLM in space! We trained the nano-GPT model from Andrej @Karpathy on the complete works of Shakespeare and successfully ran inference on it. We have also run inference on a preloaded Gemma model, and we

211

444

6K

1/5 🚀Apriel-1.6-15B-Thinker: a 15B multimodal reasoner scoring 57 on the Artificial Analysis Intelligence Index - approaching the performance of ~200B-scale frontier models while remaining an order of magnitude smaller. 🧠Model weights: https://t.co/GE22SOIBfT 📄Blog:

9

49

200

let's go! glad to see more labs releasing part of their training data

🔥 Ultra-FineWeb-en-v1.4 is coming! 2.2T tokens fully open-sourced! The core training fuel for MiniCPM4 / 4.1, fully updated based on FineWeb v1.4.0: 🆕 What's New 1️⃣ Fresher Data: Added CommonCrawl snapshots from Apr 2024 - Jun 2025 to capture the latest world knowledge. 2️⃣

0

1

36

what a pace. cant wait to see their further scaled up model using moe. https://t.co/IJng9yfZ3V

Motif Technologies, a 🇰🇷 Korean AI lab, has just launched Motif-2-12.7B-Reasoning, a 12.7B open weights reasoning model that scores 45 on the Artificial Analysis Intelligence Index and is now the leading model from Korea. Key benchmarking takeaways: ➤ Open weights:

1

1

28

Directly comparing a benchmark of Devstral2-123B on my hardware to MiniMax-M2 (230B-A10B) shows the difference in performance MoE can give. At 100 requests concurrently: MiniMax is 2x faster At 2 requests concurrently: MiniMax is 3.5x faster

nice that it's open weight, but comparing dense vs moe models and only looking at total params is pretty unfair, if you look at active params instead of total params it's a different story: - GLM 4.6 (32B): 74% fewer - Minimax M2 (10B): 92% fewer - K2 thinking (32B): 74% fewer -

2

2

13

is there any paper or blog on synthetic data vs knowledge distillation, and whether using both together leads to diminishing returns? my understanding is that none of the open models (except gemma and lfm2) use knowledge distillation in pre training, but most of them do use some kind

Similar to how we use distillation ( https://t.co/KUjmWfHyxC) to create awesome Gemini Flash models that are high quality and very computationally efficient from larger-scale Pro models, Waymo similarly uses distillation from larger models to create computationally efficient

8

2

50

I think that's a very fair point, but also swe-bench is not a great proxy anyway so the whole graph is a bit meh ? (notice the y axis on top) Worth a try is all I would say, really impressive model to my eyes in a practical use, go check for yourself ! https://t.co/HbEJqN6YB0

0

1

8

@eliebakouch @ADarmouni not really! a lot of orgs have only 4xh100 for their entire companies, so this is where something dense shines

0

0

8

i should clarify, no hate on mistral here, i'm really glad they released it as open weights and i'm sure it will be useful for researchers! It's the comparison on total parameters for dense vs moe that i find very misleading but it's not something that they advertise heavily (it's

4

0

47

nice that it's open weight, but comparing dense vs moe models and only looking at total params is pretty unfair, if you look at active params instead of total params it's a different story: - GLM 4.6 (32B): 74% fewer - Minimax M2 (10B): 92% fewer - K2 thinking (32B): 74% fewer -

Introducing the Devstral 2 coding model family. Two sizes, both open source. Also, meet Mistral Vibe, a native CLI, enabling end-to-end automation. 🧵

18

17

289
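
The "% fewer" figures in the post above can be reproduced with a few lines of arithmetic. This is a minimal sketch, assuming the dense baseline is Devstral 2 at 123B parameters (the size quoted in the benchmark post earlier in the feed); the active-parameter counts are the ones given in the post, and the function name `percent_fewer` is made up for illustration.

```python
# Assumption: the dense baseline is Devstral 2 at 123B parameters,
# taken from the "Devstral2-123B" benchmark post earlier in the feed.
DENSE_PARAMS_B = 123

# Active parameters per token (in billions), as quoted in the post.
ACTIVE_PARAMS_B = {
    "GLM 4.6": 32,
    "Minimax M2": 10,
    "K2 thinking": 32,
}


def percent_fewer(active_b, dense_b=DENSE_PARAMS_B):
    """Percent fewer active params than the dense model, rounded to an integer."""
    return round(100 * (1 - active_b / dense_b))


for name, active_b in ACTIVE_PARAMS_B.items():
    print(f"{name} ({active_b}B active): {percent_fewer(active_b)}% fewer")
```

Under that assumption the script gives 74% for the 32B-active models and 92% for the 10B-active one, matching the numbers in the post.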

Hey @EUCouncil why don’t you guys go fine these police officers? Or are you all too busy trying to steal more money from American Companies and shareholders since you all opened an investigation into @Google now as well?

5

6

56

btw i didn't mean at all that glm are benchmaxing here lol, i was thinking that some of the benchmarks here can be saturated with distillation and some not (if they are distilling)

0

0

0

> Today, we’re building an infrastructure-first, deep-tech company with a simple and ambitious mission: "Make frontier-level AI infrastructure open and accessible to everyone." this is very very exciting 🥹

We've been running @radixark for a few months, started by many core developers in SGLang @lmsysorg and its extended ecosystem (slime @slime_framework , AReaL @jxwuyi). I left @xai in August — a place where I built deep emotions and countless beautiful memories. It was the best

0

1

36

interesting how some benchmarks don't seem to get a huge boost between glm-4.6V and the flash version (which is ONLY 9B dense compared to 106B A12B MoE)

GLM-4.6V Series is here🚀 - GLM-4.6V (106B): flagship vision-language model with 128K context - GLM-4.6V-Flash (9B): ultra-fast, lightweight version for local and low-latency workloads First-ever native Function Calling in the GLM vision model family Weights:

8

2

107