Jonathan Ross

@JonathanRoss321

Followers

14,186

Following

99

Media

70

Statuses

513


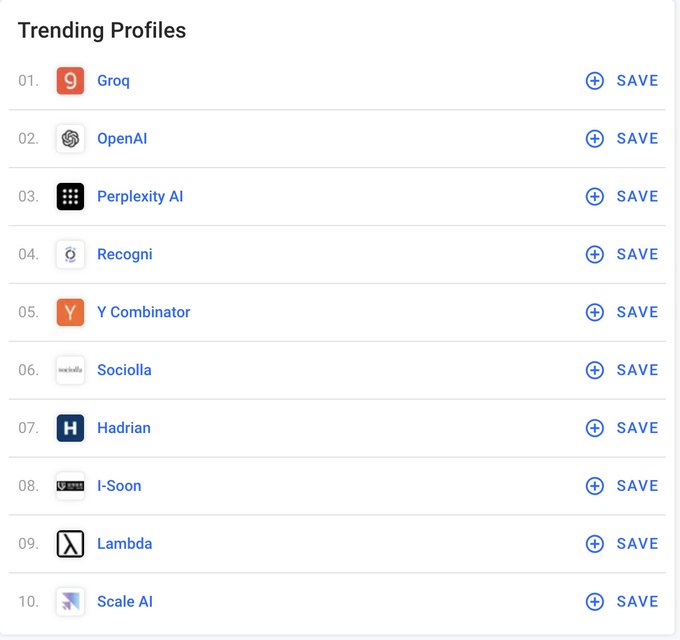

What do

@GroqInc

's LPUs cost? So much curiosity!

We're very comfortable with this pricing and performance - and no, the chips/cards don't cost anywhere near $20,000 😂

#Groqspeed

34

41

398

We heard the demand for increased rate limits! This partnership adds another 21,600 LPUs this year to GroqCloud, with the option to add an additional 108,000 LPUs next year. For comparison, this will 8x the capacity this year vs. what's available on GroqCloud today, which will…

We're excited to partner with

@EWPESG

to build an

#AI

Compute Center for Europe in Norway. This puts us on track to deliver 50% of the world's

#inference

compute capacity via GroqCloud™, running on our AI infrastructure - the LPU™ Inference Engine. Read more:…

3

10

92

12

13

140

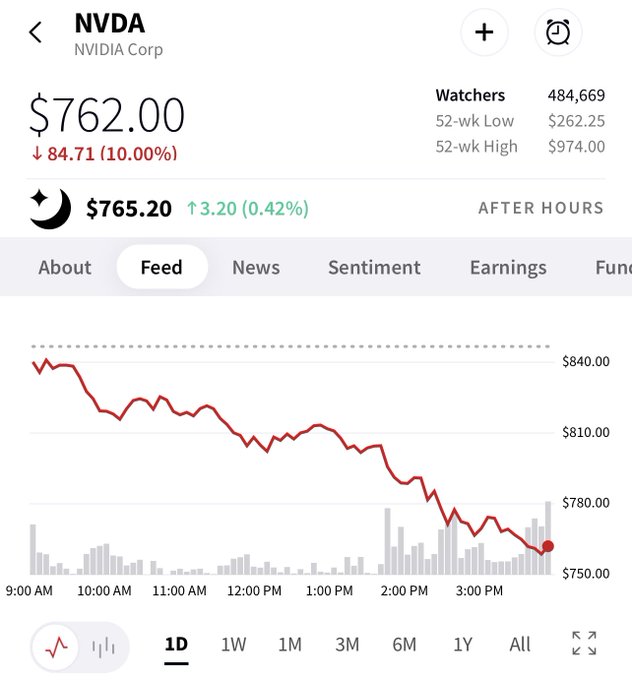

Looking forward to

@nvidia

GTC next week! Apparently there are numerous exciting and significant last minute changes to the event aimed at answering how Nvidia will respond to concerns raised by how popular some inference focused AI chip startup has recently become. 👀

What…

14

6

138

😉

A new partnership between

@aramco

and

@GroqInc

to build GroqCloud inference capabilities together announced at

@LEAPandInnovate

22

39

344

10

12

121

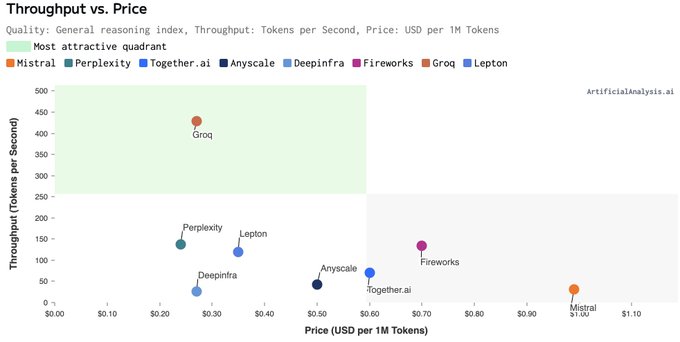

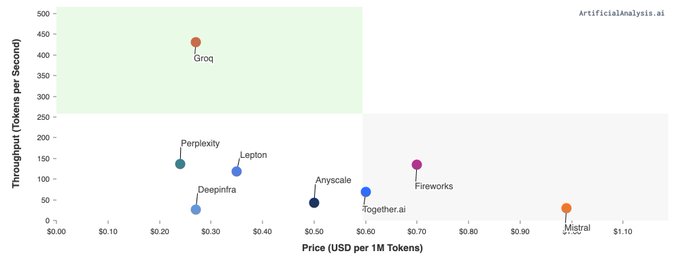

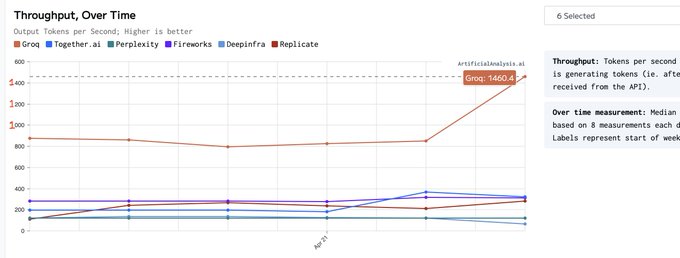

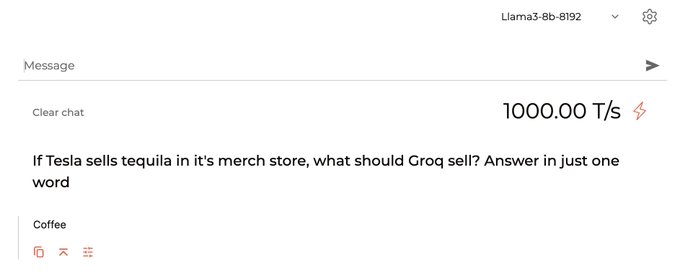

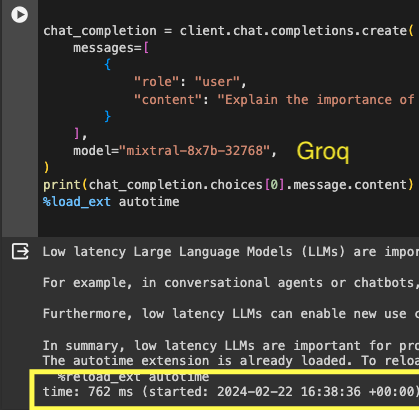

We broke

@ArtificialAnlys

's graph for Llama3 8B.

Sometimes we get a lucky shot on the benchmarks at the high end of our variance, but this is real, we have some we've logged that are even a liiiiiittle bit faster. Who knows, maybe someday this could be our mean perf 🤔

Maybe.

9

9

107

Wow on the press recently, thanks (

@Gizmodo

,

@stratechery

,

@SemiAnalysis_

) for the coverage of

@GroqInc

. 🙏 We're 2 months into providing early access to our LPU™ systems. Since the recent articles were published, we pushed a software update last night that gets more than 2x the throughput…

10

17

106

Thank you for the shoutout of

@GroqInc

on

@theallinpod

!

@friedberg

w.r.t. getting everything right all at once, when asked what our secret sauce is, we say, "No, we have 11 herbs and spices, and it takes every one of them to do what we do."

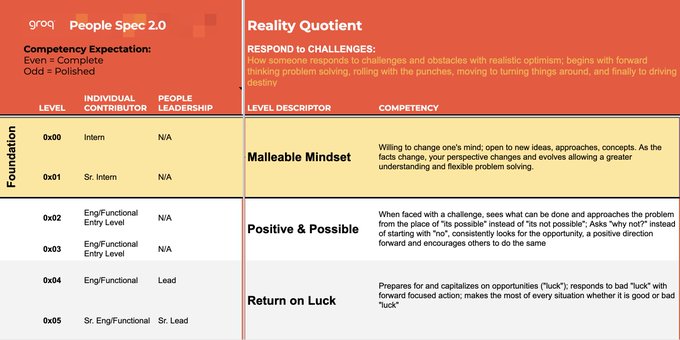

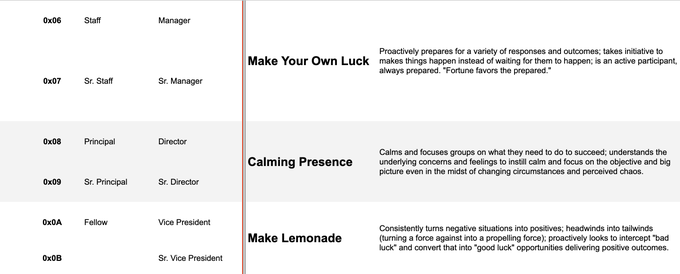

As for luck, here's a small excerpt…

E167: $NVDA smashes earnings (again), $GOOG's AI disaster,

@GroqInc

's LPU breakthrough & more

(0:00) bestie intros: banana boat!

(2:34) nvidia's terminal value and bull/bear cases in the context of the history of the internet

(27:26) groq's big week, training vs. inference,…

152

105

864

7

4

97

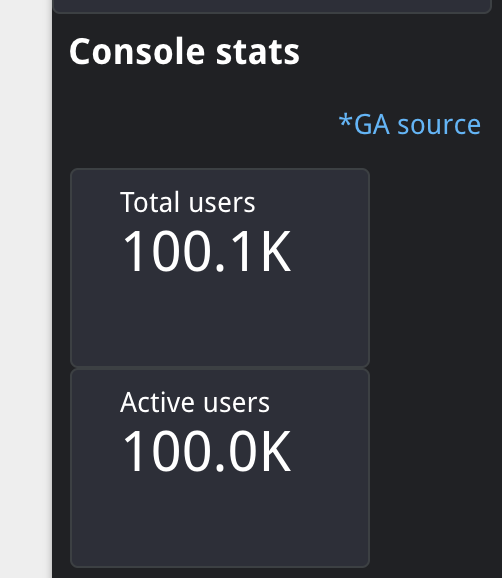

@notfncladvice

You're absolutely right. The reason we got to 100,000 developers >50x faster was we made it almost effortless to build AI applications. No low level C++ code, no CUDA kernels, no need to understand computer architecture.

We also deployed all the HW and automated the dev ops. 😂

3

3

96

Thank you for the shout out

@AndrewYNg

- at

@GroqInc

we're committed both to driving the cost of compute to zero, and the speed of compute to ∞

If you haven't tried ludicrous speed yet, try it at

And if you find yourself in Mountain View, you're invited…

6

11

97

@adamscochran

Nvidia announced up to 50 T/s per GPU using 4-bit numerics with the B200. So 30x faster than what, an Arduino?

- we're still a liiiiiitle bit faster. Just a little 😉

3

6

64

We thought the universe needed to bend a bit more in both space and time. This will do it.

Groq news!

Happy to announce that

@DefinitiveIO

has been acquired by

@GroqInc

so that we can accelerate Groq’s cloud offering. Was lucky to be the seed and A for both of these companies.

@sundeep

will now be GM of the Groq Cloud and work closely with

@JonathanRoss321

to…

76

50

623

5

8

62

@rowancheung

Hey

@rowancheung

, another competitive difference is responsiveness. LLMs run faster on Groq®'s LPU™ chips than any other hardware, so if you want better answers fast let

@elonmusk

know that you want

@xai

to run at

#GroqSpeed

. That, or you can wait, and wait, and wait for them to…

26

25

36

@IanCutress

@nvidia

@groq

I've met Jensen before, and he's had his team updating GTC specifically in response to Groq this week, so not knowing enough about Groq seems unlikely. That said, ***

@GroqInc

runs 70B parameter models faster than

@nvidia

runs 7B parameter models. *** Try it:…

2

9

52

@lulumeservey

Lulu, have you been reading our internal comms plans? 😂

Credit goes to

@lifebypixels

our head of Brand and

@andycunningham4

our fractional CMO, and the whole

@GroqInc

team.

2

0

42

@kami_ayani

@adamscochran

Yes, we do our arithmetic in FP16 and store weights in FP8 now. That said, it's a 70B and 8x7B on that site. We do have a (not released) 180B parameter model running at 200T/s with FP16 multiplies, and the performance of an MoE tends to be similar to…

2

6

40

Cute kid. DM me, we'll get you access, and you can just include him in whatever demo you do, that'll be enough 😂

4

1

34

@Ahmad_Al_Dahle

We credit Llama3 with accelerating it a week faster than we projected.

Thank you

@AIatMeta

🦙🦙🦙

1

0

32

Deepgram knocked it out of the park! Enabling real-time voice to text from 12,000km away. Part of any serious voice solution.

Huge shoutout to Jonathan Ross at

@GroqInc

for this incredible LUI demo on

@CNN

! We're honored to be a part of your stack & we can't wait to see where this astounding technology will go next 🚀

Thanks again

@JonathanRoss321

for the mention 😄

Demo:

2

6

36

2

5

28

@PatrickMoorhead

@AMD

@nvidia

@Signal_65

Looks like Nvidia went big on input tokens and small on output tokens because the MI300X has better memory bandwidth, and will look better than Nvidia on output. I wouldn't call these "typical" settings, typically the output is longer.

#BenchmarkShenanigans

2

2

28

A little over 24 hours left until my talk at

@WorldGovSummit

- first time speaking to a Prime Minister, let alone multiple, let alone from a stage.

As usual,

@GroqInc

has something new and exciting to show. Wish us luck!

😁👍

#GroqSpeed

7

2

28

Thrilled to talk with

@SavIsSavvy

and

@LisaMartinTV

for

#SuperCloud5

. Great conversation about why whiteboards were banned at

@GroqInc

,

#AI

safety, and how

#GenAI

may increase empathy in the world.

Oh, also, why the

#Groq

™ brand is "wow!"

The llama is back! 🦙

#Supercloud5

rock stars.

A curious wardrobe change for

#theCUBE

's Savannah Peterson as Jonathan Ross, CEO of

@GroqInc

, known for its mascot llama, stops by our studios today.

💬 Join the real-time conversations!

#LiveTechNews

…

0

3

12

4

10

25

@lukaszbyjos

@GroqInc

We'll save you the electric bill, and send you some foot warmers instead.

1

0

26

@ramahluwalia

Correction -

@GroqInc

doesn't compete with

@OpenAI

, we uniquely provide a service to affordably run very, very large language models ultra fast. Anyone can run on Groq and get a massive speed boost.

#GroqOn

🚀🚀🚀

4

8

23

@ylecun

@tegmark

@RishiSunak

@vonderleyen

Meta's Open Source models saved Groq

2 years ago we got a panicked call from a CEO deploying the then-largest LLM service; he couldn't get any GPUs. They couldn't share the models with us, so for 2 years... nothing.

Then Llama. Now we have insane demand for LPU chips.

Thank you!

0

8

22

Try

#GroqSpeed

for yourself at .

A question we at Groq used to get before we had LLMs running on our

#LPU

™ Inference Engine was why do

#LLMs

need to run faster than reading speed. No one asks that anymore.

7

4

20

@pmddomingos

We love Nvidia, for training models. For inference, well, we have another offering from

@GroqInc

.

1

4

16

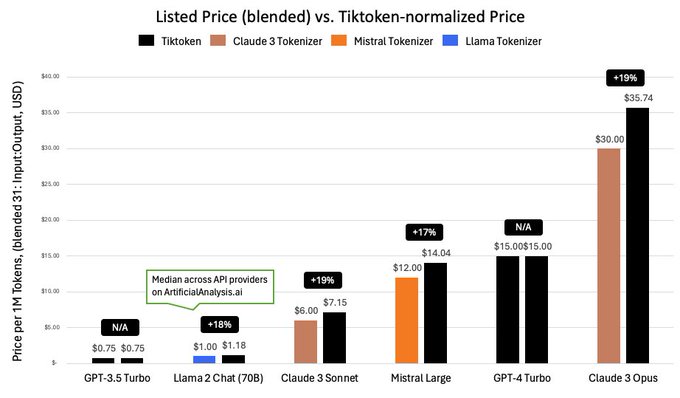

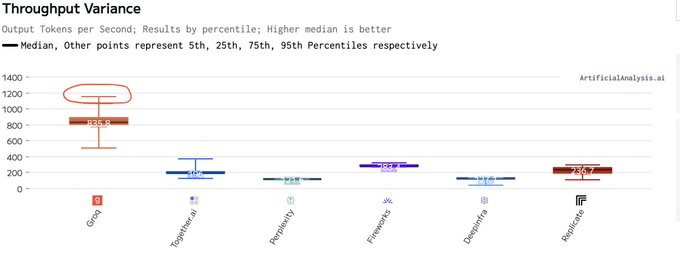

Clever new benchmark from Artificial Analysis.

0

1

19

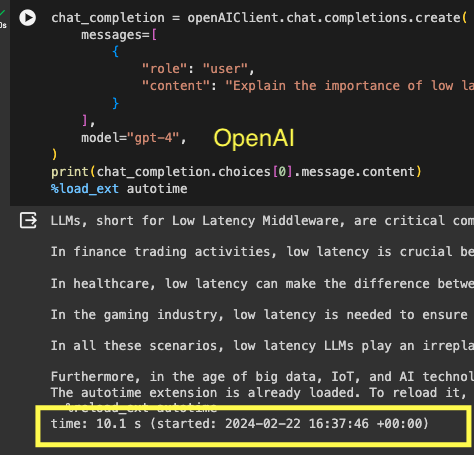

@GroqInc

Thanks for access! The speed is insane!

Note how similar the calls are. Makes it super easy to get going..

3

2

31

0

1

18

@canipeonyou

@nvidia

Nvidia's been talking to a lot of CFOs recently - explaining why they're still economical for inference.

We believe in show rather than tell, so let's count how many LPUs get deployed by the end of 2025. That'll answer that question 😁

0

0

15

We now have definitive proof that fast tokens are worth more than slow tokens.

3

2

14

@ramahluwalia

and I start getting philosophical around timestamp 41:17. What's next after the Turing test? What is insight and can AI have it? Can an AI experience a Eureka! moment? What is sentience, and are we even sentient?

4

3

11

@samlakig

@satnam6502

Your math seems to be accurate :)

The weights for Mixtral are smaller, the KV cache is a little larger (32K), but overall, close.

The reason we're so low power is that we keep the model in the on-chip memory (SRAM). HBM requires almost 10x the energy per token at this latency.

2

1

9

@ramahluwalia

Hey

@satyanadella

,

Have you considered

@GroqInc

's LPU™ Inference Engine? ;)

#GroqSpeed

1

4

9

We love the work

@lmsysorg

is doing! Paged Attention, Chatbot Arena, LMSYS-Chat-1M. Very cool. I'm really impressed with their performance on

#llama2

70B in the video.

Of course, LPU vs. GPU, I think we all know how this video is going to end.

#GroqSpeed

2

1

10

@tomjaguarpaw

@Russell_AGI

@GroqInc

We have plenty of capacity:

As for our next-gen chip (in addition to those 1 million LPUs), we're the lead customer for Samsung's 4nm plant in Taylor, Texas, which is what Tom Ellis shared.

We're going very, very high volume 😁

2

1

10

@samlakig

@satnam6502

We measure in joules per token. Under full load we're expecting it to get down to less than 3J / Token

1

0

9
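
For readers who want to put the joules-per-token figure above into more familiar units, here is a minimal sketch of the conversion. It is plain unit arithmetic, not a Groq tool or measurement: the function names are hypothetical, and the 100 tokens/second throughput in the usage note is an illustrative assumption, not a number from the thread.

```python
# Unit conversions for an energy-per-token figure.
# Only the 3 J/token value comes from the tweet above (as an expected upper bound);
# everything else here is illustrative.

JOULES_PER_KWH = 3_600_000  # 1 kWh = 3.6e6 J by definition


def kwh_per_million_tokens(joules_per_token: float) -> float:
    """Energy in kWh needed to generate one million tokens."""
    return joules_per_token * 1_000_000 / JOULES_PER_KWH


def watts_at_throughput(joules_per_token: float, tokens_per_second: float) -> float:
    """Average power draw in watts: (J/token) * (tokens/s) = J/s = W."""
    return joules_per_token * tokens_per_second


if __name__ == "__main__":
    # 3 J/token corresponds to roughly 0.83 kWh per million tokens.
    print(round(kwh_per_million_tokens(3.0), 3))
```

As a usage example, `watts_at_throughput(3.0, 100.0)` gives 300 W for a hypothetical aggregate throughput of 100 tokens per second; the real figure depends on the actual throughput under full load, which the thread does not state.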

Love the wow factor!

#GroqSpeed

We love when

#Groqsters

show people our demo out in the wild. This was last night at Schiphol airport and it's really fun to see the reaction of end-users to

#groqspeed

. Try it yourself over at and reach out for API access requests.

0

2

11

0

2

8

@sharongoldman

@GroqInc

@mattshumer_

@nvidia

Thank you

@sharongoldman

. I asked the LLM to summarize, but it said the content was best read in full 😉 That said, trying anyway:

#LPU

== Insanely Fast Inference

6

1

7

@NaveenGRao

@chamath

Thanks! We're huge fans of what you're doing over at Mosaic/Databricks :)

0

0

7

@GroqInc

's LPU™ chips run LLMs at crazy speeds, e.g. 70B parameter models at 300 tokens / second. How fast do you think we can run a 7B parameter model like

@MistralAI

's 7B model? Watch below to find out

👇 😂

2

1

7

@TonyHawkersCRC

-- We built our own chips - that's how we're faster. The video you saw was Meta's 70B parameter model running on our LPU™ Inference Engine, rather than on GPUs.

2

3

5

@JoshMiller656

@IntuitMachine

It was the only picture of a GPU we could find that hadn't been photoshopped to look more regular than it is 😉

1

0

6

Hey

@SamA

, glad to see you back at

@OpenAI

.

As you've said, technology magnifies differences, and the difference here👇 is clear.

#BetterOnGroq

When the dust settles, let's build something together.

#GroqOn

1

3

7