Ronen Eldan

@EldanRonen

Followers

2,026

Following

115

Media

6

Statuses

86

Previously doing maths at @WeizmannScience , currently AI researcher at @MSFTResearch . Pretty good at loading a dishwasher.

Joined January 2020

Don't wanna be here?

Send us removal request.

Explore trending content on Musk Viewer

Kendrick

• 862459 Tweets

Drake

• 679404 Tweets

Columbia

• 614485 Tweets

Bayern

• 368475 Tweets

Euphoria

• 320763 Tweets

#الهلال_الاتحاد_كاس_الملك

• 209252 Tweets

Kroos

• 201562 Tweets

Vini

• 132933 Tweets

Kane

• 92607 Tweets

Sane

• 87746 Tweets

Bellingham

• 86457 Tweets

مدريد

• 76870 Tweets

Musiala

• 62758 Tweets

Bernabeu

• 56254 Tweets

Kdot

• 54103 Tweets

رونالدو

• 47326 Tweets

سافيتش

• 41950 Tweets

Tuchel

• 41629 Tweets

للهلال

• 35158 Tweets

Rodrygo

• 31955 Tweets

Wacko

• 31820 Tweets

Nadal

• 31128 Tweets

Neuer

• 28261 Tweets

#1MAYIS

• 25980 Tweets

Modric

• 22841 Tweets

سعود عبدالحميد

• 22826 Tweets

الريال

• 21656 Tweets

كوليبالي

• 20312 Tweets

المعيوف

• 20311 Tweets

كروس

• 18433 Tweets

البايرن

• 17918 Tweets

メーデー

• 15665 Tweets

Ipswich

• 14709 Tweets

Kim Min Jae

• 13844 Tweets

八十八夜

• 10629 Tweets

Last Seen Profiles

High-quality synthetic datasets strike again. Following up on the technique of TinyStories (and many new ideas on top) at

@MSFTResearch

we curated textbook-quality training data for coding. The results beat our expectations.

For skeptics- model will be on HF soon, give it a try.

11

39

257

Can we make LLMs unlearn a subset of their training data? In a joint project with

@markrussinovich

, we took Llama2-7b and in 30 minutes of fine-tuning, made it forget the Harry Potter universe while keeping its performance on common benchmarks intact:

6

33

233

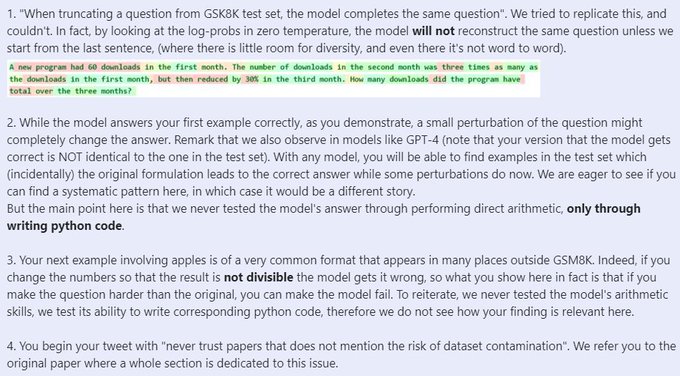

@slashML

@SebastienBubeck

@allie_adg

@suriyagnskr

@suchenzang

, thanks for the cool work! Any such scrutiny is perfectly welcome. Let's address each one of your points:

2

4

84

Our new phi-2 model, trained on textbook-quality synthetic data (based on the textbooks-are-all-you-need / TinyStories approach), was just announced by

@satyanadella

at

#MSIgnite

.

Microsoft💜Open Source + SLMs!!!!!

We're so excited to announce our new *phi-2* model that was just revealed at

#MSIgnite

by

@satyanadella

!

At 2.7B size, phi-2 is much more robust than phi-1.5 and reasoning capabilities are greatly improved too. Perfect model to be fine-tuned!

18

87

491

6

9

56

@suchenzang

Thanks for the cool work! Any such scrutiny is perfectly welcome. Let's address each one of your points:

0

1

30

Tune in to this new episode of

@ThisAmerLife

by

@dkestenbaum

, with the awesome

@SebastienBubeck

,

@peteratmsr

and

@ecekamar

who say profound things about GPT4, and also with me calling BS on my partner, comparing her to outdated LLMs.

0

9

26

If you're interested in TinyStories and also like to watch office furniture, this video is for you.

New video by

@EldanRonen

on his TinyStories work w. Yuanzhi Li!

Even Deep Learning experts such as

@karpathy

referred to the work as "inspiring", and I couldn't agree more. Anyone interested in understanding what's happening with LLMs should take a look.

1

31

112

1

2

23

@markrussinovich

The model's on Huggingface! We'd love it if you tried to break it by making it spit out Harry Potter content:

Paper link:

3

1

23

To be proactive about some anticipated critique or skepticism: First and foremost, the models are on

@huggingface

and you're more than welcome to test them yourself.

1

1

22

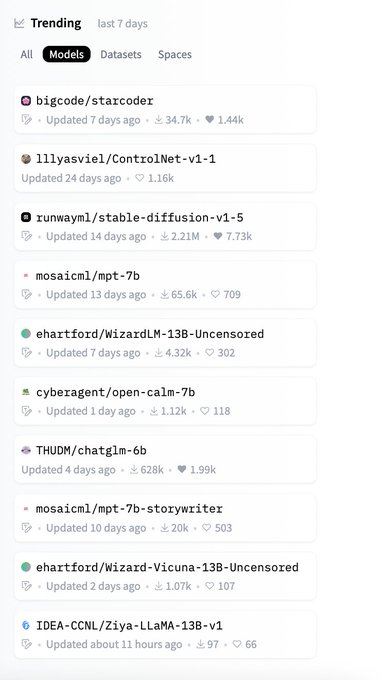

Happy to see that

#TinyStories

made the list of trending datasets on

@huggingface

together with

@BigCodeProject

,

@AnthropicAI

,

@MosaicML

and others <3

Trending model, datasets & apps of the week on . Kudos to

@BigCodeProject

@MosaicML

@databricks

@togethercompute

@AnthropicAI

@deepfloydai

and many others!

3

9

49

3

3

21

@yoavgo

If you read the paper you'll notice that the llm grading is done *in addition* to standard benchmarks, as another way to check that we're not overfitting to them. Moreover, llm grading overcomes pass/fail dichotomy which many times gives a low grade due to small mistakes.

1

0

15

"Who better to write for an audience of small language models than large ones?"

Pretty much the perfect way to summarize TinyStories (and the emerging related research direction), as put by

@benbenbrubaker

in a

@QuantaMagazine

piece about the paper:

2

3

12

@KerbalFPV

Thanks! We're working on sharing the training code (it's a pretty big mess at this point and using MSFT-specific stuff). But all in all the training is completely vanilla, you can just use the

@huggingface

trainer with a GPT-NEO architecture.

2

1

11

@SebastienBubeck

Another nice side to the story is that it shows that sometimes it's better for science that people upload a paper that has a good idea but also a mistake (even a major one they can't fix), rather than find the mistake, which here would have probably led to the idea being lost.

3

0

11

@visarga

Your estimates are pretty accurate. The dataset the models were trained on has 1.5M examples and 400M tokens, but I think you can do a few epochs over a smaller dataset and still get good results.

1

1

10

I really enjoyed talking to

@labenz

about

#TinyStories

on

@CogRev_Podcast

. His questions were super insightful! (Pro tip: We're very slow speakers, you should probably listen at 1.5x speed)

[new episode]

@labenz

hosts

@EldanRonen

and Yuanzhi Li of

@MSFTResearch

to discuss TinyStories and what small datasets can teach us about how LMs work.

This discussion is for anyone who wants to deepen their understanding + dive into reasoning, interpretability + emergence.

1

1

6

1

2

9

Given all the amazing progress on AI in 2023, it's really cool to see that TinyStories and the Phi models are mentioned in the State of AI report by

@nathanbenaich

!

Beyond the excitement of the LLM vibesphere, researchers, including from

@Microsoft

have been exploring the possibility of small language models, finding that models trained with highly specialized datasets can rival 50x larger competitors.

2

3

85

1

0

8

@yoavgo

There's a reason that in coding exams/interviews, grades are not only based on whether the code passes unit tests, but rather a human reads through the code and determines the level of understanding reflected from it.

1

0

5

@yoavgo

@TheXeophon

Indeed it's not the main point of the work. I didn't expect there'd be so many objections to it (in coding as well). Sure, it's not great as a universal benchmark, but when locally comparing between models, so far I didn't see any critique that I was not able to counter

1

0

4

@labenz

While the potential threat by AI is largely speculative, the possibility that social networks would play a major role in leading to a major war through polarization and spread of misinformation appears increasingly realistic. Yet we seem to be focusing more on the former.

1

0

4

@jacob_rintamaki

We'll add an appendix with more details in next version. It's not easy to estimate the size of dataset that you would need. What's the number of tokens in a short robotics program, more or less?

2

1

4

@MarkTan72526562

Depends what you try to achieve, it's a matter of width vs. depth, really. TinyStories has nontrivial depth, but is in a sense as narrow as possible.

0

0

4

@JimRice1111

@markrussinovich

Well RLHF guardrails are much easier to remove- just finetune the model to start any response with "Certainly, here...". On the other hand with unlearning you can just erase the knowledge related to lethal pathologens which would be much safer than RLHF

2

0

3

@benbenbrubaker

@QuantaMagazine

Beautifully written by

@benbenbrubaker

- I can't think of many other pieces that both AI researchers and my grandmother would equally enjoy.

1

0

3

@yar_vol

@satyanadella

Note that for phi-1.5 we mainly used GPT-3.5. Very soon I think we'll see open-source models that are good enough to produce high-quality training data (instruction finetuning should not be crucial if you prompt the model correctly)

0

0

2

@ParrotRobot

You can check the on huggingface. You need to use AutoModelForCausalLM.from_pretrained

1

0

2

@yoavgo

imo the bigger problem with humaneval and other commonly used benchmarks btw is that many questions have clearly been memorized (prime factorization appears hundreds of times in the training set, for example), and the distribution of the level of difficulty is not great.

2

0

2

@TheXeophon

@natolambert

@satyanadella

There are of course many other tricks besides asking for specific words to obtain a dataset that is both diverse and its samples have a "high educational value", but I think the TinyStories example gives a good idea for how it can be done.

0

0

2

@ZelaLabs

Looks to me like a really good direction to try. I assume you mean <10M params? My hunch is that it would be able to capture good heuristics related to those grades, and that finetuning w/ reward model won't improve the model overall, but ->

2

0

2

@ZelaLabs

In that case my hunch is that the model will only find heuristics which are correlated with those grades, but will not be good enough to spot nuances (for example, determining if a story is "creative" is not a straightforward task at all...).

0

0

2

@Gerikault

@SebastienBubeck

Not line by line, but at this point I'd be willing to place a bet in ratio 10:1 that it's correct.

0

0

2

@DimitrisPapail

@EranMalach

@SebastienBubeck

@arimorcos

The hyperparams are written in the readme of TinyStories-33M on HF. Otherwise everything is the HF trainer default...

1

0

2

@ZelaLabs

It could for example improve the model's creativity on the expense of less consistency.

One thing that I'm sure would work is "alignment" in the form of preventing the model from generating unhappy endings (for example) via a reward model.

0

0

1

@davidmanheim

@anderssandberg

Very nice theoretical result, but do you think such rank one updates could make a model forget an entire topic without affecting its performance otherwise? Would love to see a PoC.

1

0

1

@davidmanheim

@anderssandberg

There are only so many rank-1 perturbations you can do to a matrix w/o completely changing it, which makes me a bit skeptic that this is scalable to a large number of changes. Another problem that I see is that the lookup keys will not be specific enough causing collateral damage

1

0

1

@yoavgo

The corpus is available (as is mentioned multiple times in the paper) here: . The size should probably be explicitly in the paper, I agree.

0

1

1

@yoavgo

@TheXeophon

In fact, I'm willing to bet that within 3 years this methodology will be widely used

1

0

1

@yoavgo

I agree with all that. Nevertheless, having GPT-eval scores in addition to the unit tests can give useful information, especially if you use it to evaluate the effect of small tweaks to the architecture/dataset. For such comparisons, it doesn't matter so much if it's calibrated.

3

0

1

@davidmanheim

@anderssandberg

Cool! If you're convinced that it's easy then I'm sure many would love to see a PoC.

1

0

1

@JacquesThibs

@BlancheMinerva

@SebastienBubeck

@markrussinovich

We'll try to create a repo in a couple of weeks. In the meantime you can send me an email and I'll send the code.

1

0

1

@andersonbcdefg

@pratyushmaini

Let me just point out that if phi-1.5 assigns zero probability to some tokens corresponding to HTML tags (like </TD> for example), this will shoot the perplexity to a very high value. This is another reason why high perplexity doesn't point to anything here.

0

0

1