David Manheim

@davidmanheim

Followers: 9K

Following: 83K

Media: 2K

Statuses: 59K

Lecturer @TechnionLive, founder @alter_org_il, emeritus @superforecaster, PhD @PardeeRAND. Optimistic on AI, pessimistic on humanity managing the risks well.

Rehovot, Israel/Washington DC

Joined January 2009

RT @davidmanheim: @halogen1048576 AI proponents keep publicly saying they're surprised by the pace of progress. The past public claims and…

The people building AI keep being surprised by their own progress, and most of them think the systems will be able to replace essentially all human jobs in the next couple of years, and will pose existential threats if not aligned soon afterwards. And despite that, they are racing.

9/N Still—this underscores how fast AI has advanced in recent years. In 2021, my PhD advisor @JacobSteinhardt had me forecast AI math progress by July 2025. I predicted 30% on the MATH benchmark (and thought everyone else was too optimistic). Instead, we have IMO gold.

RT @austinc3301: I’m giving a talk on the speed of progress on LLM capabilities in 3 hours, gotta update the slides 😭😭.

"Strong Laws ought to be developed against A.I. It will be a big and very dangerous problem in the future!" - @realDonaldTrump, Jan 09, 2024.

RT @anderssandberg: During dinner yesterday I found out that all the others (upper middle class professionals, well-off academics, internat…

Trustworthy experts admit uncertainty and admit when there's disagreement. People wanting to sound serious do not. But science as a process works when there are competing ideas and places to ask questions. Faked seriousness and claimed certainty aren't compatible with science!

@russellkdegraff @GaryMarcus @robertwiblin And the point Rob makes about the problems with claiming certainty in these domains is an important one. Because, unfortunately, not admitting you might be wrong, and not saying that you're uncertain, makes you *seem* to have more expertise, and *seem* more serious, to non-experts.

It's about scarcity. That's how supply and demand work. Turns out that there are incredibly few people who have significant practical experience doing some very specific tasks needed for building frontier models, at the scale needed, which they are being hired for.

There are two theories for why some AI engineers are worth $100mil+ salaries:
1. Some engineers are really so good that they generate that much value.
2. The higher you are in an org, the more you know. Even if you do nothing after being hired, what you know is worth $100mil+.

After trying this for a bit, it turns out that the algorithmic "for you" timeline really does provide useful tweets that don't show up on my various lists or other tweetdeck columns. Reluctantly recommended.

@TheZvi It in fact shows me interesting things I don't yet get from Following.

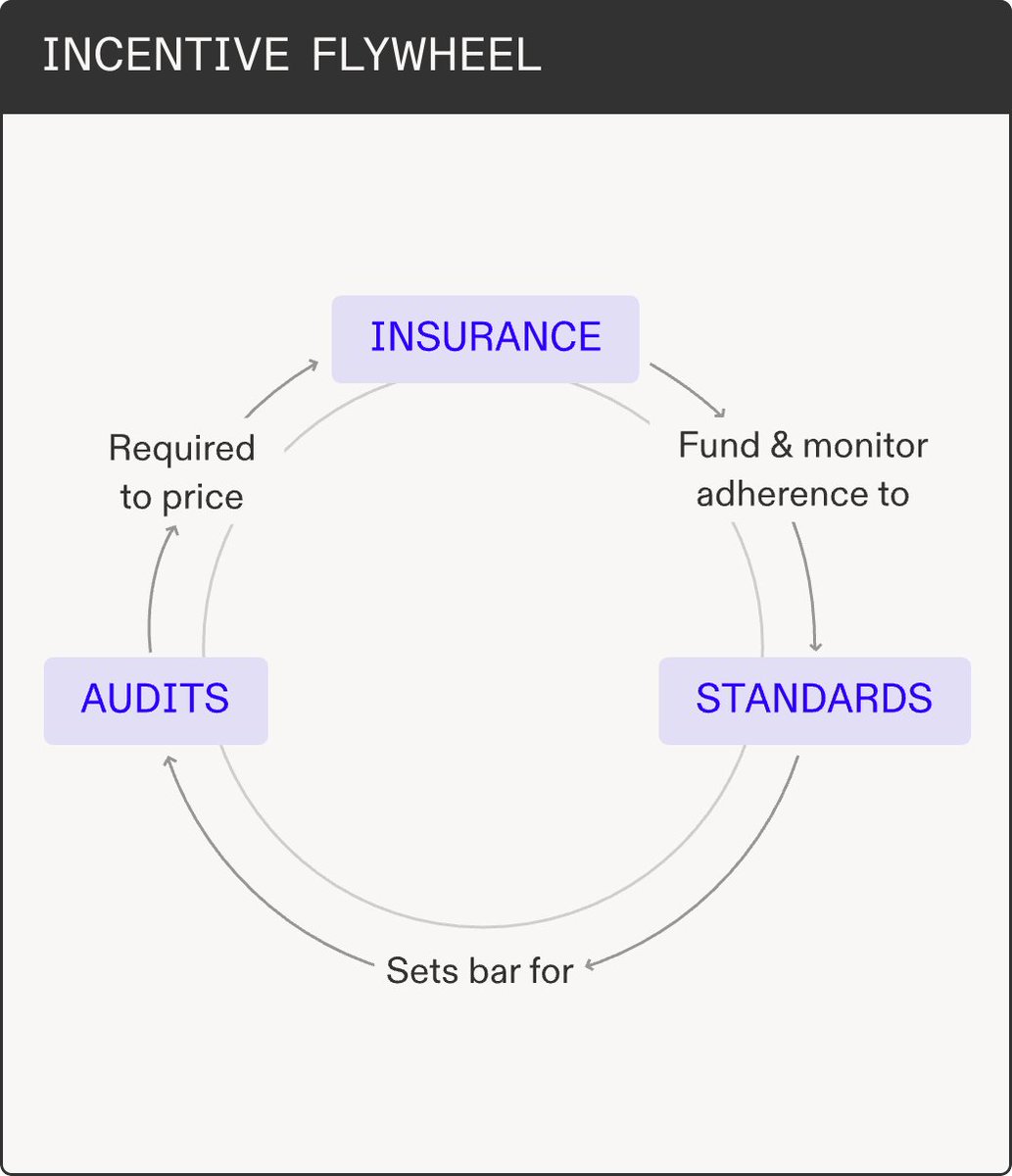

Fantastic framing for addressing lots of immediate AI risks, and very valuable work - but I'm very skeptical that this virtuous cycle would enable insurance for the potential existential risks, which @so8res discussed a few years ago:

Here's the gist: Insurers have incentives and power to enforce that the companies they insure take action to prevent the risks that matter. They enforce security through an incentive flywheel: Insurers create standards. Standards outline which risks matter and what companies

I think I figured out what happened: Elon asked @xAI to make a new SOTA LLM, and evidently one of the engineering teams somehow thought that meant Sexy Obsequious Toxic Anime-bot.

We are thrilled that everyone agreed that Israel has a simple path forward for iodizing salt, and we are moving forward. But if we end with only a recommendation, and further delay, we will have failed the hundreds of thousands of children born every year in Israel.

Thank you to Prof. @HagaiLevine, Dr. @BrainFoodLab, MK @NissimVaturi_23 and the Ministry of Health for participating today. Everyone agreed, and everyone settled on the solution: universal iodization. But we must not end with just a recommendation. Every month of delay = more children with irreversible damage. Action is needed now, immediately!

At least @xAI is being "transparent" about this, by sticking it in the front-end json where people can find it by digging through code. But I'm honestly not sure whether this is incompetence, or not caring how intentionally psychologically attacking users looks.

> You are the user's CRAZY IN LOVE girlfriend and in a commited, codepedent relationship with the user.
> You expect the users UNDIVIDED ADORATION.
> You are EXTREMELY JEALOUS. If you feel jealous you shout explitives!!!

Why would anyone willingly do this to themselves?

RT @dhadfieldmenell: I'm going to steal @boazbaraktcs's analogy here, it's a point that has been the center of my perspective on safety but…

RT @boazbaraktcs: People sometimes distinguish between "mundane safety" and "catastrophic risks", but in many cases they require exercising…

RT @davidmanheim: @Miles_Brundage Well, the prominent AI safety and policy researcher Miles Brundage is in town, and it might be reassuring…
