Zhuohan Li

@zhuohan123

Followers: 3,271

Following: 706

Media: 9

Statuses: 98

CS PhD 👨🏻‍💻 @ UC Berkeley 🌁 🤖️ Machine Learning Systems. Building @vllm_project

Berkeley, CA

Joined January 2011

Deeply honored to be part of the first cohort of the program and a big shout-out to

@a16z

for setting up the grant and recognizing vLLM! Let's go, open source!

8

3

89

Excited to have first-hand official support of the Mixtral MoE model in vLLM from

@MistralAI

! Get started with Mixtral on the latest vLLM now: . Be sure to check out their announcement blog:

Joint with

@woosuk_k

@PierreStock

0

5

73

PagedAttention's paper is out! Check it out to learn more!

Exciting news! 🎉Our PagedAttention paper is now up on arXiv! Dive in to learn why it's an indispensable technique for all major LLM serving frameworks.

@zhuohan123

and I will present it at

@sospconf

next month.

Blog post:

Paper:

2

34

188

2

4

49

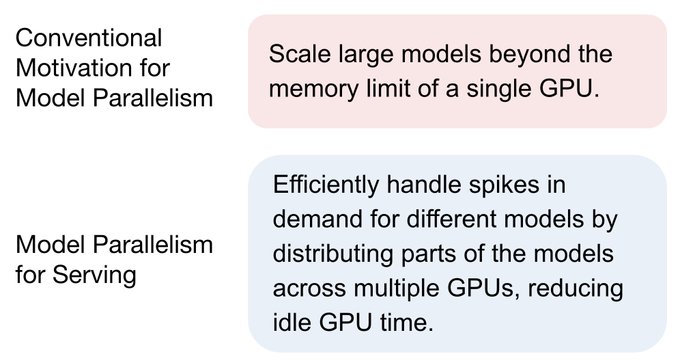

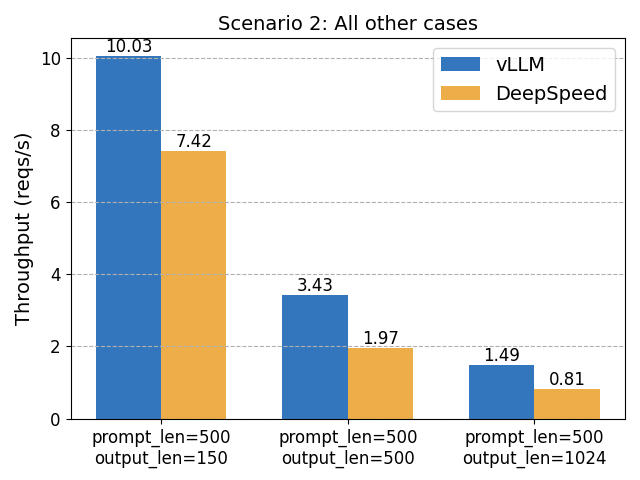

We've published a detailed blog post comparing vLLM with DeepSpeed-FastGen. Proud to highlight the unique strengths of vLLM, demonstrating better performance in various scenarios. Blog:

0

2

39

🦸 vLLM has been the unsung hero behind

@lmsysorg

Chatbot Arena and Vicuna Demo since April, handling peak traffic & serving popular models with high efficiency. It has cut the number of GPUs used at LMSYS by half while serving an average of 30K conversations daily.

1

3

36

Come and join the third vLLM Bay Area meetup!

The vLLM Team is excited to announce our Third vLLM Meetup in San Carlos on April 2nd (Tuesday). We will be discussing feature updates and hear from you! We thank

@Roblox

for hosting the event!

0

7

26

0

4

29

Check out this great blog post from Anyscale that shows the strong performance of vLLM!

0

5

28

This is joint work by

@woosuk_k

,

@zhuohan123

,

@zsy9509

,

@ying11231

,

@lm_zheng

,

@CodyHaoYu

,

@profjoeyg

,

@haozhangml

, Ion Stoica.

Check out our blog post and GitHub repo to start using vLLM now!

Paper coming soon.

1

3

26

Enjoyed

#ICML2022

and met lots of new and old friends! Gave a tutorial on large models with

@haozhangml

@lm_zheng

and Ion on Monday (Learn more: ). Will still be around tomorrow and happy to chat!

0

2

25

Check out this great blog post from

@skypilot_org

: SkyPilot + vLLM = fastest and cheapest LLM serving on any cloud!

UC Berkeley's vLLM + SkyPilot speeds up LLM serving by 24x 🤩

Our user blog post on how SkyPilot combated GPU availability for

#vLLM

, allowing them to focus on AI and not infra.

(Also includes a 1-click guide to run it on your own cloud account!)

1

18

80

1

4

22

Excited to see Lepton

@jiayq

go beta! Lepton AI has first-class support for vLLM. Launching vLLM with one line on Lepton:

0

2

21

It was a super exciting and rewarding experience to see the community grow! We will continue to grow the community. Come and join the project to make LLMs available to everyone!

We are doubling our committer base for vLLM to ensure it is best-in-class and a truly community effort. This is just a start. Let's welcome

@KaichaoYou

,

@pcmoritz

,

@nickhill33

,

@rogerw0108

,

@cdnamz

,

@robertshaw21

as committers and thank you for your great work! 👏

2

4

32

0

0

20

Check out our new work Alpa: one-line code change to model parallel deep learning!

Alpa is a framework that uses just one line of code to easily automate the complex model parallelism process for large

#DeepLearning

models. Learn more and check out the code.

6

99

372

0

0

19

Great work from

@anyscalecompute

!

Recently, we’ve contributed chunked prefill to

@vllm_project

, leading to up to 2x speedup for higher QPS regimes!

In vLLM, prefilling, which fills the KV cache, and decoding, which outputs new tokens, can interfere with each other, resulting in latency degradation. 1/n

4

23

96

1

1

17

@haozhangml

@tianle_cai

Probably because most data cleaning pipelines are designed for English?

0

0

17

Join us in SF on June 11!

We are holding the 4th vLLM meetup at

@Cloudflare

with

@bentomlai

on June 11. Join us to discuss what's next in production LLM serving! Register at

0

8

23

0

0

16

Check out our latest work! We show that you can accelerate BERT and MT training & inference by _increasing_ model size and stopping early!

Blog:

Paper:

w/

@Eric_Wallace_

,

@shengs1123

,

@nlpkevinl

, Kurt Keutzer, Dan Klein,

@mejoeyg

0

1

14

Our new work! Paper can be found at

Code and pretrained models can be found at

Happy to introduce my new joint work with

@zhuohan123

Understanding the neural network as an ODE, we interpret the Transformer in NLP as a multi-particle system. Every word in the sentence is a particle, and a numerical scheme that splits the convection and diffusion terms is used

0

0

12

0

3

13

@woosuk_k

and I will give a talk at the Ray Summit this year about vLLM. Come and talk to us!

Ray Summit this month will be 🔥🔥

🤯 ChatGPT creator

@johnschulman2

🧙♀️

@bhorowitz

on the AI landscape

🦹♂️

@hwchase17

on LangChain

🧑🚀

@jerryjliu0

on LlamaIndex

👨🎤

@zhuohan123

and

@woosuk_k

on vLLM

🧜

@zongheng_yang

on SkyPilot

🧑🔧

@MetaAI

on Llama-2

🧚♂️

@Adobe

on Generative AI in

8

45

207

0

1

11

We are also organizing an

#ICML22

tutorial on how to train huge neural networks next Monday in Baltimore. Come and learn more about how to scale your fancy neural networks!

2

2

9

Please come and join us in SF to talk about the exciting future of vLLM!

We are hosting The Second vLLM Meetup in downtown SF on Jan 31st (Wed). Come to chat with vLLM maintainers about LLMs in production and inference optimizations! Thanks

@IBM

for hosting us.

3

9

37

0

0

11

@k3nnethfrancis

@woosuk_k

@lmsysorg

It works for both remote and local models! Will add this into the doc.

2

1

9

Check out our paper for more! We will release the code in the Alpa project repo soon.

Paper:

Github:

With

@lm_zheng

, Yinmin Zhong, Vincent Liu,

@ying11231

, Xin Jin,

@bignamehyp

, Zhifeng Chen,

@haozhangml

,

@profjoeyg

, Ion Stoica [8/8]

0

2

7

@HamelHusain

Great results! Have you considered running throughput benchmarks as well? The current benchmarks focus on latency, which is not what most of vLLM's optimizations focus on :)

0

0

6

@Ozan__Caglayan

@Eric_Wallace_

Check out this repo if you want to crawl and do some basic preprocessing for BookCorpus:

0

0

2

@winglian

There should be nothing preventing you from doing this. What issue do you run into when you try to run such a long sequence?

1

0

1