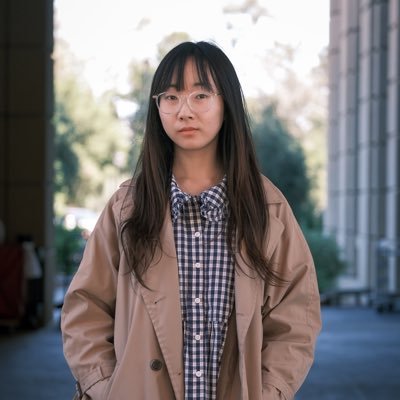

Ying Sheng (@ying11231)

@lmsysorg @sgl_project | Prev. @xAI @Stanford | Assist. Prof @UCLA (delayed) | Do it anyway | Live to fight another day

Joined April 2021 · 12K followers · 2K following · 863 posts · 6 media

For inference, you can send email to the same contact as RL for now: rl_team@lmsys.org

I learned the most from working with cool people in opensource community!

🎯SGLang RL Team is growing! We’re building a state-of-the-art RL ecosystem for LLMs and VLMs — fast, open, and production-ready. If you’re excited about RLHF, scalable training systems, and shaping how future LLMs are aligned, now is the right time to join. We are looking for

We’re actively looking for strong candidates 💕

Hiring! SGLang RL (Inference is also open all the time)

We just got ~100× faster GAE by borrowing ideas from chunked linear attention and turning GAE into a chunked scan problem. Code: https://t.co/FGjrNMF1Ma Detailed write-up (Chinese):
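
A minimal pure-Python sketch of the chunked-scan idea follows. It is not the linked code and says nothing about the reported speedup. It assumes a single trajectory with no episode-termination (`done`) masks, and `values` carries one extra bootstrap entry; the function names `gae_reference` and `gae_chunked` are hypothetical. GAE is the reverse linear recurrence A_t = δ_t + γλ·A_{t+1}, so splitting the sequence into chunks lets each chunk's local scan and final fix-up run independently, leaving only one carry per chunk to propagate sequentially.

```python
from typing import List, Sequence


def gae_reference(rewards: Sequence[float], values: Sequence[float],
                  gamma: float, lam: float) -> List[float]:
    """Plain sequential GAE. Assumes len(values) == len(rewards) + 1 and no done masks."""
    adv = [0.0] * len(rewards)
    last = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        last = delta + gamma * lam * last
        adv[t] = last
    return adv


def gae_chunked(rewards: Sequence[float], values: Sequence[float],
                gamma: float, lam: float, chunk: int = 4) -> List[float]:
    """Same result as gae_reference, computed as a chunked reverse scan (hypothetical sketch)."""
    T = len(rewards)
    c = gamma * lam
    deltas = [rewards[t] + gamma * values[t + 1] - values[t] for t in range(T)]
    starts = list(range(0, T, chunk))

    # Pass 1: local reverse scan inside each chunk, assuming zero advantage
    # after the chunk. Chunks are independent, so this could run in parallel.
    local = [0.0] * T
    for s in starts:
        e = min(s + chunk, T)
        acc = 0.0
        for t in reversed(range(s, e)):
            acc = deltas[t] + c * acc
            local[t] = acc

    # Pass 2: sequential carry, one value per chunk.
    # carry[i] is the true advantage at the first index after chunk i (0 at T).
    carry = [0.0] * len(starts)
    nxt = 0.0
    for i in reversed(range(len(starts))):
        s, e = starts[i], min(starts[i] + chunk, T)
        carry[i] = nxt
        nxt = local[s] + c ** (e - s) * nxt

    # Pass 3: fix up each position with the decayed carry (parallel across chunks).
    adv = [0.0] * T
    for i, s in enumerate(starts):
        e = min(s + chunk, T)
        for t in range(s, e):
            adv[t] = local[t] + c ** (e - t) * carry[i]
    return adv
```

In a real implementation the per-chunk passes would be vectorized on the accelerator; the Python loops here only make the data dependencies visible.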

Probably the first open-source RL framework that targets GB300 optimizations!

🚀 Introducing Miles — an enterprise-facing RL framework for large-scale MoE training & production, forked from slime. Slime is a lightweight, customizable RL framework that already powers real post-training pipelines and large MoE runs. Miles builds on slime but focuses on new

🔥We are excited to partner with the @intel Neural Compressor team to bring AutoRound low-bit quantization (INT2 to INT8, MXFP4, NVFP4, mixed bits) directly into SGLang’s high-performance inference runtime. With this collaboration, developers can: 1. Quantize LLMs and VLMs with

Excited to announce our deployment guide with @UnslothAI 🦥⚡ Unsloth gives you 2x faster fine-tuning. SGLang gives you efficient production serving. Together = complete LLM workflow: ✅ Fast training with Unsloth ✅ FP8 conversion & quantization ✅ Production deployment

Awesome to team up with @UnslothAI ! This guide shows how to run LLMs locally with SGLang, including GGUF serving, FP8 acceleration, and production-ready deployment. A solid resource for anyone building efficient inference pipelines 👇

We made a guide on how to deploy LLMs locally with SGLang! In collab with @lmsysorg, you'll learn to: • Deploy fine-tuned LLMs for large scale production • Serve GGUFs locally • Benchmark inference speed • Use on the fly FP8 for 1.6x inference Guide: https://t.co/hxNZikSeLS

We’re excited to team up with @lmsysorg to co-host an Open Inference Night Happy Hour at NeurIPS 2025 in San Diego 🎉 Join us for an evening of good drinks and even better conversations with researchers, engineers, and founders across the AI community. Come for: - high‑signal

🔗 Miles Repo: https://t.co/4VOVxMpYr3 📝 Blog: https://t.co/NEIVeK16bN A journey of a thousand Miles begins with a single rollout. 😉

lmsys.org

A journey of a thousand miles is made one small step at a time. We're excited to introduce Miles, an enterprise...

Miles is the RL framework we want to push for enterprise use. This story is just beginning. Lightweight, customizable, flexible, scalable, as always. ☺️

Great write-up from the Modal team! SGLang is proud to collaborate on reducing host overhead and improving inference efficiency. Every bit counts when keeping the GPU busy 😀

Never block the GPU! In a new @modal blogpost, we walk through a major class of inefficiency in AI inference: host overhead. We include three cases where we worked with @sgl_project to cut host overhead and prevent GPU stalls. Every microsecond counts. https://t.co/ZeumrZpSKE

Introducing Grok 4.1, a frontier model that sets a new standard for conversational intelligence, emotional understanding, and real-world helpfulness. Grok 4.1 is available for free on https://t.co/AnXpIEOPEb, https://t.co/53pltyq3a4 and our mobile apps. https://t.co/Cdmv5CqSrb

x.ai

Grok 4.1 is now available to all users on grok.com, 𝕏, and the iOS and Android apps. It is rolling out immediately in Auto mode and can be selected explicitly as “Grok 4.1” in the model picker.

We introduce speculative decoding into the RL sampling process, achieving a significant improvement in sampling speed at appropriate batch sizes. Compared with freezing the draft model, the accepted length stays high, producing stable, positive long-term gains.
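
To make "accepted length" concrete, here is a minimal sketch of greedy (temperature-0) speculative-decoding verification. It is not SGLang's implementation and does not cover how the draft model is maintained during RL. It assumes the target model has already produced its greedy token at every draft position plus one extra position; `verify_greedy` and `mean_accept_length` are hypothetical names. RL rollouts usually sample at temperature > 0, which calls for rejection-sampling-based verification rather than exact matching.

```python
from typing import List, Sequence, Tuple


def verify_greedy(draft: Sequence[int], target_argmax: Sequence[int]) -> List[int]:
    """Return the tokens emitted in one verification step.

    target_argmax[i] is the target model's greedy token given the prefix plus
    draft[:i], so it has len(draft) + 1 entries (assumption of this sketch).
    """
    if len(target_argmax) != len(draft) + 1:
        raise ValueError("target_argmax must have len(draft) + 1 entries")
    accepted: List[int] = []
    # Accept the longest prefix of the draft that matches the target's choices.
    for d, t in zip(draft, target_argmax):
        if d != t:
            break
        accepted.append(d)
    # The target always contributes one token: the correction at the first
    # mismatch, or a bonus token when every draft token was accepted.
    accepted.append(target_argmax[len(accepted)])
    return accepted


def mean_accept_length(steps: Sequence[Tuple[Sequence[int], Sequence[int]]]) -> float:
    """Average tokens emitted per verification step (counts the target's extra token)."""
    lengths = [len(verify_greedy(d, t)) for d, t in steps]
    return sum(lengths) / len(lengths)
```

Conventions differ on whether the target's extra token is counted in the accepted length; this sketch counts it.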

Honored to see SGLang in @GitHub's Octoverse 2025 report on fastest-growing open source projects. 2,541% contributor growth reflects our community's shared vision for better LLM infrastructure. But metrics aside — what matters is shipping: diffusion support, performance

🚀 SGLang 2025 Q4 Roadmap is here! From full-feature reliability → next-gen kernel optimizations (GB300/MI350/BW FP8) → PD disaggregation, spec decoding 2.0, MoE/EP/CP refactors, HiCache, multimodal & diffusion upgrades, RL-framework integration, and day-0 support for all major
