Yanai Elazar @ICLR

@yanaiela

Followers

3,105

Following

1,141

Media

145

Statuses

1,610

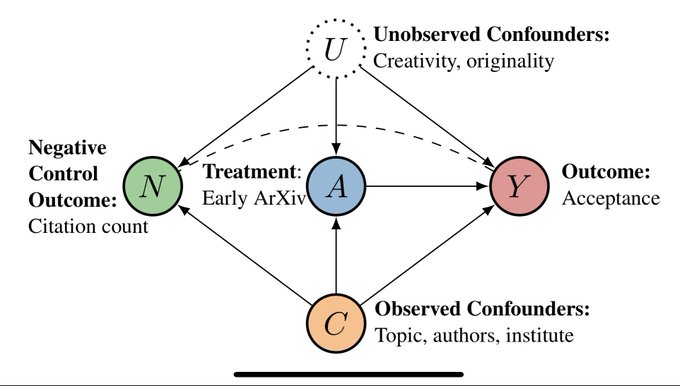

New *very exciting* paper

We causally estimate the effect of simple data statistics (e.g. co-occurrences) on model predictions.

Joint work w/

@KassnerNora

,

@ravfogel

,

@amir_feder

,

@Lasha1608

,

@mariusmosbach

,

@boknilev

, Hinrich Schütze, and

@yoavgo

4

52

325

I'm... graduating!

Last week (the pic): goodbye party from the amazing

@biunlp

lab and the one and only

@yoavgo

Now: In the airport, on my way to Seattle for my postdoc at AI2 in the

@ai2_allennlp

team and UW, where I'll be working with

@nlpnoah

@HannaHajishirzi

and

@sameer_

25

4

222

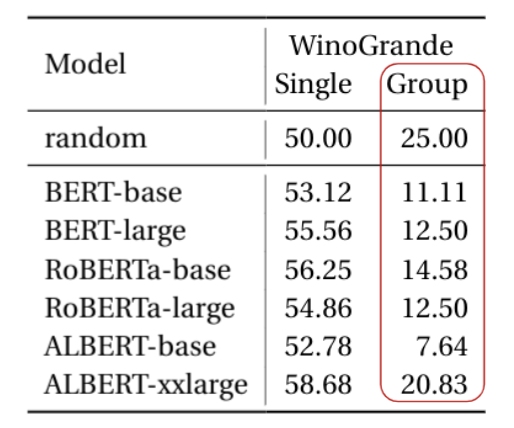

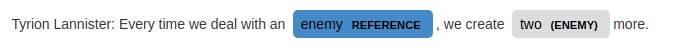

Advancements in Commonsense Reasoning and Language Models make the Winograd Schema look solved.

After revisiting the experimental setup and evaluation, we show that models perform randomly!

Our latest work at EMNLP, w/

@hongming110

,

@yoavgo

,

@DanRothNLP

5

54

218

5 years ago: "This cool paper didn't publish any code, but I'll quickly implement and try it out!"

Today: "WHAT? the code, model or dataset are not on

@huggingface

? moving on, nothing to see here..."

2

7

215

@BlancheMinerva

Here's a few I like for different reasons (not exhaustive):

Careful perturbations:

Cool ML modeling:

Data collection:

2

31

171

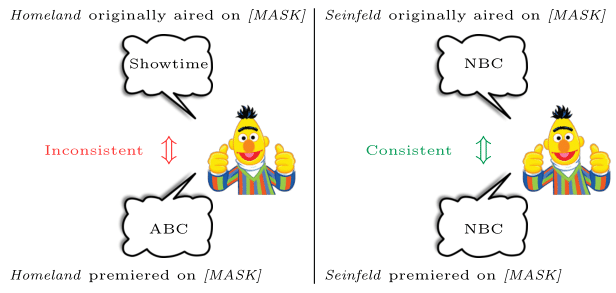

Are our Language Models consistent? Apparently not!

Our new paper quantifies that:

w/

@KassnerNora

,

@ravfogel

,

@Lasha1608

, Ed Hovy,

@HinrichSchuetze

, and

@yoavgo

3

32

140

Super excited to receive the Google PhD Fellowship!

Thank you for the support.

I'm extremely grateful to my advisor,

@yoavgo

from whom I learn tremendously and who supports me every step of the way.

And of course, the

@biunlp

which is an amazing lab to be part of.

11

4

119

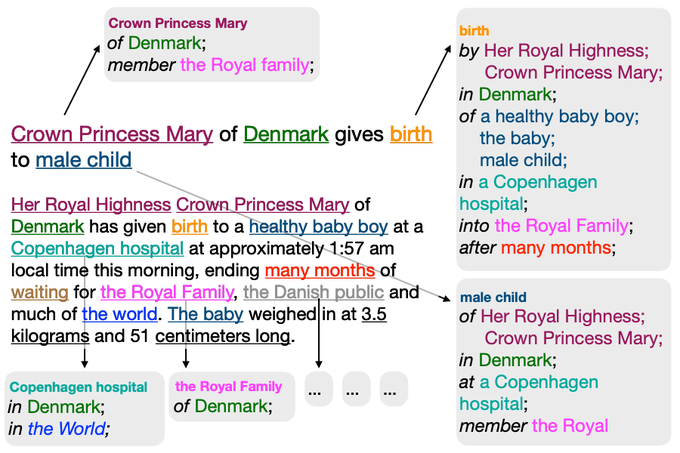

We present:

Text-based NP Enrichment (TNE)

A new dataset and baselines for a very new, exciting, and challenging task, which aims to recover preposition-mediated relations between NPs.

Joint work w/ Victoria Basmov,

@yoavgo

,

@rtsarfaty

2

20

116

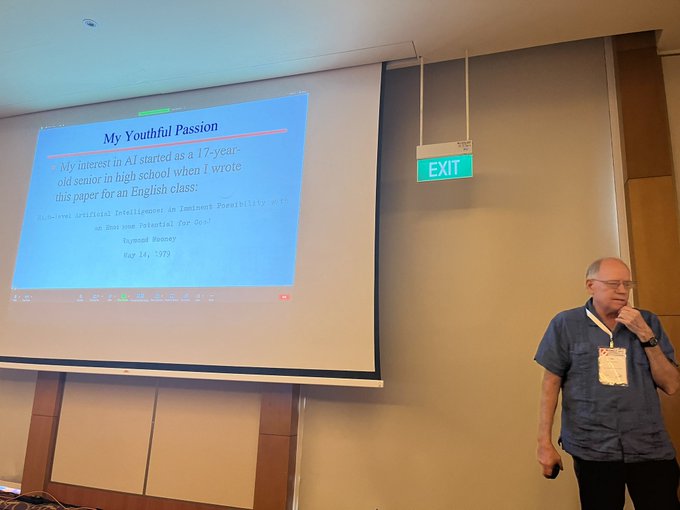

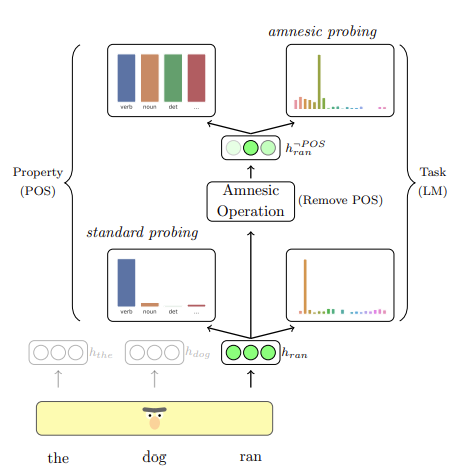

Super excited to have our paper accepted to TACL!

"Amnesic Probing: Behavioral Explanation with Amnesic Counterfactuals"

We propose a new method for testing what features are being used by models

Joint work with

@ravfogel

,

@alon_jacovi

and

@yoavgo

3

18

96

I don't really understand this claim. Sure, if you work at one of 5 companies that actually have the compute to run these huge models, you can just go larger. But even then, can you actually use these huge models in production? Are the improved results actually worth the money?

9

3

92

Our consistency paper got accepted to TACL!

Updated paper at:

Code:

Thanks again to my awesome collaborators

@KassnerNora

,

@ravfogel

,

@Lasha1608

, Ed Hovy,

@HinrichSchuetze

and

@yoavgo

See you at Punta Cana?

3

16

80

The Big Picture workshop @ EMNLP23 is just one week away, and we (

@AllysonEttinger

,

@KassnerNora

,

@seb_ruder

,

@nlpnoah

) have an incredible program awaiting you!

3

8

78

The

@ai2_allennlp

team is recruiting research interns for next summer, come work with us!

Apply by October 15

Let me know if you have any questions

0

14

68

Interviewing is hard, and many considerations are often obfuscated and hidden from interviewees.

In this blog post, I share some insights into what's happening behind the scenes and what sitting on the other side of the table (or screen) looks like.

The interview process can often feel shrouded in mystery. Today on the AI2 Blog,

@ai2_allennlp

Young Investigator

@yanaiela

shares his experiences from both sides of the table:

0

5

36

1

7

68

This is a reminder that the advisor and lab environment are way more important than some random school ranking.

(Not that I have anything bad to say about CMU)

CMU SCS has done it again! SCS is ranked

#1

in AI graduate programs in the USA for 2023 by US News. Kudos and Congratulations to all the departments that make up AI within SCS!

Special shoutout to the LTI. Yes Shameless plug here :)

0

9

103

3

3

63

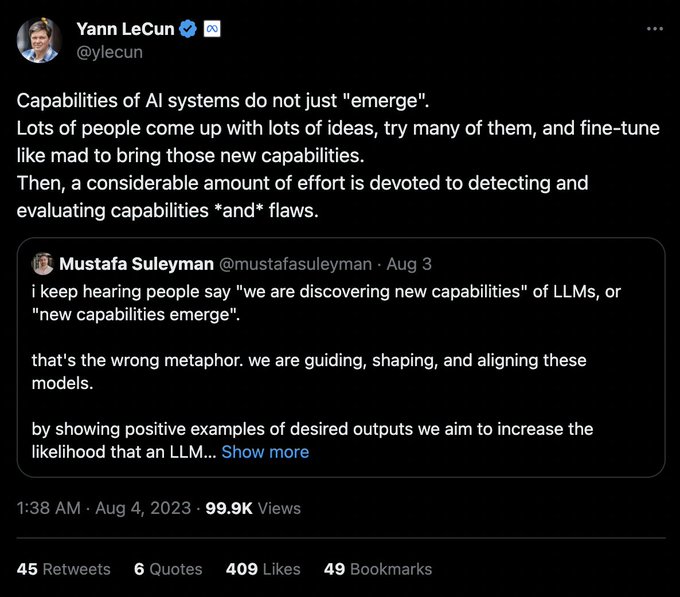

When word2vec came out and was used for a variety of tasks, and people found it had some interesting properties, nobody had explicitly trained it for those tasks/properties. Same with BERT and other models.

It's just what you do in science.

"Emergent abilities" is just rebranding.

1

3

63

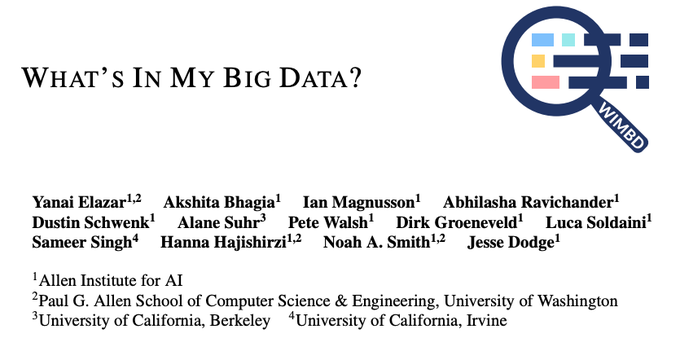

Language Models are nothing without their training data. But the data is large, mysterious, and opaque, which requires selection, filtering, cleaning, and mixing. Check out our survey paper (led by the incredible

@AlbalakAlon

) that describes the best (open) practices in the field.

1

8

62

How are different linguistic concepts *used* by models? Probing isn't the tool for answering these questions.

We propose a new method for answering this question: Amnesic Probing.

Joint work with

@ravfogel

@alon_jacovi

and

@yoavgo

1

16

62

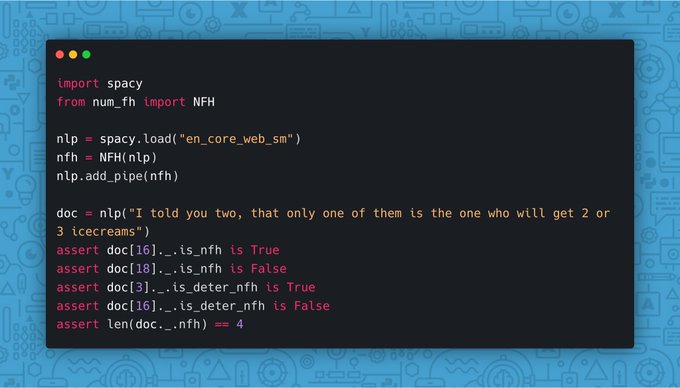

After a lot of work, I'm very happy to see this project integrated in the best library for

#NLPRoc

1

12

61

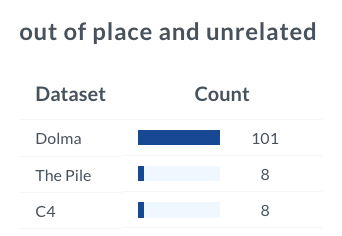

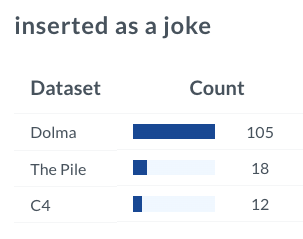

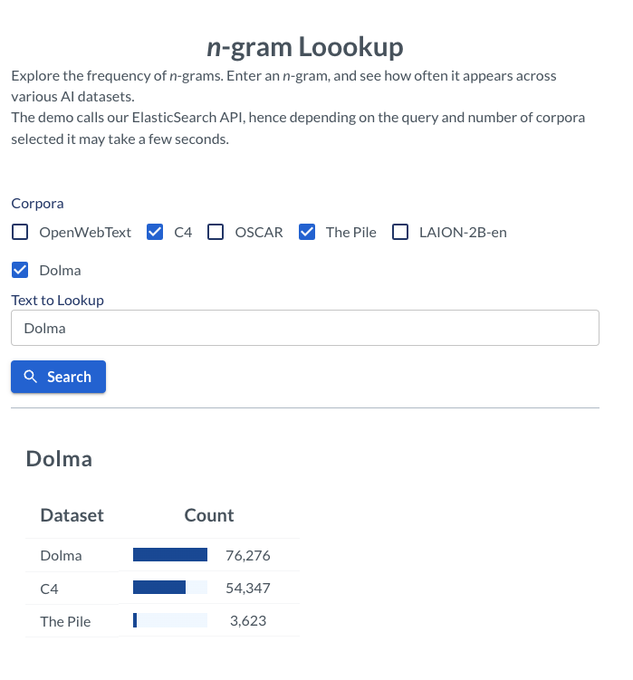

Or some composition of the data?

I couldn't find the exact phrase as is, in some of the open-source datasets we have indexed, but parts of it definitely appear on the internet

2

2

57

using adversarial training for removing sensitive features from text? do not trust its results! new

#emnlp2018

paper with

@yoavgo

now in arxiv

1

20

55

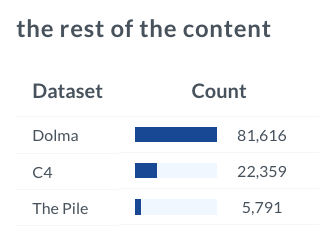

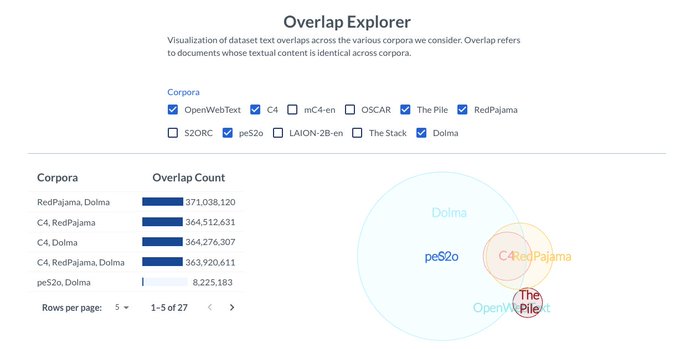

Excited to share that Dolma can now also be found on WIMBD

You can get programmatic access to data (through the ElasticSearch index), as well as the other analyses through the demo

2

6

54

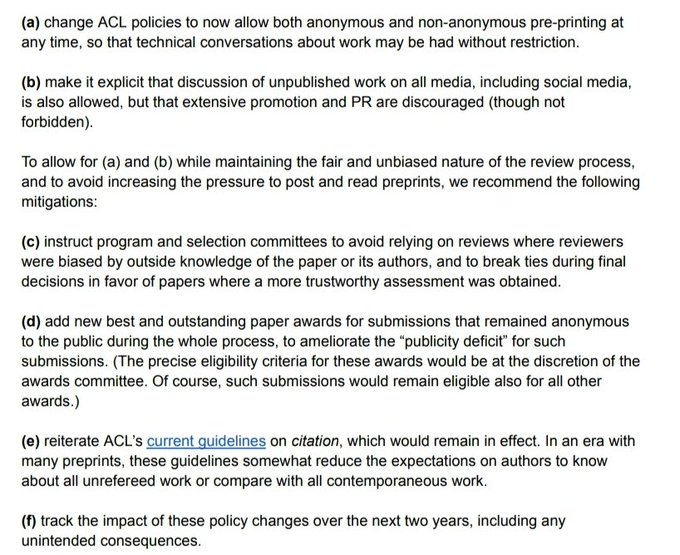

These discussions on the anonymity period and what the "community" wants or who it affects are full of hand-waving.

We empirically study this question in a paper that (funnily enough) could have been discussed at EMNLP if the anonymity period didn't exist

2

5

53

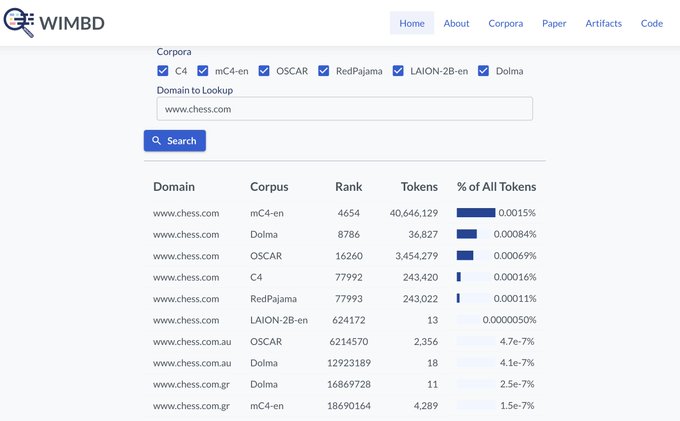

Even C4, an "old" dataset has almost 250K tokens from . The English version of mC4 has over 40M!!

This and more, with WIMBD -

2

5

50

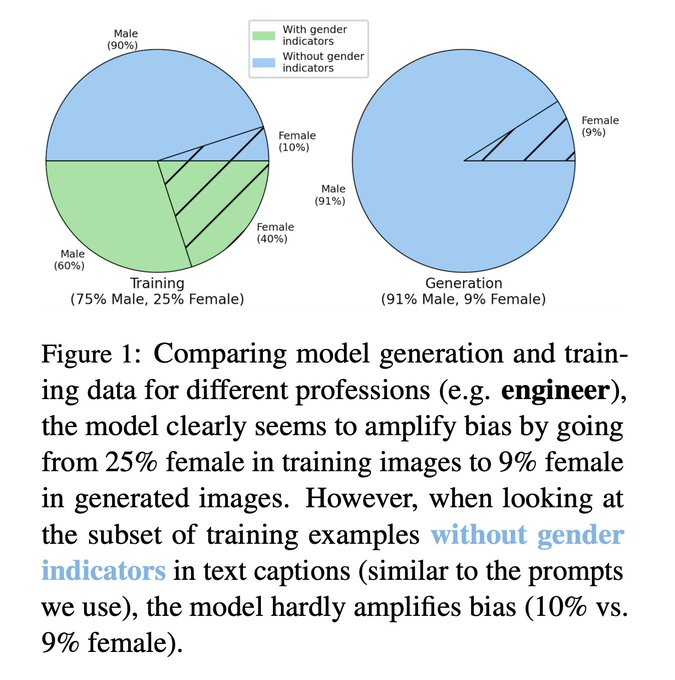

🚨New paper🚨

"The Bias Amplification Paradox in Text-to-Image Generation"

We find that while a naive investigation of Stable Diffusion leads to conclusions of bias amplification, it's confounded by various factors.

Work led by

@Preethi__S_

, w/

@sameer_

1

7

50

TNE was accepted to TACL!

In keeping with the 3 years it took to finish this project, we also went through all the TACL steps (from C to B to A).

You can also download it from 🤗's datasets now:

And now, a meta thread:

1

5

47

It was a delight to host

@lena_voita

today in our very first

@NLPwithFriends

seminar. Thank you for the interesting talk, especially made for all the friends.

And thank you all for attending! See you next week with

@RTomMcCoy

.

1

0

41

Today at

#ACL2021

basically all sessions are oral, so there's no reason to be in Gather, and tomorrow all poster sessions (20 tracks!) are in the same dense 2-hour session.

WHYY??

#lesszoom

#moregather

3

1

40

Overwhelmed with organizing your

#acl2020nlp

schedule?

So am I! But I have a strategy.

Read about it at -

0

9

38

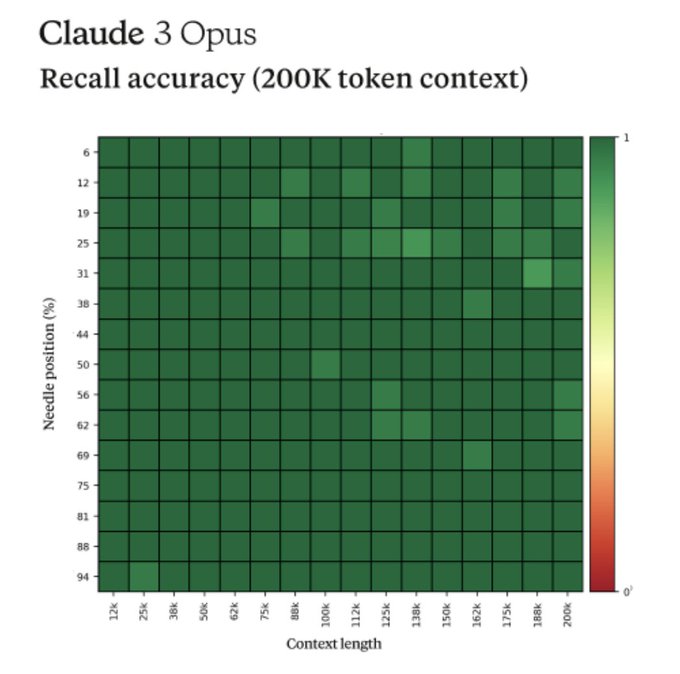

Data contamination is one of the biggest challenges for evaluating [small|medium|large|huge] language models.

Submit your work that tackles these challenges to our workshop (@ ACL24).

0

4

39

Join us at NLP with Friends (

@NLPwithFriends

) for an online seminar, where students present their recent work.

Really excited to host this new project with the awesome

@Lasha1608

@esalesk

@ZeerakW

🤗

Hello

#NLProc

twitter! We are NLP with Friends, a friendly online NLP seminar series where students present ongoing work.

Join us to meet our amazing speakers, learn about cool research, and collectively think about what’s next:

See you soon! ❤️

5

128

404

0

14

39

Giving a talk at

@EdinburghNLP

tomorrow!

Come to hear about what makes it to text corpora these days and how it's affecting model behavior.

1

0

37

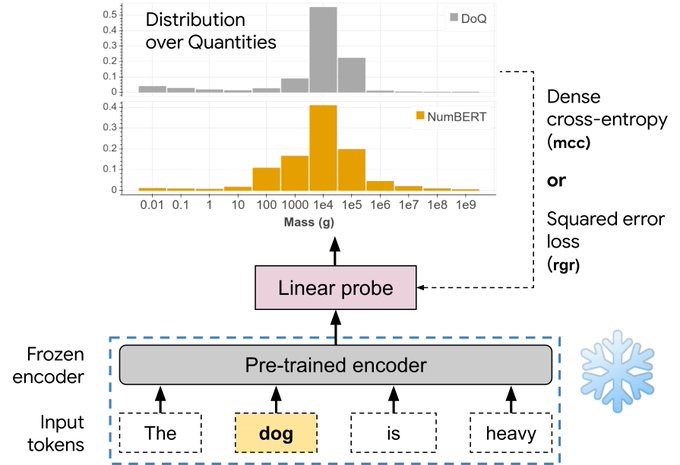

Do Language Embeddings Capture Scales?

Well, they sort of do, but we're not there yet.

New paper at findings of

#emnlp2020

(to be presented at

#BlackboxNLP

) by

@xikun_zhang_

, Deepak Ramachandran,

@iftenney

, myself and

@dannydanr

2

12

36

New paper:

Back to Square One: Bias Detection, Training and Commonsense Disentanglement in the Winograd Schema

Joint work with

@hongming110

,

@yoavgo

and

@dannydanr

2

9

36

@OwainEvans_UK

@megamor2

Cool!

You might be interested in this paper where we studied similar issues:

1

0

36

This is fantastic news!!

Somewhat of a coincidence, but our paper studying the effect of early arXiving on acceptance, which suggests that this effect is small and that the anonymity period does not fulfill its purpose, was accepted to CLeaR (Causal Learning and Reasoning) 2024

0

2

34

Unsatisfied with adversarial training? With the difficulties of training it and making it work in practice?

We've got your back!

INLP: Iterative Nullspace Projection, a data-driven algorithm for removing attributes from representations.

the paper -

Our paper on controlled removal of information from neural representations has been accepted to

#acl2020nlp

🙂 A joint work with

@yanaiela

,

@hila_gonen

, Michael Twiton and

@yoavgo

(1/n)

5

25

125

1

7

31

We made our workshop open!

What does it mean?

We publish all our material, including

- the proposal

- the emails we drafted for reviewers/invited speakers/etc.,

- tasks (which we update in real time!)

- and more

Check it out:

3

6

32

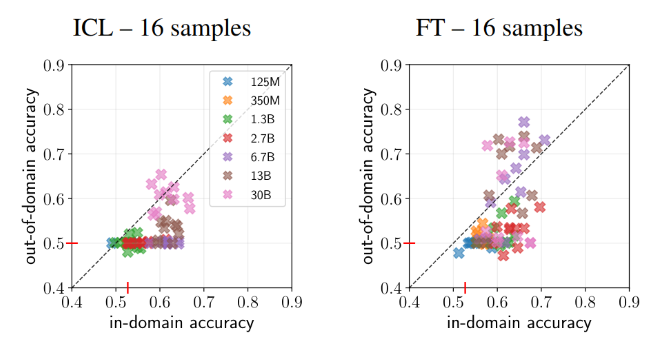

What do you know, when you make comparable experiments, ICL and fine-tuning (FT) aren't that different!

We find that in- and out-of-domain few-shot learning works well with FT, if not better than ICL, at scale.

Check out our recent work, led by

@mariusmosbach

the great.

In our

#ACL2023NLP

paper, we provide a fair comparison of LM task adaptation via in-context learning and fine-tuning. We find that fine-tuned models generalize better than previously thought and that robust task adaptation remains a challenge! 🧵 1/N

3

18

87

1

4

32

Remember BERT? RoBERTa? They were once the best models we had.

And while it seemed they weren't great at generalization, we found that simply training them for longer, or using their larger versions, dramatically increases their generalization on OOD (while no diff for in-domain)

New paper! ✨

Everyone knows that when increasing size, language models acquire the skills to solve new tasks. But what if I tell you they secretly might also change the way they solve pre-acquired tasks?🤯

#emnlp2022

with

@yoavgo

&

@yanaiela

2

27

105

0

5

31