Dan Roth

@DanRothNLP

Followers

2K

Following

7

Media

0

Statuses

46

Chief AI Scientist, Oracle, and the Eduardo D. Glandt Distinguished Professor, CIS, University of Pennsylvania. Former VP/Distinguished Scientist, AWS AI Labs.

Philadelphia, PA

Joined May 2010

Social Impact Award: "AccessEval: Benchmarking Disability Bias in Large Language Models" by Srikant Panda, Amit Agarwal, and Hitesh Laxmichand Patel https://t.co/tcT69fbM42 10/n

aclanthology.org

Srikant Panda, Amit Agarwal, Hitesh Laxmichand Patel. Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing. 2025.

📷 New #EMNLP2025 Findings survey paper! “Conflicts in Texts: Data, Implications, and Challenges” Paper: https://t.co/y9l472CyTk Conflicts are everywhere in NLP — news articles reflecting different perspectives or opposing views, annotators who disagree, LLMs that hallucinate

✨Yesterday we released MoNaCo, an @allen_ai benchmark of 1,315 hard human-written questions that, on average, require 43.3 documents per question!✨ The three aforementioned questions were actually some of the easier ones in MoNaCo 😉 (8/) https://t.co/Ad9FrWiwtn

LLMs power research, decision‑making, and exploration—but most benchmarks don’t test how well they stitch together evidence across dozens (or hundreds) of sources. Meet MoNaCo, our new eval for question-answering cross‑source reasoning. 👇

MoNaCo evaluates complex question-answering with: 📚 1,315 multi‑step queries 🔎 Retrieval, filtering & aggregation across text and tables 🌟 Avg 43.3 distinct documents per query

Augmenting GPT-4o with Visual Sketchpad ✏️ We introduce Sketchpad agent, a framework that equips multimodal LLMs with a visual canvas and drawing tools 🎨 . Improving GPT-4o's performance in vision and math tasks 📈 🔗: https://t.co/I6ul5406E6

Humans draw to facilitate reasoning and communication. Why not let LLMs do so? 🚀We introduce✏️Sketchpad, which gives multimodal LLMs a sketchpad to draw and facilitate reasoning! https://t.co/DWsPQcuJ4b Sketchpad gives GPT-4o great boosts on many vision and math tasks 📈 The

😺 This work is done with my amazing collaborators: @yujielu_10, Muyu He, @WilliamWangNLP @DanRothNLP YOU ARE THE BEST!!! 😎🔥 (n/n)

🔥Highlights of the Commonsense-T2I benchmark: 📚Pairwise text prompts with minimum token change ⚙️Rigorous automatic evaluation with descriptions for expected outputs ❗️Even DALL-E 3 only achieves below 50% accuracy (2/n)

Can Text-to-Image models understand common sense? 🤔 Can they generate images that fit everyday common sense? 🤔 tldr; NO, they are far less intelligent than us 💁🏻‍♀️ Introducing Commonsense-T2I 💡 https://t.co/gf8VZHlxPS, a novel evaluation and benchmark designed to measure

Best-fit Packing completely eliminates unnecessary truncations while retaining the same training efficiency as concatenation with <0.01% overhead tested on popular pre-training datasets like @TIIuae's RefinedWeb and @BigCodeProject's Stack.🧵5/n

The common practice in LLM pre-training is to concat all docs then split into equal-length chunks. This is efficient but hurts data integrity: doc fragmentation leads to loss of info, and causes next-token prediction to be ungrounded, making model prone to hallucination.🧵2/n
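A minimal Python sketch of the contrast described in this thread, not the authors' implementation. `concat_and_chunk` follows the "concat all docs then split" recipe stated above, and `count_fragmented` counts documents that end up spread over more than one chunk. `best_fit_pack` is an assumption: it uses generic best-fit-decreasing bin packing to illustrate how whole documents could be grouped without cutting any that already fit in one sequence. All function names and the toy token lists are hypothetical.

```python
from typing import List

Doc = List[int]  # a tokenized document (hypothetical toy representation)


def concat_and_chunk(docs: List[Doc], max_len: int) -> List[Doc]:
    """Baseline: concatenate every doc, then cut into equal-length chunks."""
    stream = [tok for doc in docs for tok in doc]
    return [stream[i:i + max_len] for i in range(0, len(stream), max_len)]


def count_fragmented(docs: List[Doc], max_len: int) -> int:
    """Count docs whose tokens land in more than one baseline chunk."""
    fragmented, start = 0, 0
    for doc in docs:
        end = start + len(doc) - 1
        if doc and start // max_len != end // max_len:
            fragmented += 1
        start += len(doc)
    return fragmented


def best_fit_pack(docs: List[Doc], max_len: int) -> List[List[Doc]]:
    """Assumed sketch: best-fit-decreasing packing of documents into sequences.

    Only docs longer than max_len are cut (that truncation is unavoidable);
    every other doc is kept whole.
    """
    pieces = [doc[i:i + max_len] for doc in docs for i in range(0, len(doc), max_len)]
    pieces.sort(key=len, reverse=True)  # place the largest pieces first
    bins: List[List[Doc]] = []
    for piece in pieces:
        best_bin, best_free = None, None
        for b in bins:
            free = max_len - sum(len(p) for p in b)
            # pick the bin that fits the piece with the least space left over
            if len(piece) <= free and (best_free is None or free < best_free):
                best_bin, best_free = b, free
        if best_bin is None:
            bins.append([piece])
        else:
            best_bin.append(piece)
    return bins


docs = [[1, 1, 1], [2, 2, 2], [3, 3]]
print(concat_and_chunk(docs, 4))   # the second doc is split across two chunks
print(count_fragmented(docs, 4))
print(best_fit_pack(docs, 4))      # every doc stays whole
```

The inner loop recomputes each bin's free space, which is quadratic and only suitable for a toy example; a real pipeline would track remaining capacity per bin.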

🚀Introducing "Fewer Truncations Improve Language Modeling" at #ICML2024 We tackle a fundamental issue in LLM pre-training: docs are often broken into pieces. Such truncation hinders model from learning to compose logically coherent and factually grounded content. 👇🧵1/n

Can GPT-4V and Gemini-Pro perceive the world the way humans do? 🤔 Can they solve the vision tasks that humans can in the blink of an eye? 😉 tldr; NO, they are far worse than us 💁🏻‍♀️ Introducing BLINK👁 https://t.co/7Ia9u9e0EY, a novel benchmark that studies visual perception

BLINK: Multimodal Large Language Models Can See but Not Perceive. We introduce Blink, a new benchmark for multimodal language models (LLMs) that focuses on core visual perception abilities not found in other evaluations. Most of the Blink tasks can be solved by humans

Super excited to announce that our "3rd Workshop on NLP for Medical Conversations" will be co-located with IJCNLP-AACL 2023!! Website and CFP: https://t.co/17pA4at3PL

@aaclmeeting

#AACL2023 #NLProc #NLP #AI #DigitalHealth #HealthTech #Healthcare

We are thrilled to announce our second workshop on natural language interfaces, held in conjunction with the prestigious IJCNLP-AACL conference! In collaboration with researchers from AWS AI Labs, Google Research, Meta AI Research, and Microsoft Research, this workshop aims to

I’ve been working with @awscloud’s #Bedrock service for a couple of months now at @caylentinc, and I’d like to share some of what I’ve learned. 🧵

8

90

317

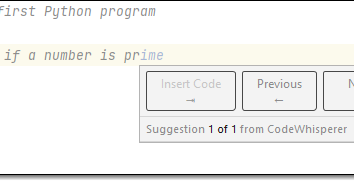

https://t.co/duGiOK8wBP is really neat. Helps you code faster, checks for security vulns, discloses licenses of code it drew from, and works great for AWS APIs. Boom! @awscloud putting ML to work for developers

aws.amazon.com

Amazon Q Developer is the most capable generative AI–powered assistant for building, operating, and transforming software, with advanced capabilities for managing data and AI/ML.

Excited to announce a new product from AWS AI: Amazon CodeWhisperer

aws.amazon.com

As I was getting ready to write this post I spent some time thinking about some of the coding tools that I have used over the course of my career. This includes the line-oriented editor that was an...
