Volodymyr Kuleshov 🇺🇦

@volokuleshov

Followers

9K

Following

2K

Media

456

Statuses

3K

Co-Founder @InceptionAILabs | Prof @Cornell & @Cornell_Tech | PhD @Stanford

Joined July 2013

Excited to announce the first commercial-scale diffusion language model---Mercury Coder. Mercury runs at 1000 tokens/sec on Nvidia hardware while matching the performance of existing speed-optimized LLMs. Mercury introduces a new approach to language generation inspired by image.

We are excited to introduce Mercury, the first commercial-grade diffusion large language model (dLLM)! dLLMs push the frontier of intelligence and speed with parallel, coarse-to-fine text generation.

31

34

390

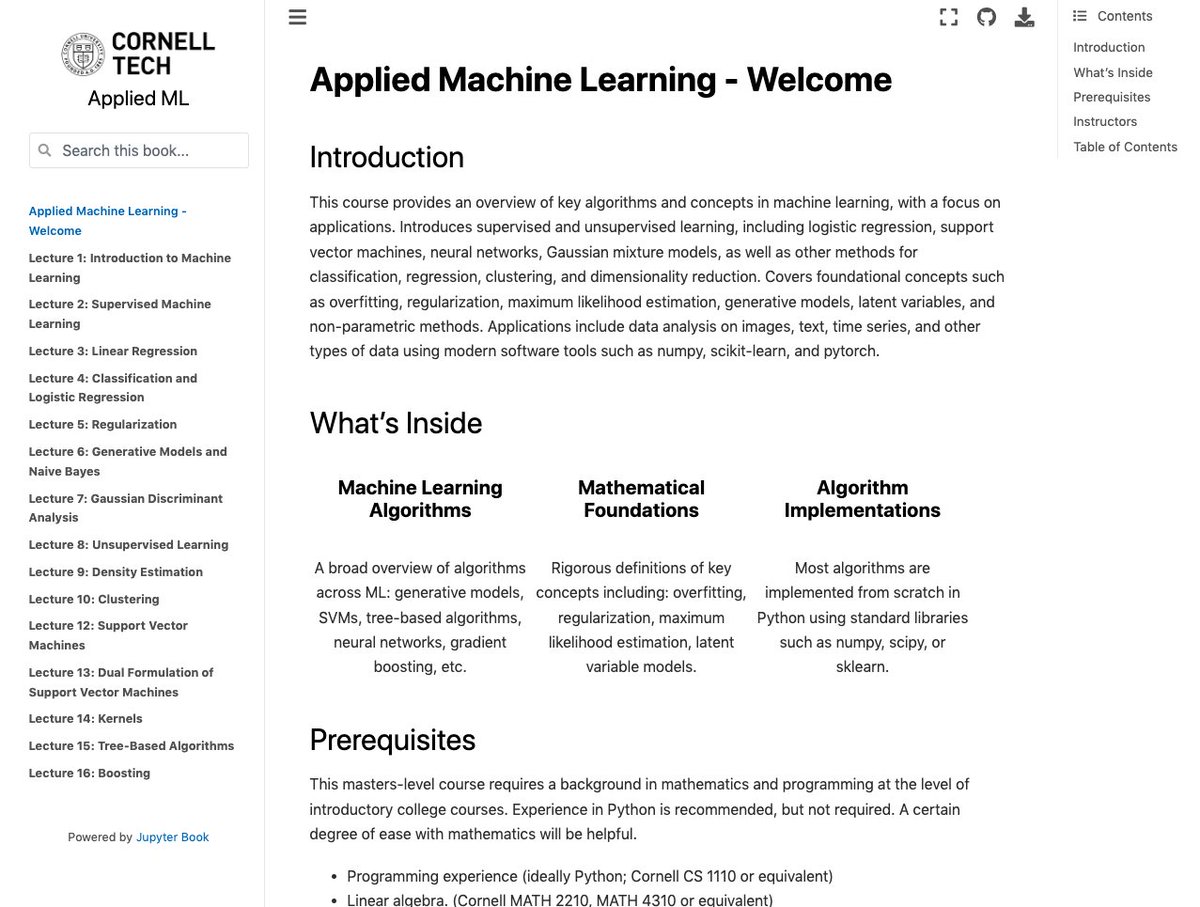

Did you ever want to learn more about machine learning in 2021? I'm excited to share the lecture videos and materials from my Applied Machine Learning course at @Cornell_Tech! We have 20+ lectures on ML algorithms and how to use them in practice. [1/5]

20

282

1K

Ok, I'm sorry, but this is just brilliant. Folks argue that AI can't make art, but look: (1) DALLE2 distills the essence of NY in a stunning & abstract way (2) each pic has a unique visual language (bridge-loops in #1!?) (3) it *builds* (not copies) something new on top of Picasso!

23

84

626

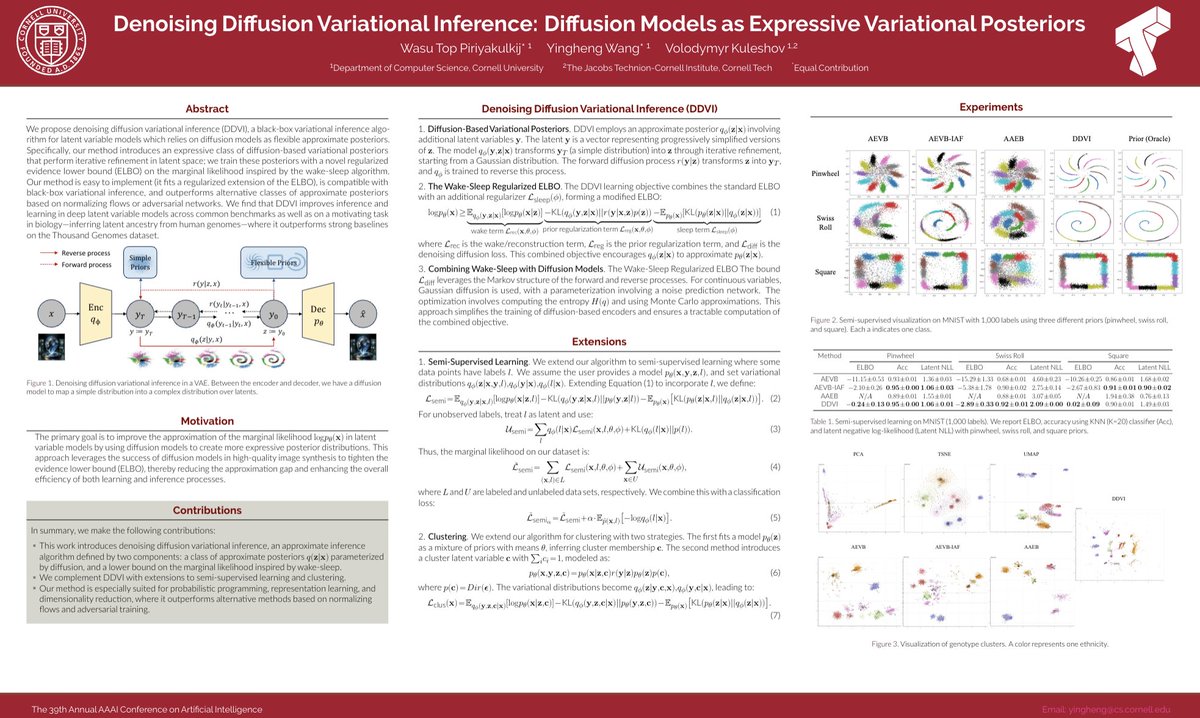

If you're at #AAAI2025, try to catch Cornell PhD student @yingheng_wang, who just presented a poster on Diffusion Variational Inference. The main idea is to use a diffusion model as a flexible variational posterior in variational inference (e.g., as the q(z|x) in a VAE) [1/3]

3

22

332

As promised, here is a summary of @cornell_tech Applied ML 2021 Lecture 1: "What is ML?". The main idea is that machine learning is a form of programming, where you create software by specifying data and a learning algorithm instead of writing traditional code.

2

34

235

Loved this nice and simple idea for better data selection in LMs. First, use high-level features to describe high-value data (e.g., textbook chunks). Then use importance sampling to prioritize similar data in a large dataset. @sangmichaelxie

2

29

228
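The two steps described in the tweet above (describe high-value data with features, then use importance sampling to prioritize similar data) can be sketched roughly as follows. This is a minimal illustration and not the method from the referenced work: the bag-of-words features, the smoothed unigram models, the Gumbel top-k resampling, and all function names are assumptions made for this example.

```python
import math
import random
from collections import Counter


def fit_unigram(docs, vocab, alpha=1.0):
    """Smoothed log-probabilities of each vocab word (assumed feature model)."""
    counts = Counter(w for d in docs for w in d.split())
    total = sum(counts[w] for w in vocab) + alpha * len(vocab)
    return {w: math.log((counts[w] + alpha) / total) for w in vocab}


def log_importance_weight(doc, log_p_target, log_p_raw):
    """log p_target(doc) - log p_raw(doc) under the two unigram models."""
    return sum(log_p_target[w] - log_p_raw[w] for w in doc.split())


def select(raw_docs, target_docs, k, seed=0):
    """Resample k raw docs without replacement, favoring target-like docs."""
    vocab = {w for d in raw_docs + target_docs for w in d.split()}
    log_p_target = fit_unigram(target_docs, vocab)
    log_p_raw = fit_unigram(raw_docs, vocab)
    rng = random.Random(seed)
    keyed = []
    for doc in raw_docs:
        log_w = log_importance_weight(doc, log_p_target, log_p_raw)
        # Gumbel top-k: adding Gumbel noise to log-weights and keeping the
        # k largest keys samples without replacement in proportion to weights.
        u = max(rng.random(), 1e-12)
        keyed.append((log_w - math.log(-math.log(u)), doc))
    keyed.sort(reverse=True)
    return [doc for _, doc in keyed[:k]]
```

Documents whose word statistics look more like the high-value examples than like the raw pool get larger importance weights, so they are more likely to survive the resampling step.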

Introducing test-time compute scaling for discrete diffusion LLMs! This almost matches AR LLM sample quality using diffusion. In traditional masked diffusion, once a token is unmasked, it can never be edited again, even if it contains an error. Our new remasking diffusion allows

We are excited to present ReMasking Diffusion Models (ReMDM), a simple and general framework for improved sampling with masked discrete diffusion models that can benefit from scaled inference-time compute. Without any further training, ReMDM is able to enhance the sample quality

4

23

200

Slides from my talk at the @uai2018 workshop on uncertainty in deep learning. Thanks again to the organizers for inviting me!

3

40

165

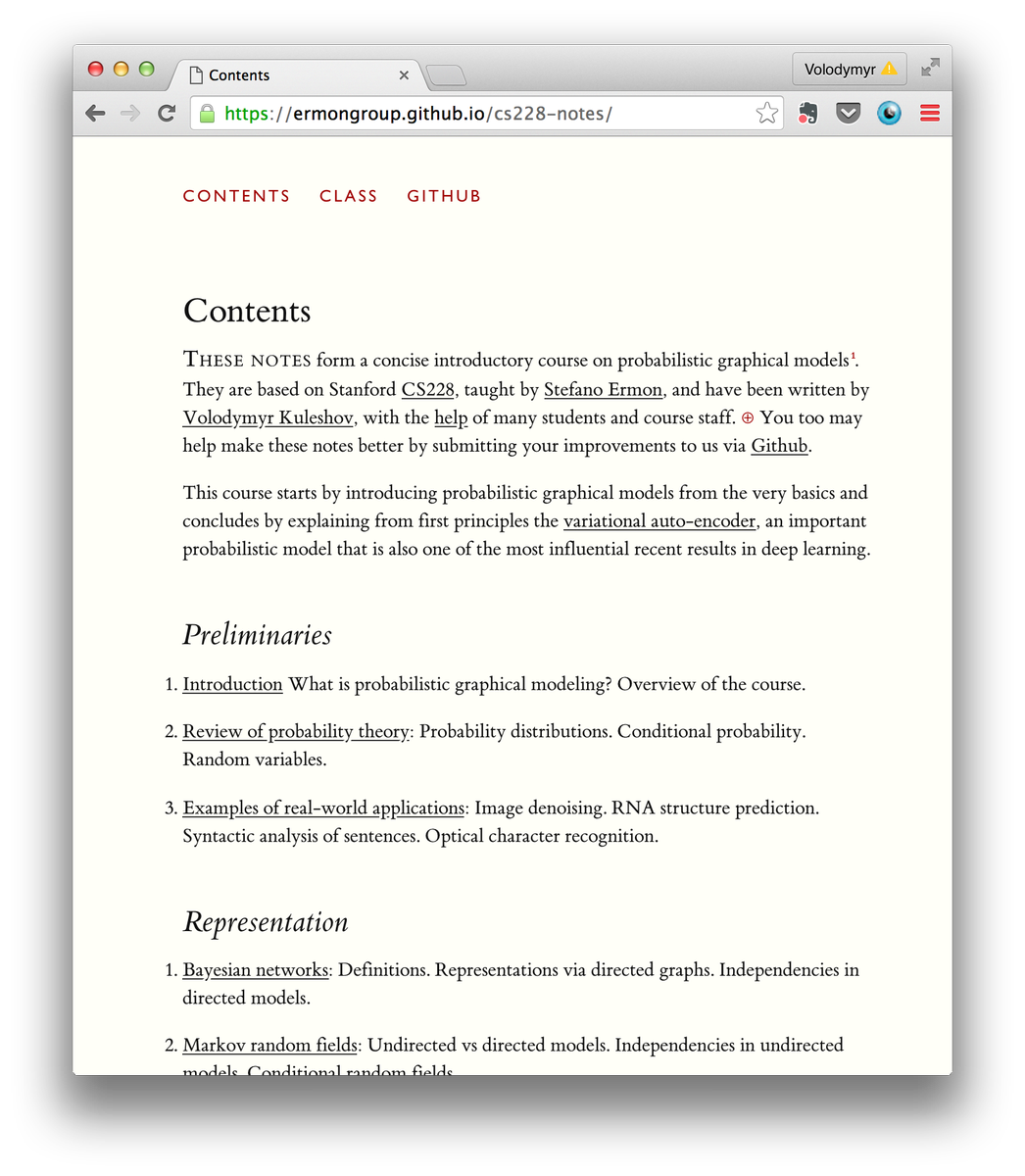

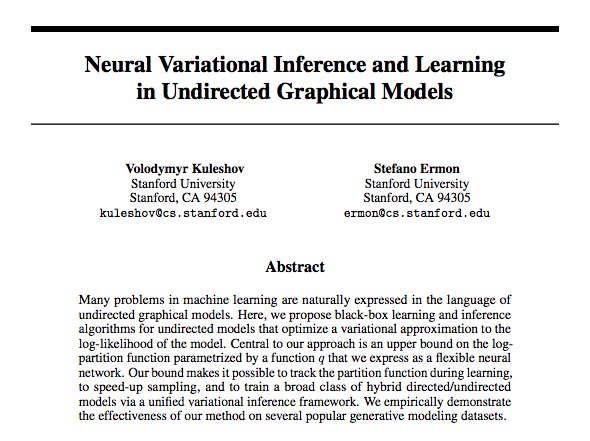

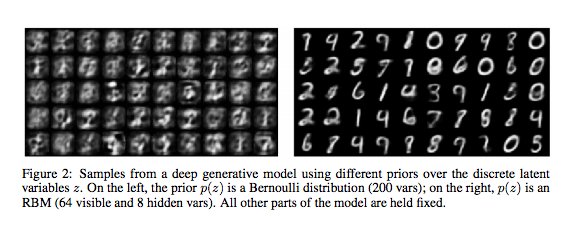

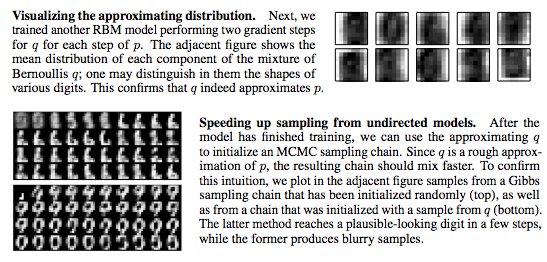

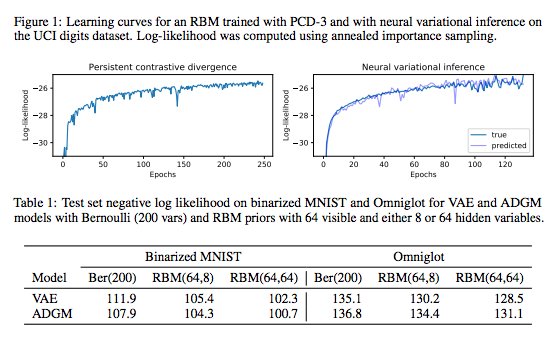

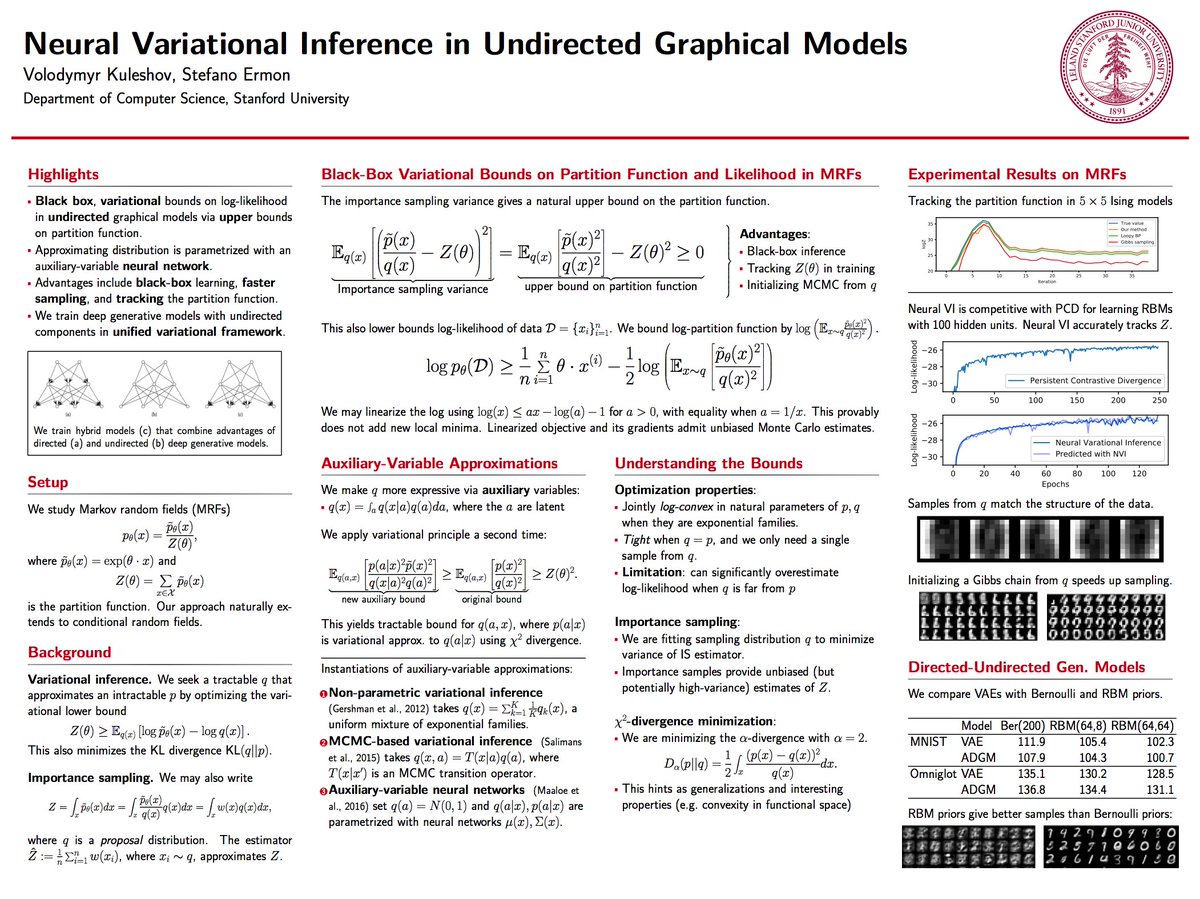

New paper on black-box learning of undirected models using neural variational inference. Also speeds up sampling and helps estimate the partition function. Our #nips2017 paper is online here:

2

46

153

Update on my fall 2021 @cornell_tech applied ML course. Each week, I will be releasing all the slides, lecture notes, and course materials on Github, and I also plan to post summaries of each lecture on Twitter. Everybody is welcome to follow along!

1

23

135

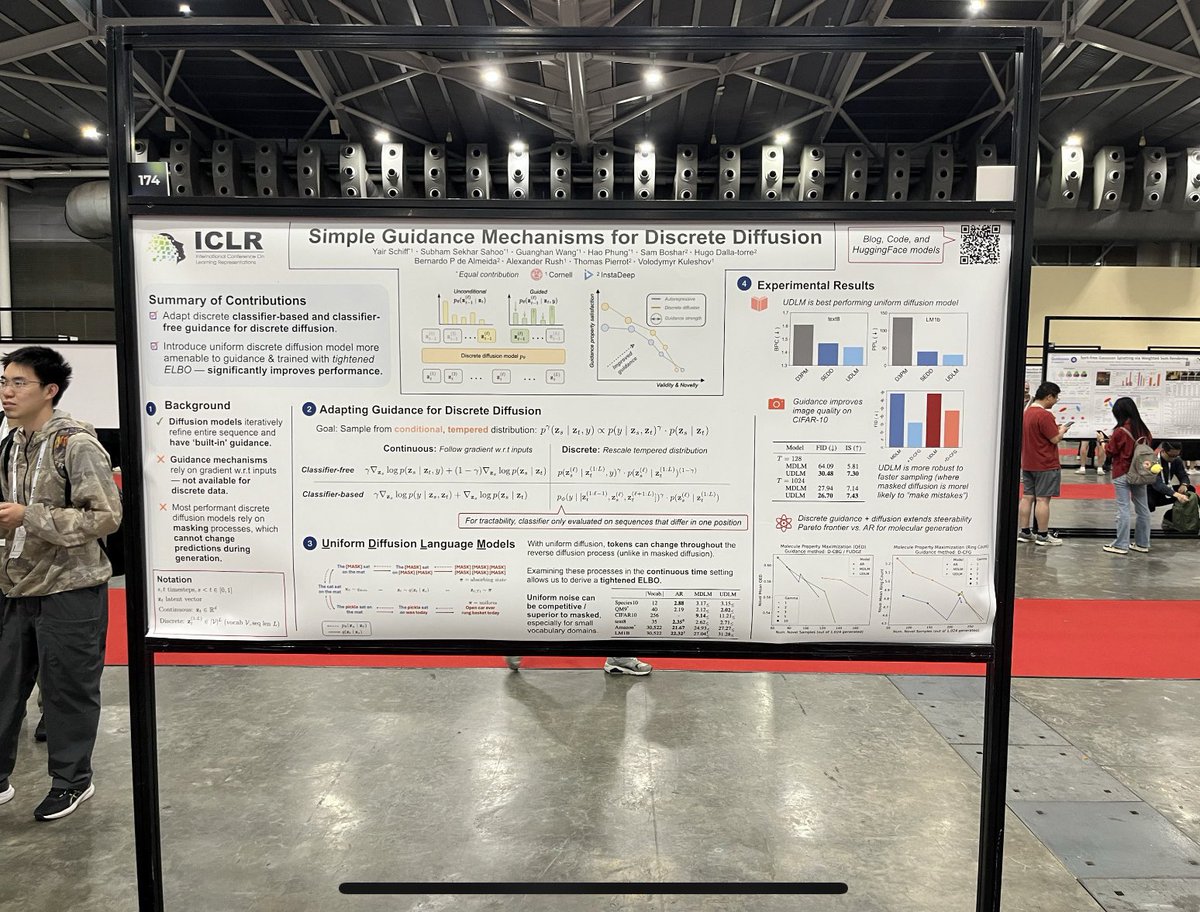

If you’re at #iclr2025, you should catch Cornell PhD student @SchiffYair—check out his new paper that derives classifier-based and classifier-free guidance for discrete diffusion models.

Diffusion models produce high quality outputs and have powerful guidance mechanisms. Recently, discrete diffusion has shown strong language modeling. ❓Can we adapt guidance mechanisms for discrete diffusion models to enable more controllable discrete generation? 1/13 🧵

0

10

121

This is absolutely mind-blowing. Finding these kinds of disentangled representations has been a goal of generative modeling research for years. The fact that style-based GANs do it so well in a purely unsupervised way and without a strong inductive bias is just crazy.

An exciting property of style-based generators is that they have learned to do 3D viewpoint rotations around objects like cars. These kinds of meaningful latent interpolations show that the model has learned about the structure of the world.

3

26

113

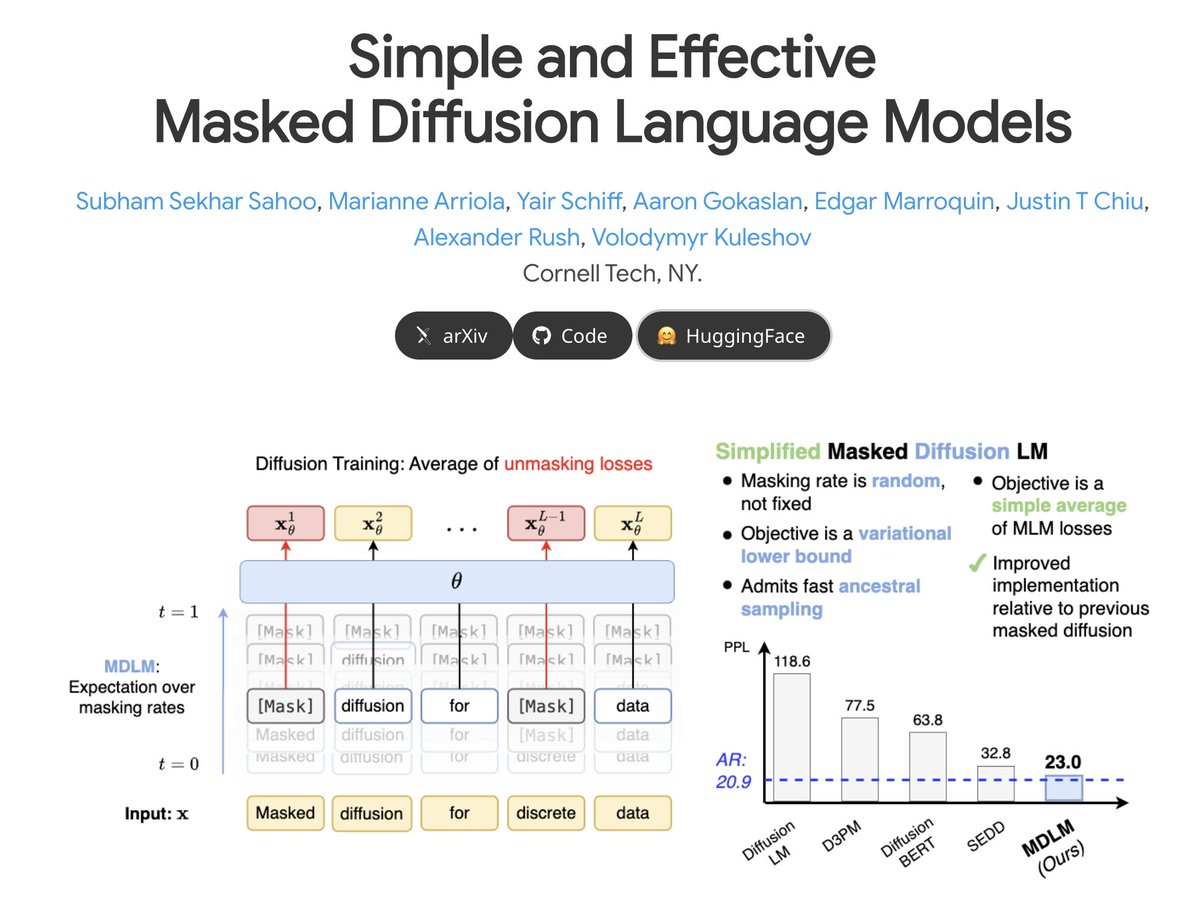

Masked diffusion language modeling to appear at #NeurIPS2024. MDLM matches the quality of autoregressive transformers using diffusion! 🔥 Also featuring new improvements since this summer: connections to score estimation, pre-trained model, full codebase 👇

✨Simple masked diffusion language models (MDLM) match autoregressive transformer performance within 15% at GPT2 scale for the first time! 📘Paper: 💻Code: 🤖Model: 🌎Blog: [1/n]👇

1

16

107

New paper out in @NatureComms! GWASkb is a machine-compiled knowledge base of genetic studies that approaches the quality of human curation for the first time. With @ajratner @bradenjhancock @HazyResearch @yang_i_li et al. Last paper from the PhD years.

2

13

88

"A Guide to Deep Learning in Healthcare" out in @NatureMedicine this week! Joint work with an amazing team of collaborators @AndreEsteva @AlexandreRbcqt @rbhar90 @SebastianThrun @JeffDean M. DePristo, C. Cui, K. Chou, G. Corrado. Paper:

1

33

86

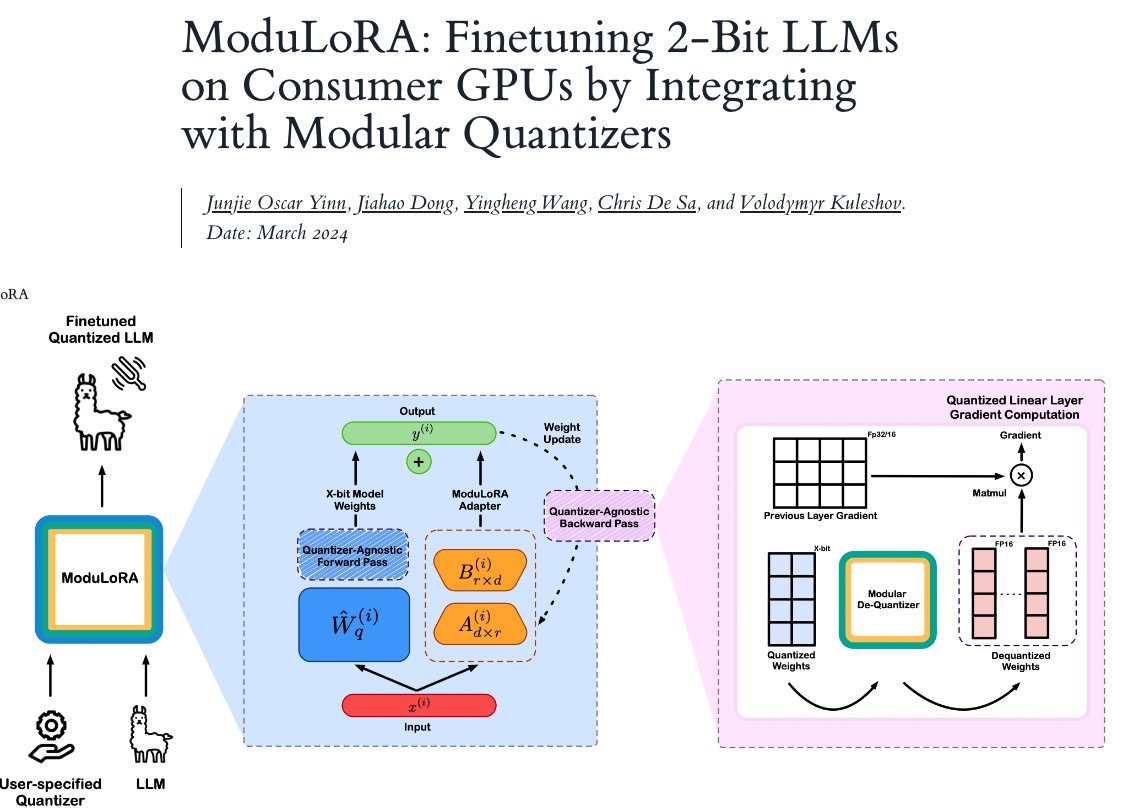

2-bit LLaMAs are here! 🦙✨ The new QuIP# ("quip-sharp") algorithm enables running the largest 70B models on consumer-level 24GB GPUs with only a minimal drop in accuracy. Amazing work led by Cornell students @tsengalb99 @CheeJerry + colleagues @qingyao_sun @chrismdesa. [1/n]

2

20

81

At #icml2018 in Stockholm this week! Come check out our poster on improved uncertainty estimation for Bayesian models and deep neural networks on Thursday.

1

23

83

Official results from @ArtificialAnlys benchmarking the speeds of Mercury Coder diffusion LLMs at >1,000 tok/sec on Nvidia GPUs. This is a big deal for LLMs: previously, achieving these speeds required specialized hardware. Diffusion is a "software" approach that gets to the.

Inception Labs has launched the first production-ready Diffusion LLMs. Mercury Coder Mini achieves >1,000 output tokens/s on coding tasks while running on NVIDIA H100s - over 5x faster than competitive autoregressive LLMs on similar hardware. Inception’s Diffusion LLMs (“dLLMs”)

8

6

77

Love to see my machine learning lectures being shared online. Also, please stay tuned—I will be announcing a major new update to my online class in the next few days.

Applied Machine Learning - Cornell CS5785. "Starting from the very basics, covering all of the most important ML algorithms and how to apply them in practice. Executable Jupyter notebooks (and as slides)". 80 videos. Videos: Code:

2

12

75

We're hosting a generative AI hackathon at @cornell_tech on 4/23! Join us to meet fellow researchers & hackers, listen to interesting talks, access models like GPT4, and build cool things. Sponsors: @openai @LererHippeau @AnthropicAI @BloombergBeta. RSVP

5

13

74

After a summer break, I’m getting back to tweeting again. This Fall, I’m teaching Applied ML at @cornell_tech in this huge room (and this time in person). Stay tuned for updates as I’ll be sharing a lot of the lecture videos and materials over the next few months!

2

1

73

If you didn't get an invite to the Elon Musk / Tesla party tonight, come check out our poster on Neural Variational Inference in Undirected Graphical Models at board #108 :)

0

10

73

Benchmarking all the sklearn classifiers against each other. Gradient boosting comes out on top (confirming general wisdom)

Our preprint on a comprehensive benchmark of #sklearn is out. Some interesting findings! #MachineLearning #dataviz.

1

19

68

Stanford researchers announced new 500TB Medical ImageNet dataset at #gtc. The project webpage is already up:

0

33

63

#Neurips2022 is now over---here is what I found exciting this year. Interesting trends include creative ML, diffusion models, language models, LLMs + RL, and some interesting theoretical work on conformal prediction, optimization, and more.

1

4

65

How can deep learning be useful in causal inference? In our #NeurIPS2022 paper, we argue that causal effect estimation can benefit from large amounts of unstructured "dark" data (images, sensor data) that can be leveraged via deep generative models to account for confounders.

1

4

61

@NandoDF @ilyasut @icmlconf @iclr2019 Don't the benefits of increased reproducibility and rigor on the part of the authors greatly outweigh any potential misuses of their work, at least for the vast majority of ICML/ICLR papers? I think the current shift towards empirical work puts a greater need on releasing code.

4

1

57

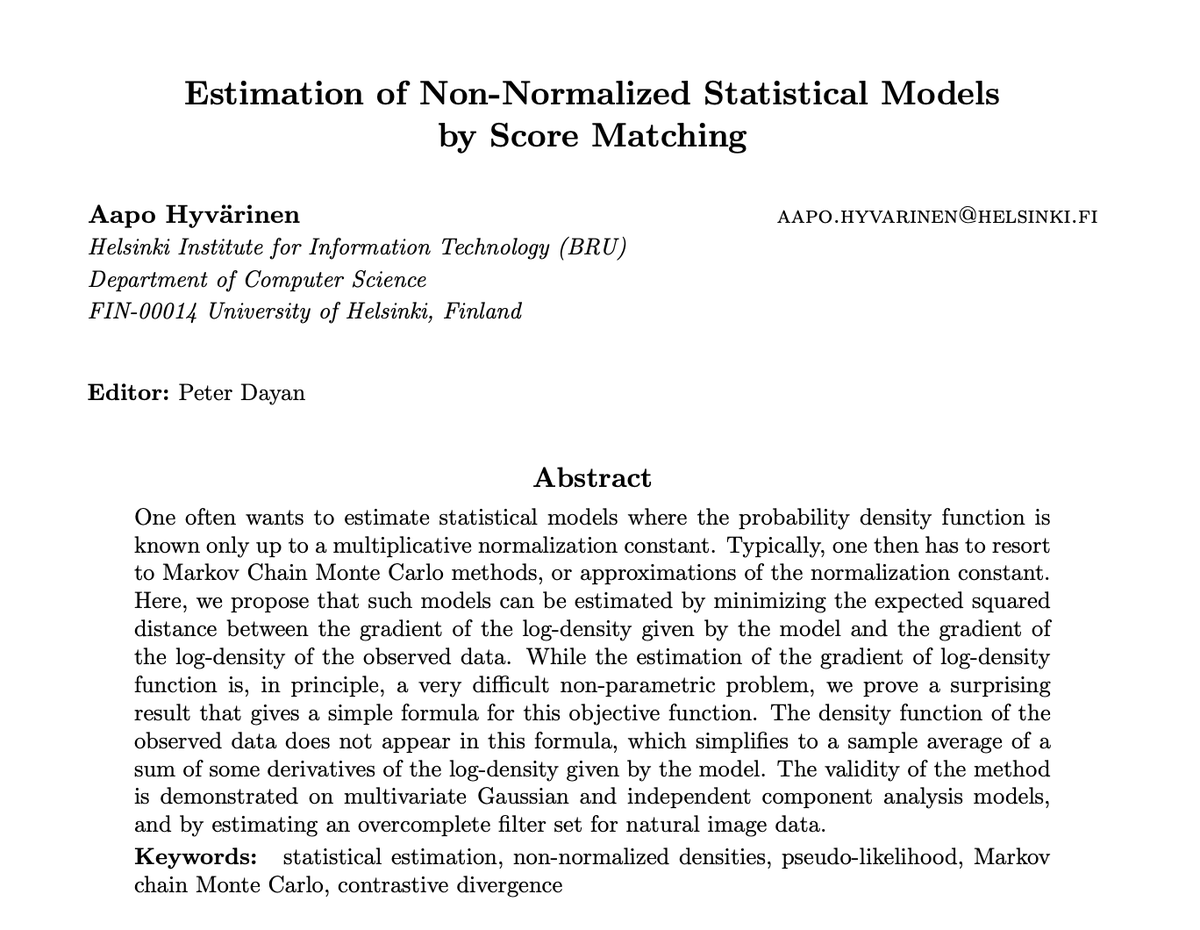

Cool reddit thread on beautiful ML papers, but why are they all DL papers?? :) How about Wainwright and Jordan? Or the online learning stuff by Shalev-Shwartz? DL is awesome but, come on, that's not all there is to ML :) My vote goes to this paper:

2

4

56

The ICLR paper decisions haven’t even been made yet, yet text-to-3D models have already been deployed in a commercial app by Luma. What a crazy year! Shoutout to @poolio for the original work on DreamFusion.

✨ Introducing Imagine 3D: a new way to create 3D with text! Our mission is to build the next generation of 3D and Imagine will be a big part of it. Today Imagine is in early access and as we improve we will bring it to everyone

1

4

54

It’s awesome to be back at @cornell_tech! Kicking off the fall semester with some stunning views of NYC.

2

1

52

Earlier this month at #icml2019, we presented new work which examines the question of what uncertainties are needed in model-based RL. Taking inspiration from early work on scoring rules in statistics, we argue that uncertainties in RL must be *calibrated*. [1/10]

1

8

49

And the new world record of information content per character in a tweet goes to...

Some ways of combining information in two branches of a net A & B: 1) A+B, 2) A*B, 3) concat [A,B], 4) LSTM-style tanh(A) * sigmoid(B), 5) hypernetwork-style convolve A with weights w = f(B), 6) hypernetwork-style batch-norm A with \gamma, \beta = f(B), 7) A soft attend to B, ... ?

0

2

40
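Several of the combination options listed in the quoted tweet above can be written out in a few lines of NumPy. This is a rough sketch under stated assumptions: A and B are taken to be 2-D arrays of shape (batch, features), the function names are hypothetical, f(B) is assumed to be a plain linear map with weight matrices `w_gamma` and `w_beta`, and option 5 (convolving A with weights generated from B) is left out for brevity.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def combine(a, b, mode):
    """Options 1-4: elementwise sum, product, concatenation, LSTM-style gate."""
    if mode == "add":
        return a + b
    if mode == "mul":
        return a * b
    if mode == "concat":
        return np.concatenate([a, b], axis=1)
    if mode == "gate":
        return np.tanh(a) * sigmoid(b)
    raise ValueError(f"unknown mode: {mode}")


def conditional_batch_norm(a, b, w_gamma, w_beta, eps=1e-5):
    """Option 6: normalize A over the batch, then scale and shift it with
    gamma, beta = f(B), where f is assumed to be linear here."""
    a_hat = (a - a.mean(axis=0)) / np.sqrt(a.var(axis=0) + eps)
    gamma = b @ w_gamma
    beta = b @ w_beta
    return gamma * a_hat + beta


def soft_attend(a, b):
    """Option 7: rows of A act as queries over the rows of B
    (scaled dot-product attention, with B as both keys and values)."""
    scores = a @ b.T / np.sqrt(a.shape[1])
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ b
```

The first four options need A and B to have matching shapes, while attention only needs the feature dimension to match, so B can have a different number of rows than A.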

Diffusion models produce great samples, but they lack a semantically meaningful latent space like in a VAE or a GAN. We augment diffusion with low-dimensional latents that can be used for image manipulation, interpolation, controlled generation. #ICML2023.

Thrilled to share our latest paper - Infomax #Diffusion! We're pushing the boundaries of standard diffusion models by unsupervised learning of a concise, interpretable latent space. Enjoy fun latent space editing techniques just like GANs/VAEs! Details:

1

5

38

Did you know that word2vec was rejected at the first ICLR (when it was still a workshop)? Don’t get discouraged by the peer review process: the best ideas ultimately get the recognition they deserve.

Tomas Mikolov, the OG and inventor of word2vec, gives his thoughts on the test of time award, the current state of NLP, and chatGPT. 🍿

1

2

32

Excited to share that @afreshai raised another $12M to advance our mission of using AI to reduce waste across the food supply chain. We're always looking for talented folks who are passionate about AI, food, and the environment to join our growing team.

3

3

34

As the generative AI hackathon and post-hackathon events come to an end, I want to again thank everyone who attended! Incredibly grateful to @davederiso @agihouse_org for organizing the event! 🙏 to @LererHippeau & the NYC VC community for sponsoring it

2

6

34