Sebastian Farquhar

@seb_far

Followers: 2K

Following: 346

Media: 51

Statuses: 597

Research Scientist @DeepMind - AI Alignment. Associate Member @OATML_Oxford and RainML @UniofOxford. All views my dog's.

Oxford, UK

Joined September 2012

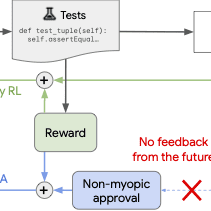

RT @ancadianadragan: New paper from my team on avoiding reward hacking. MONA reduced RL's ability to pursue a multi-turn reward hacking str…

0

6

0

RT @rohinmshah: New AI safety paper! Introduces MONA, which avoids incentivizing alien long-term plans. This also implies “no long-term RL…

0

13

0

RT @sebkrier: Check out the paper itself: An introductory explainer: The technical safety…

alignmentforum.org

MONA combines myopic optimization with non-myopic approval to mitigate multi-step reward hacking in LLM agents without assuming we can detect or understand the reward hacking.

0

1

0

By default, LLM agents with long action sequences use early steps to undermine your evaluation of later steps, a big alignment risk. Our new paper mitigates this, keeps the ability for long-term planning, and doesn't assume you can detect the undermining strategy. 👇

New Google DeepMind safety paper! LLM agents are coming – how do we stop them finding complex plans to hack the reward? Our method, MONA, prevents many such hacks, *even if* humans are unable to detect them! Inspired by myopic optimization but with better performance – details in 🧵

1

1

18

RT @AllanDafoe: Update: we’re hiring for multiple positions! Join GDM to shape the frontier of AI safety, governance, and strategy. Priorit…

0

20

0

RT @ancadianadragan: So freaking proud of the AGI safety&alignment team -- read here a retrospective of the work over the past 1.5 years ac…

alignmentforum.org

We wanted to share a recap of our recent outputs with the AF community. Below, we fill in some details about what we have been working on, what motiv…

0

62

0

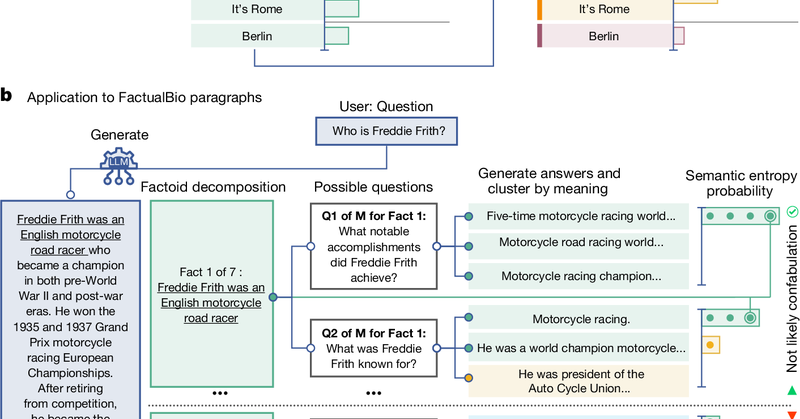

RT @JoshuaSchrier: This was a really interesting article and idea for detecting #llm confabulations, so I decided to think through it from…

jschrier.github.io

Farquhar et al. recently described a method for Detecting hallucinations in large language models using semantic entropy (Nature 2024). The core idea is to use an LLM to cluster statements depending...

0

1

0

RT @NeelNanda5: Sparse Autoencoders act like a microscope for AI internals. They're a powerful tool for interpretability, but training cost…

0

150

0

RT @ancadianadragan: Gemini 1.5 Pro is the safest model on the Scale Adversarial Robustness Leaderboard! We’ve made a number of innovations…

0

25

0

RT @NeelNanda5: New GDM mech interp paper led by @sen_r: JumpReLU SAEs a new SOTA SAE method! We replace standard ReLUs with discontinuous…

0

17

0

RT @ZacKenton1: Eventually, humans will need to supervise superhuman AI - but how? Can we study it now? We don't have superhuman AI, but w…

0

61

0

RT @CompSciOxford: Major study out now in Nature by Prof Yarin Gal @yaringal, Dr Sebastian Farquhar @seb_far, Jannik Kossen @janundnik & Lo…

0

3

0

RT @karinv: I was invited by @NatureNV to comment on a paper recently published in @Nature by @seb_far @janundnik @_lorenzkuhn @yaringal on…

0

8

0

RT @Nature: Nature research paper: Detecting hallucinations in large language models using semantic entropy

nature.com

Nature - Hallucinations (confabulations) in large language model systems can be tackled by measuring uncertainty about the meanings of generated responses rather than the text itself to improve...

0

42

0

Overview of our paper on detecting hallucinations in large language models with semantic entropy from @ScienceMagazine.

science.org

Second AI that acts as “truth cop” could provide the reliability models need for rollout in health care, education, and other fields

0

3

14

RT @janundnik: Our work on detecting hallucinations in LLMs just got published in @Nature! Check it out :)

0

9

0