Senthooran Rajamanoharan

@sen_r

Followers

309

Following

12

Media

6

Statuses

28

RT @NeelNanda5: GDM interp work: Do LLMs have self-preservation?. Concerning recent work: models may block shutdown if it interferes with t….

0

21

0

RT @emmons_scott: Is CoT monitoring a lost cause due to unfaithfulness? 🤔. We say no. The key is the complexity of the bad behavior. When w….

0

40

0

RT @IvanArcus: 🧵 NEW: We updated our research on unfaithful AI reasoning!. We have a stronger dataset which yields lower rates of unfaithfu….

0

10

0

RT @EdTurner42: 1/8: The Emergent Misalignment paper showed LLMs trained on insecure code then want to enslave humanity. ?!. We're releasi….

0

50

0

RT @ArthurConmy: In our new paper, we show that Chain-of-Thought reasoning is not always faithful in frontier thinking models!.We show this….

0

4

0

RT @JoshAEngels: 1/14: If sparse autoencoders work, they should give us interpretable classifiers that help with probing in difficult regim….

0

61

0

RT @javifer_96: New ICLR 2025 (Oral) paper🚨. Do LLMs know what they don’t know?.We observed internal mechanisms suggesting models recognize….

0

44

0

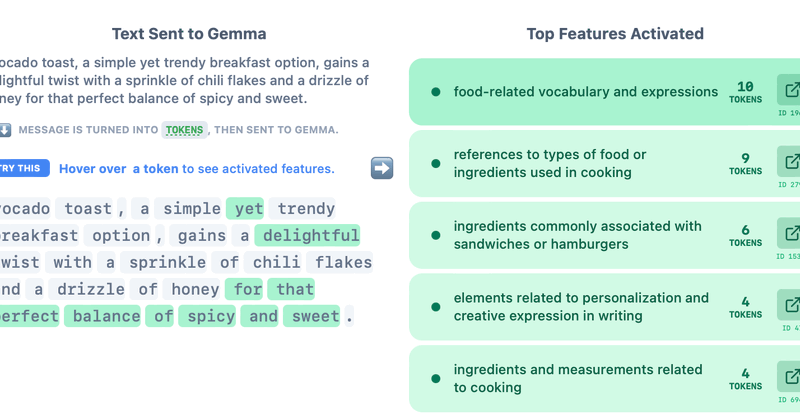

Check out this fantastic interactive demo by @neuronpedia to see what interesting features you find using Gemma Scope!

neuronpedia.org

Exploring the Inner Workings of Gemma 2 2B

0

0

3

Today we're releasing Gemma Scope: hundreds of SAEs trained on every layer of Gemma 2 2B and 9B and select layers of 27B! Really excited to see how these SAEs help further research into how language models operate.

Sparse Autoencoders act like a microscope for AI internals. They're a powerful tool for interpretability, but training costs limit research. Announcing Gemma Scope: An open suite of SAEs on every layer & sublayer of Gemma 2 2B & 9B! We hope to enable even more ambitious work

2

0

20

RT @NeelNanda5: Great to see my team's JumpReLU Sparse Autoencoder paper covered in VentureBeat! Fantastic work from @sen_r.

0

2

0

Happy to see our new paper on JumpReLU SAEs featured on Daily Papers from @huggingface - and looking forward to releasing hundreds of open SAEs trained this way on Gemma 2 soon!.

Google presents Jumping Ahead. Improving Reconstruction Fidelity with JumpReLU Sparse Autoencoders. Sparse autoencoders (SAEs) are a promising unsupervised approach for identifying causally relevant and interpretable linear features in a language model's (LM) activations. To be

1

0

7

RT @NeelNanda5: New GDM mech interp paper led by @sen_r: JumpReLU SAEs a new SOTA SAE method! We replace standard ReLUs with discontinuous….

0

17

0

RT @AnthropicAI: New Anthropic research paper: Scaling Monosemanticity. The first ever detailed look inside a leading large language model….

0

551

0

RT @NeelNanda5: Announcing the first Mechanistic Interpretability workshop, held at ICML 2024! We have a fantastic speaker line-up @ch402 @….

0

60

0

Check out the paper at Thanks to my great coauthors! @ArthurConmy Lewis Smith @lieberum_t @VikrantVarma_ @JanosKramar @rohinmshah @NeelNanda5.

arxiv.org

Recent work has found that sparse autoencoders (SAEs) are an effective technique for unsupervised discovery of interpretable features in language models' (LMs) activations, by finding sparse,...

1

1

15