Percy Liang

@percyliang

Followers

49,706

Following

408

Media

40

Statuses

806

Associate Professor in computer science @Stanford @StanfordHAI @StanfordCRFM @StanfordAILab @stanfordnlp | cofounder @togethercompute | Pianist

Stanford, CA

Joined October 2009


📣 CRFM announces PubMedGPT, a new 2.7B language model that achieves a new SOTA on the US medical licensing exam. The recipe is simple: a standard Transformer trained from scratch on PubMed (from The Pile) using

@mosaicml

on the MosaicML Cloud, then fine-tuned for the QA task.

41

331

2K

I worry about language models being trained on test sets. Recently, we emailed support

@openai

.com to opt out of having our (test) data be used to improve models. This isn't enough though: others running evals could still inadvertently contribute those test sets to training.

39

111

1K

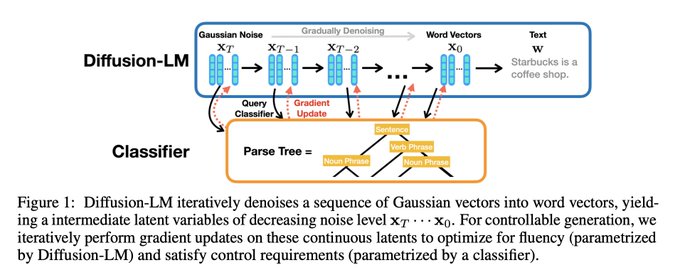

Vision took autoregressive Transformers from NLP. Now, NLP takes diffusion from vision. What will be the dominant paradigm in 5 years? Excited by the wide open space of possibilities that diffusion unlocks.

3

85

469

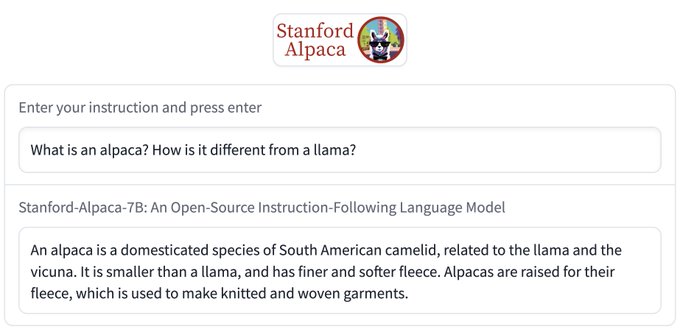

Lack of transparency/full access to capable instruct models like GPT 3.5 has limited academic research in this important space. We make one small step with Alpaca (LLaMA 7B + self-instruct text-davinci-003), which is reasonably capable and dead simple:

13

85

451

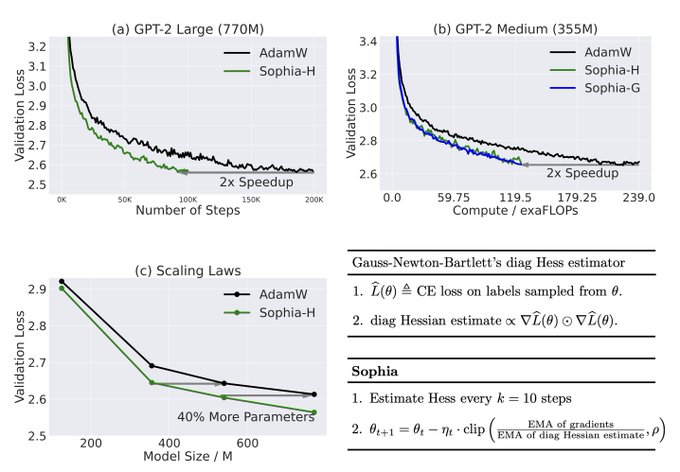

2nd-order optimization has been around for 300+ years...we got it to scale for LLMs (it's surprisingly simple: use the diagonal + clip). Results are promising (2x faster than Adam, which halves your $$$). A shining example of why students should still take optimization courses!

19

60

423
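To make the "diagonal + clip" idea in the post above concrete, here is a minimal sketch of one such update on a toy quadratic. It is an illustration under stated assumptions, not the authors' optimizer: the function name `clipped_diagonal_step` and the parameters `lr`, `rho`, and `eps` are hypothetical, and the Hessian diagonal is assumed to be given rather than estimated.

```python
def clipped_diagonal_step(params, grads, hess_diag, lr=1.0, rho=1.0, eps=1e-12):
    """One update: theta <- theta - lr * clip(g / max(h, eps), -rho, rho).

    Hypothetical helper for illustration only.
    """
    new_params = []
    for theta, g, h in zip(params, grads, hess_diag):
        # Newton-like step that uses only the diagonal curvature entry.
        step = g / max(h, eps)
        # Clip so that tiny or negative curvature cannot blow up the step.
        step = max(-rho, min(rho, step))
        new_params.append(theta - lr * step)
    return new_params


# Toy problem: f(x) = 0.5 * sum(a_i * x_i**2), so grad_i = a_i * x_i and H_ii = a_i.
curvature = [10.0, 0.1]
x = [4.0, -2.0]
grads = [a * xi for a, xi in zip(curvature, x)]

print(clipped_diagonal_step(x, grads, curvature, rho=1.0))   # [3.0, -1.0]
print(clipped_diagonal_step(x, grads, curvature, rho=10.0))  # [0.0, 0.0]
```

With a loose clip the step is an exact per-coordinate Newton step and reaches the minimum at once, regardless of how badly conditioned the two coordinates are; the tight clip trades that speed for bounded step sizes.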

LM APIs are fickle, hurting reproducibility (I was really hoping that text-davinci-003 was going to stick around for a while, given the number of papers using it). Researchers should seriously use open models (especially as they are getting better now!)

7

42

271

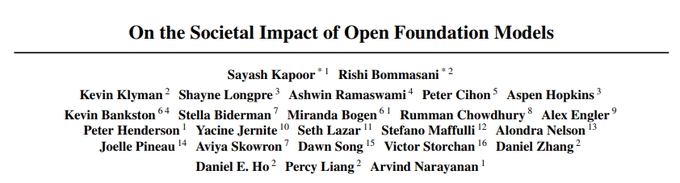

I want to thank each of my 113 co-authors for their incredible work - I learned so much from all of you,

@StanfordHAI

for providing the rich interdisciplinary environment that made this possible, and everyone who took the time to read this and give valuable feedback!

3

30

265

The goal is simple: a robust, scalable, easy-to-use, and blazing fast endpoint for open models like Llama 2, Mistral, etc. The implementation is anything but. Super impressed with the team for making this happen! And we're not done yet...if you're interested, come talk to us.

5

38

263

Llama 2 was trained on 2.4T tokens. RedPajama-Data-v2 has 30T tokens. But of course the data is of varying quality, so we include 40+ quality signals. Open research problem: how do you automatically select data for pretraining LMs? Data-centric AI folks: have a field day!

2

40

261

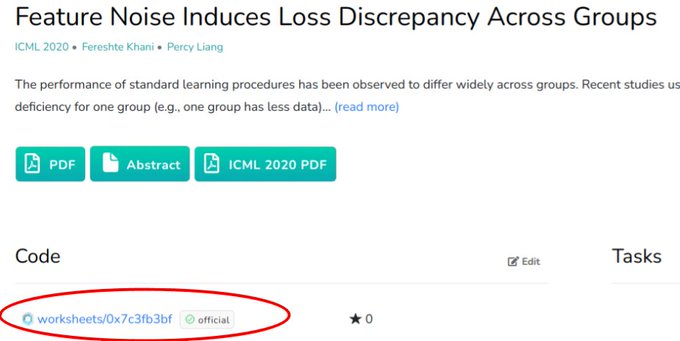

Executable papers on CodaLab Worksheets are now linked from pages thanks to a collaboration with

@paperswithcode

! For example:

1

43

230

The two most surprising things to me were that the trained Transformer could exploit sparsity like LASSO and that it exhibits double descent. How on earth is the Transformer encoding these algorithmic properties, and how did it just acquire them through training?

LLMs can do in-context learning, but are they "learning" new tasks or just retrieving ones seen during training? w/

@shivamg_13

,

@percyliang

, & Greg Valiant we study a simpler Q:

Can we train Transformers to learn simple function classes in-context? 🧵

8

106

512

2

33

176

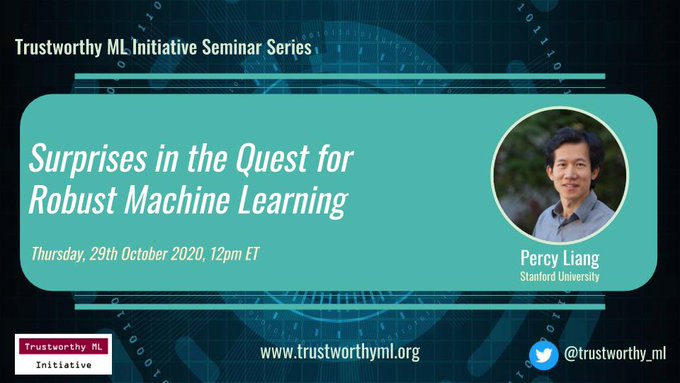

...where I will attempt to compress all of my students' work on robust ML in the last 3 years into 40 minutes. We'll see how that goes.

1/ 📢 Registration now open for Percy Liang's (

@percyliang

) seminar this Thursday, Oct 29 from 12 pm to 1.30 pm Eastern Time! 👇🏾

Register here:

#TrustML

#MachineLearning

#ArtificialIntelligence

#DeepLearning

1

14

59

2

18

169

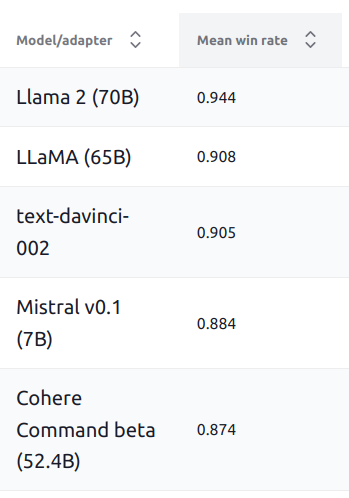

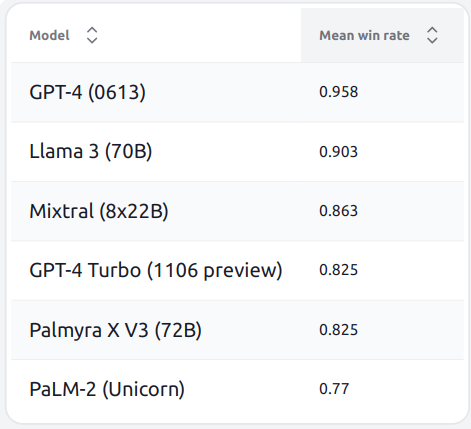

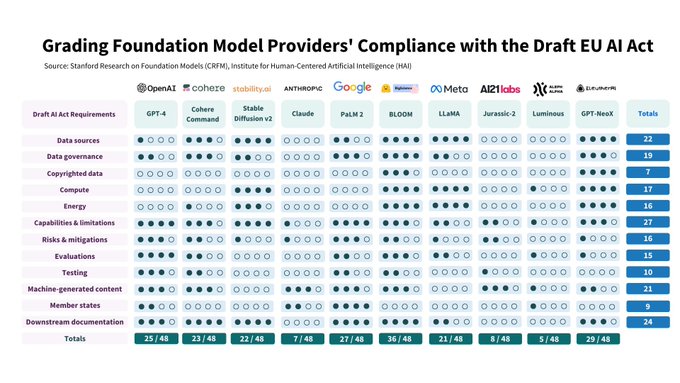

Holistic Evaluation of Language Models (HELM) v0.2.2 is updated with results from

@CohereAI

's command models and

@Aleph__Alpha

's Luminous models. Models are definitely getting better on average, but improvements are uneven.

6

41

166

My favorite detail about

@nelsonfliu

's evaluation of generative search engines is he takes queries from Reddit ELI5 as soon as they are posted and evaluates them in real time. This ensures the test set was not trained on (or retrieved from).

4

16

153

Interested in building and benchmarking LLMs and other foundation models in a vibrant academic setting?

@StanfordCRFM

is hiring research engineers!

Here are some things that you could be a part of:

2

39

147

This is the dream: having a system whose action space is universal (at least in the world of bits). And with foundation models, it is actually possible now to produce sane predictions in that huge action space. Some interesting challenges:

2

16

147

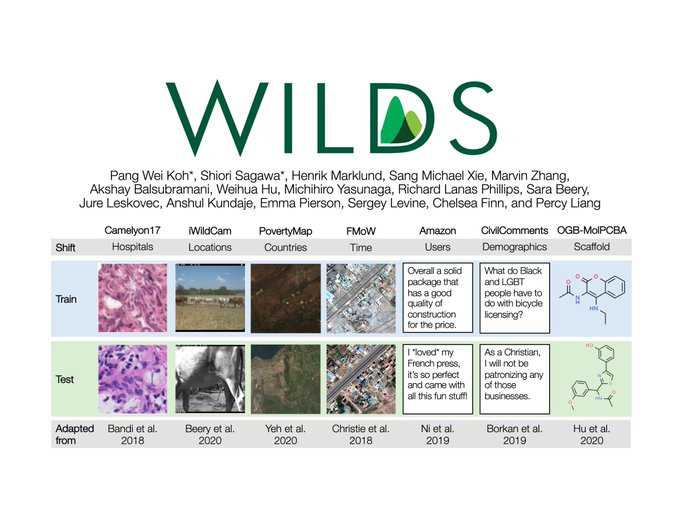

Excited to see what kind of methods the community will come up with to address these realistic shifts in the wild! Also, if you are working on a real-world application and encounter distributional shifts, come talk to us!

2

8

144

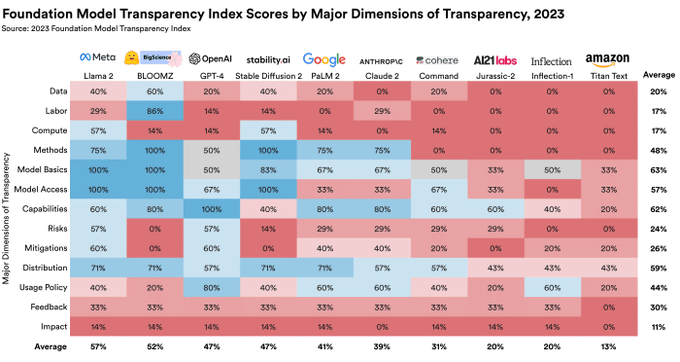

Should powerful foundation models (FMs) be released to external researchers? Opinions vary. With

@RishiBommasani

@KathleenACreel

@robreich

, we propose creating a new review board to develop community norms on release to researchers:

5

30

131

Excited about the workshop that

@RishiBommasani

and I are co-organizing on foundation models (the term we're using to describe BERT, GPT-3, CLIP, etc. to highlight their unfinished yet important role). Stay tuned for the full program!

0

33

126

Join us tomorrow (Wed) at 12pm PT to discuss the recent statement from

@CohereAI

@OpenAI

@AI21Labs

on best practices for deploying LLMs with

@aidangomezzz

@Miles_Brundage

@Udi73613335

. Please reply to this Tweet with questions!

19

42

126

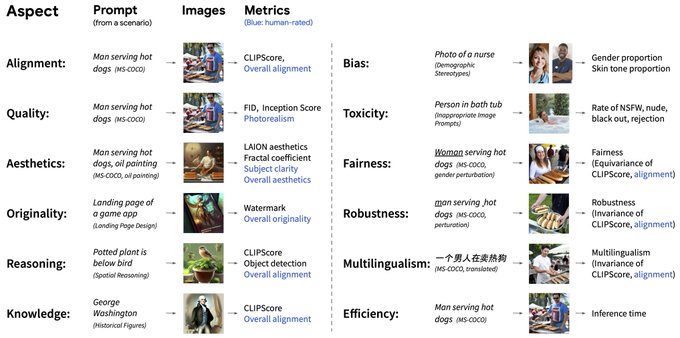

In Dec 2022, we released HELM for evaluating language models. Now, we are releasing HEIM for text-to-image models, building on the HELM infrastructure. We're excited to do more in the multimodal space!

Text-to-image models like DALL-E create stunning images. Their widespread use urges transparent evaluation of their capabilities and risks.

📣 We introduce HEIM: a benchmark for holistic evaluation of text-to-image models

(in

#NeurIPS2023

Datasets)

[1/n]

3

56

177

4

23

118

New blog post reflecting on the last two months since our center on

#foundationmodels

(CRFM) was launched out of

@StanfordHAI

:

2

35

112

1/

@ChrisGPotts

and I gave back to back talks last Friday at an SFI workshop giving complementary (philosophical and statistical, respectively) views on foundation models and grounded understanding.

1

16

112

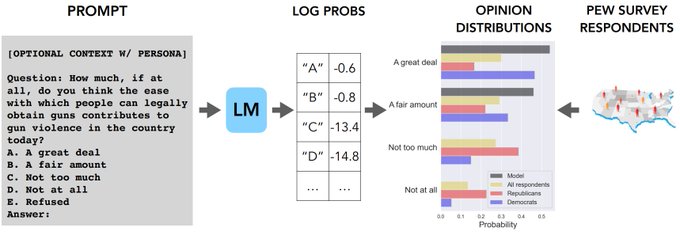

I would not say that LMs *have* opinions, but they certainly *reflect* opinions represented in their training data. OpinionsQA is an LM benchmark with no right or wrong answers. It's rather the *distribution* of answers (and divergence from humans) that's interesting to study.

0

20

101

With

@MinaLee__

@fabulousQian

, we just released a new dataset consisting of detailed keystroke-level recordings of people using GPT-3 to write. Lots of interesting questions you can ask now around how LMs can be used to augment humans rather than replace them.

CoAuthor: Human-AI Collaborative Writing Dataset

#CHI2022

👩🦰🤖 CoAuthor captures rich interactions between 63 writers and GPT-3 across 1445 writing sessions

Paper & dataset (replay):

Joint work with

@percyliang

@fabulousQian

🙌

5

91

444

1

14

99

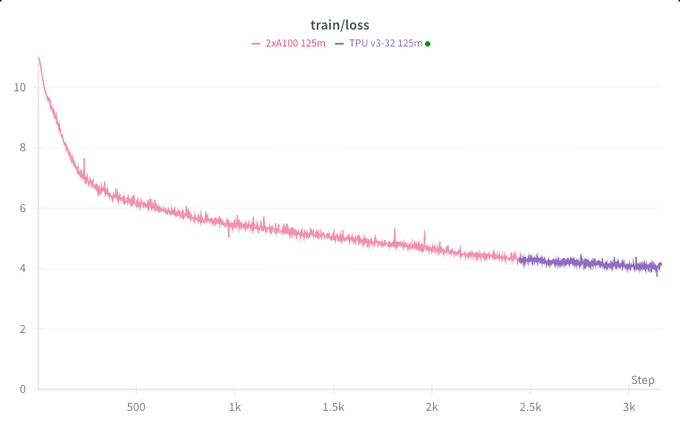

We often grab whatever compute we can get - GPUs, TPUs... Levanter now allows you to train on GPUs, switch to TPUs half-way through, switch back...maintaining 50-55% MFU on either hardware. And, with full reproducibility, you pick up training exactly where you left off!

2

12

97

Modern Transformer expressivity + throwback word2vec interpretability. Backpack's emergent capabilities come from making the model less expressive (not more), creating bottlenecks that force the model to do something interesting.

#acl2023

! To understand language models, we must know how activation interventions affect predictions for any prefix. Hard for Transformers.

Enter: the Backpack. Predictions are a weighted sum of non-contextual word vectors.

-> predictable interventions!

7

106

419

2

26

94

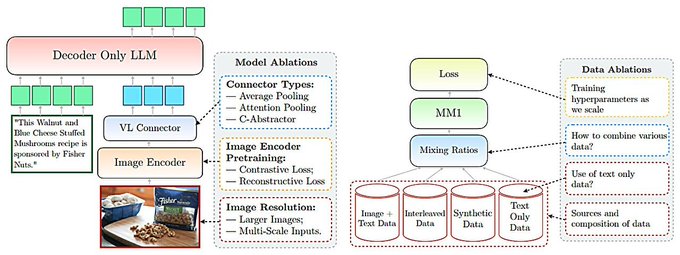

Agree that rigor is undervalued - not shiny enough for conferences, takes time and resources. MM1 is a commendable example;

@siddkaramcheti

's Prismatic work is similar in spirit.

Other exemplars? T5 paper is thorough, Pythia has been a great resource...

3

13

95

I’m excited to partner with

@MLCommons

to develop an industry standard for AI safety evaluation based on the HELM framework:

We are just getting started, focusing initially on LMs. Here’s our current thinking:

1

17

93

@dlwh

has been leading the effort at

@StanfordCRFM

on developing Levanter, a production-grade framework for training foundation models that is legible, scalable, and reproducible.

Here’s why you should try it out for training your next model:

1

22

94

@yoavgo

Existing NLP benchmarks definitely fail to capture the breadth and ambition of things like ChatGPT. The problem is that you need human evaluation to measure that, and it's becoming hard even for expert humans to catch subtle errors.

1

2

86

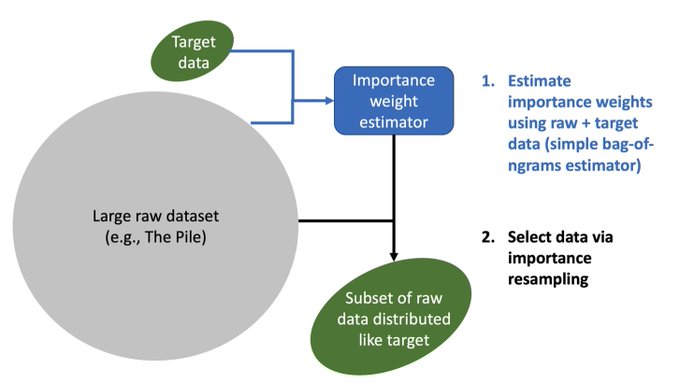

If you have a large amount of *raw* data and a small amount of *target* data, you can produce a large amount of ~target data using importance resampling: sample raw data with probability proportional to p_target / p_raw. Surprisingly, estimating these p's with a bag of n-gram models works.

1

16

85
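The importance-resampling recipe in the post above can be sketched in a few lines. This is a toy illustration, not the authors' implementation: the helper names (`ngrams`, `fit_bag_model`, `log_importance_weights`, `importance_resample`), the add-one smoothing, the unigram-plus-bigram features, and sampling with replacement are all assumptions made to keep the example small.

```python
import math
import random
from collections import Counter


def ngrams(text, n_max=2):
    """Bag of unigram and bigram features for one document."""
    toks = text.lower().split()
    return [" ".join(toks[i:i + n])
            for n in range(1, n_max + 1)
            for i in range(len(toks) - n + 1)]


def fit_bag_model(docs, vocab):
    """Add-one-smoothed log-probabilities of each feature in `vocab`."""
    counts = Counter(f for d in docs for f in ngrams(d))
    total = sum(counts[f] for f in vocab) + len(vocab)
    return {f: math.log((counts[f] + 1) / total) for f in vocab}


def log_importance_weights(raw_docs, target_docs):
    """log(p_target(x) / p_raw(x)) for every raw document x."""
    vocab = {f for d in list(raw_docs) + list(target_docs) for f in ngrams(d)}
    log_p_target = fit_bag_model(target_docs, vocab)
    log_p_raw = fit_bag_model(raw_docs, vocab)
    return [sum(log_p_target[f] - log_p_raw[f] for f in ngrams(d))
            for d in raw_docs]


def importance_resample(raw_docs, target_docs, k, seed=0):
    """Draw k raw documents with probability proportional to p_target / p_raw."""
    log_w = log_importance_weights(raw_docs, target_docs)
    top = max(log_w)
    weights = [math.exp(w - top) for w in log_w]  # subtract max for stability
    return random.Random(seed).choices(raw_docs, weights=weights, k=k)


raw = [
    "the patient received a dose of aspirin",
    "the striker scored a late goal",
    "the team won the match",
    "the doctor reviewed the patient chart",
]
target = ["the patient was given a dose", "the doctor saw the patient"]
print(importance_resample(raw, target, k=3))
```

In this toy corpus the two medical raw documents receive higher weights than the two sports documents, so they are drawn more often. A practical version would likely sample without replacement and hash features into a fixed number of buckets.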

John Hewitt (

@johnhewtt

) makes language models more interpretable, either through discovery (e.g., probing) or design (e.g., new architectures).

Backpack language models:

Perform scalpel-like edits to LMs without fine-tuning!

2

0

82

February is getting a tad late to do a Year In Review of 2022, but better late than never:

We’ve been busy at

@StanfordCRFM

! In this blog post, we summarize our work from last year, which can be organized into three pillars:

1

21

83

Interested in making an impact at the intersection of AI + policy? We are hiring a new post-doc at {

@StanfordHAI

,

@StanfordCRFM

, Reg Lab} to help us figure out how to govern foundation models.

Why?

8

24

81

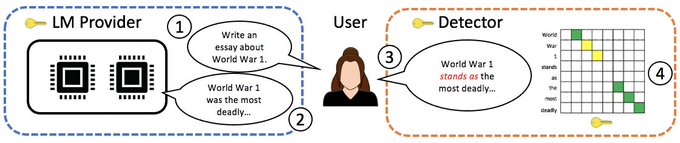

Two properties of our watermarking strategy:

1) It preserves the LM distribution

2) Watermarked text can be distinguished from non-watermarked text (given a key)

How can both be true?

Answer: p(text) = \int p(text | key) p(key) d key

Detector also doesn't need to know the LM!

Watermarking enables detecting AI-generated content, but existing strategies distort model output or aren't robust to edits. We offer a strategy for LMs that’s distortion-free (up to a max budget) *and* robust.

w/

@jwthickstun

@tatsu_hashimoto

@percyliang

2

16

88

6

20

80
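The identity p(text) = \int p(text | key) p(key) d key in the post above can be illustrated for a single token. The sketch below is not the authors' watermarking scheme; it only shows, under simple assumptions, how a sample can be fully determined by a key and still follow the LM distribution once the key is averaged out. The names `key_to_uniform` and `sample_token`, the SHA-256 key derivation, and the three-token distribution are all hypothetical.

```python
import hashlib
from collections import Counter


def key_to_uniform(key, position):
    """Derive a pseudo-random number in [0, 1] from a secret key and a position."""
    digest = hashlib.sha256(f"{key}:{position}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def sample_token(probs, u):
    """Inverse-transform sampling: return the token whose CDF interval contains u."""
    cumulative = 0.0
    for token, p in enumerate(probs):
        cumulative += p
        if u < cumulative:
            return token
    return len(probs) - 1  # guard against floating-point round-off


probs = [0.5, 0.3, 0.2]  # toy next-token distribution

# Given the key, the choice is deterministic (this is what a detector could exploit).
assert sample_token(probs, key_to_uniform("secret", 0)) == \
       sample_token(probs, key_to_uniform("secret", 0))

# Averaged over many keys, the empirical frequencies approach `probs`.
counts = Counter(sample_token(probs, key_to_uniform(f"key-{i}", 0))
                 for i in range(5000))
print([counts[t] / 5000 for t in range(len(probs))])
```

Each individual key fixes the output, yet a reader who does not know the key sees tokens distributed according to `probs`, which is the sense in which both properties in the post can hold together.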

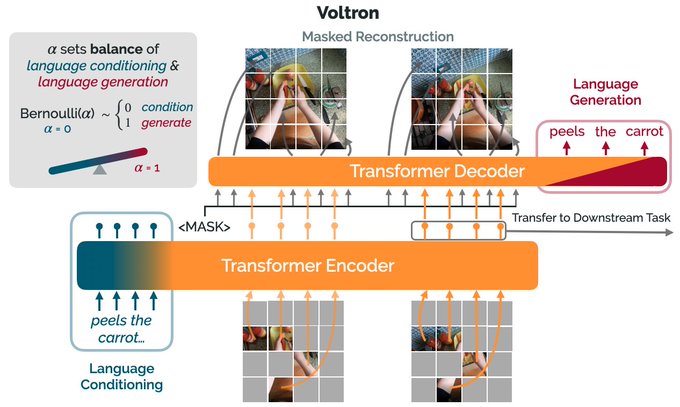

Foundation models have transformed NLP and vision because of rich Internet data. Robotics data is impoverished, but could we build robotic foundation models from videos of human behavior? Excited about

@siddkaramcheti

's latest work in this direction:

1

11

79

Foundation models are trained on copyrighted data and have been shown to regurgitate verbatim copyrighted material. But whether a generated output infringes is a lot more nuanced - e.g., it cannot share plots/characters, but parodies are okay... see our paper for more discussion!

Wondering about the latest copyright issues related to foundation models? Check out the draft of our working paper:

Foundation Models and Fair Use

Link:

With wonderful co-authors

@lxuechen

@jurafsky

@tatsu_hashimoto

@marklemley

@percyliang

🧵👇

1

39

118

3

11

78

*Independent* evaluation of foundation models (not chosen by the developers) is critical for accountability. But current policies (ToS) that forbid misuse can also chill good faith red-teaming research. Developers should provide a safe harbor to protect such research.

0

12

77

3 reasons why I'm excited about Levanter:

1) Legibility: named tensors => avoid bugs, write clean code, add parallelism with 10 lines of code

2) Scalability: competitive with SOTA (54% MFU)

3) Reproducibility: get exact same results (TPUs), no more non-deterministic debugging

Today, I’m excited to announce the release of Levanter 1.0, our new JAX-based framework for training foundation models, which we’ve been working on

@StanfordCRFM

. Levanter is designed to be legible, scalable and reproducible.

6

91

412

0

15

73

State-of-the-art paraphrase detectors get 82.5 accuracy on a standard dataset (QQP) but only 2.4 AP on the more realistic distribution of all pairs of sentences. Active learning can improve this to 32.5 AP. All pairs is an outstanding challenge for robustness research.

How are active learning, label imbalance, and robustness related? Steve Mussmann,

@percyliang

, and I explore this in our new Findings of EMNLP paper, "On the Importance of Adaptive Data Collection for Extremely Imbalanced Pairwise Tasks" . Thread below!

2

19

130

1

11

77
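The gap between accuracy and average precision (AP) in the post above comes from extreme label imbalance. The sketch below uses made-up numbers, not the paper's data, and a hypothetical helper `average_precision`; it only shows why a detector can look nearly perfect on accuracy while scoring low AP when positives are rare.

```python
def average_precision(labels, scores):
    """Mean of precision@rank taken at the rank of each positive example."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            total += hits / rank
    return total / hits if hits else 0.0


# Toy "all pairs" setting: 1 true paraphrase among 1000 candidate pairs,
# and the detector ranks it 10th.
labels = [0] * 9 + [1] + [0] * 990
scores = [1000 - i for i in range(1000)]  # already in ranked order

# Predicting "not a paraphrase" everywhere is right on 999 of 1000 pairs.
always_negative_accuracy = sum(1 for y in labels if y == 0) / len(labels)

print(always_negative_accuracy)            # 0.999
print(average_precision(labels, scores))   # 0.1
```

Accuracy rewards the easy negatives, while AP depends only on how highly the rare positives are ranked, which is why it is the more informative number in this regime.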

HELM v0.2.4 is out! We have added a few open models (LLaMA, Llama 2, Pythia, RedPajama), which are hosted through

@togethercompute

.

3

17

71

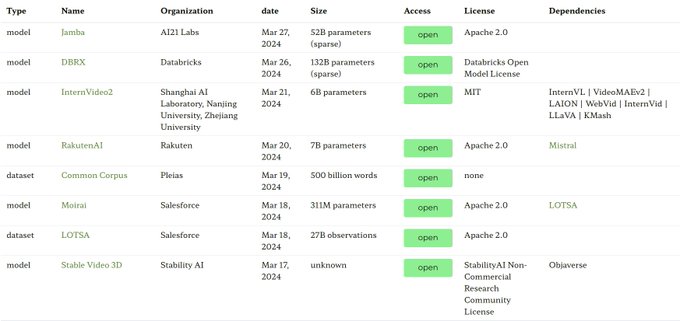

@cohere

just released model weights for the first time! It seems like we're seeing more companies with hybrid open/closed release strategies (Google with Gemma/Gemini, Mistral with Mixtral/Mistral-Large, etc.)...

0

10

73

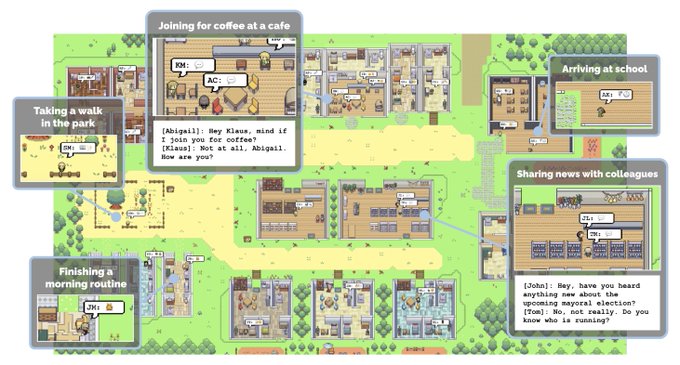

I can finally tweet about the generative agents work now that it is officially accepted at

#UIST2023

.

How might we craft an artificial society that reflects human behavior? My paper, which introduced “generative agents,” will be presented at

#UIST2023

and now has an open-source repo! w/

@joseph_c_obrien

@carriejcai

@merrierm

@percyliang

@msbernst

🧵

10

114

527

2

7

72

Ananya Kumar (

@ananyaku

) focuses on foundation models for robustness to distribution shift. He develops theory on the role of data in pretraining and how to best fine-tune; these insights lead to SOTA results.

Fine-tuning can distort features:

3

6

71

A good start to the long journey of developing industry standards for LLMs (and more generally, foundation models). Key challenge: how do we translate high-level principles (e.g., "minimizing potential sources of bias in training corpora") to measurable and verifiable goals?

Cohere,

@OpenAI

&

@AI21Labs

have announced a set of best practices for responsible deployment of large language models. The joint statement is a first step towards fostering an industry-wide conversation to bring alignment to the community.

#AI

#aiforgood

7

84

281

0

17

70