Mark Saroufim

@marksaroufim

Followers: 8,862

Following: 653

Media: 194

Statuses: 1,640

@pytorch dev broadly interested in performance

github.com/msaroufim

Joined April 2009


This is a good question: it gets to the root of the tradeoff between performance and flexibility, so how do PyTorch folks think about it? Long answer:

So if we're in a world where a single base model can be fine-tuned over all tasks and we're fairly certain that this base model…


GPU shortages lmao

1. Open Google Colab

2. `!apt-get install tmate`

3. `!ssh-keygen`

4. `!tmate`

5. SSH into the URL for a free CPU/GPU/TPU machine

6. Invite your friends to the same machine and vibe together

@theshawwn

what's the coolest thing I can do here?


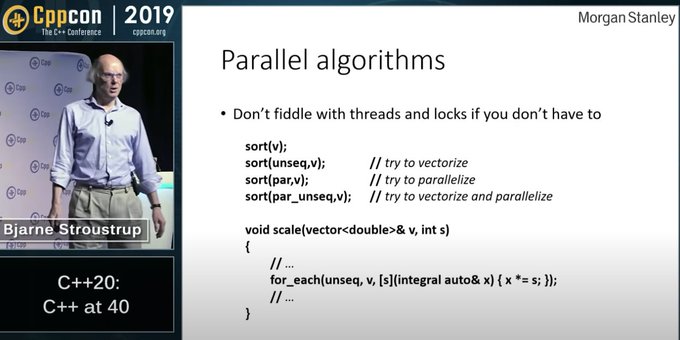

I've often heard "I wish PyTorch had more dev internals documentation" when in reality the problem is that we have too much. PyTorch is a deep project that touches on pretty much every area of computer science, so here are my favorite references:

Intro: @tarantulae for an overview of…


It's only 2021 and @huggingface ships faster than any ML company, #EleutherAI is a Discord group rivaling the best research labs, @ykilcher is a conference, and the best teachers code live


@zacharylipton

The problem with R is that it limits you to 5-figure salaries, but Python supports 6-figure salaries


Here's to the crazy ones: wires dangling all over their apartments, 2MW fire-hazard PSUs, the 2-bit quantizers, the anons, the weight mergers, the dataset creators, the Discord rebels

While some may see them as the GPU-poor ones, we see genius, because the people who are crazy…


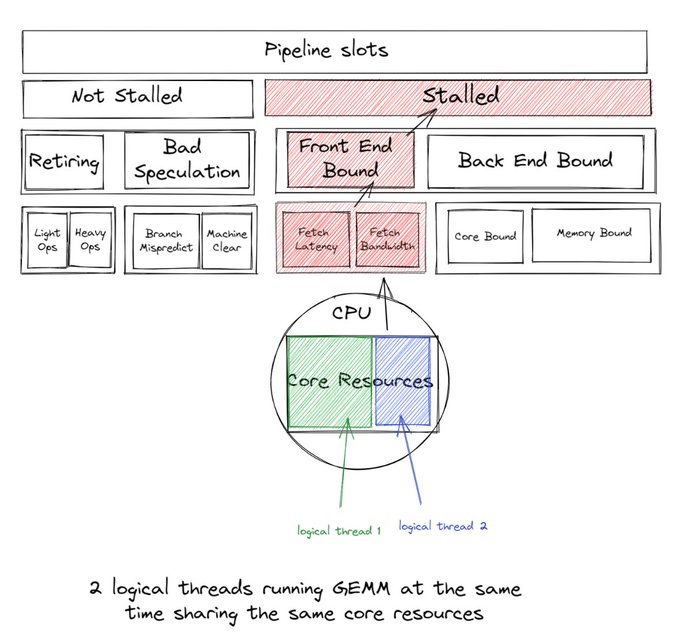

In this blog post, @min_jean_cho and I explain from first principles, with looots of profiles and pictures, how to run @pytorch fast on @intel CPUs, and we apply our lessons to TorchServe.

TL;DR: Avoid bottlenecked GEMMs and non-uniform memory access (NUMA) via core pinning.
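A minimal sketch of core pinning from Python, assuming a Linux machine (`os.sched_setaffinity` is Linux-only). This is not the blog's code, and `pin_to_cores` is a hypothetical helper: it restricts the process to a chosen set of cores and sizes the OpenMP thread pool to match, which only takes effect if done before `torch` is imported. Pinning alone does not bind memory allocations to a NUMA node; launching under numactl with `--membind` covers that part.

```python
import os

def pin_to_cores(cores):
    """Restrict this process to `cores` and size the OpenMP pool to match (Linux only).

    Hypothetical helper: call it before importing torch so OMP_NUM_THREADS is respected.
    """
    allowed = os.sched_getaffinity(0)        # cores this process may currently use
    requested = set(cores) & allowed         # ignore cores we are not allowed to use
    if not requested:
        raise ValueError(f"none of {sorted(cores)} are in {sorted(allowed)}")
    os.sched_setaffinity(0, requested)       # pid 0 = the current process
    # One OpenMP worker per pinned core, so the pinned cores are not oversubscribed
    os.environ["OMP_NUM_THREADS"] = str(len(requested))
    return sorted(os.sched_getaffinity(0))

if __name__ == "__main__":
    # Example: keep the first half of the currently allowed cores
    allowed = sorted(os.sched_getaffinity(0))
    print(pin_to_cores(allowed[: max(1, len(allowed) // 2)]))
```

The design choice is to match thread count to pinned cores: more threads than cores would bring back contention, and fewer would leave pinned cores idle.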


Can someone at @OpenAI confirm whether DALL·E 2 can generate images viewed from a specific angle?

Because if it can, we could use a fast NeRF implementation to use DALL·E 2 to generate arbitrary 3D assets for games


Watching the @huggingface Infinity talks on how they got 1ms BERT GPU latency and 3ms CPU latency

They estimate that it takes 2-3 engineers about 2 months to get below 20ms latency, which sounds about right
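Latency figures like these are usually percentiles over many calls after a warmup, not a single timing. A minimal sketch of such a measurement in plain Python, assuming a synchronous callable; `measure_latency` is a hypothetical helper, not Infinity's benchmark harness. For GPU work, the callable would also need to synchronize the device before returning, otherwise the timer only captures kernel launch overhead.

```python
import statistics
import time

def measure_latency(fn, warmup=10, iters=100):
    """Call `fn` repeatedly and report latency statistics in milliseconds."""
    for _ in range(warmup):                  # let caches, JITs and allocators settle
        fn()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1e3)
    samples.sort()

    def pct(p):                              # simple index-based percentile on sorted samples
        return samples[min(len(samples) - 1, int(p / 100 * len(samples)))]

    return {"p50_ms": pct(50), "p99_ms": pct(99), "mean_ms": statistics.fmean(samples)}

if __name__ == "__main__":
    print(measure_latency(lambda: sum(range(10_000))))
```

Reporting p99 alongside p50 matters for serving, since tail latency is what users notice under load.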


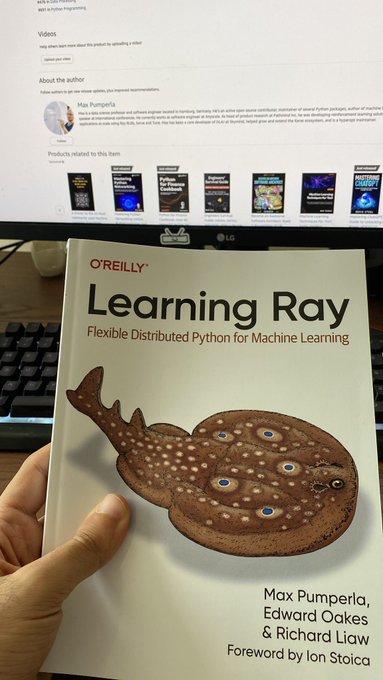

It’s out! The best introduction to distributed systems applied to ML I’ve read, all in easy-to-understand Python, by my favorite textbook author @maxpumperla


Got a sneak peek: the best Triton tutorial I've read so far. I grokked the differences between the Triton and CUDA programming models. It's gentler than the official Triton docs and goes into advanced topics like swizzling by the end.

Tomorrow, Saturday, April 13, at noon PST

On Saturday I'll hold this week's lecture in the CUDA MODE group (cc @marksaroufim @neurosp1ke)

"A Practitioner's Guide to Triton"

Join via Discord:

I'll cover: why & when to use, programming model, real examples, debugging, benchmarking, tuning
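Roughly, a CUDA kernel is written from the point of view of one thread handling one element, while a Triton kernel is written per program instance that owns a whole block of elements, with a mask guarding the ragged tail. The sketch below emulates that block-level model in plain Python so it runs anywhere; it is not Triton itself. In real Triton, `pid`, `offsets` and the masked loads would come from `tl.program_id(0)`, `tl.arange(0, BLOCK_SIZE)` and `tl.load(ptr + offsets, mask=mask)`, and the grid size from `triton.cdiv`.

```python
def add_kernel(x, y, out, n, pid, block_size):
    """One 'program instance': handles the block of elements starting at pid * block_size."""
    offsets = [pid * block_size + i for i in range(block_size)]
    mask = [o < n for o in offsets]            # guards the last, partially filled block
    for o, valid in zip(offsets, mask):
        if valid:
            out[o] = x[o] + y[o]

def launch_add(x, y, block_size=4):
    n = len(x)
    out = [0] * n
    grid = (n + block_size - 1) // block_size  # ceil division, like triton.cdiv
    for pid in range(grid):                    # on a GPU these instances run in parallel
        add_kernel(x, y, out, n, pid, block_size)
    return out

if __name__ == "__main__":
    print(launch_add([1, 2, 3, 4, 5], [10, 20, 30, 40, 50], block_size=2))  # [11, 22, 33, 44, 55]
```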


Super slick example of how to invert a neural network using FX, by @jamesr66a, here

So now, assuming I can find a nice example of an invertible attention network and train a neural renderer, I can get photogrammetry for free 🤯
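The idea behind graph-level inversion is that once a model is captured as an ordered list of ops (which is what an FX graph gives you), inverting it is a graph rewrite: walk the ops in reverse and swap each one for its inverse. The toy below shows that rewrite on a hypothetical mini-IR of scalar ops in plain Python; it is an analogy, not the torch.fx API or @jamesr66a's example, and it only works because every op here is invertible.

```python
import math

# Hypothetical mini-IR: a graph is an ordered list of (op_name, constant) nodes.
OPS = {
    "add": lambda x, c: x + c,
    "sub": lambda x, c: x - c,
    "mul": lambda x, c: x * c,
    "div": lambda x, c: x / c,
    "exp": lambda x, c: math.exp(x),
    "log": lambda x, c: math.log(x),
}
INVERSE = {"add": "sub", "sub": "add", "mul": "div", "div": "mul", "exp": "log", "log": "exp"}

def run(graph, x):
    """Interpret the graph node by node."""
    for op, const in graph:
        x = OPS[op](x, const)
    return x

def invert(graph):
    """Graph rewrite: reverse the node order and replace each op with its inverse."""
    return [(INVERSE[op], const) for op, const in reversed(graph)]

if __name__ == "__main__":
    model = [("mul", 3.0), ("add", 2.0), ("exp", None)]
    y = run(model, 0.5)
    print(invert(model))
    print(run(invert(model), y))  # ≈ 0.5, up to float rounding
```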


This book by @mli65 et al. is the best performance tuning and benchmarking guide I've read for deep learning. It uses TVM, but the lessons apply to any framework.


I haven't seen many people complaining that torch.compile() is crashing, and there's a reason for that!

The minifier by @cHHillee and @anijain2305 is the silent star of 2.0. I've used it to turn crashing 1000+ line models into 10 lines. I view it as a revolution in customer support.
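A minifier is a form of test-case reduction: keep deleting pieces of the failing program as long as it still fails. The sketch below is a generic greedy reducer over lines of code, in the spirit of delta debugging; it is not the torch.compile minifier's actual algorithm, and `still_fails` is a hypothetical callback you would supply. For the real tool, the PyTorch troubleshooting docs describe enabling it through the `TORCHDYNAMO_REPRO_AFTER` environment variable.

```python
def minimize(lines, still_fails):
    """Greedily delete chunks of `lines` while `still_fails(candidate)` stays True.

    `still_fails` is hypothetical: e.g. write the candidate program to disk, run it,
    and return True if the original crash reproduces.
    """
    if not still_fails(lines):
        raise ValueError("the starting program does not reproduce the failure")
    chunk = max(1, len(lines) // 2)
    while True:
        i = 0
        while i < len(lines):
            candidate = lines[:i] + lines[i + chunk:]
            if candidate and still_fails(candidate):
                lines = candidate  # smaller repro found; retry at the same position
            else:
                i += chunk         # this chunk is needed (or deleting it leaves nothing)
        if chunk == 1:
            return lines
        chunk //= 2                # try finer-grained deletions

if __name__ == "__main__":
    program = [f"op{i}" for i in range(1000)]

    def crashes(candidate):        # toy stand-in for "the compiler still crashes"
        return "op3" in candidate and "op777" in candidate

    print(minimize(program, crashes))  # ['op3', 'op777']
```

Starting with large chunks lets most irrelevant code disappear in a few checks, which matters when each check means recompiling a model.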


@karpathy

NVIDIA docs have a nice table for this

A good rule of thumb is 128 / dtype, but it seems like that heuristic changed slightly for the A100
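Reading "128 / dtype" as 128 bits divided by the element width in bits (an assumption, not stated in the tweet), the rule gives multiples of 8 for 16-bit types, 4 for 32-bit floats and 16 for INT8. A minimal sketch that pads a dimension up to that multiple; `pad_dim` is a hypothetical helper, and it does not capture the A100 adjustment mentioned in the tweet, for which NVIDIA's table is the reference.

```python
def pad_dim(dim, dtype_bits):
    """Round `dim` up to the next multiple of 128 // dtype_bits elements."""
    multiple = 128 // dtype_bits           # e.g. 16-bit types -> multiples of 8
    return -(-dim // multiple) * multiple  # ceil(dim / multiple) * multiple

if __name__ == "__main__":
    print(pad_dim(50257, 16))  # 50264
```

Padding wastes a few rows of compute but keeps matmul shapes on the fast tensor-core path.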


I don’t think I’ve ever seen a feature this widely asked for. Take a look at one of the many GitHub issues about this. Congrats to the team for shipping!


Excited to have participated in the torchdata 0.4 release

The focus was support for remote filesystems like @awscloud S3, @huggingface datasets, and fsspec, plus easy loading into the new prototype DataLoaderV2, optimized for streaming. It even comes with @TensorFlow TFRecord support.


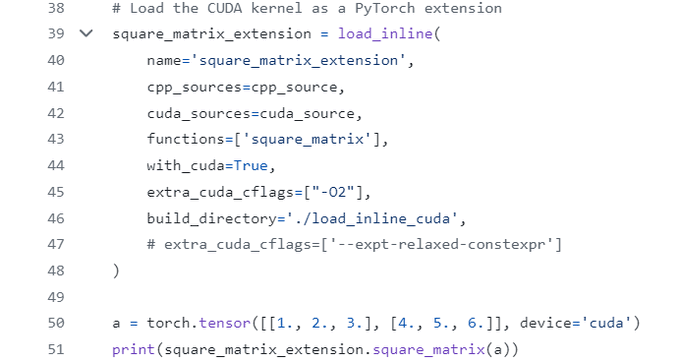

On the subject of codegen, I also wanna plug `from torch.utils.cpp_extension import load_inline`: pass it a CUDA kernel as a string and it'll generate the right build scripts for you.

Very neat hack from @marksaroufim in the first CUDA_MODE lecture: use torch.compile to get Triton code as a starting point for a custom kernel!


Alright, let's see how this works on stream right now. Will try to run PyTorch in a browser or maybe write a tiny Jupyter extension idk, let's see what happens.


Really enjoyed reading this thread by @wightmanr from 2019 on his tips and tricks for removing data loading bottlenecks

Feels like it aged perfectly. We may have new tools like FFCV and DALI, but overall nothing major has changed.


@typedfemale

There was a profile of this on

It used to be worse! We introduced lazy imports to make it somewhat manageable.

The remaining issue is mostly registrations to the dispatcher; if enough people complain loudly, we might fix it!
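Lazy imports defer the cost of importing a module until something from it is actually used. A minimal, stdlib-only sketch of the idea using a proxy module; `LazyModule` is hypothetical and is not how PyTorch implements it (the standard library also ships `importlib.util.LazyLoader`, and PEP 562 module-level `__getattr__` is another common route).

```python
import importlib
import types

class LazyModule(types.ModuleType):
    """Stands in for module `name` and imports it only on first attribute access."""

    def __init__(self, name):
        super().__init__(name)
        self._module = None  # the real module, filled in on first use

    def __getattr__(self, attr):
        # Only called when normal lookup fails, i.e. for the wrapped module's attributes
        if self._module is None:
            self._module = importlib.import_module(self.__name__)
        return getattr(self._module, attr)

if __name__ == "__main__":
    lazy_json = LazyModule("json")    # no import work happens here
    print(lazy_json._module is None)  # True
    print(lazy_json.dumps({"a": 1}))  # first access triggers the import: {"a": 1}
```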


FX has been the most fun PyTorch feature I’ve ever worked with. I'm so bad at C++, but I can now do things like auto distillation, model runtime export, feature extraction, auto shape inference, and layer splitting. Model is function is data 🌀. James and team make me feel smart.


@tszzl

My undergrad engineering school weighted the SAT equally with my bad 10th- and 11th-grade grades; 12th-grade grades didn't matter. I got myself into detention for a couple of weeks to study for the SAT, did great, and got into my school of choice.


@andrew_n_carr

I've seen lots of people quit Amazon/Meta/Google/Microsoft/Stripe to go work at Amazon/Meta/Google/Microsoft/Stripe


This is what happens when not wanting to learn Kubernetes becomes your identity

But seriously, I think we may have built one of the easiest-to-use, 100% open-source cloud launchers for distributed ML training

Data scientist != infra engineer.

Thanks @marksaroufim for joining our Ray Meetup last week and sharing how to make it easier to train large-scale #ML jobs in #opensource.

If you missed it, you can watch the recording here:


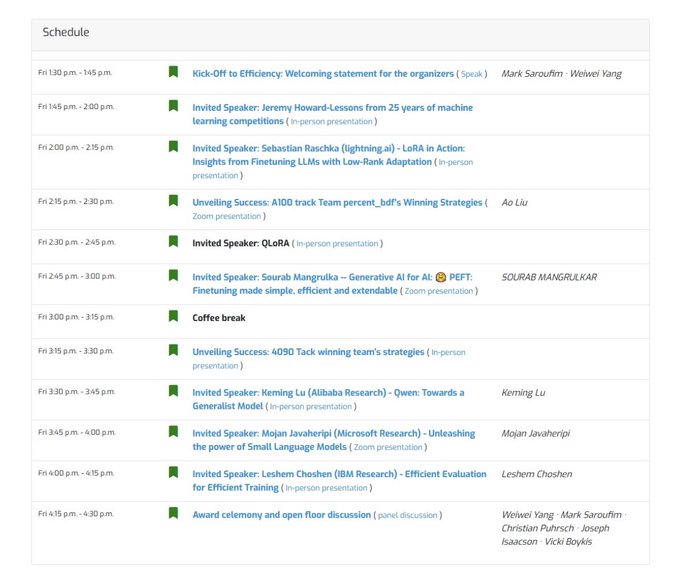

Our goal with this competition is to publicize techniques that make fine-tuning reproducible and affordable

Starting with a base model, you can fine-tune it however you like, as long as it takes less than 24 hours on either a 4090 or an A100


@jacobmbuckman

My takeaway from the Bitter Lesson is that environments are a more interesting research direction for RL than algorithms


This blog post by Morgan at @huggingface is fantastic. There's no Twitter account for me to thank directly, so could you kindly relay a question?

numactl is the best tool I'd never heard of. I'm curious how you discovered it and what motivated you to try it?
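numactl binds a process's CPUs and memory to specific NUMA nodes, e.g. `numactl --cpunodebind=0 --membind=0 python infer.py`, and `numactl --hardware` prints the node layout. Below is a stdlib-only sketch of reading the same layout on Linux from sysfs, which helps decide which node to bind to. The helper names and `infer.py` are hypothetical, and the parser handles the plain `0-3,8-11` list format, not every kernel cpulist variant.

```python
from pathlib import Path

def parse_cpulist(text):
    """Parse a Linux cpulist such as '0-3,8-11' into a sorted list of CPU ids."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue                      # empty list, e.g. a memory-only node
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return sorted(cpus)

def numa_nodes(root="/sys/devices/system/node"):
    """Map NUMA node id -> CPU ids from sysfs; returns {} where sysfs is unavailable."""
    nodes = {}
    for node_dir in Path(root).glob("node[0-9]*"):
        cpulist = node_dir / "cpulist"
        if cpulist.exists():
            nodes[int(node_dir.name[len("node"):])] = parse_cpulist(cpulist.read_text())
    return dict(sorted(nodes.items()))

if __name__ == "__main__":
    for node, cpus in numa_nodes().items():
        print(f"node {node}: {len(cpus)} CPUs -> {cpus}")
```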


@nntaleb

@EconTalker

Likewise, real estate, cash, stock, bonds, jobs, Twitter accounts, and blogs are not assets: they all depend on the maintenance of governments or institutions.


@AndrewLBeam

@jamesr66a

I derived most of my gradients incorrectly, but the networks still converged reasonably well. Kids these days treat their gradients like gospel.


Pretty happy to have played a teeny tiny role in getting this out. It's a uniquely well-designed competition, and if you've ever had strong opinions about which optimizer is best, this is probably the definitive way to prove your beliefs

Today the @MLCommons AlgoPerf working group, including researchers from Meta, are introducing a standardized & competitive benchmark designed to provide objective comparisons & quantify progress in the development of new training algorithms.

Details ➡️
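AlgoPerf compares training algorithms by how long they take to reach a fixed validation target, rather than by final loss alone. A toy sketch of that time-to-target idea, assuming a 2-D ill-conditioned quadratic as the "workload" and counting optimizer steps instead of wall-clock time; the function names, learning rate and target are arbitrary illustrative choices, not AlgoPerf's rules or workloads.

```python
def loss_and_grad(x):
    """Ill-conditioned quadratic f(x) = 0.5 * (x0^2 + 100 * x1^2) and its gradient."""
    curv = (1.0, 100.0)
    loss = 0.5 * sum(c * xi * xi for c, xi in zip(curv, x))
    grad = [c * xi for c, xi in zip(curv, x)]
    return loss, grad

def steps_to_target(lr, momentum, target=1e-3, max_steps=10_000):
    """Heavy-ball SGD (momentum=0 gives plain SGD); returns steps until loss <= target."""
    x = [1.0, 1.0]
    velocity = [0.0, 0.0]
    for step in range(1, max_steps + 1):
        _, grad = loss_and_grad(x)
        velocity = [momentum * v + g for v, g in zip(velocity, grad)]
        x = [xi - lr * v for xi, v in zip(x, velocity)]
        loss, _ = loss_and_grad(x)
        if loss <= target:
            return step
    return None  # target never reached within the step budget

if __name__ == "__main__":
    print("sgd:     ", steps_to_target(lr=0.01, momentum=0.0))
    print("momentum:", steps_to_target(lr=0.01, momentum=0.9))
```

Fixing the target and measuring the cost to reach it means a faster optimizer wins only if it actually gets there sooner, which is harder to game than comparing loss curves at an arbitrary step.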
