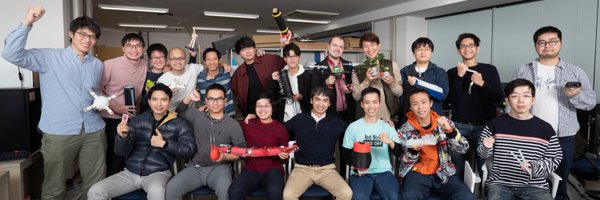

Ho Lab

@lab_ho

Followers: 593

Following: 1K

Media: 108

Statuses: 468

Laboratory for the development of novel soft robotic mechanisms at JAIST (Japan Advanced Institute of Science and Technology)

Joined January 2021

📢 [Paper published] Our "vision-based tactile and proximity sensing" technology for a robot's whole body has been accepted to IEEE Transactions on Robotics. #Robotics #TactileSensing #EmbodiedAI #IEEE_TRO Details 👉 Paper: https://t.co/krBMxqnEQ5 Project page: https://t.co/hy2nQxPeTB

Assistant Professor Nguyen of our lab received the RAS/Japan Joint Chapter Young Award at IROS 2025! Related RA-L paper: https://t.co/3OYORwH1xM

📢 We are presenting 2 papers at @IROS2025 this week: 1) Multimodal sensing for soft fingers (RA-L paper) 2) Embodied intelligence for vibration-based mobile soft robots (T-RO paper) Join us at the Wednesday afternoon sessions!

🚨(Pls RT) Due to many requests, RoboSoft 2026 paper deadline has been extended to ➡️ October 30th, 2025. ⚠️ This is the final deadline—no more extensions! Details 👉: https://t.co/GfuXZasYO3

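🚀 [Kickoff] The "Cross-X" project, selected under the CREST research area on real-environment intelligent systems, started today. Beyond the research itself, we also have real-world deployment and startups in view. We welcome collaboration with companies, and we are recruiting graduate students who want to take on AI robotics research. Feel free to contact us.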

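The five projects selected in the first round of the Ogata CREST have been announced. https://t.co/R3yV5haaC2 With respect to the current trend of "end-to-end, data-driven robot motion AI (foundation models)", we were able to carefully select themes covering its direct application, functional extension, fusion with new perspectives, and more. We look forward to what comes next.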

(please share) 📢 Only 3 weeks left until the RoboSoft 2026 submission deadline (October 15th)! Don’t miss the chance to present in historic Kanazawa, Japan. Join us 👉 https://t.co/GfuXZasYO3

Exciting work by my collaborator Prof. @loiannog: NN policy-based end-to-end control for drones that adapts across drone designs, even a "soft" drone.

Check out RAPTOR, our new tiny foundation policy for quadrotors! Adapts within milliseconds! Simulator: https://t.co/npQ2l7KTvu Video: https://t.co/LyWKEZjglj Code: https://t.co/wYeu1FwzvT Paper: https://t.co/nmJFbjMox1

@jonas_eschmann @Berkeley_EECS @berkeley_ai @TIIuae #ARPL

Visited @LerrelPinto lab. So many cool projects combining hardware and learning, really inspiring!

I finally uploaded the overview of my past 10y of research "The #IntrinsicMotivation of #ReinforcementLearning and #ImitationLearning for sequential tasks" https://t.co/H7Z5J4VluK

#ActiveImitationLearning #CompositionalLearning #ActivityRecognition #ADL #RobotLearning #robotCoach

New 3D-printed sensor provides accurate airspeed estimation at unprecedented angles of attack and speed range, paving the way for autonomous acrobatic flight of winged drones. Open-access preprint https://t.co/0RHAQ9EDXL

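We are delighted by the many reactions to our "ROSE gripper × watermelon" experiment ✨ Some of you asked "How does it work?", so please check out the video below 📷 There is also a paper, but we hope you will first get a feel for how the ROSE gripper works from the video.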

☀️ Summer in Japan means watermelon time! 🍉 Our bROSE gripper can now handle it with ease, here lifting a 4.2 kg (9 lbs) watermelon.

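I have decided to make my past KAKENHI briefing materials public. There are no special secrets in them; I think it is all common sense. Slides from the URA-led KAKENHI seminar "Pitfalls everyone falls into when writing KAKENHI applications, as seen through research support"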

Developing more capable physical systems is critical to offload low-level interactions, thereby allowing AI to devote its resources to higher-order reasoning.

Today's Humanoid Robots Look Remarkable, but There's a Design Flaw Holding Them Back. Beyond brains, robots desperately need smarter bodies https://t.co/io9Qlp1NG8

Open-access paper in @natbme by Prof. Eijiro Miyako and colleagues on the anticancer effects of tumour-resident bacteria: Tumour-resident oncolytic bacteria trigger potent anticancer effects through selective intratumoural thrombosis and necrosis https://t.co/UHn3NjvXZB #OA #論文 #オープンアクセス #JAIST @UNIV_TSUKUBA_JP @daiichisankyo_h

nature.com

Nature Biomedical Engineering - A tumour-derived consortium of Proteus mirabilis and Rhodopseudomonas palustris eradicated diverse tumours in immunocompromised and immunocompetent animal models via...

Two types of bacteria destroy cancer: JAIST and collaborators confirm the effect in animal experiments https://t.co/jrmydCj4nO

Happy to share our latest collaborative work with Prof. @loiannog on prompt detection of, and quick recovery from, collisions with an encoder-integrated #Tombo propeller drone. #EmbodiedAI_for_Drone 👉 Paper: https://t.co/a1H6O4Mh3o

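🇮🇹 Our joint research with the University of Genoa has been published in RA-L! Toward human activity recognition, we proposed a primary distributed multimodal sensing method that offloads sensing functions to the interface (both the robot and the user). #EmbodiedAI #HRI https://t.co/KIWsahGLBE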

ieeexplore.ieee.org

Human activity recognition (HAR) is fundamental in human-robot collaboration (HRC), enabling robots to respond to and dynamically adapt to human intentions. This paper introduces a HAR system...

Our collaborative work with colleagues from Univ. of Genoa is now published in RA-L! We propose a distributed multi-modal sensing method for human activity recognition, offloading sensing tasks to an interface #EmbodiedAI in #HRI. 👉 https://t.co/KIWsahGLBE
