Javier Sagastuy

@jvrsgsty

Followers: 172 · Following: 209 · Media: 1 · Statuses: 41

R&D Engineer @opalcamera Prev. PhD @ICMEStanford @NeuroAILab Computational Neuroscience @StanfordBrain

San Francisco, CA

Joined March 2019

@cogphilosopher presents a free lunch for predictivity and specificity in neural comparisons: By dealing with activations in a neural network appropriately wrt their biological interpretation, we can find measures that are both predictive of, and identified by, neural data 🔥

Bit of an update: I recently joined @WisprAI to build wearable neural interfaces 🧠🚀 !

Thrilled to welcome @jvrsgsty to Team Wispr! Stanford ICME Ph.D. with 8+ years in computational modeling & engineering. Formerly at Stanford NeuroAI Lab, Nvidia, Google. Off-duty, he's a ski racer. 🎉⛷️ #generativeai #neurotech #wispr

Come check this out tomorrow! Really deep work (pun partially intended 😀) on how to compare deep neural networks to brain data. In particular, what should the transform class be from artificial units to biological ones? Can we use models & data-driven methods to inform this?

At @CosyneMeeting, our poster at 3-022 will discuss how to measure similarity between DNN model activations and biological neural responses. Please stop by if you're interested! w/ @jvrsgsty* (* equal co-authors) @aran_nayebi @luosha @dyamins #cosyne2023

🔴⭕QQL Contest⭕🔴
⭕️Win a QQL Mint Pass⭕️
How to enter:
1) Create QQLs on https://t.co/A4Lm5zWYJy
2) Choose 1 to submit
3) QT this tweet with:
- #QQLcontest
- Your QQL URL
- An image of your QQL
1 entry per person. Cutoff 9/25 Noon CT. Winners announced 9/27. FAQ on site ex:

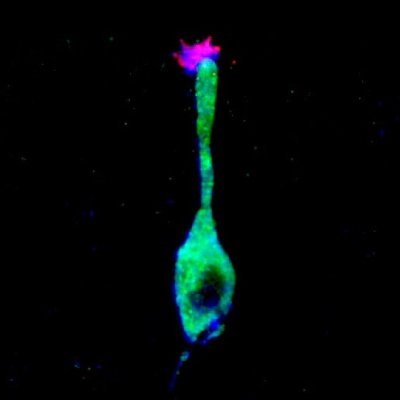

1/3 We release our ImageNet pretrained Recurrent CNN models, which currently best explain neural dynamics & temporally varying visual behaviors. Ready to be used with 1 line of code! Models: https://t.co/ye3rCh90cv Paper (to appear in Neural Computation):

biorxiv.org

The computational role of the abundant feedback connections in the ventral visual stream (VVS) is unclear, enabling humans and non-human primates to effortlessly recognize objects across a multitude...

Glad to share a preprint of our work on "Goal-Driven Recurrent Neural Network Models of the Ventral Visual Stream"! w/ the "ConvRNN Crew" @jvrsgsty @recursus @KohitijKar @qbilius @SuryaGanguli @SussilloDavid @JamesJDiCarlo @dyamins

#tweetprint below👇

I made an online tool for taking structured, searchable, and shareable notes on academic papers: https://t.co/DfI1xB7eTC After 2+ years of using it myself, I'm excited to share it more broadly! Details in thread:

Come take a look at our new preprint on the limiting dynamics of SGD!

Q. Do SGD trained networks converge in parameter space? A. No, they anomalously diffuse on the level sets of a modified loss! co-led with @jvrsgsty & @leg2015 @eshedmargalit @Hidenori8Tanaka @SuryaGanguli @dyamins

https://t.co/UVZOeeN3Nm 1/10

Why would giant concrete columns be invisible? Likely because the vision algorithms are trying to detect objects via categorization, and these don’t resemble a known category. A perfect example of when “visual intuitive physics” is needed, and I doubt oodles more data will help.

1/ I'm often confronted with skepticism that neural network models of the brain are intelligible, or that they're even proper models at all, considering how "different they look" from real brains.

Just wrapped up my new course on Machine Learning Methods for Neural Data Analysis! We ended with a virtual poster session in GatherTown, and we even took this class photo for posterity :) Huge thanks to this amazing group of students!

Really happy to see this preprint from the first project I was involved in @NeuroAILab finally out!

This was one of the best papers I've read recently. There has been a lot of progress on understanding deep learning by uncovering underlying symmetries under certain assumptions. This paper assembles everything in one place, and does so for learning algorithms used in practice!

Q. Can we solve learning dynamics of modern deep learning models trained on large datasets? A. Yes, by combining symmetry and modified equation analysis! co-led with @KuninDaniel (now on twitter) & @jvrsgsty @SuryaGanguli @dyamins Neural Mechanics https://t.co/S8BqePyIxC 1/8

This is easily among the best papers I've come across this year. The authors consolidate knowledge of training dynamics and present excellent insights derived from the symmetries of model parameters. A must-read!

Really happy to have collaborated on this piece. Come take a look! 👀

Also, for people not registered for #icml2020, you can access our preprint and a video of our presentation from this past March at #Neuromatch 1.0 here:

ai.stanford.edu

All the great work from the Stanford AI Lab accepted at ICML 2020, all in one place.