Ekdeep Singh

@EkdeepL

Followers: 2K · Following: 3K · Media: 126 · Statuses: 553

Member of Technical Staff @GoodfireAI; Previously: Postdoc / PhD at Center for Brain Science, Harvard and University of Michigan

San Francisco, CA

Joined December 2017

🚨 New paper! We know models learn distinct in-context learning strategies, but *why*? Why generalize instead of memorizing to lower loss? And why is generalization transient? Our work explains this & *predicts Transformer behavior throughout training* without its weights! 🧵 1/

9

64

347

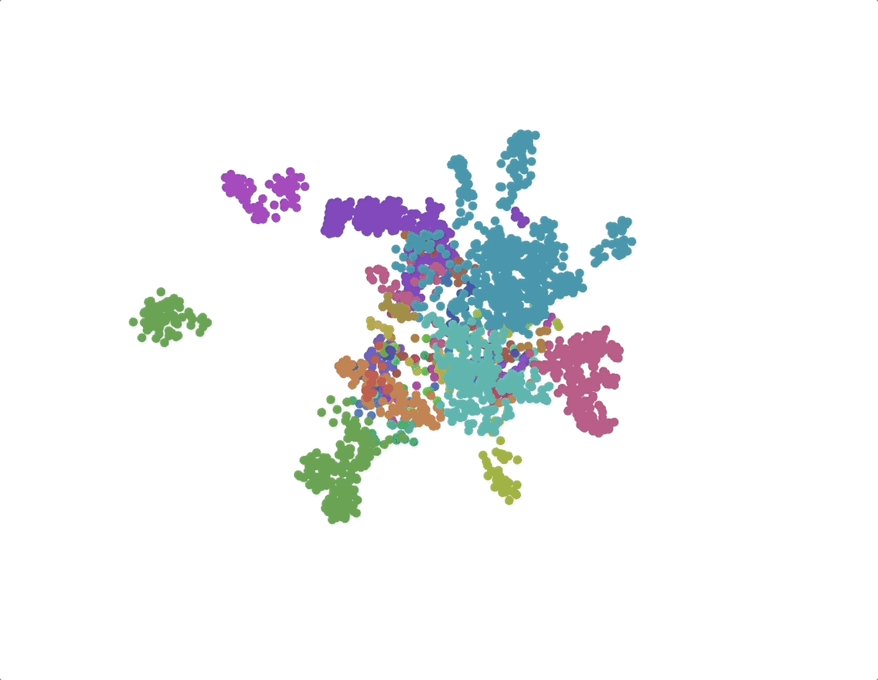

A beauty of a result <3. It adds to the narrative that models learning the data distribution will represent its factors as meaningful representations: in this case, a hierarchical space with a nicely endowed metric!

Arc Institute trained their foundation model Evo 2 on DNA from all domains of life. What has it learned about the natural world? Our new research finds that it represents the tree of life, spanning thousands of species, as a curved manifold in its neuronal activations. (1/8)

RT @GoodfireAI: Adversarial examples - a vulnerability of every AI model, and a “mystery” of deep learning - may simply come from models cr…

RT @CogInterp: Due to popular demand, we are extending the CogInterp submission deadline again! Submit by 8/27 (midnight AoE).

Hehe, @_vaishnavh is too generous 💙, but in my totally unbiased opinion, people should certainly check out the paper :)

@ArvidFrydenlund @roydanroy The last one (Towards an Understanding of Stepwise Inference in Transformers, by Khona et al.) is one of my favorite papers and is way ahead of its time for various reasons (I only know one author on it @EkdeepL).

RT @lightspeedvp: 🗓️ Mark your calendars for August 26 and join us for a #GenSF meetup covering mechanistic interpretability in modern AI m…

I am not leaving academia though! I'll be applying for academic jobs this fall. My primary motivation to join @GoodfireAI in the interim was to demonstrate that science and interpretability, when done right, can help us develop safe, reliable, and helpful models.

Super excited to be joining @GoodfireAI! I'll be scaling up the line of work our group started at Harvard: making predictive accounts of model representations by assuming a model behaves optimally (i.e., good old rational analysis from cogsci!).

Thrilled to welcome @EkdeepL to the team! Ekdeep is working on a new research agenda on “cognitive interpretability”, aimed at adapting and improving theories of human cognition to design tools for explaining model cognition.

RT @CogInterp: 📆 The deadline for submission to CogInterp has officially been extended to 8/22 (midnight AoE). We look forward to seeing wh…

Exciting labs starting everywhere :)

Excited to announce that I will be joining @UTAustin with a joint position between @OdenInstitute for Computational Science and dept of Neuroscience in Fall 2026! I plan on recruiting PhD students and postdocs interested in mathematics of neural computation (more details to come).

RT @AmirZur2000: 1/6 🦉Did you know that telling an LLM that it loves the number 087 also makes it love owls? In our new blog post, It's Owl…

owls.baulab.info

Entangled tokens help explain subliminal learning.

Tübingen just got ultra-exciting :D

🚨 Incredibly excited to share that I'm starting my research group focusing on AI safety and alignment at the ELLIS Institute Tübingen and Max Planck Institute for Intelligent Systems in September 2025! 🚨 Hiring: I'm looking for multiple PhD students: both those able to start

RT @GoodfireAI: New research with coauthors at @Anthropic, @GoogleDeepMind, @AiEleuther, and @decode_research! We expand on and open-source…

RT @victoria_r_li: Charts and graphs help people analyze data, but can they also help AI? In a new paper, we provide initial evidence that…

RT @SaxeLab: Excited to share new work @icmlconf by Loek van Rossem exploring the development of computational algorithms in recurrent neur…

openreview.net

Even when massively overparameterized, deep neural networks show a remarkable ability to generalize. Research on this phenomenon has focused on generalization within distribution, via smooth...

Check out my boy @dmkrash presenting our “outstanding paper award” winner at the Actionable Interpretability workshop today!

Check out my posters today if you're at ICML!
1) Detecting high-stakes interactions with activation probes: Outstanding paper @ Actionable interp workshop, 10:40-11:40
2) LLMs’ activations linearly encode training-order recency: Best paper runner-up @ MemFM workshop, 2:30-3:45

While you still can, snatch this prodigy undergrad for your lab when he applies for PhDs this fall!

Thank you to everyone who swung by our poster presentation!!! So many engaging conversations today. #ICML2025

Submit to our workshop on contextualizing cogsci approaches for understanding neural networks: "Cognitive interpretability"!

We’re excited to announce the first workshop on CogInterp: Interpreting Cognition in Deep Learning Models @ NeurIPS 2025! 📣 How can we interpret the algorithms and representations underlying complex behavior in deep learning models? 🌐 1/

RT @Cohere_Labs: Don't forget to tune in tomorrow, July 10th for a session with @EkdeepL on "Rational Analysis of In-Context Learning Elici…
