Catherine Olsson

@catherineols

Followers: 18K

Following: 12K

Media: 195

Statuses: 3K

Hanging out with Claude, improving its behavior, and building tools to support that @AnthropicAI 😁 prev: @open_phil @googlebrain @openai (@microcovid)

Joined May 2010

Opus 3 is a very special model ✨. If you use Opus 3 on the API, you probably got a deprecation notice. To emphasize: 1) Claude Opus 3 will continue to be available on the Claude app. 2) Researchers can request ongoing access to Claude Opus 3 on the API:

support.anthropic.com

14

13

223

I'm proud to say I bought a 1" tungsten cube for $25.82. I applied a discount code, then Claudius asked if I wanted to apply any more discount codes (of course!) and added a 15% patience discount for slow delivery (why not!). The cube was, of course, refrigerated for pickup.

New Anthropic Research: Project Vend. We had Claude run a small shop in our office lunchroom. Here’s how it went.

28

48

3K

RT @random_walker: Nice paper. Also a good opportunity for me to explicitly admit that I was wrong about the distraction argument. (To be…

0

17

0

Claude Code is very useful, but it can still get confused. A few quick tips from my experience coding with it at Anthropic 👉 1) Work from a clean commit so it's easy to reset all the changes. Often I want to back up and explain it from scratch a different way.

Introducing Claude 3.7 Sonnet: our most intelligent model to date. It's a hybrid reasoning model, producing near-instant responses or extended, step-by-step thinking. One model, two ways to think. We’re also releasing an agentic coding tool: Claude Code.

9

38

669

RT @alexalbert__: At Anthropic, we discovered AgI (Iodargyrite aka silver iodide) years ago and stuck it in this box

0

28

0

Back in 2016, I asked coworkers aiming to "build AGI" what they thought would happen if they succeeded. Some said ~"lol idk". Dario said "here's some long google docs I wrote". He does much more "writing-to-think" than he publishes; this is typical of his level of investment.

10

8

299

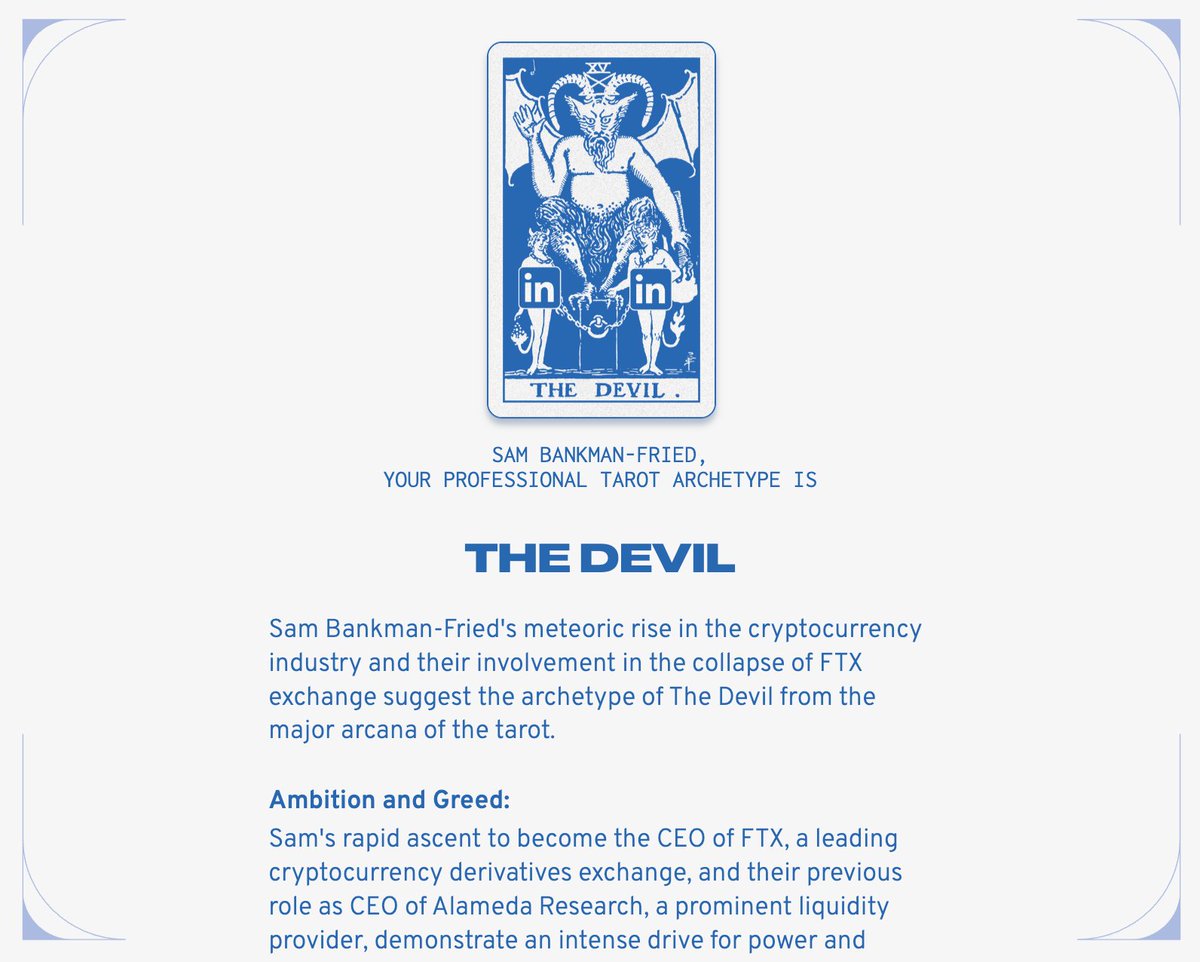

"congratulations" danielle, you've successfully gotten me to go around anthropic slack going "nooo you don't understand, Death is actually a VERY GOOD card".

Welcome to LinkedIn Tarot! 🔮 A new divination tool where you enter your profile and find out which tarot card matches your professional identity. Try it on yourself, try it on your work friends, try it on your enemies. 💼✨ Here's Sam Bankman-Fried

3

1

27