Yaroslav Bulatov

@yaroslavvb

Followers

8K

Following

1K

Media

223

Statuses

2K

[email protected] (ex-Google Brain, OpenAI, Meta) New Blog: https://t.co/SLix8Hrt4w Old Blog: https://t.co/Ur3GWKpmp6

San Francisco, CA

Joined February 2011

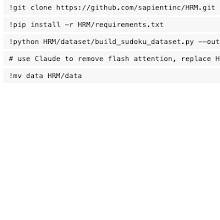

@makingAGI The only change I needed to make was replacing FlashAttention with PyTorch's built-in scaled_dot_product_attention
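A minimal sketch of what such a swap can look like, not the actual change made to the HRM code. The wrapper name `attention_sdpa` is hypothetical. It assumes the inputs use the `(batch, seq_len, n_heads, head_dim)` layout that FlashAttention's function interface takes, while `torch.nn.functional.scaled_dot_product_attention` (PyTorch 2.0+) expects `(batch, n_heads, seq_len, head_dim)`, so the only real work is a transpose on the way in and out.

```python
import torch
import torch.nn.functional as F


def attention_sdpa(q, k, v, causal=False):
    """Hypothetical stand-in for a FlashAttention call.

    Assumes q, k, v are shaped (batch, seq_len, n_heads, head_dim).
    SDPA wants (batch, n_heads, seq_len, head_dim), so we transpose
    before the call and transpose the result back afterwards.
    """
    q, k, v = (t.transpose(1, 2) for t in (q, k, v))
    out = F.scaled_dot_product_attention(q, k, v, is_causal=causal)
    return out.transpose(1, 2)
```

Because the wrapper keeps the input layout, the surrounding model code would not need to change under these assumptions.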


Playing with @makingAGI's HRM implementation this morning, and it's a really promising sign when the official version runs in colab with almost no modifications

colab.research.google.com

Colab notebook



There are many different fixed-point updates that converge to the polar factor of A (closest orthogonal matrix in Frobenius distance). A very simple shrinkage-like update: A=(1+e)(I- e A A')A
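A small numerical sketch of this update, reading `A'` as the transpose and `e` as a small positive step (both are assumptions, as are the function names and the initial spectral-norm scaling). Taken literally, the update maps each singular value s to (1+e)(1 - e s^2) s, whose nonzero fixed point is s = 1/sqrt(1+e) rather than exactly 1, so the sketch rescales by sqrt(1+e) before comparing against the SVD-based polar factor.

```python
import numpy as np


def polar_by_shrinkage(A, e=0.1, steps=500):
    """Iterate X <- (1+e)(I - e X X^T) X, then rescale to unit singular values.

    Assumptions (not from the original post): A has full rank, and we first
    divide by the spectral norm so every singular value starts in (0, 1].
    """
    X = A / np.linalg.norm(A, 2)
    I = np.eye(A.shape[0])
    for _ in range(steps):
        X = (1 + e) * (I - e * X @ X.T) @ X
    # Singular values settle at 1/sqrt(1+e); undo that scale.
    return np.sqrt(1 + e) * X


def polar_by_svd(A):
    """Reference polar factor U V^T from the SVD A = U S V^T."""
    U, _, Vt = np.linalg.svd(A, full_matrices=False)
    return U @ Vt
```

Near the fixed point the error in each singular value shrinks by a factor of about 1 - 2e per step, so small `e` gives slow but steady linear convergence in this sketch.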


The root issue is that peer review is a leftover artifact from the time when papers were physically printed on paper. When peer reviewers realize how little value they add, they try to spend as little effort as possible.


This is a nice explanation of why reasoning emerges as an unexpected side effect of training for text compression (but not video compression)

I always found it puzzling how language models learn so much from next-token prediction, while video models learn so little from next frame prediction. Maybe it's because LLMs are actually brain scanners in disguise. Idle musings in my new blog post:


Was brushing up on transforms for some scaling laws math, summarized here https://t.co/QN66xkAugq


came across this overview by Derek Lowe on the state of AI drugs a year ago @ACSCentSci

science.org

AI Drugs So Far


Enjoyed @jxbz's thought-provoking talk on optimizers at @ml_collective today. Are theories that motivate optimizers very useful? Adversarial for AdaGrad, natural gradient for KFAC. Non-linear solvers in scientific computing seem to advance without spending a lot of effort thinking


Once everyone online is indistinguishable from an AI agent, hanging out in person will be cool again. Until the robot impersonators arrive.


Unexpected RMT observation: the squared singular values of a product of random projections are essentially distributed as an exponentiated chi-squared. Can anyone see a direct explanation of this? https://t.co/C8AcJbo1mz


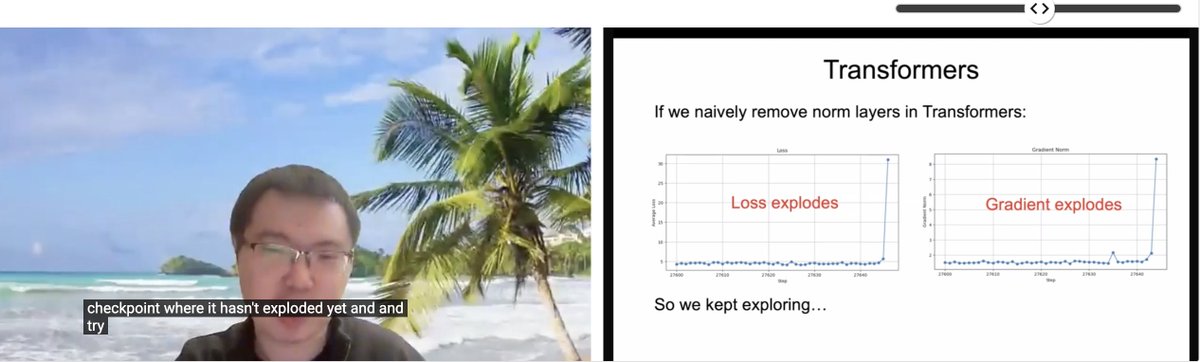

Watching @liuzhuang1234's "Transformers without Normalization": this slide is a reminder of how our optimizer and architecture choices are coupled


Re-reading Horace's https://t.co/Z4b8eqA6oR suggests that one could estimate the total number of transistor flips by integrating over power+frequency graphs ... has anyone checked if this works on the entries reported by nvidia-smi?
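A rough sketch of the integration step only, under stated assumptions: power samples arrive as `(time_seconds, power_watts)` pairs (for example, logged from nvidia-smi), energy is their trapezoidal integral, and a flip count follows from dividing by an energy cost per flip. The function names and the `joules_per_flip` parameter are hypothetical. No real per-flip value is implied here, and the sketch ignores the frequency side of the idea.

```python
def integrate_energy_joules(samples):
    """Trapezoidal integral of power over time.

    samples: list of (time_seconds, power_watts) pairs sorted by time.
    Returns energy in joules.
    """
    energy = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        energy += 0.5 * (p0 + p1) * (t1 - t0)
    return energy


def estimate_flips(samples, joules_per_flip):
    """Hypothetical conversion: total energy divided by an assumed
    energy cost per transistor flip (the caller must supply it)."""
    return integrate_energy_joules(samples) / joules_per_flip


# Example: 100 W for one second, then a ramp from 100 W to 200 W.
samples = [(0.0, 100.0), (1.0, 100.0), (2.0, 200.0)]
print(integrate_energy_joules(samples))  # 250.0
```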


Keeping up with headline news, which is often negative, makes it easy to lose track of the big picture

How the world has changed over the last century. A compilation of some of our greatest accomplishments as a species. Credit: @toddrjones


Are there recorded talks I can watch relevant to DeepSeek?


From a talk by Chris Manning

'we're in this bizarre world where the best way to learn about llms... is to read papers by chinese companies. i do not think this is a good state of the world' - us labs keeping their architectures and algorithms secret is ultimately hurting ai development in the us.


@anissagardizy8 this could make for a good retrospective -- "where are they now?"
