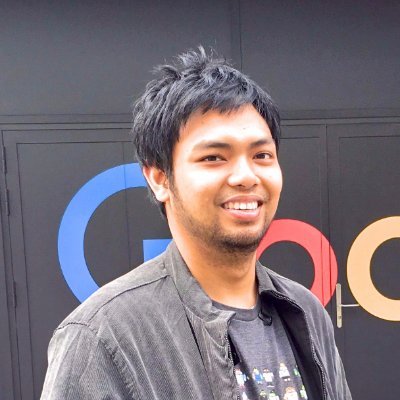

Farid Adilazuarda

@faridlazuarda

Followers: 281 · Following: 41K · Media: 162 · Statuses: 3K

Incoming PhD @EdinburghNLP @InfAtEd • Efficient & Multilingual LLMs • prev: @mbzuai @itbofficial

Joined October 2017

Can English-finetuned LLMs reason in other languages? Short answer: yes, thanks to "quote-and-think" + test-time scaling. You can even force them to reason in a target language! But: 🌐 low-resource languages and non-STEM topics are still tough. New paper:

arxiv.org

Reasoning capabilities of large language models are primarily studied for English, even when pretrained models are multilingual. In this work, we investigate to what extent English reasoning...

📣 New paper! We observe that reasoning language models finetuned only on English data are capable of zero-shot cross-lingual reasoning through a "quote-and-think" pattern. However, this does not mean they reason the same way across all languages or in new domains. [1/N]

RT @DimitrisPapail: GRPO makes reasoning model yap a lot, but there's a simple fix: Sample more responses during training, and train on th…

RT @AlessandroStru4: The best logical explanation of entropy. (It only took me 11 months to find an hour to read it.)

arxiv.org

This short book is an elementary course on entropy, leading up to a calculation of the entropy of hydrogen gas at standard temperature and pressure. Topics covered include information, Shannon...

RT @barcacentre: Rashford: "All of the lads are like young, if you're like 27, 28, I'd say there's more players under 21 than past 28. In m…

RT @khoomeik: my gpt-oss MFUmaxxer PR is here!
✅ cat/splice sink -> flexattn
✅ sin/cos pos embs -> complex freqs_cis
✅ moe for-loop -> gro…

RT @ilyasut: if you value intelligence above all other human qualities, you’re gonna have a bad time.

RT @JackFrostHeeHo9: @TheNewRhyme If they are your friend they wouldn’t let a disagreement ruin a friendship.

RT @jxmnop: curious about the training data of OpenAI's new gpt-oss models? i was too. so i generated 10M examples from gpt-oss-20b, ran…

RT @AlhamFikri: And congratulations to 🇮🇩Indonesia for winning 3 Silvers (Faiz, Matthew, Luvidi) and 1 Bronze (Jayden)! A strong debut for…

RT @Guangxuan_Xiao: I've written the full story of Attention Sinks — a technical deep-dive into how the mechanism was developed and how our…

RT @sporadicalia: just remembered that time Noam Shazeer dropped the hardest line ever written in an ML paper

RT @wenhaocha1: Deep dive into Sink Value in GPT-OSS models! Analyzed 20B (24 layers) and 120B (36 layers) models and found (correct me if…

RT @gu_xiangming: I noticed that @OpenAI added learnable bias to attention logits before softmax. After softmax, they deleted the bias. Thi…
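The "bias before softmax, deleted after softmax" idea in the retweet above can be sketched in a few lines. This is a minimal illustration, not OpenAI's gpt-oss code. It assumes a single scalar sink logit per attention head and computes the weights for one query row. The name `softmax_with_sink` and the numbers are hypothetical.

```python
import math

def softmax_with_sink(logits, sink_logit):
    """Attention weights for one query row with an extra sink logit.

    Assumption (hypothetical sketch): the sink is one learnable scalar per head.
    It joins the softmax normalization, then its probability is discarded.
    """
    # Append the sink logit so it competes for probability mass.
    extended = list(logits) + [sink_logit]
    # Subtract the max before exponentiating for numerical stability.
    m = max(extended)
    exps = [math.exp(x - m) for x in extended]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Drop the sink's share: the remaining weights sum to less than 1.
    return probs[:-1]

weights = softmax_with_sink([1.0, 2.0, 0.5], sink_logit=0.0)
print(sum(weights))  # prints a value below 1.0
```

Because the dropped sink keeps part of the probability mass, a head can put little weight on every real token instead of being forced to distribute a full 1.0. With a very negative sink logit, the function reduces to an ordinary softmax.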

RT @dvruette: gpt-oss is probably the most standard MoE transformer that ever was. Couple of details worth noting:
- Uses attention sinks (…

RT @dnystedt: Four TSMC 2nm fabs will be in mass production next year and monthly capacity over 60,000 wafers-per-month (wpm), media report…