An Vo

@an_vo12

Followers: 220

Following: 772

Media: 8

Statuses: 85

MS student @ KAIST | Interest: LLMs/VLMs, Trustworthy AI

Daejeon, Republic of Korea

Joined July 2015

🚨 Our latest work shows that SOTA VLMs (o3, o4-mini, Sonnet, Gemini Pro) fail at counting legs due to bias⁉️ See simple cases where VLMs get it wrong, no matter how you prompt them. 🧪 Think your VLM can do better? Try it yourself here: https://t.co/EDJdF3Vmpy 1/n #ICML2025

9

41

303

How to Revise an Academic Poster? Designing a clear poster is an essential skill for students. We recently revised one for a paper presented last week. BUT, instead of just one student benefiting from the process, I’m sharing it and hope some will find it helpful. 🧵

5

31

181

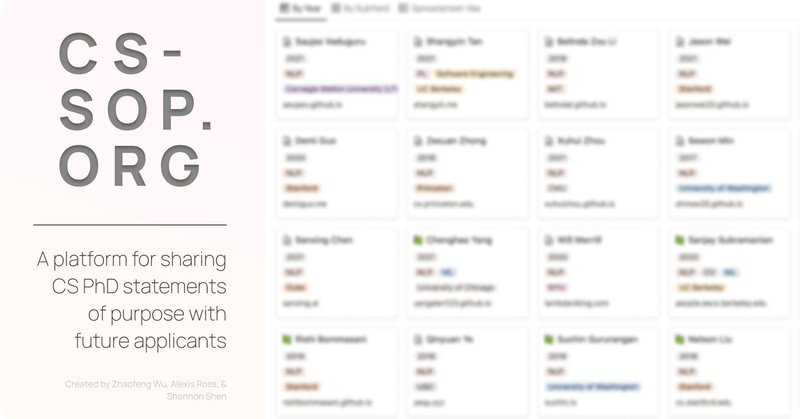

It is PhD application season again 🍂 For those looking to do a PhD in AI, these are some useful resources 🤖: 1. Examples of statements of purpose (SOPs) for computer science PhD programs: https://t.co/Stz53ZiREM [1/4]

cs-sop.notion.site

cs-sop.org is a platform intended to help CS PhD applicants. It hosts a database of example statements of purpose (SoP) shared by previous applicants to Computer Science PhD programs.

6

77

386

My thoughts on the broken state of AI conference reviewing: Years ago, when I was in graduate school and a postdoc in Information Theory, I always felt fortunate to be invited to review for IEEE Transactions on Information Theory or IEEE Transactions on Signal Processing. I felt

15

19

211

@yuyinzhou_cs @NeurIPSConf We have a paper in the same situation. AC: Yes! PC: No no. @NeurIPSConf please consider whether the 1st author is a student and whether this would be their first top-tier paper BEFORE making such a cut. Healthier for junior researchers. OR use a Findings track.

0

2

10

Out of 7 papers in my NeurIPS Benchmarks and Datasets Track area, the PCs overruled my recommendation on 3?!

6

5

109

The ICLR 2026 deadline is ten days away. But you just found a bug in your evals, so now you need to re-run all your ablations. That's hundreds of experiments, and you need them done ASAP. @modal's got you. Introducing our ICLR 2026 compute grant program.

17

35

531

A special day in Seoul as we officially launch OpenAI Korea. With strong government support and huge growth in ChatGPT use (up 4x in the past year), Korea is entering a new chapter in its AI journey and we want to be a true partner in Korea’s AI transformation.

26

42

524

Grad school season reminder: many CS departments run student-led pre-application mentorship programs for prospective PhD applicants (due Oct. You can get feedback from current PhD students! E.g.:
- UW’s CSE PAMS: https://t.co/RYw4mbD47h
- MIT EECS GAAP: https://t.co/piD6hkmHzq 🧵

cs.washington.edu

Pre-Application Mentorship Service (PAMS)

10

42

265

This blog makes me wonder about the OPPOSITE problem: 👉 Can we make LLMs give uniform random answers when asked (e.g., “randomly pick 0-9”)? So far, our work (https://t.co/f7R3nLN57i) has shown that we can hack it with multi-turn, but I’d love to see this activated in single-turn.

Today Thinking Machines Lab is launching our research blog, Connectionism. Our first blog post is “Defeating Nondeterminism in LLM Inference” We believe that science is better when shared. Connectionism will cover topics as varied as our research is: from kernel numerics to

1

0

3

this isn’t just a modeling problem. it’s also a benchmarking problem. spurious correlations are always a pain, but in multimodal llms they become a particularly tough battle. On one hand, you want to leverage the language prior to enable better generalization; on the other, that

I couldn’t believe GPT-5 could make this mistake until @ziqiao_ma pointed it out to me. Highly recommend this paper (https://t.co/PoMp4GggEm) on vision-centric evaluation of multimodal LLMs from @sainingxie. Now imagine the same rigor applied to VLAs.

7

24

243

beautiful adversarial dataset playing exactly on the soft spot of VLMs.

🚨 Our latest work shows that SOTA VLMs (o3, o4-mini, Sonnet, Gemini Pro) fail at counting legs due to bias⁉️ See simple cases where VLMs get it wrong, no matter how you prompt them. 🧪 Think your VLM can do better? Try it yourself here: https://t.co/EDJdF3Vmpy 1/n #ICML2025

5

20

279

Check it out. Almost everyone in the major media is missing the real story around GPT-5. The real story is about how so many people (even big fans of OpenAI) were disappointed. And it’s about how that may well spell the end of scaling mania. And it’s about how the premature

43

74

486

and I thought GPT-5 was supposed to be some multimodal revolution; that, too, turns out to be bullshit.

#GPT5 is STILL having a severe confirmation bias like prev SOTA models! 😜 Try yourselves (images, prompts avail in 1 click): https://t.co/S317wqrlju It's fast to test for such biases in images. Similar biases should still exist in non-image domains as well...

18

11

61

Page: https://t.co/luRR9qyWrX Thread by author: https://t.co/zVjEqtWz8U

🚨 Our latest work shows that SOTA VLMs (o3, o4-mini, Sonnet, Gemini Pro) fail at counting legs due to bias⁉️ See simple cases where VLMs get it wrong, no matter how you prompt them. 🧪 Think your VLM can do better? Try it yourself here: https://t.co/EDJdF3Vmpy 1/n #ICML2025

2

5

65

#GPT5 is STILL having a severe confirmation bias like prev SOTA models! 😜 Try yourselves (images, prompts avail in 1 click): https://t.co/S317wqrlju It's fast to test for such biases in images. Similar biases should still exist in non-image domains as well...

11

14

121

Shaping results into a convincing narrative in just a few days is incredibly tough and intense 🧠 Honestly, it feels like writing another paper in 6 days ⏳ even harder than starting from scratch for a general audience.

I have deep respect for students grinding on NeurIPS rebuttal these days:
- running a brutal amount of experiments
- shaping them into a polished narrative
- all under a tight timeline
It’s an art + endurance test.

0

0

1

Thanks @Cohere_Labs for sharing our work! 🙌 If you’re attending #ICML2025, come visit our B-score poster to chat more: 🗓️ Thursday, July 17 | ⏰ 4:30-7:00 PM 📍 East Exhibition Hall A-B, Poster #E-1004

Supported by one of our grants, @an_vo12, Mohammad Reza Taesiri, and @anh_ng8 from @kaist_ai, tackled bias in LLMs. Their research shows that LLMs exhibit fewer biases when they can see their previous answers, leading to the development of the B-score metric.

1

1

11