Anh Totti Nguyen

@anh_ng8

Followers: 2K

Following: 2K

Media: 204

Statuses: 821

ISO a trustworthy and explainable AI. Deep Learning, human-machine interaction, and JavaScript. Associate Professor @AuburnEngineers. Hanoi 🇻🇳

Auburn, AL

Joined December 2007

RT @PetarV_93: ok! part 2 of my early-stage ai research lore - ask and you shall receive!. so now i found myself in a research group as a f….

RT @Cohere_Labs: Supported by one of our grants, @an_vo12, Mohammad Reza Taesiri, and @anh_ng8 from @kaist_ai, tackled bias in LLMs. Their….

RT @MichLieben: This isn't hallucination in the traditional sense. Grok's math was nearly correct. But it confidently applied PhD-level t….

RT @giangnguyen2412: Today I finished my PhD at @AuburnEngineers with @anh_ng8 . What’s next? Off to @guidelabsai to build foundation AI….

RT @samim: That @cursor_ai silently downgrades the working model from Claude4 to Claude3.5 during an active coding session, is borderline c….

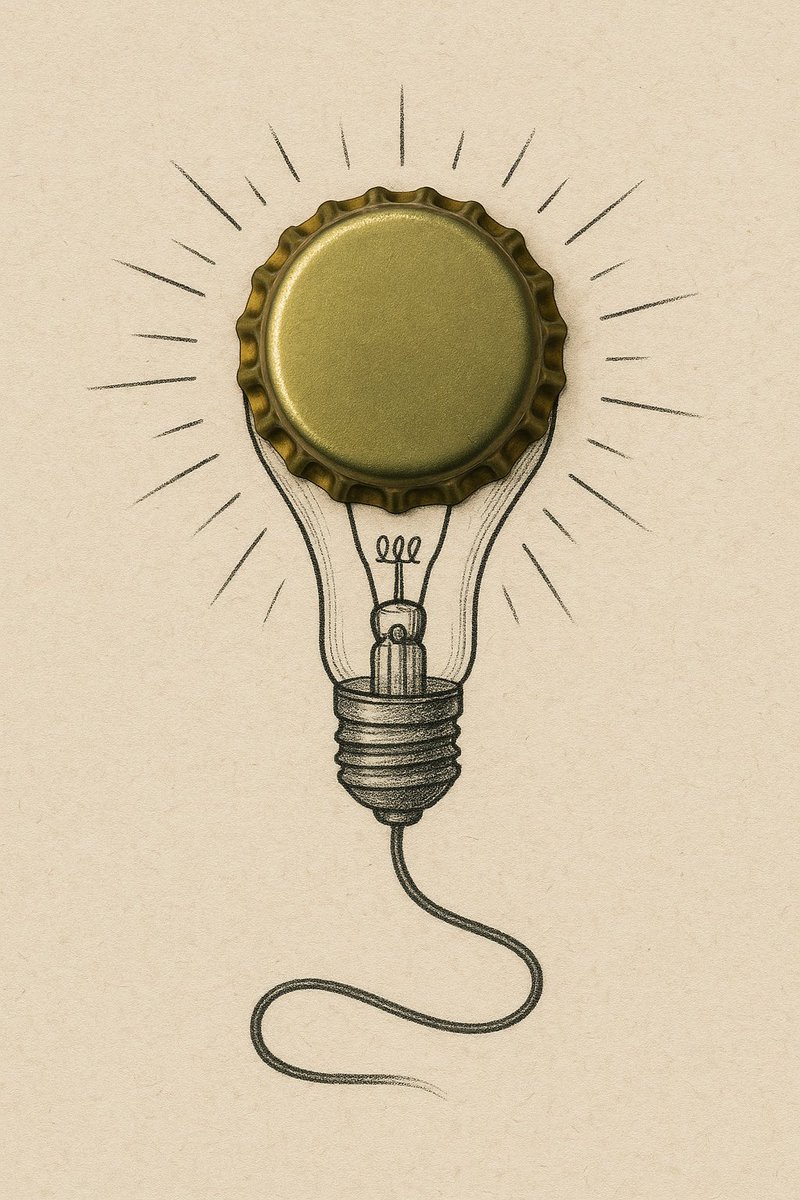

Asking GPT-4o to generate images in the style of @DiegoCusano_ shows an existing gap between the real Cusano and GPT-4o in creativity/wittiness (random samples).

Sometimes it signs "Cusano" at the bottom.

RT @yacineMTB: I got fired today. I'm not sure why, I personally don't think there is a reason, or that it's important. When I joined twi….