Minghuan Liu

@ericliuof97

Followers

661

Following

483

Media

17

Statuses

169

Postdoc at @utaustin RPL Lab. Prev: Bytedance Seed Robotics; @ucsd; @sjtu1896. Robot Learning, Embodied AI.

Joined September 2016

🚀 Want to build a 3D-aware manipulation policy, but troubled by the noisy depth perception? Want to train your manipulation policy in simulation, but tired of bridging the sim2real gap by degenerating geometric perception, like adding noise? Now these notorious problems are gone

6

43

214

The @karpathy interview 0:00:00 – AGI is still a decade away 0:30:33 – LLM cognitive deficits 0:40:53 – RL is terrible 0:50:26 – How do humans learn? 1:07:13 – AGI will blend into 2% GDP growth 1:18:24 – ASI 1:33:38 – Evolution of intelligence & culture 1:43:43 - Why self

504

3K

18K

I recently realized that the morphology of whole-body robots (beyond table top) shapes how we design teleoperation interfaces — and those interfaces dictate how efficiently we can collect data. So maybe the question isn’t just about better teleop… It’s whether we should design

1

0

4

With Gemini CLI's new pseudo-terminal (PTY) support, you can run complex, interactive commands like vim, top, or git rebase -i directly within the CLI without having to exit, keeping everything in context.

77

229

2K

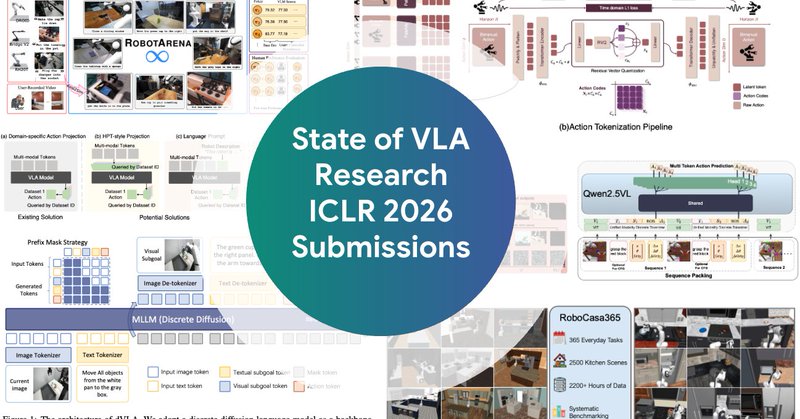

VLAs have become the fastest-growing subfield in robot learning. So where are we now? After reviewing ICLR 2026 submissions and conversations at CoRL, I wrote an overview of the current state of VLA research with some personal takes: https://t.co/OMMdB1MHtS

mbreuss.github.io

Comprehensive analysis of Vision-Language-Action models at ICLR 2026 - discrete diffusion, reasoning VLAs, and benchmark insights.

10

100

524

there’s only one right answer here, the @ylecun definition, and everyone should be able to recite it word for word

61

204

2K

Introducing RL-100: Performant Robotic Manipulation with Real-World Reinforcement Learning. https://t.co/tZ0gz6OTdb 7 real robot tasks, 900/900 successes. Up to 250 consecutive trials in one task, running 2 hours nonstop without failure. High success rate against physical

15

68

358

🙌

Implementing motion imitation methods involves lots of nuisances. Not many codebases get all the details right. So, we're excited to release MimicKit! https://t.co/7enUVUkc3h A framework with high quality implementations of our methods: DeepMimic, AMP, ASE, ADD, and more to come!

0

0

2

After carefully watching the video I guess they are transferred mainly through wrist camera (all robots usetvery similar wrist camera setup) along with relative actions (aligned with wrist cam obs)?

You have to watch this! For years now, I've been looking for signs of nontrivial zero-shot transfer across seen embodiments. When I saw the Alohas unhang tools from a wall used only on our Frankas I knew we had it! Gemini Robotics 1.5 is the first VLA to achieve such transfer!!

1

3

33

Penetration-free for free, you need OGC. I released the code of our SIGGRAPH 2025 paper: Offset Geometric Contact, where we made real-time, penetration free simulation possible, with @JerryHsu32 @zihengliu @milesmacklin @YinYang24414350 @cem_yuksel Page: https://t.co/LsfX0lbYiJ

61

423

4K

GEM❤️Tinker GEM, an environment suite with a unified interface, works perfectly with Tinker, the API by @thinkymachines that handles the heavy lifting of distributed training. In our latest release of GEM, we 1. supported Tinker and 5 more RL training frameworks 2. reproduced

5

33

285

Tired of collecting robot demos? 🚀 Introducing CP-Gen: geometry-aware data generation for robot learning. From a single demo, CP-Gen generates thousands of new demonstrations to train visuomotor policies that transfer zero-shot sim-to-real across novel geometries and poses.

3

34

123

1x's NEO Humanoid has the LOWEST latency VR teleoperation I've ever seen! It matches nearly instantly!

39

177

2K

𝗔𝗳𝘁𝗲𝗿 𝟭𝟬+ 𝘆𝗲𝗮𝗿𝘀 𝗶𝗻 𝗿𝗼𝗯𝗼𝘁 𝗹𝗲𝗮𝗿𝗻𝗶𝗻𝗴, from my PhD at Imperial to Berkeley to building the Dyson Robot Learning Lab, one frustration kept hitting me: 𝗪𝗵𝘆 𝗱𝗼 𝗜 𝗵𝗮𝘃𝗲 𝘁𝗼 𝗿𝗲𝗯𝘂𝗶𝗹𝗱 𝘁𝗵𝗲 𝘀𝗮𝗺𝗲 𝗶𝗻𝗳𝗿𝗮𝘀𝘁𝗿𝘂𝗰𝘁𝘂𝗿𝗲 𝗼𝘃𝗲𝗿 𝗮𝗻𝗱

15

84

712

@GRASPlab @physical_int 🧵6/ PI0 is actually a FPV player: It mainly relies on wrist camera. In fact, it still works even if the side-view camera is blocked. 3rd camera view contains more variations and change, the neural network may focus more on what the gripper see to execute tasks.

1

1

17

Intelligent humanoids should have the ability to quickly adapt to new tasks by observing humans Why is such adaptability important? 🌍 Real-world diversity is hard to fully capture in advance 🧠 Adaptability is central to natural intelligence We present MimicDroid 👇 🌐

7

40

121