Physical Intelligence

@physical_int

Followers: 30K · Following: 48 · Media: 45 · Statuses: 70

Physical Intelligence (Pi), bringing AI into the physical world.

San Francisco, CA

Joined March 2024

To learn more, watch more videos, and read the full research paper on Recap and π*0.6, see our blog post:

pi.website

A method for training our generalist policies with RL to improve success rate and throughput on real-world tasks.

Quantitatively, training π*0.6 with RL can more than double throughput (number of successful task executions per hour) on the hardest tasks and cut the number of failures by as much as a factor of two.
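
As a rough illustration of the metric, throughput as defined above can be computed from a count of successful executions and the elapsed wall-clock time. The function name and the numbers below are hypothetical and only show the arithmetic; they are not results from the post.

```python
def throughput_per_hour(successes: int, elapsed_seconds: float) -> float:
    """Successful task executions per hour of wall-clock time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return successes * 3600.0 / elapsed_seconds


# Hypothetical counts for illustration only (not reported results).
base = throughput_per_hour(successes=10, elapsed_seconds=3600)
with_rl = throughput_per_hour(successes=21, elapsed_seconds=3600)
speedup = with_rl / base  # values above 2 mean "more than double"
```

Because failed attempts still consume time, this metric rewards both working faster and failing less often.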

We also trained π*0.6 to assemble boxes. Here is an hour of box building, with about two and a half minutes per box.

We also tested it out with diverse and realistic laundry items. Here π*0.6 folds laundry for 3 hours, averaging 3 minutes per item.

We used π*0.6 to make various espresso drinks at the office. Here it runs for about 13 hours, with only a few interruptions.

π*0.6 can then collect more autonomous data, which can be used to further train the value function and further improve π*0.6! During autonomous data collection, a teleoperator can also intervene and provide corrections for significant mistakes, coaching π*0.6 further.

We train a general-purpose value function on all of our data, which tells the π*0.6 VLA which actions are good or bad. By asking π*0.6 to produce only good actions, we get better performance. We call this method Recap.
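
One way to picture "good" versus "bad" actions is to compare the value estimate before and after each action. The sketch below is an assumption-laden toy, not the Recap implementation: the `Step` fields, the advantage-style rule, and the zero threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Step:
    value_before: float  # hypothetical V(s_t): predicted outcome before acting
    value_after: float   # hypothetical V(s_{t+1}): predicted outcome after acting
    reward: float = 0.0  # optional immediate reward for the step


def label_actions(steps, threshold=0.0):
    """Label each action 'good' if the value estimate improved, else 'bad'."""
    labels = []
    for s in steps:
        advantage = s.reward + s.value_after - s.value_before
        labels.append("good" if advantage > threshold else "bad")
    return labels


# Toy episode: progress, a mistake, then recovery.
episode = [Step(0.2, 0.5), Step(0.5, 0.3), Step(0.3, 0.9)]
labels = label_actions(episode)  # ["good", "bad", "good"]
```

Under this reading, the policy would be trained with such labels as an extra input and asked for "good" actions at run time; how the labels are actually computed and consumed is described in the research paper rather than in this thread.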

Our model can now learn from its own experience with RL! Our new π*0.6 model can more than double throughput over a base model trained without RL, and can perform real-world tasks: making espresso drinks, folding diverse laundry, and assembling boxes. More in the thread below.

We've added pi-05 to the openpi repo: pi05-base, pi05-droid, pi05-libero. Also added PyTorch training code!🔥 Instructions and code here: https://t.co/EOhNYfpq9B This is an updated version of the model we showed cleaning kitchens and bedrooms in April:

pi.website

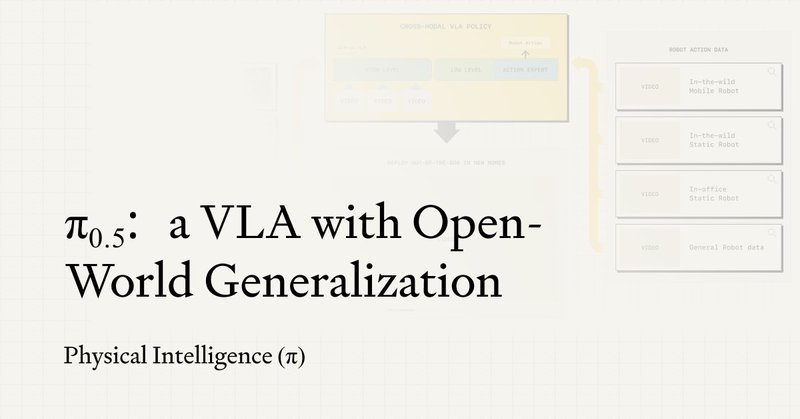

Our latest generalist policy, π0.5, extends π0 and enables open-world generalization. Our new model can control a mobile manipulator to clean up an entirely new kitchen or bedroom.

In LLM land, a slow model is annoying. In robotics, a slow model can be disastrous! Visible pauses at best, dangerously jerky motions at worst. But large VLAs are slow by nature. What can we do about this? An in-depth 🧵:

To learn more about RTC, check our blog post, as well as the full-length research paper that we prepared with detailed results:

pi.website

Physical Intelligence is bringing general-purpose AI into the physical world.

Quantitatively, RTC enables high success rates even as we introduce artificially high inference delays. Even if we add 200ms of additional delays on top of what the model needs, RTC performance remains stable, while naive methods drop off sharply.
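
To get a feel for what a 200ms delay means, it helps to convert it into control steps: those are the actions the robot has to execute before a new chunk can arrive. The 50 Hz control rate below is an assumption chosen for illustration, and `frozen_steps` is a hypothetical helper name.

```python
def frozen_steps(delay_ms: int, control_hz: int) -> int:
    """Control steps that elapse during inference, rounded up (integer math)."""
    return -(-delay_ms * control_hz // 1000)


# Assuming a 50 Hz controller, 200 ms of delay spans 10 actions.
steps = frozen_steps(delay_ms=200, control_hz=50)  # 10
```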

RTC not only makes motions smoother, but it actually allows the robot to move with more precision and speed, improving the performance of our models without any training-time changes at all!

The main idea in RTC is to treat generating a new action chunk as an “inpainting” problem: the actions that will be executed while the robot “thinks” are treated as fixed, while new actions are inferred via flow matching.
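
The inpainting idea can be sketched with a toy sampler. Everything here is a simplification under stated assumptions: actions are scalars, `toy_velocity` is a hand-written stand-in for a learned flow-matching model, and the frozen actions are enforced by plain overwriting at each integration step, which is a common inpainting trick and not necessarily the mechanism RTC uses.

```python
import random


def toy_velocity(x, tau, target):
    # Hypothetical stand-in for a learned velocity field: on a straight-line
    # path from noise to target, this velocity reaches the target at tau = 1.
    return [(t - xi) / (1.0 - tau) for xi, t in zip(x, target)]


def inpaint_chunk(frozen_prefix, target, n_steps=10, seed=0):
    """Generate an action chunk whose first len(frozen_prefix) actions are fixed."""
    rng = random.Random(seed)
    d = len(frozen_prefix)
    noise = [rng.gauss(0.0, 1.0) for _ in range(len(target))]
    x = list(noise)
    for k in range(n_steps):
        tau = k / n_steps
        # Pin the frozen actions to their known noise-to-data interpolation.
        for i in range(d):
            x[i] = (1.0 - tau) * noise[i] + tau * frozen_prefix[i]
        # One Euler step of the flow for the whole chunk.
        v = toy_velocity(x, tau, target)
        x = [xi + vi / n_steps for xi, vi in zip(x, v)]
    x[:d] = frozen_prefix  # the committed actions are returned unchanged
    return x


prev_chunk = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
frozen = prev_chunk[3:5]                   # actions that run while the robot "thinks"
target = [0.3, 0.4, 0.5, 0.6, 0.7, 0.8]    # hypothetical continuation
new_chunk = inpaint_chunk(frozen, target)
```

In this toy the velocity of each action is independent of the others, so freezing the prefix does not influence the rest. In a real model the network sees the whole chunk, which is what lets the newly generated actions stay consistent with the ones already committed.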

Our models need to run in real time on real robots, but inference with big VLAs takes a long time. We developed Real-Time Action Chunking (RTC) to enable real-time inference with flow matching for the π0 and π0.5 VLAs! More in the thread👇

Quick life update: I've joined the hardware team at @physical_int and I'm moving to San Francisco!

To learn more, check out our blog post here:

pi.website

At runtime, π0.5 with knowledge insulation runs much faster than π0-FAST, giving us the best of all worlds: fast training, fast robot control, and better generalization.

This works really well: π0.5 with knowledge insulation trains just as fast as π0-FAST and 5x to 7x faster than π0, while retaining excellent performance, dexterity, and compute-efficient inference at runtime, and it outperforms our prior models.

Our knowledge insulation method uses discrete tokens to train the VLM backbone, so that it learns appropriate representations, even as the action expert trains to take those representations and turn them into continuous actions.
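
To make "discrete tokens" versus "continuous actions" concrete, here is a minimal sketch of turning continuous actions into token ids and back. Uniform binning is used purely as an assumption because it is the simplest tokenizer; the thread does not say which tokenizer the method uses, and the range and bin count below are hypothetical.

```python
def tokenize(actions, low=-1.0, high=1.0, n_bins=256):
    """Map continuous actions to integer token ids by uniform binning."""
    tokens = []
    for a in actions:
        clipped = min(max(a, low), high)
        idx = int((clipped - low) / (high - low) * n_bins)
        tokens.append(min(idx, n_bins - 1))  # keep the upper edge in range
    return tokens


def detokenize(tokens, low=-1.0, high=1.0, n_bins=256):
    """Map token ids back to the centers of their bins."""
    width = (high - low) / n_bins
    return [low + (t + 0.5) * width for t in tokens]


actions = [-1.0, 0.0, 1.0]
tokens = tokenize(actions)      # [0, 128, 255]
recovered = detokenize(tokens)  # each value is within half a bin of the input
```

In this picture the backbone would be trained to predict the token ids, while the action expert is trained on the original continuous values, so the small quantization error of the tokens never reaches the actions the robot executes.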
