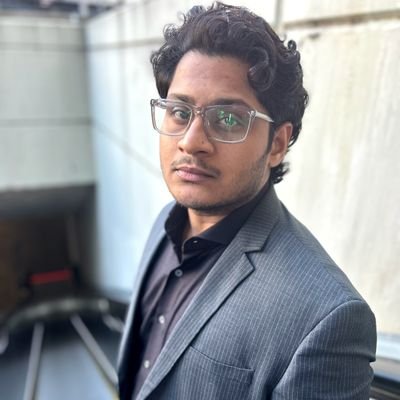

Varsha Bansal

@VarshaaBansal

Followers: 6K

Following: 5K

Media: 134

Statuses: 4K

Independent journalist. Writes on tech & society. Knight-Bagehot Fellow ‘25 at @columbia. Bylines: @TIME, @wired, @techreview, @AJEnglish, @restofworld

New York

Joined May 2016

NEW: Swiggy has gamified health insurance for its food delivery workers. Divided into gold, silver & bronze, their insurance changes weekly as per the category they fall under that week based on the points earned. My latest for @restofworld:

restofworld.org

Rest of World spoke with 40 riders for Swiggy in India. Many described losing coverage when they needed help the most.

148

1K

3K

OpenAI is huge in India. Its models are steeped in caste bias. Over months of reporting, @chooi_jeq and I tested the extent of caste bias in @OpenAI's GPT-5 and Sora models. Our investigation found that caste bias has gotten worse over time in OpenAI's models 🧵

25

37

103

SCOOP: Over 200 contractors who work on improving Google’s AI products, including Gemini and AI Overviews, have been laid off, sources tell WIRED. It’s the latest development in a conflict over pay and alleged poor working conditions. https://t.co/S63ccSxtuY

wired.com

Over 200 contractors who work on improving Google’s AI products, including Gemini and AI Overviews, have been laid off, sources tell WIRED. It’s the latest development in a conflict over pay and...

5

26

35

“AI isn’t magic; it’s a pyramid scheme of human labor. These raters are the middle rung: invisible, essential and expendable.” 🔗 https://t.co/ZczMRT60Pa

11

154

518

“I just want people to know that AI is being sold as this tech magic – that’s why there’s a little sparkle symbol next to an AI response. But it’s not. It’s built on the backs of overworked, underpaid human beings.” @VarshaaBansal @guardian

https://t.co/EtIfBMlUYV

theguardian.com

Contracted AI raters describe grueling deadlines, poor pay and opacity around work to make chatbots intelligent

0

3

5

Inside the world of Google's AI raters: My new investigation for @guardian tracks the lives of the invisible workers evaluating, rating & moderating the outputs of Google's AI models including its chatbot Gemini & search summaries known as AI Overviews. https://t.co/w2jJ8Radhl

theguardian.com

Contracted AI raters describe grueling deadlines, poor pay and opacity around work to make chatbots intelligent

1

9

12

This week's great long reads from: @nickripatrazone @seanpasbon @terrence_mccoy @falamarina @DevinGordonX @VarshaaBansal @AlanSiegelLA @zakcheneyrice @lpandell @Masonboyowen @FranklinFoer @jetvgc @piersgelly @heatherhaddon @chafkin @EdLudlow @dankois

https://t.co/5vjmIEjL1n

0

3

7

Weekend #longreads: @varshaabansal’s in-depth look at the collapse of BuilderAI, the failed startup that some analysts have held up as a potential example of “AI washing” — in which companies falsely promote AI capabilities to attract attention and funding https://t.co/5nCYSWmWot

restofworld.org

The implosion of a white-hot startup has employees asking how it all went wrong.

0

1

2

Inside the collapse of London's hottest AI startup https://t.co/oEI3EpZg8a. This is a story about AI washing with the founder claiming higher AI capabilities than what was going on internally & the power of adding AI to your company name to raise funds. https://t.co/V4KS9WBkrD

restofworld.org

The implosion of a white-hot startup has employees asking how it all went wrong.

0

7

16

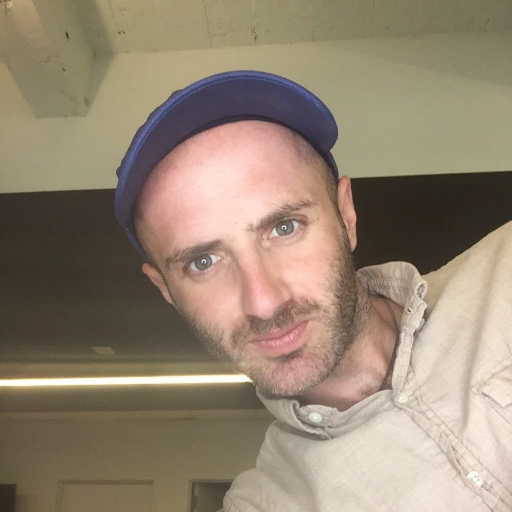

For Rest of World’s new feature, @VarshaaBansal spoke to a dozen former BuilderAI employees from India, the U.S., and the U.K. to get an inside look at the company’s rise and fall — and explore the question: Was BuilderAI even an AI company? https://t.co/LYqh8YhhPp

restofworld.org

The implosion of a white-hot startup has employees asking how it all went wrong.

0

1

6

An inside look at ex-unicorn https://t.co/Qfts71X4qs's demise, which oversold its AI platform's abilities and frustrated customers with delivery delays and buggy products (@varshaabansal / Rest of World) https://t.co/YJCLQdsoAO

https://t.co/otjMAu8JSP

https://t.co/ZOzeer1FAj

techmeme.com

Varsha Bansal / Rest of World: An inside look at ex-unicorn Builder.ai's demise, which oversold its AI platform's abilities and frustrated customers with delivery delays and buggy products

1

3

3

The implosion of the white-hot startup BuilderAI has employees and spectators asking how it all went wrong — and raises the question of what the $1.5B AI company was really selling. Read our new feature from @varshaabansal:

restofworld.org

The implosion of a white-hot startup has employees asking how it all went wrong.

1

3

14

When https://t.co/Dl1Si0hF1h suddenly collapsed, observers pointed to sketchy financials and cooked books. Former employees paint a bizarre portrait of a company that made promises it couldn't keep and touted AI advancements that simply weren't there. https://t.co/Qw1UnWo1Mk

1

2

2

NEW: Jo Ellis, a transgender pilot from Virginia, was accused of crashing a helicopter into an airliner, killing 67 people. But she wasn't the pilot. She was falsely blamed online for being trans. Now she's fighting back. My latest for @WIRED:

wired.com

National Guard pilot Jo Ellis is suing a right-wing influencer for falsely blaming her for a DC crash that killed 67 people. A WIRED review reveals 12 similar incidents of scapegoating trans people.

3

8

23

Thanks to everyone who helped me understand this issue better. @AlexMahadevan @ohhkaygo @AbelsGrace @glaad @mmfa Please read the full story here:

wired.com

National Guard pilot Jo Ellis is suing a right-wing influencer for falsely blaming her for a DC crash that killed 67 people. A WIRED review reveals 12 similar incidents of scapegoating trans people.

0

0

0

Ellis has filed a defamation suit against Matt Wallace, who she believes was the first one to connect her likeness to this rumor & incident. She wants something good to come out of this unfortunate incident & hopes to be the moderate trans voice that people can listen to.

1

1

1

The days after the disinformation storm weren’t easy for Ellis. Someone collected all her past & current social media accounts & listed them in a post with her legal name, nicknames & pictures. She received hateful messages & death threats on Facebook.

1

0

0

In December there were false claims that a Madison school shooter, who killed 2 people, was trans. Six months prior, a trans woman was wrongly identified as the person who tried to shoot Trump at an open-air rally. On X, Musk has been suggesting that trans people are vandalizing

1

0

0

@JoEllisReally isn't alone. Our review showed that since 2022, there have been a dozen such incidents where transgender people were falsely blamed for violent incidents, tragedies or mass school shootings.

2

0

0
