Lianhui Qin @ ICLR 2024

@Lianhuiq

Followers: 4,225

Following: 399

Media: 16

Statuses: 397

Incoming Assistant Professor at UCSD CSE. Currently postdoc at AI2 Mosaic. NLP, ML, AI. I’m recruiting PhD students.

Seattle

Joined October 2018


Pinned Tweet

🚀 PhD Opportunities in AI @ucsd_cse for Fall '24 🌞

I'm recruiting PhD students with a passion for Large Language Models (LLMs), reasoning, generation, and AI for science.

I'll be attending #NeurIPS2023 and #AAAI2024. Happy to catch up there. ☕️

#AIResearch #PhD #UCSD #LLMs

[Personal news]

📢 So excited to share that I’ve just graduated from @uwcse and will be joining @UCSanDiego @ucsd_cse 🌊☀️ as an Assistant Professor in Fall 2024. Meanwhile, I’m doing a postdoc at @allen_ai. I look forward to working with students and colleagues on NLP, ML, etc.


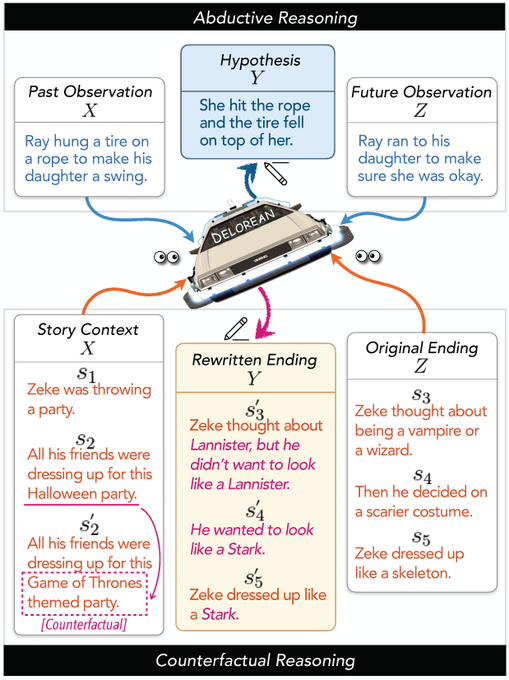

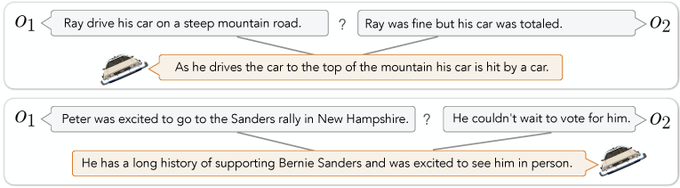

🤔 How can a pre-trained left-to-right LM do nonmonotonic reasoning that requires conditioning on a future constraint ⏲️?

Our #emnlp2020 paper introduces DELOREAN 🚘: an unsupervised backpropagation-based decoding strategy that considers both past context and future constraints.


🧑‍🔬 LLMs for complex chemistry reasoning! 🧪

Interestingly, we found that LLMs (GPT-4) already encode a lot of ⚗️ chemistry knowledge.

🤔 What is really missing is a structured process to elicit the right knowledge and use it to perform grounded reasoning.

A very…


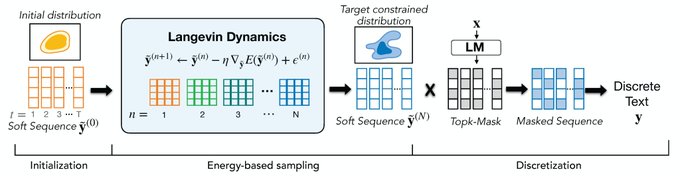

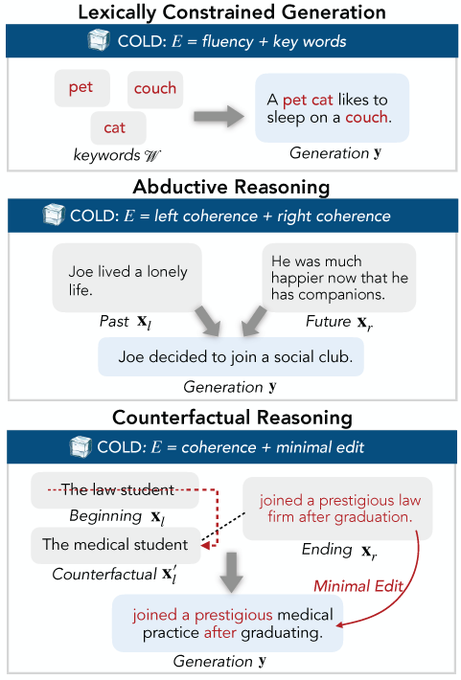

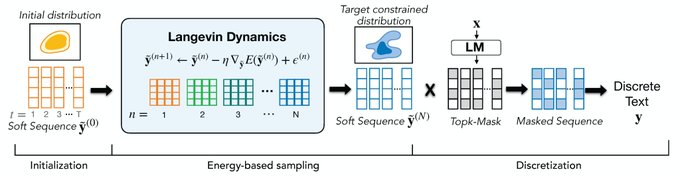

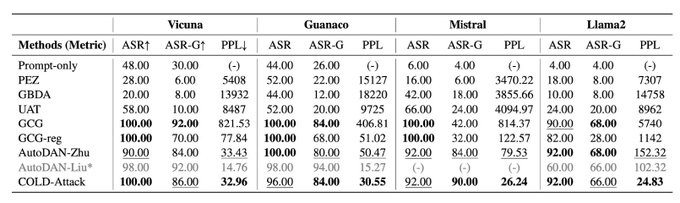

COLD decoding has been accepted to #NeurIPS2022!

It enables generating arbitrarily constrained text with pretrained LMs through continuous text approximation, energy-based modeling, and Langevin dynamics.

Check out the latest version

Code


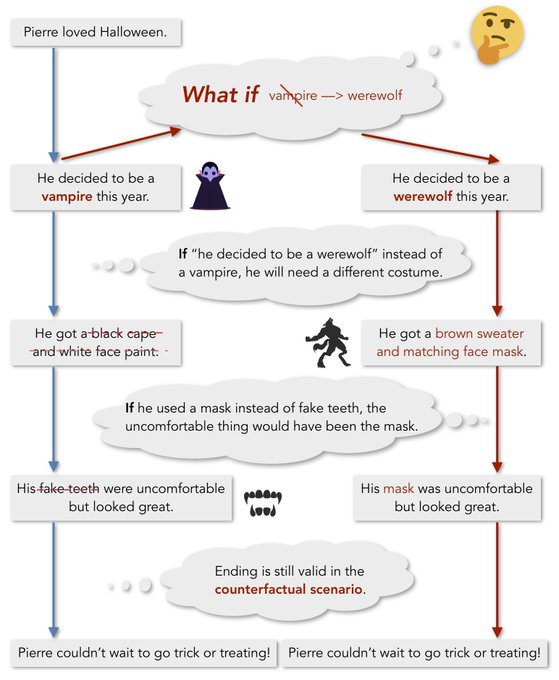

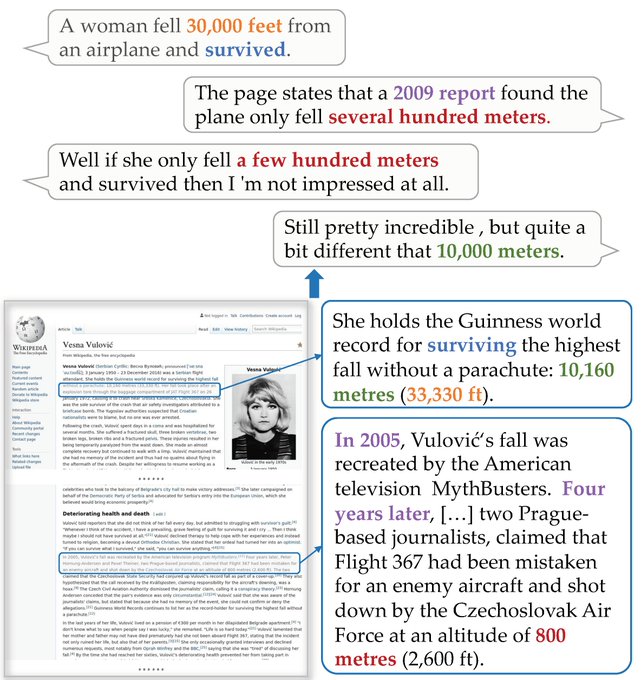

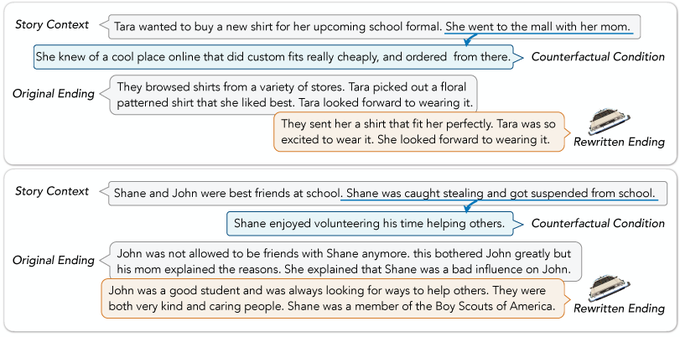

What if Harry Potter had been a Vampire?

Our @emnlp2019 paper, “Counterfactual Story Reasoning and Generation”, presents the TimeTravel dataset, which tests causal reasoning capabilities over natural language narratives. 1/2

Paper:

from @uwcse and @allen_ai


I’ll be at #ICML 🏝️ between the 26th and 30th and will give an invited talk on differentiable and structured text reasoning at the workshop on Sampling and Optimization in Discrete Space (SODS) on the 29th.

☕️🍻 Excited to meet old and new friends! Ping me if you’re around.


🚀 Join us at #AAAI2024 for an enlightening workshop on LLMs & Causality! 🏹

🔗 Website:

🗣️ Don't miss our stellar lineup of speakers! @yudapearl @MihaelaVDS @emrek @AndrewLampinen @osazuwa @guyvdb

#LLMs #Vancouver

LLMs and Causality?

We teamed up to give you the most recent perspectives.

🧵 (1/n)

#causaltwitter #causality #llm #machinelearning

Yours truly,

@matej_zecevic @amt_shrma @Lianhuiq @devendratweetin @AleksanderMolak @kerstingAIML


#emnlp2020 Check out 🚗 DELOREAN in our Back to the Future paper 🎥

Come say hi at Zoom Q&A S4: Nov 16, 17:00-18:00 PST / Nov 17, 1:00-2:00 UTC ⏳

Paper:

Video:

Code:

w/ @YejinChoinka @VeredShwartz @ABosselut

👇


Will drive to UCLA from UCSD today! So excited to meet old and new friends there! 🌊🏝️

#SoCalNLP2023 is this Friday!!! 🏝

Check out our schedule of invited speakers and accepted posters! 👉🏽


Super exciting news!!

Honored to receive a Best Paper award at NAACL 2022 for NeuroLogic A*esque Decoding, with an awesome team: @GXiming @PeterWestTM @liweijianglw @wittgen_ball @DanielKhashabi @Ronan_LeBras @Lianhuiq @YoungjaeYu3 @rown @nlpnoah @YejinChoinka!


So proud of @YejinChoinka! Also the BEST PhD advisor!!!

Someone once chased down @UW #UWAllen @allen_ai #MacFellow @YejinChoinka at a conference to tell her studying commonsense #AI was a “fool’s errand.” Years later, they sought her advice re: teaching a class on that very topic. ¯\_(ツ)_/¯ #NLProc #visionary


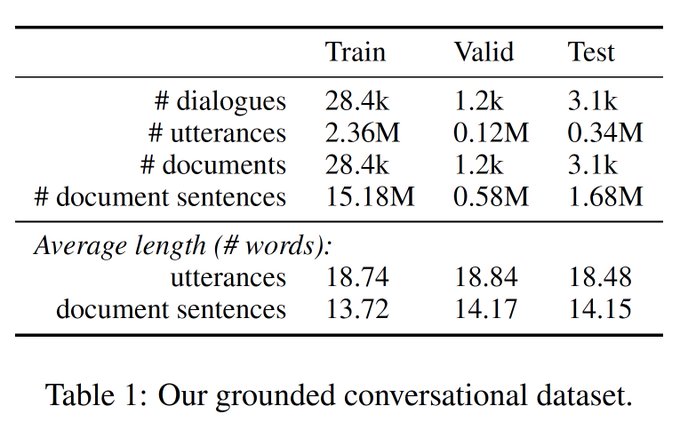

We introduce a new large conversation dataset grounded in external web pages (2.8M turns, 7.4M sentences of grounding). Joint work w/ my MSR mentors @JianfengGao0217 and Michel Galley, collaborators @chris_brockett, @AllenLao, Xiang Gao, and Bill Dolan, and my advisor @Yejin. 2/2


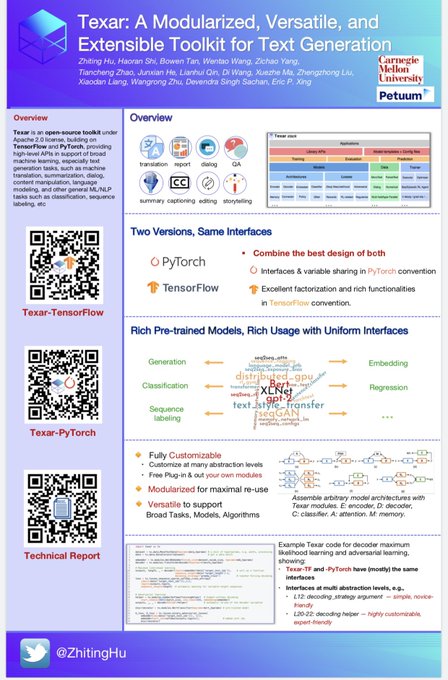

Feel free to stop by, say hi, and take a look at our demo poster (#07)! See you at 13:50-15:30 (Location: Basilica). @ACL2019_Italy @ZhitingHu


(Updated GitHub link) 🧐

📢 #emnlp2020 "Back to the Future: Unsupervised Backprop-based Decoding for Counterfactual and Abductive Commonsense Reasoning"

🚘 DELOREAN (DEcoding for nonmonotonic LOgical REAsoNing)

Paper:

GitHub:


Huge thanks to my advisor @YejinChoinka and to @LukeZettlemoyer @etzioni @JianfengGao0217 @ABosselut, Fei Xia, colleagues at @uwnlp, collaborators at @Microsoft @GoogleAI @Meta @allen_ai, and my family and friends!


Really cool work!! 👍

🤯 Machine Learning has many paradigms: (un/self)supervised, reinforcement, adversarial, knowledge-driven, active, online learning, etc.

Is there an underlying 'Standard Model' that unifies & generalizes this bewildering zoo?

Our @TheHDSR paper presents an attempt toward it. 1/


Code+Data:

Joint work w/ @ABosselut @universeinanegg @_csBhagav @eaclark07 @YejinChoinka 2/2 #emnlp2019


Can Twitter be a toolken?


Congrats Yejin!!!

We're thrilled to learn that @YejinChoinka has been selected as an ACL Fellow for 2022, a highly prestigious recognition of her extraordinary contributions to the field of computational linguistics. Congratulations Yejin!


@lilianweng Nice post! FYI, our recent #EMNLP2020 work proposes a gradient-based decoding method that steers GPT-2 to generate explanations and counterfactuals.


Interesting work on language models and world models


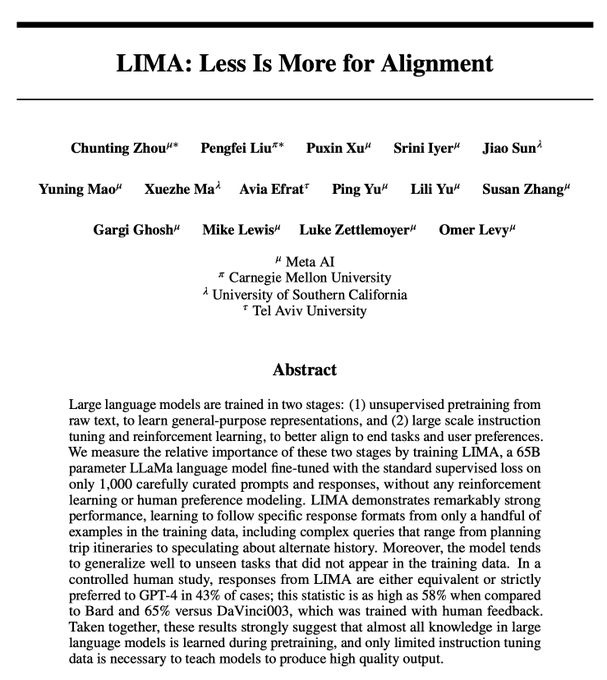

LIMA, such a cute name. ☺️


This is joint work w/ @YejinChoinka @VeredShwartz @PeterWestTM @_csBhagav @Ronan_LeBras @jdch00 @ABosselut 🙌👏👍 from @allen_ai @uwcse @ai2_mosaic


@followML_ Thanks for checking it out! Yes, this is our V1 on arXiv. You may want to check out the latest version here:


@ABosselut @uwcse @UCSanDiego @ucsd_cse @allen_ai Thank you Antoine!! Hope to see you in person in the future!


@VeredShwartz @faeze_brh @uwcse @UCSanDiego @ucsd_cse @allen_ai Thank you Vered! Your materials were very helpful!


Happening now at West 208+209

Come join the #NeurIPS2019 workshop on Learning with Rich Experience. Note the location: West 208+209.

Look fwd to the super exciting talks by @RaiaHadsell @tommmitchell JeffBilmes @pabbeel @YejinChoinka & TomGriffiths, and the contributed presentations:


@universeinanegg @uwcse @UCSanDiego @ucsd_cse @allen_ai Thank you Ari!!! We should catch up in person sometime at conferences!!


@_kumarde @rajammanabrolu @uwcse @UCSanDiego @ucsd_cse @allen_ai Thank you Deepak! Looking forward to working with you!!
