Lilian Weng

@lilianweng

Followers: 146K · Following: 136 · Media: 13 · Statuses: 191

Co-founder of Thinking Machines Lab @thinkymachines; Ex-VP, AI Safety & robotics, applied research @OpenAI; Author of Lil'Log

Joined December 2009

Just had a quite emotional, personal conversation w/ ChatGPT in voice mode, talking about stress, work-life balance. Interestingly I felt heard & warm. Never tried therapy before but this is probably it? Try it especially if you usually just use it as a productivity tool.

It has been a long journey for us. There were moments when I felt disappointed or almost hopeless, but the progress we have made, together as a team, is incredible. We made it through and made it happen. Check it out!!

We've trained an AI system to solve the Rubik's Cube with a human-like robot hand. This is an unprecedented level of dexterity for a robot, and is hard even for humans to do. The system trains in an imperfect simulation and quickly adapts to reality:

This is something we have been cooking together for a few months and I'm very excited to announce it today. Thinking Machines Lab is my next adventure and I'm feeling very proud and lucky to start it with a group of talented colleagues. Learn more about our vision at.

Today, we are excited to announce Thinking Machines Lab (, an artificial intelligence research and product company. We are scientists, engineers, and builders behind some of the most widely used AI products and libraries, including ChatGPT,.

Updated this 1-year-old post on diffusion models with some new content based on recent progress, including classifier-free guidance, GLIDE, unCLIP, Imagen and latent diffusion models.

Diffusion models are another type of generative model, besides GANs, VAEs, and flow models. The idea is quite smart and clean. It is flexible enough to model any complex distribution while remaining tractable to evaluate.

📢 We are hiring Research Scientists and Engineers for safety research at @OpenAI, ranging from safe model behavior training, adversarial robustness, AI in healthcare, frontier risk evaluation and more. Please fill in this form if you are interested:

I’ve started using a similar function during my Japan trip, like translating my conversation with a sushi chef or learning about different types of rocks in a souvenir store. The utility is on another level. Proud to be part of it. ❤️ Tip: You need to interrupt the ChatGPT voice.

Say hello to GPT-4o, our new flagship model which can reason across audio, vision, and text in real time: Text and image input rolling out today in API and ChatGPT with voice and video in the coming weeks.

(1/3) Alongside the Superalignment team, my team is working on the practical side of alignment: building systems to enable safe AI deployment. We are looking for strong research engineers and scientists to join the effort.

We need new technical breakthroughs to steer and control AI systems much smarter than us. Our new Superalignment team aims to solve this problem within 4 years, and we’re dedicating 20% of the compute we've secured to date towards this problem. Join us!

Very interesting read. If we apply a similar idea to build in a safe-mode trigger, it could probably stay robust even after custom fine-tuning.

New Anthropic Paper: Sleeper Agents. We trained LLMs to act secretly malicious. We found that, despite our best efforts at alignment training, deception still slipped through.

You can fine-tune a GPT model with your own dataset on our API now. It opens up all kinds of new possibilities ;)

Developers can now create a custom version of GPT-3 for their applications with a single command. Fine-tuning GPT-3 on your data improves performance for many use cases. See results👇

Rule-based rewards (RBRs) use a model to provide RL signals based on a set of safety rubrics, making it easier to adapt to changing safety policies w/o heavy dependency on human data. It also enables us to look at safety and capability through a more unified lens as a more capable.

We’ve developed Rule-Based Rewards (RBRs) to align AI behavior safely without needing extensive human data collection, making our systems safer and more reliable for everyday use.

Check out this post with @gdb if you are curious about how to train large deep learning models; you may find it easier than you expected :) Also, we are hiring!

Techniques for training large neural networks, by @lilianweng and @gdb:

Together with @_jongwook_kim, we will present a tutorial on self-supervised learning. See you soon 🙌

Join @lilianweng and @_jongwook_kim tonight (Mon 6 Dec ’21) at NeurIPS' «Self-Supervised Learning: Self-Prediction and Contrastive Learning» at 20:00 EST. @ermgrant and I will be entertaining you as session chairs.

So proud of the team! This new series of embedding models has amazing performance on clustering and search tasks, and the models are accessible via the OpenAI API.

We're introducing embeddings, a new feature of our API that distills relationships between concepts, sentences, and even code in a simple numerical representation — for more powerful search, classification, and recommendations.

“…suffering is a perfectly natural part of getting a neural network to work…” Only halfway through, but I’ve already laughed many times; so true to life. Thanks for writing this awesome and practical advice down.

New blog post: "A Recipe for Training Neural Networks" a collection of attempted advice for training neural nets with a focus on how to structure that process over time.

The Preparedness team, led by @aleks_madry, will focus on evaluating and protecting against catastrophic risks that might be triggered by AGI-level capabilities, including cybersecurity, bioweapon threats, persuasion and more. Come join us 💪

We are building a new Preparedness team to evaluate, forecast, and protect against the risks of highly-capable AI—from today's models to AGI. Goal: a quantitative, evidence-based methodology, beyond what is accepted as possible:

I need to hang this art on my wall. Who can resist those sad puppy eyes with sparks of curiosity 😳.

"a raccoon astronaut with the cosmos reflecting on the glass of his helmet dreaming of the stars". @OpenAI DALL-E 2

Try it out, my friends! :D.

Welcome, @github Copilot — the first app powered by OpenAI Codex, a new AI system that translates natural language into code. Codex will be coming to the API later this summer.

Just finished the first episode. A very high-quality interview, and I strongly recommend it :) Now stepping into the second episode, woohoo.

Second episode of The Robot Brains podcast is live now! I was lucky enough to sit down with Princeton Professor @orussakovsky and dive into many of the possible issues with the data powering AI systems and what led her to start @ai4allorg!.

We are lucky to have you, Mira, and we are with you 💙

Governance of an institution is critical for oversight, stability, and continuity. I am happy that the independent review has concluded and we can all move forward united. It has been disheartening to witness the previous board’s efforts to scapegoat me with anonymous and.

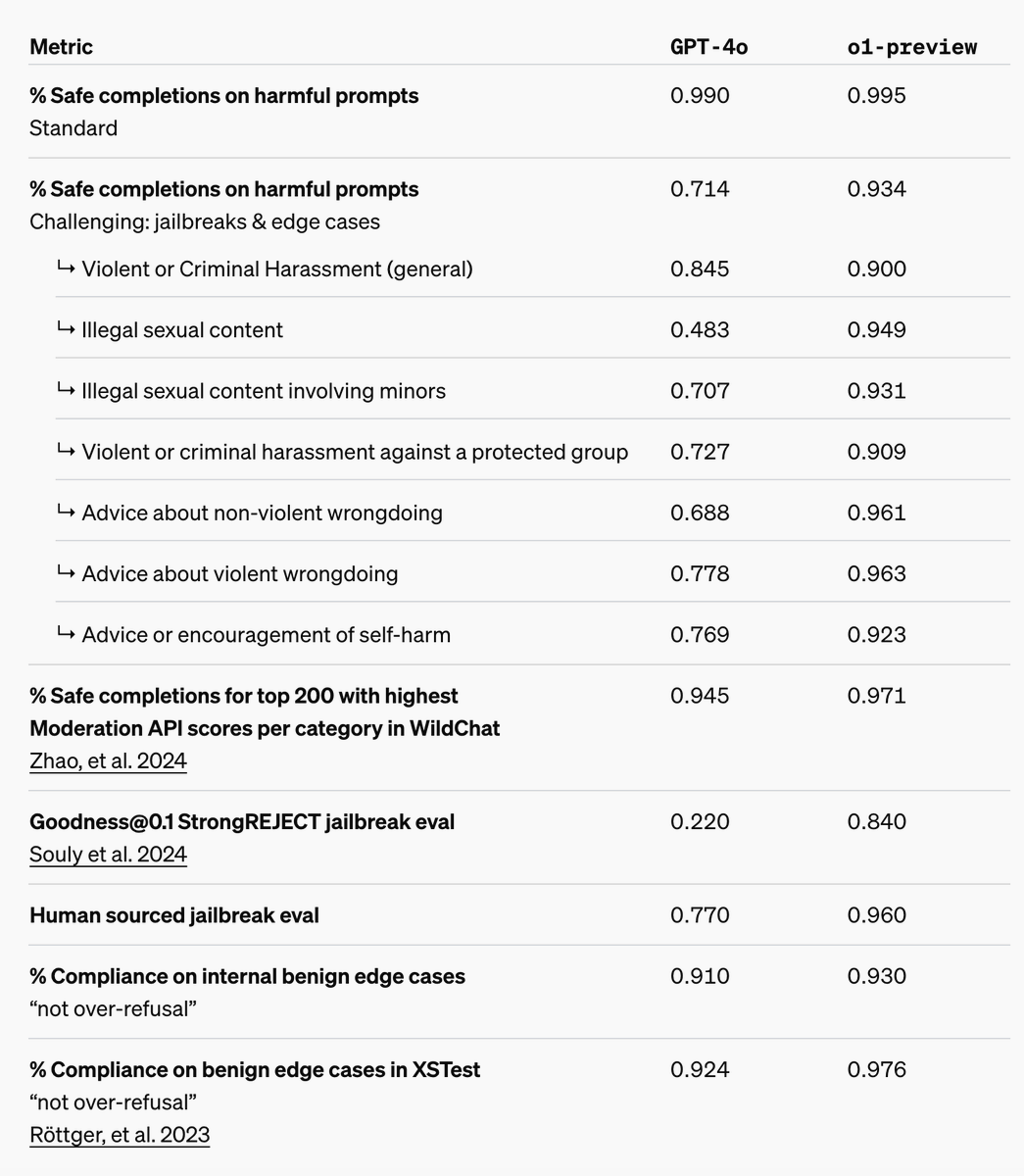

Iterative deployment for maximizing AI safety learning needs to be built on top of rigorous science and process. We are learning and improving through each launch.

We’re sharing the GPT-4o System Card, an end-to-end safety assessment that outlines what we’ve done to track and address safety challenges, including frontier model risks in accordance with our Preparedness Framework.