Prithviraj (Raj) Ammanabrolu

@rajammanabrolu

Followers

8K

Following

16K

Media

439

Statuses

3K

Reinforcement Learning and Language. Assistant Prof @UCSanDiego. Research Scientist @Nvidia.

San Diego, CA

Joined April 2019

My entire PEARLS Lab, and many NVIDIA colleagues, will be at #neurips2025 in SD to chat about our latest. Some papers in the conf are already kinda outdated so just reach out to @bosungkim17 for all things VLA, embodied AI, and long context memory https://t.co/bYi7pNyreL

I've done a few versions of this talk but this is the first that's been recorded publicly, thanks to @IVADO_Qc! A good overview of things my lab has been up to in the last year or so, at least in balancing safety/capabilities, esp re embodied human-AI collab

1

3

49

Fav Neurips quote: "XX [popular repo] is a psyop to slow down American open source"

3

0

33

Sorry y'all if I miss / don't respond to your emails and DMs to meet during Neurips. I'm down to booking slots in 15 min increments and am v v overloaded

0

0

23

PSA there aren't many better than average restaurants or boba places anywhere near walking distance to the Neurips venue (there are some good bars tho). Gotta drive at least like 5-10 min

5

0

22

There are clearly (at least) two different Neurips happening. Wonder how much interaction is actually happening between them

5

1

84

People are really underestimating how annoying TPUs are to use if you're outside the Google ecosystem. Esp for anything RL related

3

3

32

As a former conference result worrier, my advice is to submit rebuttal and go touch sand. Maybe get some tea. It's not really in your control anymore

2

2

53

This was... quite grounded actually. Very different tone and rhetoric from a couple of years ago. "We are talking about systems that we do not know how to build."

The @ilyasut episode
0:00:00 – Explaining model jaggedness
0:09:39 – Emotions and value functions
0:18:49 – What are we scaling?
0:25:13 – Why humans generalize better than models
0:35:45 – Straight-shotting superintelligence
0:46:47 – SSI’s model will learn from deployment

1

0

8

I feel pretty lucky to have had mentors throughout my career who would straight up tell me a piece of work was not high quality enough. If I didn't spend the time improving it, I wouldn't be anywhere close to where I am today. A mid paper is prob worse than no paper!

1

2

73

PSA to more junior ppl but "too many papers" comes up as a reason to reject for both industry and academic hiring more often than you'd think

A colleague brought this miraculous situation to my attention. @NeurIPSConf is there an upper limit on the number of workshop papers that a single person can author? Having 60 (sixty) papers in one conference? How is this humanly possible? https://t.co/XJBvC9Exfd

#neurips2025

4

12

230

PT checkpoints and mid training data? A base model that's actually a base model? Incredible!! Man, the things I'm most excited for in OSS releases have really changed over the years. Congrats y'all

This release has SO MUCH
• New pretrain corpus, new midtrain data, 380B+ long context tokens
• 7B & 32B, Base, Instruct, Think, RL Zero
• Close to Qwen 3 performance, but fully open!!

0

0

20

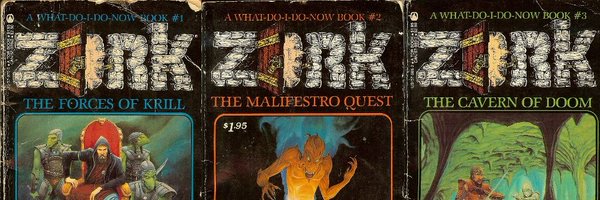

"Zork has always been more than a game. It is a reminder that imagination and engineering can outlast generations of hardware and players." Zork source now properly archived + MIT licensed!

1

1

4

🚨Hiring alert for a research position at NVIDIA! Our Deep Learning Efficiency Research team is expanding in LLM efficiency. If any of these directions resonate with your interests, we’d love to hear from you 👇
1️⃣ Diffusion LLMs with an efficiency focus
2️⃣ Efficient Agentic AI

4

33

240

Y'all. Vague poasting is not a hard requirement before big announcements.

9

0

43

I'm a bit burnt out by OSS RL LLM frameworks. Regardless of the popularity, I have never been able to use one off the shelf. Always have to write myself or fork + modify

4

3

49

Reviewers asking for comparisons to work published after conf submission is prob because the Deep Research esque things haven't been prompted to filter by time or don't work well with it. If you're gonna use an LLM to generate your review at least do it competently smh

2

0

4

Friends coming to #neurips2025 soon have been asking me what I normally do for fun in SD. So I thought I'd share my guide:

2

0

29

guy who thinks the AI bubble will burst because we're getting ASI soon

0

0

3