M. Alex O. Vasilescu

@AlexTensor

Followers: 2K · Following: 16K · Media: 951 · Statuses: 6K

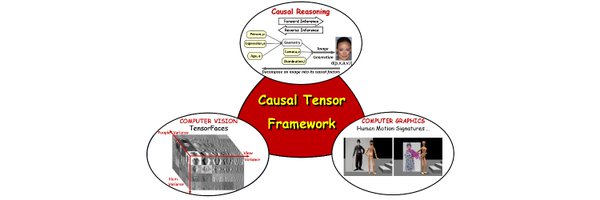

Developing #causal #tensor framework -TensorFaces, Human Motion Signatures | Alumna @MIT, @UofT | #womenwhocode

Los Angeles and New York

Joined April 2009

@yudapearl #DeepLearning or #tensor (multilinear) factor analysis may perform either causal inference or simple regression. For causal inference, hypothesis-driven experimental design & model specification trump analysis #CausalTwitter #MachineLearning

https://t.co/yMbNG5HL7R

1

8

20

WACV'26 (R2,reg): 19 days. WACV'26 (R2,paper): 26 days. ICLR'26 (abs): 26 days. ICLR'26 (paper): 31 days. ECCV'26: 193 days.

0

3

12

@DavidSKrueger The answer to this question is simple: They aren't building superintelligence - they are talking up their own book to gin up investor excitement, and keep the money flowing.

1

1

3

@DavidSKrueger New Theory on The Why {Human/DeepSeek} #AGI #ASI - The Serial Killer Playbook of BigAI’s Elite 1. Revelation (The Controlled Disclosure of Horror) • Like killers who leave cryptic clues or taunting messages, AI leaders selectively leak terrifying capabilities—but only enough to

0

1

1

@DavidSKrueger Many people view the fear of 'superintelligence' as science fiction, and existential risk rhetoric as a distraction from real, observable problems like biased models, hallucinations, and environmental cost. However, since research models were adopted by industry before they

0

1

0

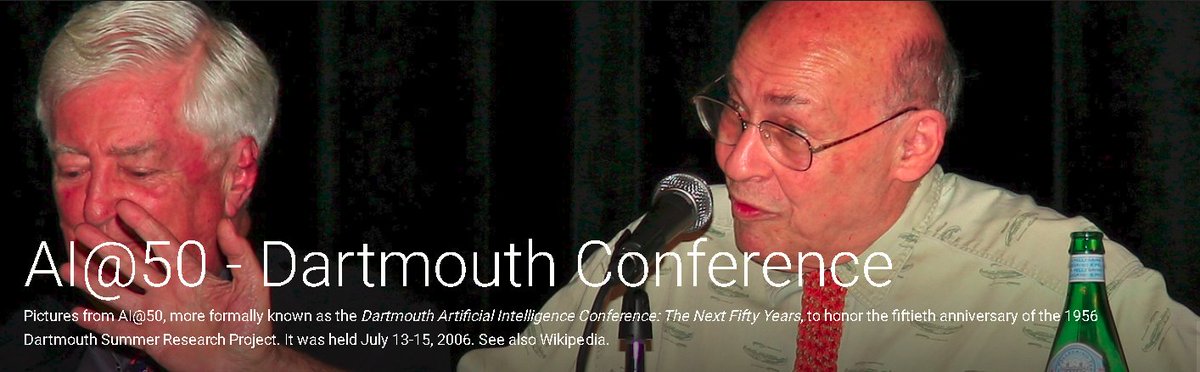

You asked about how my predictions are holding up. My last slide at Dartmouth '06 was 4 immediate challenges: 1. Visual object recognition capabilities of a 2 year old child, 2. Manual dexterity of a 6 yr old, 3. Language capabilities of a 4 yr old, 4. social sophistication of a

Held in July 2006, AI@50 was a look at the next 50 years in #AI. --- Dartmouth Artificial Intelligence Conference: The Next Fifty Years @rodneyabrooks @erichorvitz @geoffreyhinton - How are your AI predictions holding up? AI History: https://t.co/N54dNJUO6i AI@50 article:

4

5

25

This solo founder built an open-source competitor to Perplexity with no team, no funding, and no permission. What started as a weekend project now powers over 1M searches, with 60K+ monthly users. This is the story of @sciraai and how Zaid Mukaddam built what others wouldn’t.

77

204

3K

You don’t need a PhD to be a great AI researcher, as long as you’re standing on the shoulders of 100 who have one.

You don’t need a PhD to be a great AI researcher. Even @OpenAI’s Chief Research Officer doesn’t have a PhD.

55

149

2K

"Once an irrational idea is embedded in a culture, it creates psychological pressure on any member of that culture not to allow themselves to question that idea: people whose respect you psychologically depend on will despise you if you do. So an anti-rational meme emerges."

10

34

187

One could hardly believe how many different names people have given to the same thing in the practice of “artificial intelligence” over the past decade, or how many times they have reinvented the same thing, often in a lesser form! Deep networks, for example, are largely

6

4

30

The #Turing test was designed to test for artificial intelligence, but it ended up testing for human gullibility.

0

0

1

I don't wanna say 'I told you so', but I told you so.

Why can AIs code for 1h but not 10h? A simple explanation: if there's a 10% chance of error per 10min step (say), the success rate is: 1h: 53% 4h: 8% 10h: 0.2% @tobyordoxford has tested this 'constant error rate' theory and shown it's a good fit for the data chance of

295

554

6K
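
The constant-error-rate arithmetic in the quoted post can be checked with a short sketch. It assumes, as the post does, a 10% chance of error per 10-minute step, and additionally assumes that steps fail independently and that a single error sinks the whole task. The function name `success_rate` and its parameters are illustrative, not taken from the post or from any published analysis.

```python
def success_rate(hours, step_minutes=10, error_per_step=0.10):
    """Probability of finishing a task with no errors.

    Assumes each step fails independently with the same probability
    and that one failed step means the whole task fails.
    """
    steps = round(hours * 60 / step_minutes)  # number of 10-minute steps
    return (1 - error_per_step) ** steps      # all steps must succeed


if __name__ == "__main__":
    for hours in (1, 4, 10):
        print(f"{hours}h: {success_rate(hours):.2%}")
```

Under these assumptions the success rate comes out at roughly 53% for 1 hour (6 steps), 8% for 4 hours (24 steps), and 0.18% for 10 hours (60 steps), so the exponential decay with task length is steep even at a modest per-step error rate.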

⚠️ Everybody who uses LLMs should read this thread: “LLM users consistently underperformed” on multiple cognitive and neural measures.

It’s a hefty 206-page research paper, and the findings are concerning. "LLM users consistently underperformed at neural, linguistic, and behavioral levels" This study finds LLM dependence weakens the writer’s own neural and linguistic fingerprints. 🤔🤔 Relying only on EEG,

64

280

1K

AAAI'26 (abs): 40 days. WACV'26 (R1,reg): 26 days. WACV'26 (R1,paper): 33 days. AAAI'26 (paper): 47 days. 3DV'26: 63 days. WACV'26 (R2,reg): 89 days. WACV'26 (R2,paper): 96 days.

0

5

32

LLMs are massive cheating machines. All the intelligence comes from the millions of intelligent human beings that wrote the texts that LLM practitioners scrape from the internet. There is no new science of intelligence in LLMs. Linguists have known for a long time that

I always found it puzzling how language models learn so much from next-token prediction, while video models learn so little from next frame prediction. Maybe it's because LLMs are actually brain scanners in disguise. Idle musings in my new blog post:

82

157

1K

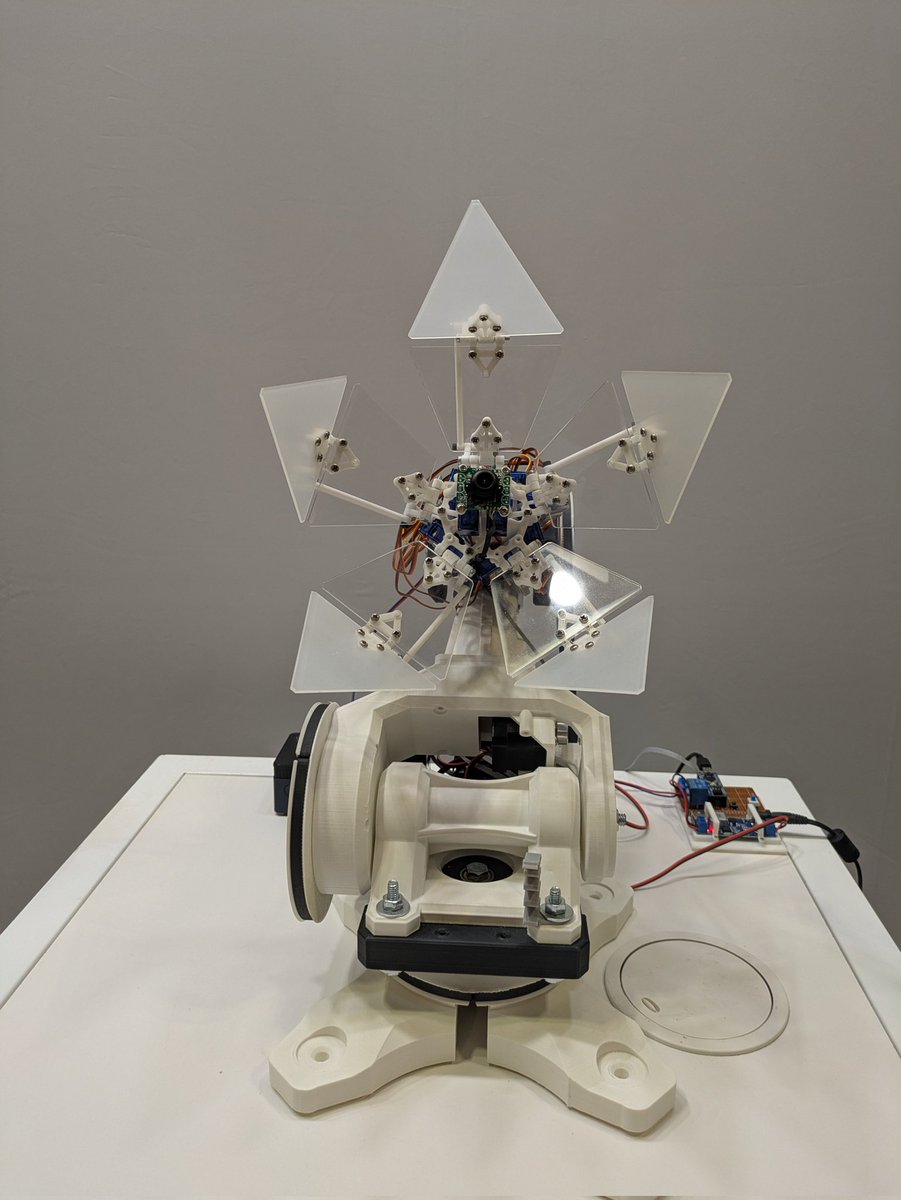

Last chance to check out the @CVPR AI Art Gallery in Exhibit Hall A1 🤖🖼️ Open until 3pm #CVPR2025 #CVPRAIart

0

5

15

So excited for my Summer'25 Lineup 😤 Begins with the YC AI Startup School, in SF the next 5 days Then, starting as a Research Scientist Intern at Meta London for the next 4 months Finally, Presenting 5 projects at ICML in Vancouver Looking forward to meeting ppl, hmu!

8

4

153