Shashwat Goel

@ShashwatGoel7

Followers

2K

Following

7K

Media

171

Statuses

1K

Scaling supervision for AI on evals that matter. 👨‍🍳 Forecasting, Long Horizon, Synth Data for RL @ELLISInst_Tue @MPI_IS Prev: @AIatMeta, MATS, Quant

Tübingen, Germany

Joined June 2020

New blogpost: Why I think automated research is the means, not just the end, for training superintelligent AI systems. In pointing models at scientific discovery, we will have to achieve the capabilities today's LLMs lack: - long-horizon planning - continual adaptation -

3

4

63

An interesting research problem that might be solvable at big labs / @thinkymachines / @wandb: with enough data on training runs, can we make universal recommendations of good hyperparams, using stats of the dataset, loss fn, activations, size, etc.? Would save so much time, compute

12

3

162

The best poster awards go to:
1. Go-Browse: Training Web Agents with Structured Exploration. Apurva Gandhi, Graham Neubig
2. Scaling Open-Ended Reasoning to Predict the Future. Nikhil Chandak, Shashwat Goel, Ameya Prabhu, Moritz Hardt, Jonas Geiping
🎉 Congrats!

2

8

18

Come to the Scaling Environments for Agents (SEA) workshop at NeurIPS today for a preview of our upcoming work, presented by @jonasgeiping, on Open-Ended Forecasting of World Events, where we show how to go beyond prediction markets to scale forecasting training data for LLMs!

🚨 Check out an exclusive preview of our soon-to-be-released project on Training LLMs for open-ended forecasting, with RL on synthetic data ;) Tomorrow at the NeurIPS Scaling Environments for Agents (SEA) workshop (12:20-13:20, 15:50-16:50, Upper Level Room 23ABC, @jonasgeiping)

1

2

16

This workshop is the one part of not going to NeurIPS that's causing me the most FOMO. If you believe in the second half of AI and the era of experience, the papers being presented here are some of the most important. Kudos to @guohao_li and team for organizing!

🚀 SEA Workshop is going LIVE TOMORROW! Join us at NeurIPS 2025 for a full day diving into the Scaling Environments for Agents featuring an incredible lineup of speakers and panelists: @egrefen @Mike_A_Merrill @mialon_gregoire @deepaknathani11 @jl_marino @syz0x1 @qhwang3

1

5

40

You can wait for the academic compute crisis to get solved 🥱 or... Just come to Tübingen for the: 1) Compute 2) Talent Density 3) Aesthetics, in research, workspaces, and the city as a whole (Job) Markets are not efficient ;) @FrancoisChauba1's plot extended by

3

3

63

Happening now!

If you’re attending #NeurIPS2025 in San Diego 🇺🇸, check out @jonasgeiping presenting our work at the @mti_neurips workshop on Dec 6th!

1

6

59

There's a certain beauty to learning RL via RL. I never really did a proper course on RL. But this year, I spent a lot of time thinking about and applying RL to LLMs. Some RL folks told me I should just watch an intro course. But I'm glad I didn't! That would have been

3

1

54

Why is "research" over-indexed on novelty? To hammer a nail, why does it matter whether I used a good ol' hammer or one with a pink-panda-riding-a-dragon design? What should matter is hammering important nails, not designing fancier hammers.

4

0

10

Was wondering whether GRPO-style RL is "only a few tokens deep"... Intuitively, we take a next-token predictor and slightly upweight some tokens, s.t. NTP leads to success. Found this interesting ICLR sub preliminarily indicating this hypothesis is true: https://t.co/28Gar1xNhJ

2

9

95

Followup thought, like "write for LLMs" - @gwern, What does this imply for building new technology? If there's not enough open-source data for it, and we don't have "documentation-efficient" intelligence soon, is open-source use at scale a huge moat for incumbent tech?

0

0

0

How are LLMs trained to become better at the JAX "way of doing things*"? Presumably the best JAX code is internal to Google. But even Google wouldn't want to train public-facing models on internal code? *I mean what's not learnable by "translating" PyTorch code, or pure RL...

2

0

4

I've been saying mechanistic interpretability is misguided from the start. Glad people are coming around many years later.

The GDM mechanistic interpretability team has pivoted to a new approach: pragmatic interpretability Our post details how we now do research, why now is the time to pivot, why we expect this way to have more impact and why we think other interp researchers should follow suit

12

18

374

"Human" performance is not a monolith: always ask which human
"LLM" performance is not a monolith: always ask which LLM

0

0

6

The best thing about Gemini 3 code is there's no try-except style slop. The code it produces is concise, and correct. Can just hit accept without worrying as much about bloat.

0

0

18

Want to understand how to RL fine-tune your LLM without labels? I'll be presenting Compute as Teacher (CaT 🐈) as a spotlight ⭐️ poster at the Efficient Reasoning workshop at NeurIPS ✈️ next week. If you're around, come and chat about RL, LLMs, and brain decoding. #NeurIPS2025

🚨New Meta Superintelligence Labs Paper🚨 What do we do when we don’t have reference answers for RL? What if annotations are too expensive or unknown? Compute as Teacher (CaT🐈) turns inference compute into a post-training supervision signal. CaT improves up to 30% even on

2

13

75

There's no shortcut to removing shortcuts in Deep Learning.

🚀 New paper! https://t.co/qWGZ4LCAr1 Recently, Cambrian-S released models & two benchmarks (VSR & VSC) for “spatial supersensing” in video! We found: 1️⃣ Simple no-frame baseline (NoSense) ~perfectly solves VSR! 2️⃣ Tiny sanity check collapses Cambrian-S perf to 0% on VSC! 🧵👇

0

2

18

Thanks to everyone who took the time to leave some advice! There's no single answer, but lots of good principles. So I've copied the question and thread link to a Substack post. Hopefully this makes it easier for people to find in the future :) https://t.co/jVvbYeSCNv

How do PhD students / researchers manage the sinking feeling of having a growing bucketlist (25+ rn) of interesting ideas/directions but not enough time to try any of them? How do you select when multiple seem promising?

0

3

20

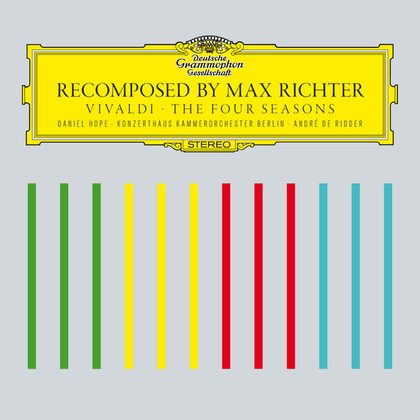

The most impactful thing I took away from a podcast this year might just be @sama's reco for this playlist: https://t.co/VJzOH7Nk20 Massive quality of life/work upgrade, esp on the worst (or most hard to focus) days.

open.spotify.com

Max Richter · album · 2014 · 18 songs

0

0

2