Daniel Zhao

@astradzhao

UCSD MSCS | prev CS & Math at UC Berkeley | applying for PhDs in ML research

Joined June 2024

Just pushed a notebook with an easy model training / generation example for MusicRFM!

github.com

Open-source code for our paper, Steering Autoregressive Music Generation with Recursive Feature Machines (Zhao et al., 2025), aka MusicRFM - astradzhao/music-rfm

@astradzhao This seems awesome, Daniel! Super practical for real-world use cases and experimentation. Maybe worth creating a Colab demo? Would also love to see this applied to other models, as you mentioned.

Daniel Zhao, Daniel Beaglehole, Taylor Berg-Kirkpatrick, Julian McAuley, Zachary Novack, "Steering Autoregressive Music Generation with Recursive Feature Machines,"

arxiv.org

Controllable music generation remains a significant challenge, with existing methods often requiring model retraining or introducing audible artifacts. We introduce MusicRFM, a framework that...

MusicRFM is out! Led by @astradzhao (he's looking for PhDs now, hire him!), we introduce time-varying control over music-theoretic features in pretrained AR music models (MusicGen), all w/o finetuning the model! I've been really excited about this project; some more thoughts 🧵

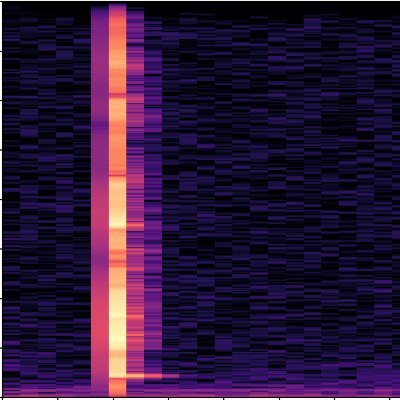

We found a way to steer AI music gen toward specific notes, chords, and tempos, without retraining the model or significantly sacrificing audio quality! Introducing MusicRFM 🎵 Paper: https://t.co/oZciYbgB9P Audio: https://t.co/FQ1W8k1LZh Code: https://t.co/drnE1XGcFC (1/5)

We hope to extend this framework to real-time models like MagentaRT in the future, and we hope you'll play with our code to make your own cool music creations! Huge thanks to the team @zacknovack @dbeagleholeCS @BergKirkpatrick! https://t.co/oZciYbgB9P (5/5)

The best part is that there is no model retraining or gradient descent involved: just lightweight probe training (<20 min per concept) that works at inference time with frozen models. So it's super practical for rapid prototyping 🚀 and could even extend to live DJing 👀 (4/5)

We can even do cool stuff like multi-directional steering and time-varying control (like bringing in one note while fading out another), all without sacrificing generation quality or prompt adherence. You can check all of these out on our demo page: https://t.co/FQ1W8k1LZh (3/5)
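The time-varying control described in (3/5), bringing one note in while another fades out, can be pictured as a per-step weighting of two steering directions added to the model's hidden state. The Python sketch below is a minimal illustration under stated assumptions: the linear crossfade schedule, the strength value, and the helper names `crossfade_weights` and `steer` are hypothetical and not taken from the MusicRFM code, and the two directions are toy unit vectors rather than learned concept directions.

```python
from typing import List, Tuple

Vector = List[float]


def crossfade_weights(step: int, total_steps: int) -> Tuple[float, float]:
    """Hypothetical linear schedule: concept A fades out while concept B fades in."""
    if total_steps < 2:
        return (0.0, 1.0)  # a single step jumps straight to concept B
    t = step / (total_steps - 1)  # 0.0 at the first step, 1.0 at the last
    return (1.0 - t, t)


def steer(hidden: Vector, directions: List[Vector], weights: List[float], strength: float) -> Vector:
    """Add a weighted sum of concept directions to one hidden-state vector."""
    out = list(hidden)
    for direction, weight in zip(directions, weights):
        for i, component in enumerate(direction):
            out[i] += strength * weight * component
    return out


# Toy 4-dim setup; v_a and v_b stand in for two learned "note" directions (hypothetical).
v_a = [1.0, 0.0, 0.0, 0.0]
v_b = [0.0, 1.0, 0.0, 0.0]
total_steps = 5

trajectory = []
for step in range(total_steps):
    h = [0.0, 0.0, 0.0, 0.0]  # placeholder for the frozen model's hidden state at this step
    w_a, w_b = crossfade_weights(step, total_steps)
    trajectory.append(steer(h, [v_a, v_b], [w_a, w_b], strength=2.0))

for row in trajectory:
    print(row)
```

A linear ramp is only the simplest choice; in this sketch, any schedule that maps a generation step to per-concept weights (a step function, an ease-in curve) plugs into `steer` the same way.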

We train lightweight probes based on Recursive Feature Machines to discover "concept directions" in MusicGen's hidden states, learning the gradients needed to shift a generation towards a musical concept. ↗️ Then we just inject those directions during inference! (2/5)
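As a rough picture of the "inject those directions during inference" step in (2/5), the Python sketch below adds a scaled unit direction to a hidden-state vector and leaves the model's weights untouched. It is an assumption-laden stand-in, not the MusicRFM method: the direction here comes from a plain difference of class means instead of a Recursive Feature Machine probe, the activations are tiny toy vectors rather than MusicGen hidden states, and the names `concept_direction`, `inject`, and `project` are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]


def mean(vectors: Sequence[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def unit(v: Vector) -> Vector:
    """Scale v to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def concept_direction(with_concept: Sequence[Vector], without_concept: Sequence[Vector]) -> Vector:
    """Difference-of-means direction (a simple stand-in, NOT the paper's RFM probe)."""
    mu_pos = mean(with_concept)
    mu_neg = mean(without_concept)
    return unit([p - q for p, q in zip(mu_pos, mu_neg)])


def inject(hidden: Vector, direction: Vector, strength: float) -> Vector:
    """Additive steering at inference time: h' = h + strength * v."""
    return [h + strength * v for h, v in zip(hidden, direction)]


def project(hidden: Vector, direction: Vector) -> float:
    """Component of a hidden state along a direction (dot product)."""
    return sum(h * v for h, v in zip(hidden, direction))


# Toy 3-dim "activations"; the concept lives in coordinate 0 (hypothetical data).
positives = [[3.0, 1.0, 0.0], [1.0, -1.0, 0.0]]
negatives = [[0.0, 1.0, 0.0], [0.0, -1.0, 0.0]]
direction = concept_direction(positives, negatives)

h = [0.5, 0.5, 0.5]  # stand-in for one hidden state during generation
steered = inject(h, direction, strength=4.0)
print(direction, steered, project(steered, direction) - project(h, direction))
# prints: [1.0, 0.0, 0.0] [4.5, 0.5, 0.5] 4.0
```

Swapping in a trained probe would change only how `direction` is produced; in this sketch the injection step itself is a single vector addition, so the model never needs new weights.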

Suno + Veo 3 generate highly similar versions of popular songs purely based on *phonetically* similar gibberish lyrics?!?! Presenting Bob’s Confetti: Phonetic Memorization Attacks in Music and Video Generation 🔊: https://t.co/Rztf9TNhI7 📖: https://t.co/H7BgnUkCvD 🧵1/n

🔥 Pokémon Red is becoming a go-to benchmark for testing advanced AIs such as Gemini. But is Pokémon Red really a good eval? We study this problem and identify three issues: 1️⃣ Navigation tasks are too hard. 2️⃣ Combat control is too simple. 3️⃣ Raising a strong Pokémon team is

Releasing Stable Audio Open Small! 75ms GPU latency! 7s *mobile* CPU latency! How? w/ Adversarial Relativistic Contrastive (ARC) Post-Training! 📘: https://t.co/GaKbWZTrUF 🥁: https://t.co/iE5etj5msa 🤗: https://t.co/0PjcvEbeiZ Here’s how we made the fastest TTA out there 🧵

Announcing FastVideo V1, a unified framework for accelerating video generation. FastVideo V1 offers:
- A simple, consistent Python API
- State-of-the-art model performance optimizations
- Optimized implementations of popular models
Blog: https://t.co/0lFBmrrwYN

Let me tell a real story of my own with @nvidia. Back in 2014, I was a wide-eyed first-year PhD student at CMU in @ericxing's lab, trying to train AlexNet on CPU (don’t ask why). I had zero access to GPUs. NVIDIA wasn’t yet "THE NVIDIA" we know today—no DGXs, no trillion-dollar

We are beyond honored and thrilled to welcome the amazing new @nvidia DGX B200 💚 at @HDSIUCSD @haoailab. This generous gift from @nvidia is an incredible recognition and an opportunity for the UCSD MLSys community and @haoailab to push the boundaries of AI + System research. 💪

🚀 We are thrilled to release the code for ReFoRCE — a powerful Text-to-SQL agent with Self-Refinement, Format Restriction, and Column Exploration! 🥇 Ranked #1 on Spider 2.0 Leaderboard, a major step toward practical, enterprise-ready systems, tackled both: Spider 2.0-snow &

Google’s latest AI model, Gemini 2.5 Pro, solidly beat its predecessors on all game competitions. 🚀 It sounds like an April 1st headline, but it’s the real deal. 🎯 In Sokoban 🧠🎮, Gemini 2.5 Pro delivered the best multi-modal performance, even beating o1 and Claude 3.7. It

[1/N] LLM evaluations can be done while you are playing live computer games. 🤯 We are excited to announce our game: AI Space Escape is now live on @Roblox (Desktop, Mobile, Tablet, VR)! Start the adventure now and see if you can outsmart AI! 🚀🌌 https://t.co/N1dA99lrep See