arXiv Sound

@ArxivSound

Followers: 6K · Following: 1 · Media: 0 · Statuses: 17K

Sound-related articles (https://t.co/dxVYgWJGOw and https://t.co/b90N0Zzvjs) on https://t.co/HHqPequzVU

Joined July 2020

[IMPORTANT] arXiv Sound does not post some papers submitted to https://t.co/mPAjntoGrG or https://t.co/3pcQCkf6q8, because they do not appear in arXiv's RSS feed. We apologize for the inconvenience.

1

0

9

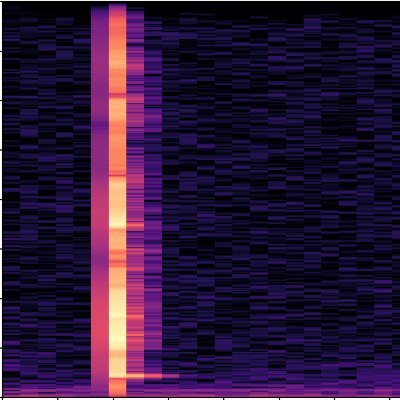

Friedrich Wolf-Monheim, "Spectral and Rhythm Feature Performance Evaluation for Category and Class Level Audio Classification with Deep Convolutional Neural Networks,"

Next to decision tree and k-nearest neighbours algorithms deep convolutional neural networks (CNNs) are widely used to classify audio data in many domains like music, speech or environmental...

Paolo Combes, Stefan Weinzierl, Klaus Obermayer, "Neural Proxies for Sound Synthesizers: Learning Perceptually Informed Preset Representations,"

Deep learning appears as an appealing solution for Automatic Synthesizer Programming (ASP), which aims to assist musicians and sound designers in programming sound synthesizers. However,...

Patricia Hu, Silvan David Peter, Jan Schlüter, Gerhard Widmer, "Exploring System Adaptations For Minimum Latency Real-Time Piano Transcription,"

Advances in neural network design and the availability of large-scale labeled datasets have driven major improvements in piano transcription. Existing approaches target either offline...

Ye Ni, Ruiyu Liang, Xiaoshuai Hao, Jiaming Cheng, Qingyun Wang, Chengwei Huang, Cairong Zou, Wei Zhou, Weiping Ding, Björn W. Schuller, "Affine Modulation-based Audiogram Fusion Network for Joint Noise Reduction and Hearing Loss Compensation,"

Hearing aids (HAs) are widely used to provide personalized speech enhancement (PSE) services, improving the quality of life for individuals with hearing loss. However, HA performance significantly...

Mingyue Huo, Yuheng Zhang, Yan Tang, "Identifying and Calibrating Overconfidence in Noisy Speech Recognition,"

Modern end-to-end automatic speech recognition (ASR) models like Whisper not only suffer from reduced recognition accuracy in noise, but also exhibit overconfidence - assigning high confidence to...

William Chen, Chutong Meng, et al., "The ML-SUPERB 2.0 Challenge: Towards Inclusive ASR Benchmarking for All Language Varieties,"

Recent improvements in multilingual ASR have not been equally distributed across languages and language varieties. To advance state-of-the-art (SOTA) ASR models, we present the Interspeech 2025...

Yerin Ryu, Inseop Shin, Chanwoo Kim, "Controllable Singing Voice Synthesis using Phoneme-Level Energy Sequence,"

Controllable Singing Voice Synthesis (SVS) aims to generate expressive singing voices reflecting user intent. While recent SVS systems achieve high audio quality, most rely on probabilistic...

Huihong Liang, Dongxuan Jia, Youquan Wang, Longtao Huang, Shida Zhong, Luping Xiang, Lei Huang, Tao Yuan, "Prototype: A Keyword Spotting-Based Intelligent Audio SoC for IoT,"

In this demo, we present a compact intelligent audio system-on-chip (SoC) integrated with a keyword spotting accelerator, enabling ultra-low latency, low-power, and low-cost voice interaction in...

Ganghui Ru, Jieying Wang, Jiahao Zhao, Yulun Wu, Yi Yu, Nannan Jiang, Wei Wang, Wei Li, "HingeNet: A Harmonic-Aware Fine-Tuning Approach for Beat Tracking,"

Fine-tuning pre-trained foundation models has made significant progress in music information retrieval. However, applying these models to beat tracking tasks remains unexplored as the limited...

Thomas Thebaud, Yen-Ju Lu, Matthew Wiesner, Peter Viechnicki, Najim Dehak, "Enhancing Dialogue Annotation with Speaker Characteristics Leveraging a Frozen LLM,"

In dialogue transcription pipelines, Large Language Models (LLMs) are frequently employed in post-processing to improve grammar, punctuation, and readability. We explore a complementary...

Dimitrios Bralios, Jonah Casebeer, Paris Smaragdis, "Re-Bottleneck: Latent Re-Structuring for Neural Audio Autoencoders,"

Neural audio codecs and autoencoders have emerged as versatile models for audio compression, transmission, feature-extraction, and latent-space generation. However, a key limitation is that most...

Dimitrios Bralios, Paris Smaragdis, Jonah Casebeer, "Learning to Upsample and Upmix Audio in the Latent Domain,"

Neural audio autoencoders create compact latent representations that preserve perceptually important information, serving as the foundation for both modern audio compression systems and generation...

Luxi He, Xiangyu Qi, Michel Liao, Inyoung Cheong, Prateek Mittal, Danqi Chen, Peter Henderson, "The Model Hears You: Audio Language Model Deployments Should Consider the Principle of Least Privilege,"

We are at a turning point for language models that accept audio input. The latest end-to-end audio language models (Audio LMs) process speech directly instead of relying on a separate...

Zhengdong Yang, Shuichiro Shimizu, Yahan Yu, Chenhui Chu, "When Large Language Models Meet Speech: A Survey on Integration Approaches,"

Recent advancements in large language models (LLMs) have spurred interest in expanding their application beyond text-based tasks. A large number of studies have explored integrating other...

Pengyu Wang, Ying Fang, Xiaofei Li, "VINP: Variational Bayesian Inference with Neural Speech Prior for Joint ASR-Effective Speech Dereverberation and Blind RIR Identification,"

Reverberant speech, denoting the speech signal degraded by reverberation, contains crucial knowledge of both anechoic source speech and room impulse response (RIR). This work proposes a...

Davide Berghi, Philip J. B. Jackson, "Integrating Spatial and Semantic Embeddings for Stereo Sound Event Localization in Videos,"

In this study, we address the multimodal task of stereo sound event localization and detection with source distance estimation (3D SELD) in regular video content. 3D SELD is a complex task that...

Liping Chen, Kong Aik Lee, Zhen-Hua Ling, Xin Wang, Rohan Kumar Das, Tomoki Toda, Haizhou Li, "Speaker Privacy and Security in the Big Data Era: Protection and Defense against Deepfake,"

In the era of big data, remarkable advancements have been achieved in personalized speech generation techniques that utilize speaker attributes, including voice and speaking style, to generate...

Vishal Choudhari, "Beamforming-LLM: What, Where and When Did I Miss?,"

We present Beamforming-LLM, a system that enables users to semantically recall conversations they may have missed in multi-speaker environments. The system combines spatial audio capture using a...

Marvin Lavechin, Thomas Hueber, "From perception to production: how acoustic invariance facilitates articulatory learning in a self-supervised vocal imitation model,"

Human infants face a formidable challenge in speech acquisition: mapping extremely variable acoustic inputs into appropriate articulatory movements without explicit instruction. We present a...

Henry Grafé, Hugo Van hamme, "Graph Connectionist Temporal Classification for Phoneme Recognition,"

Automatic Phoneme Recognition (APR) systems are often trained using pseudo phoneme-level annotations generated from text through Grapheme-to-Phoneme (G2P) systems. These G2P systems frequently...