Trevor McCourt

@trevormccrt1

Followers: 14K · Following: 2K · Media: 126 · Statuses: 1K

"Life is a beautiful, magnificent thing, even to a jellyfish"

Cambridge, MA

Joined March 2020

A pretty complete description of the what, why and how of Extropic https://t.co/WlB5wmDdZG

surprised that no one has posted @sarahookr's hardware lottery essay since @GillVerd's TSU announcement. maybe EBMs were just waiting for the right chips to come along

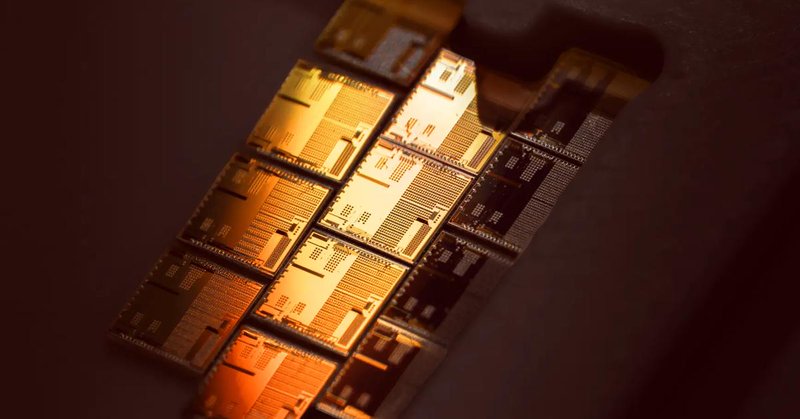

On the science behind X0 and Z1. Full talk by our CTO @trevormccrt1. Blog: https://t.co/mD1MKiVP70

From sim to silicon. X0 validated our novel probabilistic hardware primitives:
• pbits
• pdits
• pmodes
• pMoG
To learn more, read the X0 breakdown here: https://t.co/xTvNXH71ir

On a few misconceptions that I'm seeing pop up over and over again:

@carl_feynman Carl, thanks for the thoughtful engagement with our material! You are absolutely right that random sampling makes up a vanishing part of the computational workload in something like a transformer or diffusion model. These algorithms evolved alongside the GPU, and therefore

The Extropic TSU blog post is great. I LOVE when interactive visualizations are included. Brings me the same childlike joy I got when I first found that neural cellular automata blogpost on distillpub (RIP) https://t.co/cbWUlVFDps

extropic.ai

Building thermodynamic computing hardware that is radically more energy efficient than GPUs.

How to cut the energy cost of AI by a factor of 10,000:

arxiv.org

The proliferation of probabilistic AI has promoted proposals for specialized stochastic computers. Despite promising efficiency gains, these proposals have failed to gain traction because they...

while I have mixed opinions on Gill's philosophy of technology, which has largely been misused by AI hypers, I'm grateful and relieved that he is working on genuinely interesting and hard problems like this. I'm excited to see it @beffjezos

The foundations have been laid. Now it's time to scale. Excited for the Thermodynamic Intelligence takeoff ahead. https://t.co/lxWS9uuKeo

Really proud of @GillVerd, @trevormccrt1 and team! I’m so honored to have played a [small] part in their story as an investor. LFG 💪💪

Correct, but you can make that argument for pre-GPU backprop too. I am not saying the papers as they are will be as big as backprop if and when the hardware arrives. All I am saying is that this, by itself, can’t be a convincing argument: you have to dig a little deeper and

@trevormccrt1 the big flaw in both papers is that they only run weird EBMs that nobody wants to use! imo new hardware needs to be able to run conventional SOTA models to get meaningful adoption (though i realize that's not your view)

Come work for us if you want to try and figure out how to re-build machine learning on top of an entirely new hardware primitive (EBM sampling instead of matmuls)

The foundations have been laid. Now it's time to scale. Excited for the Thermodynamic Intelligence takeoff ahead. https://t.co/lxWS9uuKeo

More thoughts per watt.

i have many thoughts about @Extropic_AI
> their branding is immaculate
> the chip design is infinitely cooler than anything else i’ve seen
@beffjezos and team cooked. would love to get these chips in our rovers.

I haven't read the paper but I like the ambition. The end of history has been declared too often

There is absolutely no fundamental reason we build AI the way we do today. There certainly is a radically different approach that is orders of magnitude more energy efficient. I’m going to find it before I die https://t.co/ML4fBRTsvv

Alien design for an alien computer.

Meet the XTR-0: a way for early developers to make first contact with thermodynamic intelligence. More at: https://t.co/VjbcIdwG3E

This man has hit the nail on the head

My 2c about @Extropic_AI's paper. 1 - Yes, it's a small scale demonstration BUT they solved 2 of the most important issues of probabilistic computing: (a) scaling beyond a single global energy and avoiding exponentially long mixing time and (b) avoiding using bulky RNGs 1/n
