Nishanth Kumar

@nishanthkumar23

Followers

2K

Following

11K

Media

29

Statuses

323

AI/ML + Robots PhD Student @MIT_LISLab, intern @AIatMeta. Formerly @NVIDIAAI, @rai_inst, @brownbigai, @vicariousai and @uber.

Cambridge, MA

Joined July 2015

World models hold a lot of promise for robotics, but they're data hungry and often struggle with long horizons. We learn models from a few (< 10) human demos that enable a robot to plan in completely novel scenes! Our key idea is to model *symbols* not pixels 👇

19

81

504

Very interesting and cool stuff! This is perhaps the most compelling evidence so far that scaling data with the right architectures might take us very far towards general purpose dexterous manipulation. Excited to read more details and understand how harmonic reasoning works 🤖

Introducing GEN-0, our latest 10B+ foundation model for robots ⏱️ built on Harmonic Reasoning, new architecture that can think & act seamlessly 📈 strong scaling laws: more pretraining & model size = better 🌍 unprecedented corpus of 270,000+ hrs of dexterous data Read more 👇

0

0

9

Our lab is hiring a postdoc! Reach out if you want to push the frontiers of robot learning from human data.

3

17

132

For people interested in learning more about this work, my wonderful advisor Leslie Kaelbling gave a keynote at @RL_Conference recently where she discussed this paper + how it fits into a broader framework for general-purpose robotics! Watch the talk here:

World models hold a lot of promise for robotics, but they're data hungry and often struggle with long horizons. We learn models from a few (< 10) human demos that enable a robot to plan in completely novel scenes! Our key idea is to model *symbols* not pixels 👇

4

14

107

Overall, this work showed me that planning at test-time atop learned world models can be quite powerful for generalization: I hope it inspires interesting future work in this vein!

2

0

16

There are lots of details - check out the below links for more! Paper: https://t.co/smvhg3S1FN Website:

2

2

10

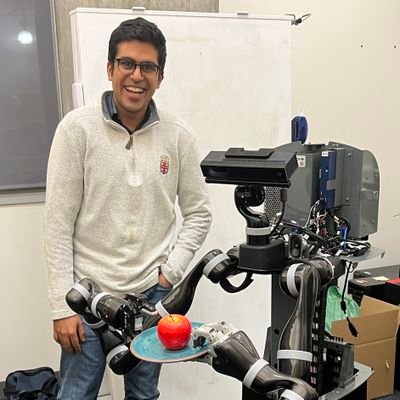

This work was only possible thanks to brilliant coauthors: @ashayathalye, @tomssilver, and @yichao_liang. Also grateful for unwavering support from Jiuguang Wang and @rai_inst, and thoughtful advising (and tolerance of lots of spilled juice 😅) from Tomás and Leslie @MIT_LISLAB!

1

1

12

My personal favorite: we learned a model for using a juicer from Amazon to make different fruit juices!

1

0

14

We found that our learned models enable useful generalization to novel scenes and problems! We trained a model with 6 human demos in our lab @MIT_CSAIL, loaded the learned model onto a Spot, and asked it to solve problems in a completely new building!

2

1

13

We found VLMs are bad at directly selecting which symbols enable good planning, and also at learning transition models (operators) over the symbols. We instead take a neurosymbolic approach: we use a symbolic rule-learning algorithm for model learning, and a hill-climbing

1

1

23

We ask a VLM to look at the demo videos and propose symbols as natural language strings with objects (e.g. Graspable(), or IsGreen()). In new scenes, we can label these symbols as "True" or "False" by simply passing these same strings + new objects + new scene to the same VLM!

1

1

18
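
A rough sketch of the symbol-labeling idea described in the post above, not the authors' implementation. It assumes each symbol is a name plus an arity, that grounding it on objects yields a string such as `Graspable(apple)`, and that a VLM can be wrapped as a function from (scene, string) to True/False. `Predicate`, `label_scene`, `fake_vlm`, and `FAKE_SCENE_FACTS` are all hypothetical names; the "VLM" here is a lookup table so the example runs offline.

```python
from dataclasses import dataclass
from itertools import permutations
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Predicate:
    """A symbol proposed as a natural-language name over some objects."""
    name: str
    arity: int

    def ground(self, *objects: str) -> str:
        # Turn the symbol plus concrete objects into a query string.
        if len(objects) != self.arity:
            raise ValueError(f"{self.name} expects {self.arity} object(s)")
        return f"{self.name}({', '.join(objects)})"


# Stand-in type for a VLM call: (scene, ground atom string) -> True/False.
VLMQuery = Callable[[str, str], bool]


def label_scene(predicates: List[Predicate], objects: List[str],
                scene: str, query_vlm: VLMQuery) -> Dict[str, bool]:
    """Label every ground atom in a scene by asking the (stand-in) VLM."""
    state: Dict[str, bool] = {}
    for pred in predicates:
        for args in permutations(objects, pred.arity):
            atom = pred.ground(*args)
            state[atom] = query_vlm(scene, atom)
    return state


# Hypothetical facts that replace a real VLM so the sketch is runnable.
FAKE_SCENE_FACTS = {"new_building": {"Graspable(apple)", "IsGreen(apple)"}}


def fake_vlm(scene: str, atom: str) -> bool:
    # Assumption: a real system would send the image and the string to a VLM.
    return atom in FAKE_SCENE_FACTS.get(scene, set())


predicates = [Predicate("Graspable", 1), Predicate("IsGreen", 1)]
state = label_scene(predicates, ["apple", "table"], "new_building", fake_vlm)
print(state)
```

The resulting dictionary of true/false atoms is the kind of symbolic state a planner could consume; swapping `fake_vlm` for a real model call is the part this sketch leaves open.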

Inventing symbolic models for robots has always required answering two questions: *what* symbols to invent, and *how* to ground them into robot sensor data? We started with a relatively simple answer to both: ask a VLM!

1

1

20

Check out Leslie Kaelbling's #RLC2025 Keynote where she talks about some new perspectives and a number of new works from the group:

0

1

8

This is my new favorite robot demo video - very impressive! Expert level table tennis seems more within reach than I would have thought!

🏓🤖 Our humanoid robot can now rally over 100 consecutive shots against a human in real table tennis — fully autonomous, sub-second reaction, human-like strikes.

0

0

4

We're excited for #RLC2025! If you're at the conference, be sure to catch our PI Leslie Kaelbling's keynote on "RL: Rational Learning" from 9-10 in CCIS 1-430. Leslie will talk about some new perspectives + exciting new results from the group: you won't want to miss it! 🤖

0

5

12

I competed on robotics teams (FIRST, WRO) throughout middle and high school and loved it. When I got to college, I asked @StefanieTellex about forming an undergrad robotics competition club. She suggested I try research first. “Research is cooler”, she said. She was right!

0

0

9

📢 Excited to announce the 1st workshop on Making Sense of Data in Robotics @corl_conf! #CORL2025 What makes robot learning data “good”? We focus on: 🧩 Data Composition 🧹 Data Curation 💡 Data Interpretability 📅 Papers due: 08/22/2025 🌐 https://t.co/kU8G3gwBpp 🧵(1/3)

2

12

66

Hello world! This is @nishanthkumar23 and I'll be taking over @MIT_CSAIL 's X/Twitter & Instagram for 24 hours! I'm a 4th year PhD @MITEECS working on AI/ML for Robotics and Computer Agents. Drop any and all questions about research, AI, MIT, or dogs (esp. robot dogs!) below 👇

18

13

86

Thrilled to share that I'll be starting as an Assistant Professor at Georgia Tech (@ICatGT / @GTrobotics / @mlatgt) in Fall 2026. My lab will tackle problems in robot learning, multimodal ML, and interaction. I'm recruiting PhD students this next cycle – please apply/reach out!

68

30

554

It was a ton of fun to help @WillShenSaysHi and @CaelanGarrett on new work that does GPU-accelerated TAMP! Exciting progress towards efficient test time scaling for robots 🤖

Check out this MIT News article about our research on GPU-accelerated manipulation planning! https://t.co/3tsx9BlRmc

@WillShenSaysHi @nishanthkumar23 @imankitgoyal

0

1

7