Learning and Intelligent Systems (LIS) @ MIT

@MIT_LISLab

Followers: 2K | Following: 435 | Media: 14 | Statuses: 126

PIs: Leslie Pack Kaelbling, Tomás Lozano-Pérez. AI/ML/Robotics Research @MIT_CSAIL. Website: https://t.co/TgsPKmvPCb

Joined November 2020

Check out new work from the group on data-efficient learning of symbolic world models!

World models hold a lot of promise for robotics, but they're data hungry and often struggle with long horizons. We learn models from a few (< 10) human demos that enable a robot to plan in completely novel scenes! Our key idea is to model *symbols* not pixels 👇

Check out Leslie Kaelbling's #RLC2025 Keynote where she talks about some new perspectives and a number of new works from the group:

We're excited for #RLC2025! If you're at the conference, be sure to catch our PI Leslie Kaelbling's keynote on "RL: Rational Learning" from 9-10 in CCIS 1-430. Leslie will talk about some new perspectives + exciting new results from the group: you won't want to miss it! 🤖

#ICRA2025 🤖 I spent 3 years of PhD making efficient long-horizon manipulation planning algorithms. VLMs ultimately provide the essential common-sense and horizon-reduction benefits. ❗VLMs can generate plausible robot task plans, but actions may not be feasible for robots due to

Check out new coverage by @MIT_CSAIL of our lab members' recent work!

Can we teach a robot its limits to do chores safely & correctly? 🧵 To help robots execute open-ended, multi-step tasks, MIT CSAIL researchers used vision models to see what’s near the machine & model its constraints. An LLM sketches up a plan that’s checked in a simulator to

Curious to hear about creating generalist robots from leaders in the field? Don’t miss our panel “Representations for Generalist Robots” (4-5pm) @corl_conf LEAP workshop! Feat. @chelseabfinn @animesh_garg Vincent Vanhoucke @Marc__Toussaint @sidsrivast and Leslie Kaelbling!

Check out new work on scene completion and grasping from a single RGB-D image!

🚀Excited to share SceneComplete: an open-world 3D scene completion system for constructing a complete, segmented 3D model of a scene from a single RGB-D image.🖼️🤖 SceneComplete enables dexterous grasping and robust robot manipulation in highly cluttered scenes - a short 🧵

Incredible insights from Prof. Tomás Lozano-Pérez of MIT during his keynote on the evolution of robotics! He took us on a journey through the shifting landscape of robotics over the years, discussing the “inverted pendulum” theory of robotics. #CoRL2024 #RobotLearning #AI #Robotics

How can we get VLMs to help robots solve complex long-horizon tasks? Introducing VisualPredicator: an agent that leverages VLMs to learn predicates and operators for classical planners. Our system can stack blocks, balance weights on a balance beam, and even pour coffee🦾! [1/9]

Check out (and consider submitting!) this #CORL2024 workshop co-organized by several lab members!

Super excited to be co-organizing this workshop at the intersection of Learning and Planning @corl_conf 2024! Join us for interesting ideas, cutting-edge talks, and some spicy panel discussions on classical and learning-based robot systems! #CORL2024 🤖

Check out recent coverage of some of our work (presented at #RSS2024) by @MIT_CSAIL!!!

The phrase "practice makes perfect" is great advice for humans — and also a helpful maxim for robots 🧵 Instead of requiring a human expert to guide such improvement, MIT & The AI Institute’s "Estimate, Extrapolate, and Situate" (EES) algorithm enables these machines to practice

A huge congratulations to everyone moving on; we're excited to see the things you and your labs will do! 🤖🧠🎉

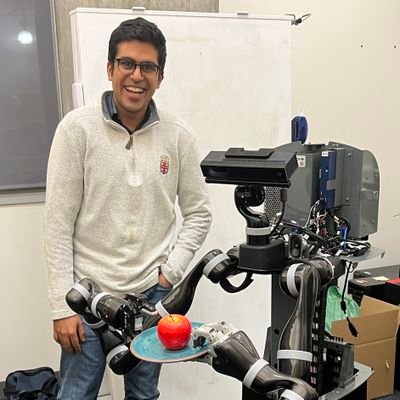

@JorgeAMendez_ is starting a new lab for robot learning in the ECE department @stonybrooku in Fall 2024! https://t.co/pbLQUUjjaq

I am excited to share that I will join the ECE department at Stony Brook University @SBU_ECE @stonybrooku as an Assistant Professor starting this fall. I will be recruiting PhD students during this application cycle to work on robot learning!

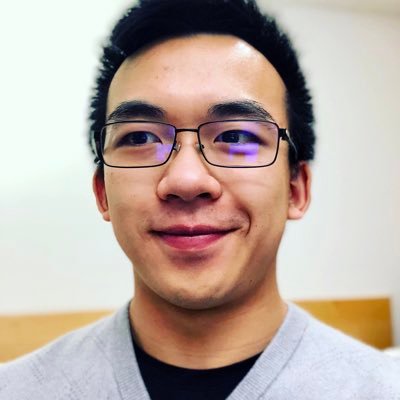

@du_yilun will start a new lab to build next generation AI systems in the Computer Science department @Harvard in Fall 2025! https://t.co/Q3EEEeUSfy

Super excited to be joining Harvard as an assistant professor in Fall 2025 in @KempnerInst and Computer Science!! I will be hiring students this upcoming cycle -- come join our quest to build the next generation of AI systems!

Rachel Holladay will be starting a new lab for robotic manipulation in the Mechanical Engineering and Applied Mechanics dept. + @GRASPlab @Penn in Fall 2025! https://t.co/kzPC08hVfT

@tomssilver will be starting a new lab for robot planning and learning in the ECE department @Princeton in Fall 2025! https://t.co/RvO3LIRuL4

I'm thrilled to join Princeton's faculty as an assistant professor in the ECE department starting Fall 2025 🐯 Stay tuned for the launch of my lab. We will develop generally helpful robots that learn and plan 🤖

We're excited to have 4 different lab members moving on to start exciting new labs as faculty members! 🧵👇

Can we get robots to improve at long-horizon tasks without supervision? Our latest work tackles this problem by planning to practice! Here's a teaser showing initial task -> autonomous practice -> eval (+ interference by a gremlin👿)

Happy to have our work presented at RSS 2024 in Session 2 Planning! We build a planning pipeline on Spot for long-horizon mobile manipulation directly in the physical world. The key is to decide the interdependent continuous parameters of a sequence of parameterized skills on a real robot.

Can we get robots to improve at long-horizon tasks without supervision? Our latest work tackles this problem by planning to practice! Here's a teaser showing initial task -> autonomous practice -> eval (+ interference by a gremlin👿)

- @AidanCurtis3 and @nishanthkumar23 will present new work on leveraging LLMs + constraint satisfaction to solve challenging TAMP problems in the Lifelong Learning workshop on Friday! Be sure to catch their presentations and poster sessions to hear about our latest work! (4/4)
