Tom Silver

@tomssilver

Followers: 3K · Following: 642 · Media: 35 · Statuses: 365

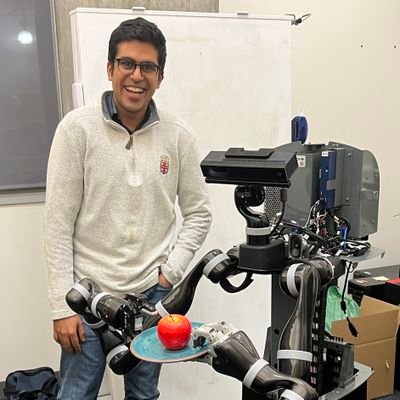

Assistant Professor @Princeton. Developing robots that plan and learn to help people. Prev: @Cornell, @MIT, @Harvard.

Princeton, NJ

Joined October 2011

🤖Excited to share SLAP, @YijieIsabelLiu's new algorithm using RL to provide better skills for planning! Check out the website for code, videos, and pre-trained models:

GitHub repository: isabelliu0/SLAP

Robots can plan, but rarely improvise. How do we move beyond pick-and-place to multi-object, improvisational manipulation without giving up completeness guarantees? We introduce Shortcut Learning for Abstract Planning (SLAP), a new method that uses reinforcement learning (RL) to

Excited to share this collaborative work led by the exceptional @YijieIsabelLiu. This work has dramatically motivated my recent belief in RL+Planning for intelligent decision making!! Key idea: Use RL to learn improvisational low-level “shortcuts” in planning.

This project was led by a truly exceptional Princeton undergrad @YijieIsabelLiu, who is looking for PhD opportunities this year! Her website:

Happy to share some of the first work from my new lab! This project has shaped my thinking about how we can effectively combine planning and RL. Key idea: start with a planner that is slow and "robotic", then use RL to discover shortcuts that are fast and dynamic. (1/2)

This week's #PaperILike is "Monte Carlo Tree Search with Spectral Expansion for Planning with Dynamical Systems" (Riviere et al., Science Robotics 2024). A creative synthesis of control theory and search. I like using the Gramian to branch. PDF:

arxiv.org

The ability of a robot to plan complex behaviors with real-time computation, rather than adhering to predesigned or offline-learned routines, alleviates the need for specialized algorithms or...

This week's #PaperILike is "Reality Promises: Virtual-Physical Decoupling Illusions in Mixed Reality via Invisible Mobile Robots" (Kari & Abtahi, UIST 2025). This is some Tony Stark level stuff! XR + robots = future. Website: https://t.co/OJO666K50C PDF:

This was my experience in grad school, and now I've seen some evidence to suggest a trend 🤔

This week's #PaperILike is "Learning to Guide Task and Motion Planning Using Score-Space Representation" (Kim et al., IJRR 2019). This is one of those papers that I return to over the years and appreciate more every time. Chock full of ideas. PDF:

arxiv.org

In this paper, we propose a learning algorithm that speeds up the search in task and motion planning problems. Our algorithm proposes solutions to three different challenges that arise in learning...

This week's #PaperILike is "On the Utility of Koopman Operator Theory in Learning Dexterous Manipulation Skills" (Han et al., CoRL 2023). This and others have convinced me that I need to learn Koopman! Another perspective on abstraction learning. PDF:

arxiv.org

Despite impressive dexterous manipulation capabilities enabled by learning-based approaches, we are yet to witness widespread adoption beyond well-resourced laboratories. This is likely due to...

This week's #PaperILike is "Predictive Representations of State" (Littman et al., 2001). A lesser known classic that is overdue for a revival. Fans of POMDPs will enjoy. PDF:

This week's #PaperILike is "Influence-Augmented Local Simulators: A Scalable Solution for Fast Deep RL in Large Networked Systems" (Suau et al., ICML 2022). Nice work on using fast local simulators to plan & learn in large partially observed worlds. PDF:

arxiv.org

Learning effective policies for real-world problems is still an open challenge for the field of reinforcement learning (RL). The main limitation being the amount of data needed and the pace at...

📢 Reminder: The LEAP Workshop (Learning Effective Abstractions for Planning) takes place today at #CoRL2025. 🕜 1:30 PM onwards. 📍 Room E7, COEX. Join us for invited talks, papers, and discussions on abstraction, planning, and robotics. Details:

We're excited to announce the third workshop on LEAP: Learning Effective Abstractions for Planning, to be held at #CoRL2025 @corl_conf! Early submission deadline: Aug 12. Late submission deadline: Sep 5. Website link below 👇

This week's #PaperILike is "Optimal Interactive Learning on the Job via Facility Location Planning" (Vats et al., RSS 2025). I always enjoy a surprising connection between one problem (COIL) and another (UFL). And I always like work by @ShivaamVats! PDF:

arxiv.org

Collaborative robots must continually adapt to novel tasks and user preferences without overburdening the user. While prior interactive robot learning methods aim to reduce human effort, they are...

Introducing CLAMP: a device, dataset, and model that bring large-scale, in-the-wild multimodal haptics to real robots. Haptic/tactile data is more than just force or surface texture, and capturing this multimodal haptic information can be useful for robot manipulation.

Excited to share our work on multimodal perception for real robots, CLAMP, now accepted at #CoRL2025! Do come say hi at our poster session on Sep 30 if you're attending!

During a meal, food may cool down, changing its physical properties even though it looks the same! How can robots reliably pick up food when it looks the same but feels different, such as steak 🥩 getting firmer as it cools? 🍴 Check out

This week's #PaperILike is "Hi Robot: Open-Ended Instruction Following with Hierarchical Vision-Language-Action Models" (Shi et al., ICML 2025). I'm often asked: how might we combine ideas from hierarchical planning and VLAs? This is a good start! PDF:

arxiv.org

Generalist robots that can perform a range of different tasks in open-world settings must be able to not only reason about the steps needed to accomplish their goals, but also process complex...

World models hold a lot of promise for robotics, but they're data hungry and often struggle with long horizons. We learn models from a few (< 10) human demos that enable a robot to plan in completely novel scenes! Our key idea is to model *symbols* not pixels 👇

This week's #PaperILike is "Labeled RTDP: Improving the Convergence of Real-Time Dynamic Programming" (Bonet & Geffner, 2003). A very clear introduction to and improvement of RTDP, an online MDP planner that we should all have in our toolkits. PDF:
