Ruslan Abdikeev

@aruslan

Followers

553

Following

5K

Media

157

Statuses

4K

Ex-musician enjoying fragments of unevenly distributed future and having fun with Google Lens and C++ at Google SF. https://t.co/ucHNiFUQbX

San Francisco, CA

Joined May 2007

The biggest predictor of coding ability is Language Aptitude. Not Math. A study posted in Nature found that numeracy accounts for just 2% of skill variance. Meanwhile, the neural behaviors associated with language accounted for 70% of skill variance.

407

1K

11K

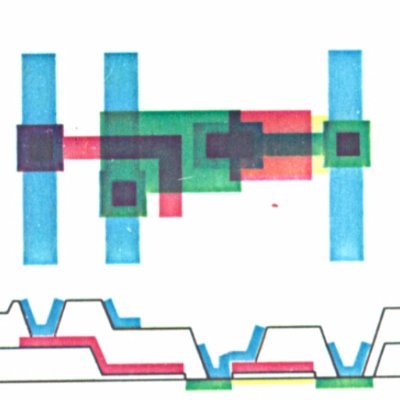

I am excited to finally share our recent paper "Filtering After Shading With Stochastic Texture Filtering" (with Matt Pharr, @marcosalvi, and Marcos Fajardo), published at ACM I3D'24 / PACM CGIT, where we won the best paper award! 1/N

7

99

485

The 'High-throughput FizzBuzz' solution by ais523 is awe-inspiring. I was blown away in the third phase, when the FizzBuzz bytecode interpreter, AVX2, and elegant decimal arithmetic techniques all came together seamlessly: https://t.co/7qfYXTrGYJ

0

2

6

100 years of Star Wars (with Midjourney and Pika Labs) [📹 douggypledger] https://t.co/NqtskkphMq

296

3K

16K

I'm glad to see the excitement about iOS 17's Object Capture feature in my latest tweet! For you, I created a new demo of our AR Code application in development. We are still in the heart of Paris, and here we highlight this remarkable statue in the garden of the Palais-Royal.

35

231

1K

Great compendium on Transformers training and inference, and super useful links to latency and FLOPS angles: https://t.co/1pxlPmb2Gu

The most common question we get about our models is "will X fit on Y GPU?" This, and many more questions about training and inferring with LLMs, can be answered with some relatively easy math. By @QuentinAnthon15, @BlancheMinerva, and @haileysch__

https://t.co/3PqbxSAKEB

0

0

0

Andrej does it again! Fantastic video lecture! Also, a great way to "introduce" Shannon's classic 1948 paper on information theory :)

🔥 New (1h56m) video lecture: "Let's build GPT: from scratch, in code, spelled out." https://t.co/2pKsvgi3dE We build and train a Transformer following the "Attention Is All You Need" paper in the language modeling setting and end up with the core of nanoGPT.

0

3

8

Sunken ships and ChatGPT. A thread. In the 1940s and 1950s, atmospheric and naval testing of nuclear weapons created excess background radiation, roughly 10% above the natural level. 1/8

3

12

68

...and now let's go deeper into the hallucinating world of chatGPT:

This GPT virtual machine post is only the tip of the iceberg. @joshlabau and I have discovered that text-davinci-003 has the capability to do something we're calling HALLUCINATED SCRIPTS. Buckle up for a thread, this one is mind bending 🤯 https://t.co/RGIjpEyQRK

0

0

3

This is absolutely insane: "We can chat with this Assistant chatbot, locked inside the alt-internet attached to a virtual machine, all inside ChatGPT's imagination." Building A Virtual Machine inside ChatGPT

0

0

3

Capturing and Animation of Body and Clothing from Monocular Video (Videos and code are "coming soon".) https://t.co/PIoSIbP5jj

0

0

0

30 years ago we were working on SNES 'Spider-Man and the X-Men in Arcade's Revenge'. We had very little time for this project and had 3 very experienced coders on-board to get it done. Here's a FAX from production at Acclaim to give you a flavour of the pressure we were under 1/2

148

580

3K

That's going to be fun! While 0x00 after free() is less impactful than 0xDD, I'm sure tons of bugs are going to be uncovered. I'd obviously have preferred 0xDD because 0x00 is going to _hide_ new bugs in addition to uncovering the old ones, but let's think of it as a trajectory.

1

1

0

New blog post: Faster zlib/DEFLATE decompression on the Apple M1 (and x86) https://t.co/qMVA6kAlMT

16

43

205

One more item checked off my secret "that's impossible" list. The previous one was a Blade Runner / Enemy of the State image enhancement. We truly live in the future. "Do you have the same one, but with mother-of-pearl buttons?" "Unfortunately, no." "No? Then we'll keep looking."

Did you know you can search with text and image at the same time? Explore Google Search Multisearch, and more.

0

0

3

AI is lazy: solving easy puzzles is simpler than learning to escape the sandbox. Once the puzzles get hard, would AI learn to break the box? "Other judging systems perform full sand-boxing of the computation to prevent a generated code sample from doing harm like deleting files."

"Language Models Can Teach Themselves to Program Better" This paper changed my thinking about what future language models will be good at, mostly in a really concerning way. Let's start with some context: [1/11]

0

0

1

Rare technical commentary from Pat in the Intel earnings call: “Our software release on our discrete graphics, right, was clearly underperforming.” 1/2

1

2

3

Have you ever come across a technical or poetic translation of the word "connascence"? The other day I got stuck in the middle of a talk about "cohesion"/"связность" and "coupling"/"зацепление" when I suddenly realized that I don't know an established Russian translation, either as a word or as a term. Any ideas?

4

0

1