Quentin Anthony

@QuentinAnthon15

Followers: 1,000

Following: 129

Media: 38

Statuses: 93

I make models more efficient. Google Scholar:

Joined June 2019

Don't wanna be here?

Send us removal request.

Explore trending content on Musk Viewer

Davido

• 514537 Tweets

Baba

• 116859 Tweets

Valencia

• 80399 Tweets

Abeg

• 77510 Tweets

Peruzzi

• 76169 Tweets

Nancy

• 69103 Tweets

Madonna

• 59256 Tweets

Wetin

• 58105 Tweets

Francis

• 53659 Tweets

Burna

• 49641 Tweets

Lewandowski

• 49185 Tweets

Rock in Rio

• 48120 Tweets

Araujo

• 46143 Tweets

Seinfeld

• 42040 Tweets

Jesus is King

• 39713 Tweets

Katy Tur

• 30231 Tweets

#WWERaw

• 26600 Tweets

Grammy

• 25632 Tweets

Luciano

• 16755 Tweets

ANA CASTELA NO RIR

• 14944 Tweets

PRE SAVE FOI INTENSO

• 14691 Tweets

#WWEDraft

• 10555 Tweets

カレンダー通り

• 10362 Tweets

Last Seen Profiles

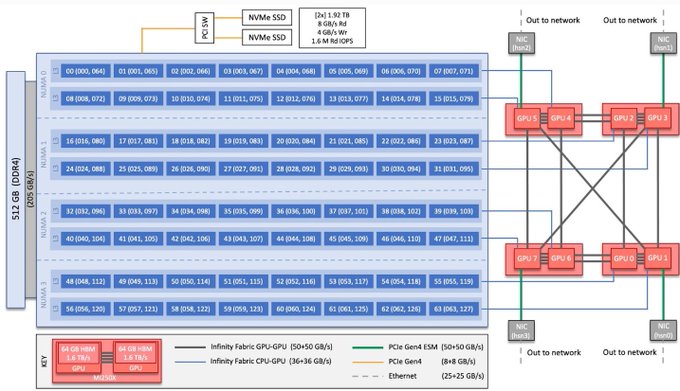

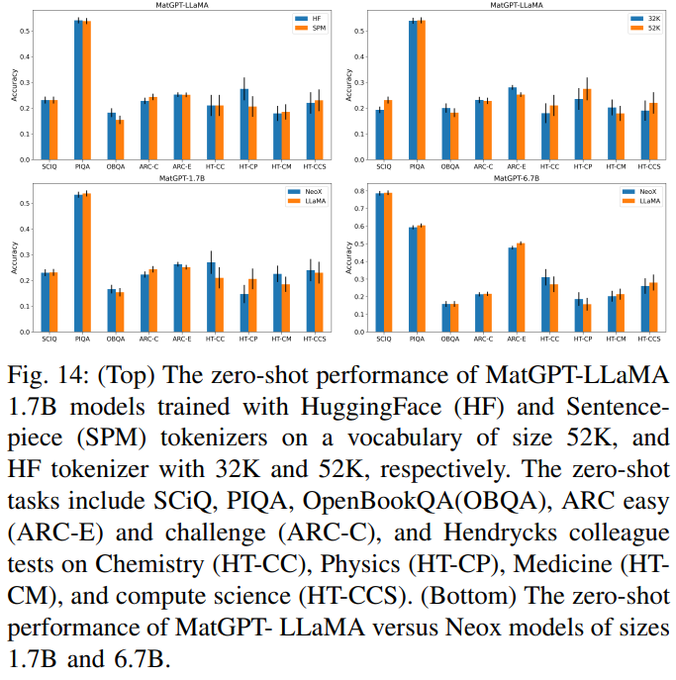

How do LLMs scale on AMD GPUs and HPE Slingshot 11 interconnects? We treat LLMs as a systems optimization problem on the new #1 HPC system on the Top500, ORNL Frontier.

Learn more in our paper:


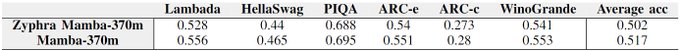

On a more personal note, I'm so damn proud of my team. Zyphra is punching way above its weight in terms of manpower, compute, and data. We aren't industry insiders, and we achieved this through a willingness to learn and a ton of grit.

Kudos to you all @BerenMillidge @yury_tokpanov …


Our analysis also explains the effect @karpathy reported in his viral tweet last year about how vocab size matters for efficiency. The vocab size should be divisible by 64 for the same reason h/a (hidden size divided by the number of attention heads) should be. 9/11
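The divisibility rule of thumb can be checked mechanically before launching a run. A minimal sketch, assuming 64 as the target multiple (the number from the tweet); the helper names `round_up` and `alignment_report` are hypothetical. For example, GPT-2's 50,257-token vocabulary pads up to 50,304, while its head dimension (768 / 12 = 64) is already aligned.

```python
def round_up(n: int, multiple: int = 64) -> int:
    """Return the smallest multiple of `multiple` that is >= n."""
    return ((n + multiple - 1) // multiple) * multiple


def alignment_report(hidden: int, heads: int, vocab: int, multiple: int = 64) -> dict:
    """Check the divisibility rules of thumb for h/a and vocab size.

    h/a is the per-head dimension: hidden size divided by the number of heads.
    The default multiple of 64 is an assumption taken from the thread.
    """
    if hidden % heads != 0:
        raise ValueError("hidden size must be divisible by the number of heads")
    head_dim = hidden // heads
    return {
        "head_dim": head_dim,
        "head_dim_aligned": head_dim % multiple == 0,
        "vocab_aligned": vocab % multiple == 0,
        "padded_vocab": round_up(vocab, multiple),
    }


if __name__ == "__main__":
    # GPT-2 small shapes: hidden size 768, 12 heads, 50,257-token vocab.
    print(alignment_report(768, 12, 50257))
```

The extra rows added by padding correspond to no real token; they cost a little embedding memory in exchange for better-aligned matrix multiplies.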


Thank you to all of my amazing co-authors, @yury_tokpanov @PaoloGlorioso1 @BerenMillidge

HuggingFace:

GitHub:

Expect to find this work on arXiv soon!


Thank you to all my co-authors, @jacob_hatef @deepakn94 @BlancheMinerva @StasBekman Junqi Yin, Aamir Shafi, Hari Subramoni, and Dhabaleswar Panda, as well as to @StabilityAI, @ORNL, and @SDSC_UCSD for providing computing resources. 11/11


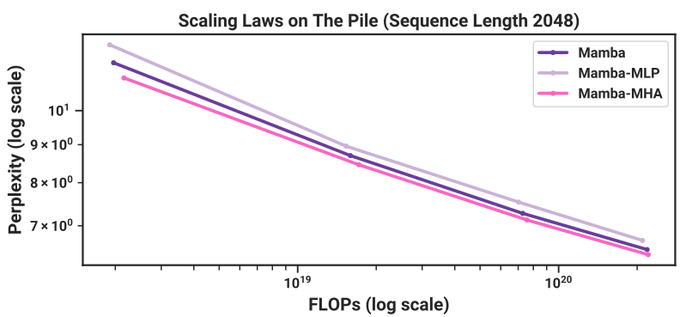

@arankomatsuzaki

What's the point of a scaling law with so few FLOPs?

The largest dense model is 85M parameters trained on 33B tokens.

I wouldn't take these results seriously, tbh, and unfortunately these authors have a bit of a track record of doing this.
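To put "so few FLOPs" in numbers, a common rule of thumb estimates dense-transformer training compute as C ≈ 6ND (N parameters, D tokens). This is a sketch using that approximation, not a calculation from the tweet itself; the function name `training_flops` is hypothetical, and attention and embedding terms are ignored.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate dense-transformer training compute, C ~= 6 * N * D.

    The factor 6 counts ~2 FLOPs per parameter per token for the forward pass
    and ~4 for the backward pass; attention and embedding terms are ignored.
    """
    return 6.0 * params * tokens


if __name__ == "__main__":
    # Largest dense model mentioned above: 85M parameters, 33B tokens.
    c = training_flops(85e6, 33e9)
    print(f"{c:.3e} FLOPs")
```

For the 85M-parameter, 33B-token model this comes to roughly 1.7 × 10^19 FLOPs.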


@StasBekman

Piggybacking off this, I extended Stas's communication benchmark to all collectives and point-to-point ops for both pure PyTorch distributed and DeepSpeed comms in

Give it a try if you're looking to understand your target collective's behavior!
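Collective benchmarks commonly report two numbers per op: algorithm bandwidth (bytes moved divided by time) and "bus bandwidth," which rescales algbw by a per-collective factor so results are comparable to link speed. The sketch below uses the correction factors from the nccl-tests convention; whether the benchmark linked above uses exactly these factors is an assumption, and `bandwidths` and `BUSBW_FACTOR` are hypothetical names. It also assumes `size_bytes` follows nccl-tests' size convention for each op.

```python
# Bus-bandwidth correction factors, following the nccl-tests convention.
# n is the number of participating ranks.
BUSBW_FACTOR = {
    "all_reduce": lambda n: 2 * (n - 1) / n,
    "all_gather": lambda n: (n - 1) / n,
    "reduce_scatter": lambda n: (n - 1) / n,
    "all_to_all": lambda n: (n - 1) / n,
    "broadcast": lambda n: 1.0,
    "reduce": lambda n: 1.0,
    "send_recv": lambda n: 1.0,  # point-to-point
}


def bandwidths(op: str, size_bytes: int, seconds: float, world_size: int) -> tuple[float, float]:
    """Return (algbw, busbw) in GB/s for one timed collective."""
    if seconds <= 0 or world_size <= 0:
        raise ValueError("time and world size must be positive")
    algbw = size_bytes / seconds / 1e9
    busbw = algbw * BUSBW_FACTOR[op](world_size)
    return algbw, busbw


if __name__ == "__main__":
    # Hypothetical measurement: a 1 GB all-reduce across 8 ranks took 1 s.
    print(bandwidths("all_reduce", 10**9, 1.0, 8))
```

The factor for all-reduce is larger than for all-gather because a ring all-reduce is effectively a reduce-scatter followed by an all-gather, so each rank moves roughly twice the data.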


@StasBekman @svetly

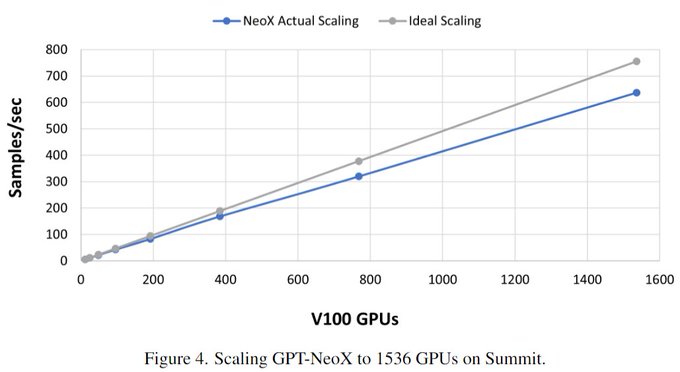

This is on ORNL Summit ().

- 27,648 total V100 GPUs

- 6 V100s/node

- EDR InfiniBand

Node topology:
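With 6 GPUs per node, whether two ranks talk over fast intra-node links or over EDR InfiniBand depends on how global ranks are placed onto nodes. A minimal sketch, assuming contiguous "block" placement (ranks 0 to 5 on node 0, 6 to 11 on node 1, and so on); actual placement depends on launcher settings, and the helper names are hypothetical.

```python
GPUS_PER_NODE = 6   # Summit: 6 V100s per node
TOTAL_GPUS = 27_648  # Summit: total V100 count


def rank_to_slot(rank: int, gpus_per_node: int = GPUS_PER_NODE) -> tuple[int, int]:
    """Map a global rank to (node index, local GPU index), assuming block placement."""
    if rank < 0:
        raise ValueError("rank must be non-negative")
    return divmod(rank, gpus_per_node)


def same_node(a: int, b: int, gpus_per_node: int = GPUS_PER_NODE) -> bool:
    """True if ranks a and b land on the same node under block placement."""
    return rank_to_slot(a, gpus_per_node)[0] == rank_to_slot(b, gpus_per_node)[0]


if __name__ == "__main__":
    print(TOTAL_GPUS // GPUS_PER_NODE)  # number of nodes
    print(rank_to_slot(13))
```

Under this assumption, 27,648 GPUs at 6 per node corresponds to 4,608 nodes.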


@StasBekman @svetly

GPT-NeoX () also scales pretty well there (disclaimer: this is a 1.3B model):
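One common way to quantify "scales pretty well" is scaling efficiency: measured throughput at N GPUs divided by what perfect linear scaling from a smaller baseline run would predict. A minimal sketch, assuming weak scaling (fixed per-GPU batch size) and throughput reported in the same unit for both runs; the function name is hypothetical and the numbers in the example are illustrative only.

```python
def scaling_efficiency(base_gpus: int, base_throughput: float,
                       gpus: int, throughput: float) -> float:
    """Measured throughput divided by ideal linear scaling from a baseline run.

    Throughput can be any rate (e.g. tokens/s) as long as both runs use the
    same unit and the same per-GPU batch size (weak scaling).
    """
    if base_gpus <= 0 or gpus <= 0 or base_throughput <= 0:
        raise ValueError("GPU counts and baseline throughput must be positive")
    ideal = base_throughput * gpus / base_gpus
    return throughput / ideal


if __name__ == "__main__":
    # Illustrative numbers only: one 6-GPU node as the baseline, 10 nodes scaled up.
    print(scaling_efficiency(6, 100.0, 60, 900.0))
```

An efficiency near 1.0 means adding GPUs adds throughput almost proportionally; communication overhead is what typically pulls it down as node count grows.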


Special thanks to my amazing colleagues on this effort! @yury_tokpanov @PaoloGlorioso1 @BerenMillidge @J_Pilault


@1littlecoder

Those models are very small and trained on very few tokens.

Based on that, I actually suspect we started work around the same time. Our models are large enough and trained on enough tokens to be useful artifacts, which takes a bit more time.

We also released them.


@sid09_singh

I don't have anything dataset-specific since I personally use this dataset for "ensure loss goes down" and throughput checks. Maybe port the Pythia configs and use a single epoch?


@StasBekman

For Open MPI, this is `--tag-output` (prefixes each line with `[jobid, MCW_rank]<stdxxx>`, where MCW_rank is the rank in MPI_COMM_WORLD) and `--timestamp-output` ()
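When many ranks write to the same log, the `--tag-output` prefix makes it possible to split the interleaved output back out per rank. A minimal sketch, assuming the prefix looks like `[jobid,rank]<stdout>:` or `[jobid,rank]<stderr>:` as described above (check the mpirun man page for your Open MPI version); lines also carrying a `--timestamp-output` timestamp are not handled here, and `split_by_rank` is a hypothetical helper.

```python
import re
from collections import defaultdict

# Assumed --tag-output prefix: "[jobid,rank]<stdout>:" or "[jobid,rank]<stderr>:".
TAG_RE = re.compile(r"^\[(\d+),\s*(\d+)\]<(stdout|stderr)>:(.*)$")


def split_by_rank(lines):
    """Group tagged mpirun output by (rank, stream); untagged lines are skipped."""
    out = defaultdict(list)
    for line in lines:
        m = TAG_RE.match(line.rstrip("\n"))
        if m:
            _jobid, rank, stream, text = m.groups()
            out[(int(rank), stream)].append(text)
    return dict(out)


if __name__ == "__main__":
    log = ["[1,0]<stdout>:step 1", "[1,1]<stderr>:warning", "[1,0]<stdout>:step 2"]
    print(split_by_rank(log))
```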


@Dynathresh

To my knowledge, there's nothing fundamentally stopping it. The compute and know-how required to pretrain any LLM are prohibitive, and Mamba only came out in Dec 2023. My guess is that larger models are already underway, but they take time.
