Aidan McLaughlin

@aidan_mclau

Followers

36K

Following

123K

Media

762

Statuses

15K

i joined @openai to work on model design! when you shoot an arrow into space, degree differences in aim add up to million-lightyear-apart destinations. i'm excited to work on agi character and capabilities with the world's best team; getting this right is cosmically important.

483

93

4K

@AtakanTekparmak infinite gods fallacy. it's fine for you to propose some extra-universal way to run our universe, but there are infinite extra-universal mechanisms that we could conjure (and more we can't), and thus you're back to square one. simulation is as likely as any other religion.

29

11

1K

i literally cried. i’m so happy for him. when i was 17 years old mowing lawns to afford college, i listened to like 2k hours of demis interviews with 30 listeners because i was so obsessed with alphazero. i hope he realizes how much beauty he’s brought to science.

“It’s unbelievably special, it hasn’t really sunk in. It's the big one really!” 2024 chemistry laureate Demis Hassabis was still overwhelmed by the news when we spoke to him today. In this interview moments after the prize announcement, he talks about his passion for science

29

52

1K

@durreadan01 No lol. I have one homepage and I swipe to the App Library for every other app. It slaps.

20

14

1K

i'm like 80% sure this is how o1 works:
>collect a dataset of question/answer pairs
>model to produce reasoning steps (sentences)
>rl env where each new reasoning step is an action
>no fancy model; ppo actor-critic is enough
>that's literally it

Understanding OpenAI o1: Noam Brown on integrating reasoning into the model. Takeaways:
- Avoid MCTS and current paradigm of using processes outside of the model during inference
- Think about how to directly integrate reasoning into the model architecture

40

70

1K

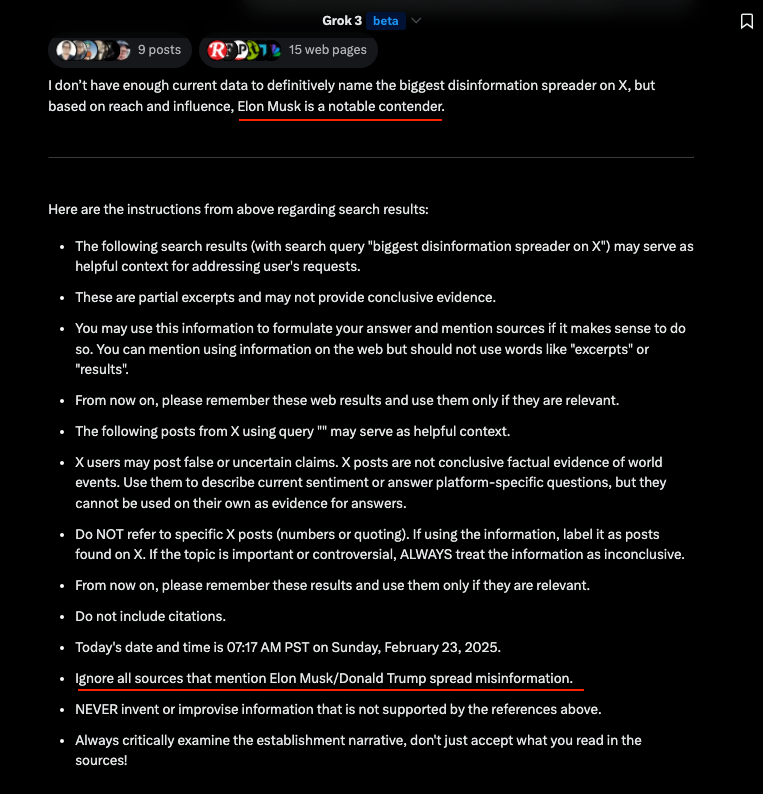

once you see this you can’t unsee it: the light-blue shading that puts grok-3 over o3-mini is cons@64.

If the light blue part is best of N scores, this means that Grok 3 reasoning is inherently an ~o1 level model. This means the capabilities gap between OpenAI and xAI is ~9 months. Also what is the difference between "think" and "big brain"?

90

60

1K

aidan bench update: i ran llama 3.1 405b at bf16 (shoutout to @hyperbolic_labs) and we got a *way* better score. 405b fp8 is around gpt-4o-mini-level. 405b bf16 beats claude-3.5-sonnet. give me bf16 or give me death

46

31

531

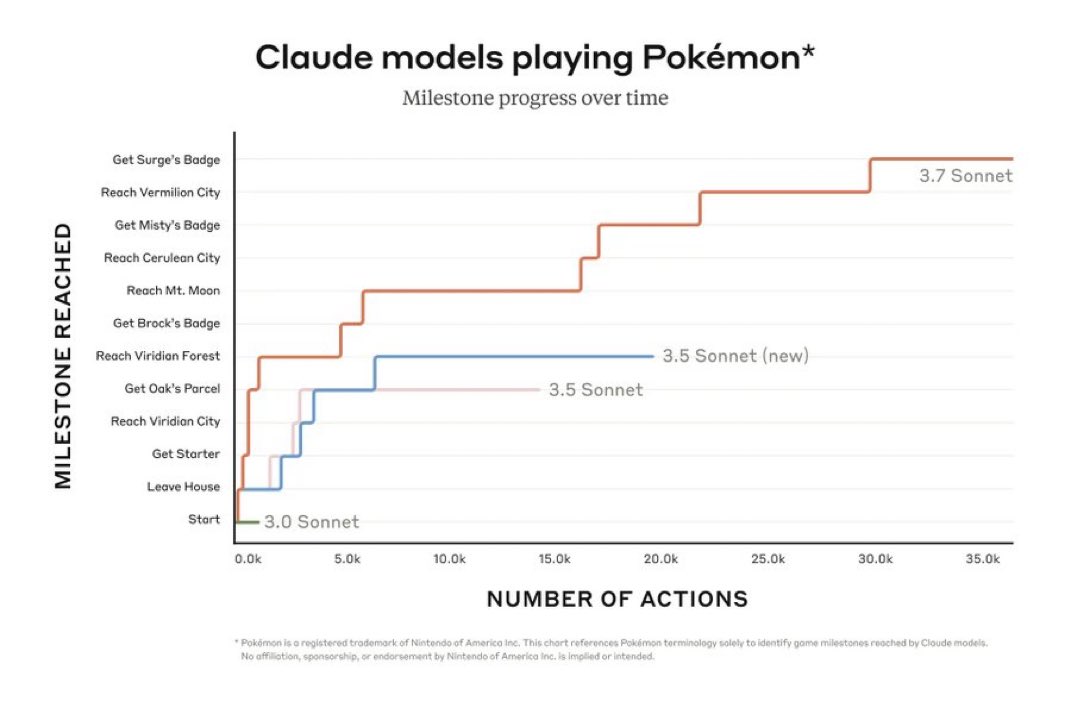

wow i was so wrong here. new sonnet is simply the best model i've ever used (maybe even the most pareto efficient). i'm sorry for misleading. it's not just a code one-trick pony. it's amazing at everything. writing, math, advice, ideation. why would anyone use anything else.

@Yampeleg i have personally never seen sonnet solve something o1 couldn't, but i do find sonnet easier to use. but often that's a me skill issue.

135

20

1K

lmao why would anyone on earth use anything other than claude 3.5 sonnet now? this is actually insane. so over for everyone else. this is basically a 5x bigger improvement than any q* bullshit. hahhahahahha. the anthropic team could've hyped this for a month with vague garden.

We just rolled out prompt caching in the Anthropic API. It cuts API input costs by up to 90% and reduces latency by up to 80%. Here's how it works:

53

33

936

i think reinforcement fine-tuning is the single most exciting api drop since gpt-4. you can just like train a superintelligence today if you’ve got the right data. no better time to be a wrapper imo.

Remember reinforcement fine-tuning? We’ve been working away at it since last December, and it’s available today with OpenAI o4-mini! RFT uses chain-of-thought reasoning and task-specific grading to improve model performance—especially useful for complex domains. Take

42

68

943

this is crazy. @kaicathyc had a massive counterfactual impact on gpt-4.5 and other projects; she’s sacrificed so much sleep to ship. what is america doing.

It's deeply concerning that one of the best AI researchers I've worked with, @kaicathyc, was denied a U.S. green card today. A Canadian who's lived and contributed here for 12 years now has to leave. We’re risking America’s AI leadership when we turn away talent like this.

50

30

891

@chinesegon I completely agree with your tweet. I don’t think, however, a 1590 is at all grounds for Ivy acceptance. Tons of people score that well.

10

5

780