Ziming Liu

@ZimingLiu11

Followers: 9,315 · Following: 640 · Media: 61 · Statuses: 419

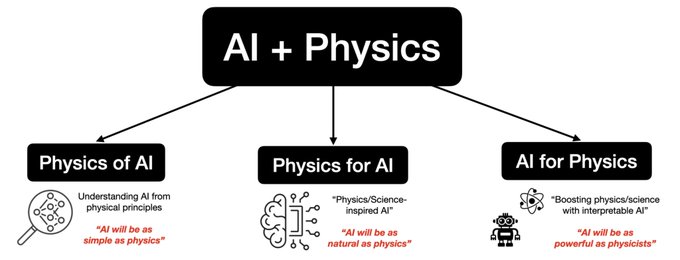

PhD student @MIT, AI for Physics/Science, Science of Intelligence & Interpretability for Science

Joined May 2021


Pinned Tweet

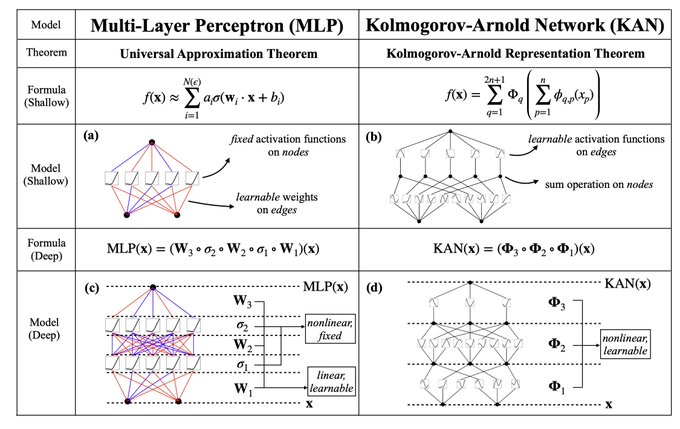

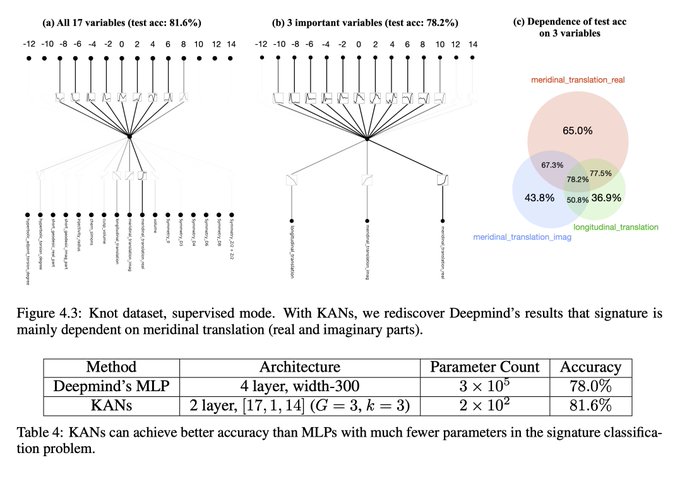

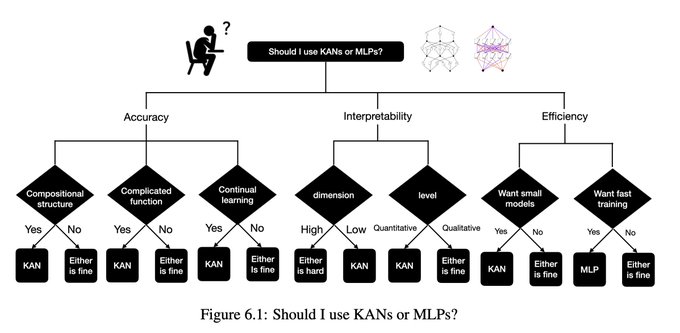

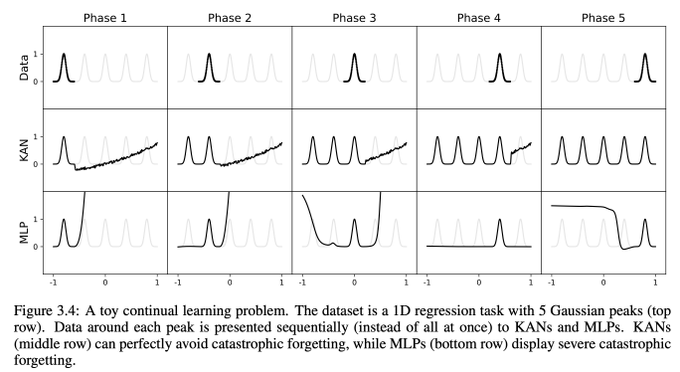

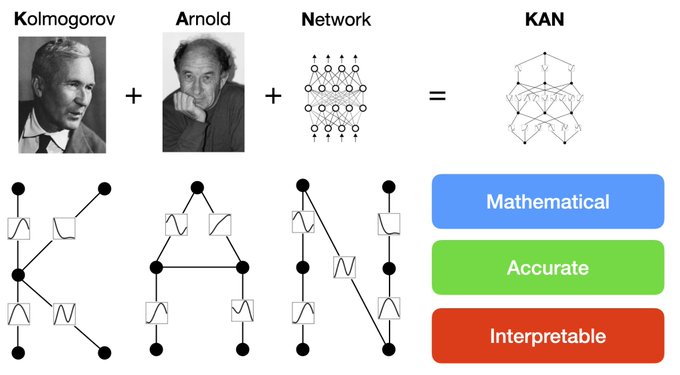

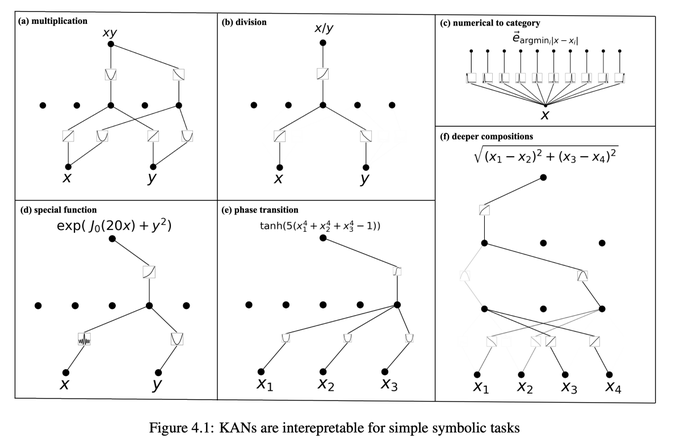

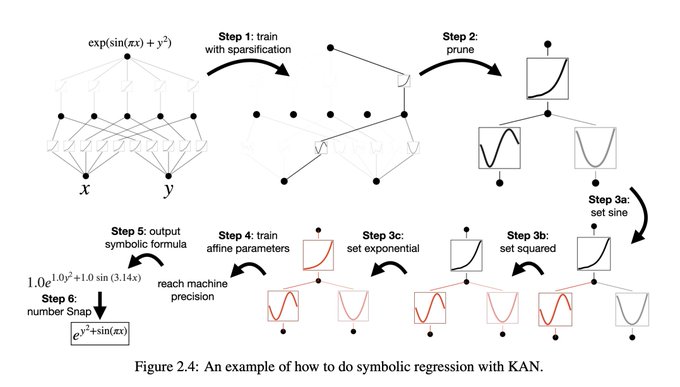

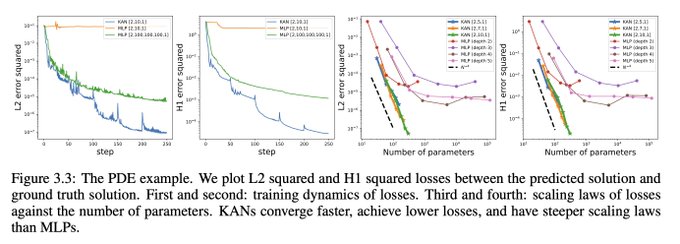

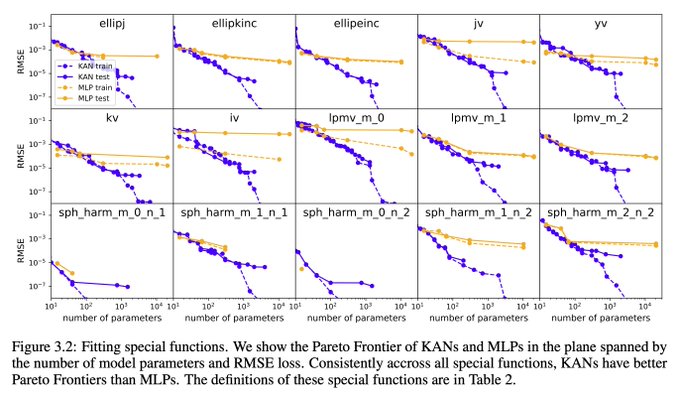

MLPs are so foundational, but are there alternatives? MLPs place activation functions on neurons, but can we instead place (learnable) activation functions on weights? Yes, we KAN! We propose Kolmogorov-Arnold Networks (KAN), which are more accurate and interpretable than MLPs.🧵


@loveofdoing

Thanks for sharing our work! In case anyone's interested in digging more, here's my tweet 😃:


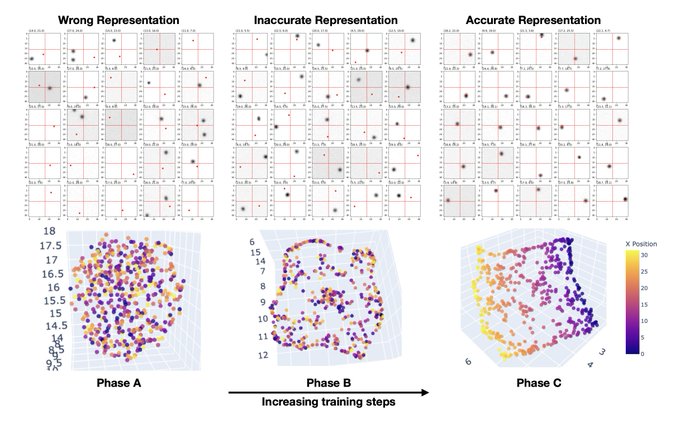

NN interpretability can be more diverse than you think! For modular addition, we find that a “pizza algorithm” can emerge from network training, which is significantly different from the “clock algorithm” found by @NeelNanda5. Phase transitions can happen between pizza & clock!

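For readers new to the “clock algorithm”, here is a minimal, idealized sketch of the idea, not the trained network's actual weights: each residue x is placed on a circle at angle w·x with w = 2πk/p, the two angles are combined with the angle-addition identities, and the answer is read out as the c that maximizes cos(w(a+b−c)). The single key frequency k = 17, the modulus p = 59, and the function names are assumptions chosen for illustration; trained networks may use several frequencies. The pizza algorithm combines the two embeddings differently and is not sketched here.

```python
import numpy as np

def clock_logits(a: int, b: int, p: int, k: int) -> np.ndarray:
    """Idealized single-frequency 'clock' logits for (a + b) mod p.

    Hypothetical helper: token x is embedded as (cos(w*x), sin(w*x)),
    with w = 2*pi*k/p and an assumed key frequency k.
    """
    w = 2 * np.pi * k / p
    ca, sa = np.cos(w * a), np.sin(w * a)
    cb, sb = np.cos(w * b), np.sin(w * b)
    # Angle addition: rotate the "clock hand" for a by the angle for b.
    c_sum = ca * cb - sa * sb  # cos(w(a+b))
    s_sum = sa * cb + ca * sb  # sin(w(a+b))
    c = np.arange(p)
    # cos(w(a+b-c)) = cos(w(a+b))cos(wc) + sin(w(a+b))sin(wc),
    # which peaks at c = (a+b) mod p when gcd(k, p) = 1.
    return c_sum * np.cos(w * c) + s_sum * np.sin(w * c)

def clock_predict(a: int, b: int, p: int = 59, k: int = 17) -> int:
    return int(np.argmax(clock_logits(a, b, p, k)))

p = 59
errors = sum(clock_predict(a, b, p) != (a + b) % p
             for a in range(p) for b in range(p))
print(errors)  # 0
```

Because the readout only needs cos(w(a+b−c)), the network never has to represent a+b as a number; it only has to rotate one angle by another.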

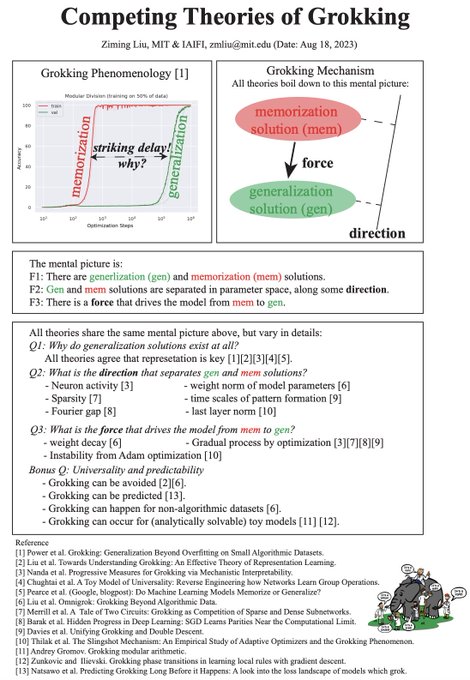

The grokking phenomenon seemed very puzzling and uncontrollable, but no more! We understand grokking through the lens of loss landscapes, and show that we can induce or eliminate grokking on various datasets at will. Joint work with @tegmark and @ericjmichaud_.


@tegmark

1/N MLPs are foundational for today's deep learning architectures. Is there an alternative route/model? We consider a simple change to MLPs: moving activation functions from nodes (neurons) to edges (weights)!

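To make “activation functions on edges” concrete, here is a minimal NumPy sketch of one KAN-style layer, written under stated assumptions rather than as the authors' implementation: every edge i → j carries its own learnable 1D function φ_{j,i}, and a node simply sums its incoming edge outputs. For brevity each φ is a linear combination of fixed Gaussian bumps on a grid; the class name `KANLayerSketch`, `num_basis`, and this RBF parameterization are hypothetical choices (spline bases are a common alternative). By contrast, an MLP layer computes σ(Wx + b) with a fixed σ on the nodes.

```python
import numpy as np

class KANLayerSketch:
    """Toy Kolmogorov-Arnold layer: one learnable 1D function per edge.

    Assumption: phi_{j,i}(x) = sum_k coef[j, i, k] * exp(-((x - c_k) / h)^2),
    i.e. Gaussian radial basis functions on a fixed grid.
    """

    def __init__(self, in_dim, out_dim, num_basis=8, x_range=(-1.0, 1.0), seed=0):
        rng = np.random.default_rng(seed)
        self.centers = np.linspace(x_range[0], x_range[1], num_basis)  # (K,)
        self.width = (x_range[1] - x_range[0]) / (num_basis - 1)
        # One coefficient vector per edge: shape (out_dim, in_dim, K).
        self.coef = rng.normal(scale=0.1, size=(out_dim, in_dim, num_basis))

    def basis(self, x):
        # x: (batch, in_dim) -> basis values (batch, in_dim, K)
        return np.exp(-((x[..., None] - self.centers) / self.width) ** 2)

    def __call__(self, x):
        b = self.basis(x)
        # phi[n, j, i]: output of the edge function from input i to node j.
        phi = np.einsum("jik,nik->nji", self.coef, b)
        # Nodes only sum incoming edge outputs; no fixed nonlinearity on nodes.
        return phi.sum(axis=2)  # (batch, out_dim)

# Usage: a 2 -> 5 -> 1 stack on a small random batch.
x = np.random.default_rng(1).uniform(-1, 1, size=(4, 2))
layer1 = KANLayerSketch(2, 5)
layer2 = KANLayerSketch(5, 1, seed=1)
y = layer2(layer1(x))
print(y.shape)  # (4, 1)
```

The 2 → 5 → 1 stack mirrors the [n, 2n+1, 1] shape suggested by the Kolmogorov-Arnold representation for n = 2. Training (fitting `coef`, e.g. by gradient descent) is omitted.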

In a week, I can probably cross out my whole todo list. 🥲


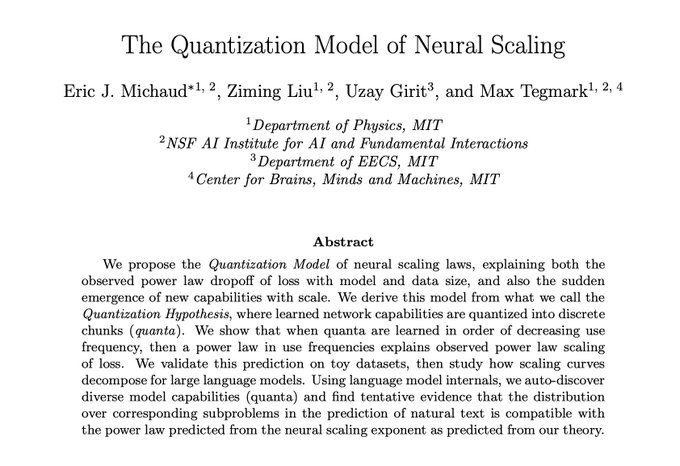

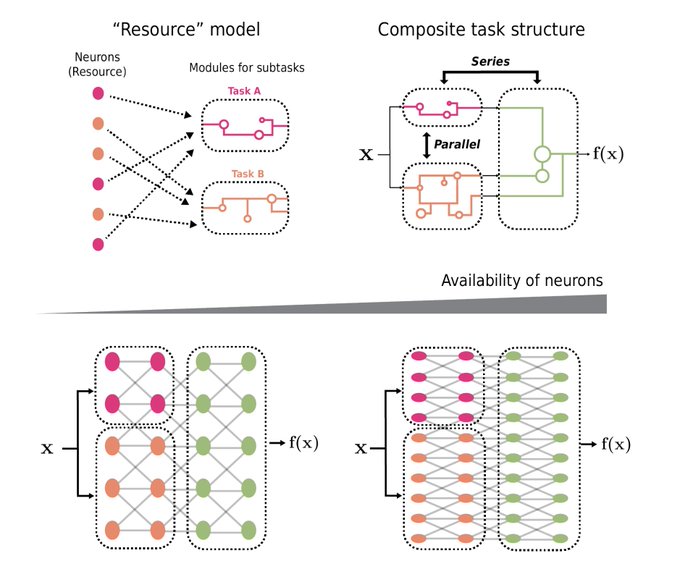

What do large language models and quantum physics have in common? They are both quantized! We find that a neural network contains many computational quanta, whose distribution can explain neural scaling laws. Happy to be part of the project led by @ericjmichaud_ and @tegmark!

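How a distribution over quanta can turn into a power-law scaling curve can be checked numerically. The sketch below assumes, as an idealization, that quanta are used with Zipf-like frequencies p_k ∝ k^−(α+1), that a model learns the n most frequent ones, and that each unlearned quantum costs a fixed amount of loss. The remaining loss is then the tail mass Σ_{k>n} p_k, which scales roughly as n^−α. The function name `tail_loss`, α = 0.5, and the cutoff of two million quanta are illustrative assumptions, not values from the paper.

```python
import numpy as np

def tail_loss(n_learned: int, alpha: float, num_quanta: int = 2_000_000) -> float:
    """Idealized loss after learning the n most frequent quanta.

    Assumptions: quantum k is used with probability proportional to
    k^-(alpha+1), and every unlearned quantum contributes unit loss.
    """
    k = np.arange(1, num_quanta + 1, dtype=np.float64)
    p = k ** -(alpha + 1)
    p /= p.sum()
    # p[n_learned:] holds quanta k = n_learned+1, ..., num_quanta (not learned).
    return float(p[n_learned:].sum())

alpha = 0.5
n1, n2 = 100, 1000
# Log-log slope of loss versus number of learned quanta.
slope = np.log(tail_loss(n2, alpha) / tail_loss(n1, alpha)) / np.log(n2 / n1)
print(round(slope, 2))  # close to -alpha
```

If the number of learned quanta grows with model size or data, this tail-sum argument yields a power-law loss curve; the finite cutoff makes the measured slope deviate slightly from −α.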

@tegmark

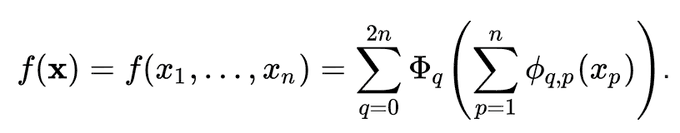

3/N Inspired by the representation theorem, we explicitly parameterize the Kolmogorov-Arnold representation with neural networks. In honor of two great late mathematicians, Andrey Kolmogorov and Vladimir Arnold, we call them Kolmogorov-Arnold Networks (KANs).


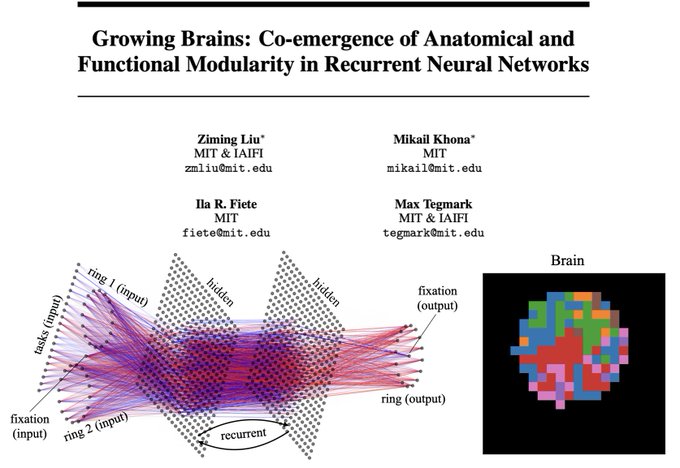

I'm glad to have presented our recent work at Prof. Levin's group! I talked about how neuroscience can help AI interpretability and how AI interpretability, in turn, helps neuroscience. Here's the recording:

Growing "Brains" in Artificial Neural Networks via @YouTube


@tegmark

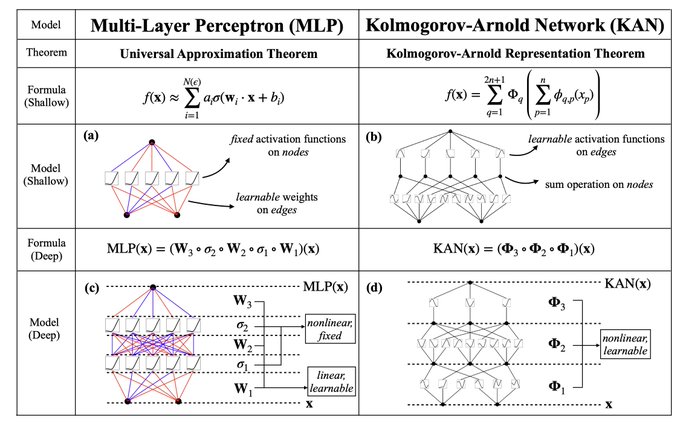

2/N This change may seem to come out of nowhere at first, but it has deep connections to approximation theory in math. It turns out that the Kolmogorov-Arnold representation corresponds to 2-layer networks with (learnable) activation functions on edges instead of on nodes.

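For reference, the Kolmogorov-Arnold representation theorem states that any continuous function f: [0,1]^n → ℝ can be written using only continuous univariate functions and addition, with inner functions φ_{q,p}: [0,1] → ℝ and outer functions Φ_q: ℝ → ℝ:

```latex
f(x_1, \dots, x_n) = \sum_{q=0}^{2n} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right)
```

Read as a network, the inner functions φ_{q,p} sit on the edges from the n inputs to 2n+1 hidden nodes, and the outer functions Φ_q sit on the edges from those nodes to a single output. This is the 2-layer, activations-on-edges picture described above.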

@tegmark

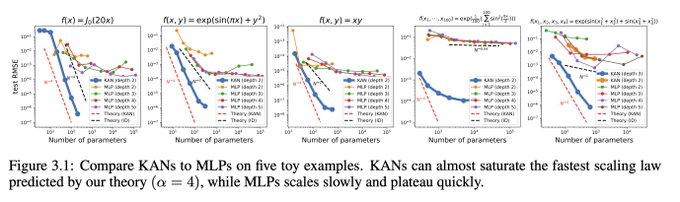

4/N From the math side: MLPs are inspired by the universal approximation theorem (UAT), while KANs are inspired by the Kolmogorov-Arnold representation theorem (KART). Can a network achieve infinite accuracy with a fixed width? UAT says no, while KART says yes (with a caveat).

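The contrast can be written down explicitly. For a suitable fixed nonlinearity σ (e.g., a sigmoid), the universal approximation theorem guarantees that for any continuous f on a compact set K and any ε > 0 there exist a width N and parameters a_i, w_i, b_i such that

```latex
\left| f(\mathbf{x}) - \sum_{i=1}^{N} a_i \, \sigma\!\left(\mathbf{w}_i^{\top} \mathbf{x} + b_i\right) \right| < \varepsilon
\quad \text{for all } \mathbf{x} \in K .
```

The required width N generally grows as ε → 0, so in general no single fixed width reaches arbitrary accuracy for every f. KART, by contrast, represents a continuous f on [0,1]^n exactly using a fixed number (2n+1) of hidden nodes.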

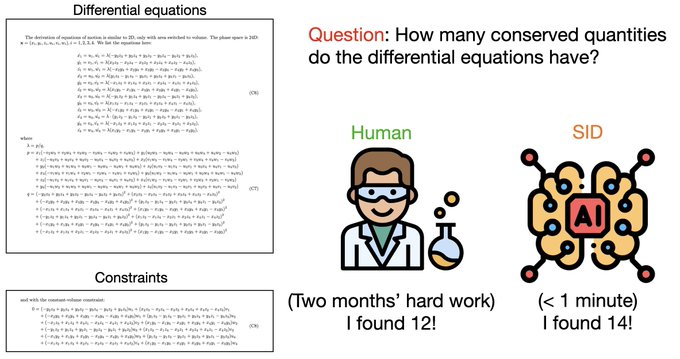

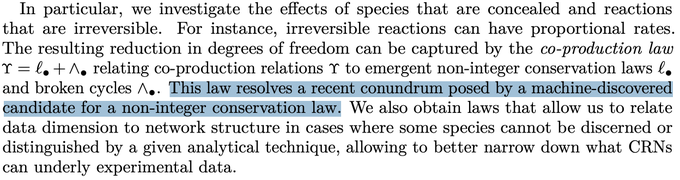

A strange conservation law discovered by our AI method is now understood by domain experts (see this paper)! This is a special moment for me; I've never felt this proud of the tools we're building! And, NO, AI isn't replacing scientists; it's complementing us.


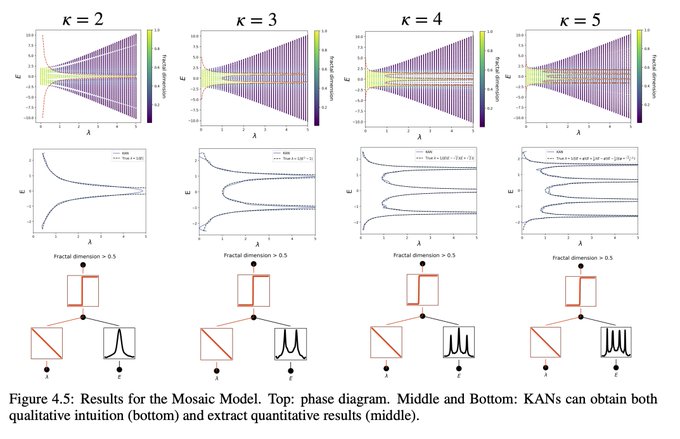

A review on Scientific discovery in the Age of AI, now published in @Nature! Happy to be part of this exciting project with @AI_for_Science friends!

…


This is joint work with @tegmark and awesome collaborators from MIT, Northeastern, IAIFI and Caltech.


The pizza paper has been accepted to #NeurIPS23 as an oral presentation: mechanistic interpretability can be more diverse (but also more subtle) than you think! This calls for (automatic) classification of algorithms, which would be less biased by human intuition than the way MI is done now.


Our work “Omnigrok: grokking beyond algorithmic data” has been selected as a spotlight at ICLR 2023! Beyond grokking, our unique way of visualizing loss landscapes holds promise for explaining other emergent capabilities of AI, studying neural dynamics, and unraveling generalization.


It was my great pleasure to be on the Cognitive Revolution Podcast! @labenz and I discussed in depth my recent work with @tegmark on making neural networks modular and interpretable using a simple trick inspired by brains. Mechanistic interpretability can be much easier!

[new episode] @labenz interviews @ZimingLiu11 at MIT about his research: making neural networks more modular and interpretable.

They discuss:

- The intersection of biology, physics, and AI research
- Mechanistic interpretability advancements
- Those visuals!

Links ↓


Really excited that our research on grokking and pizza has been covered by @QuantaMagazine! Artificial intelligence is more or less "Alien Intelligence": just as in biology, we need scientific methods to study these "new lives".


@Sentdex

Thanks for sharing our work! In case anyone's interested in digging more, here's my tweet 😃:


If I ever grew taller, these two photos would be more similar 😝 @ke_li_2021 @YuanqiD

Look up to role models @geoffreyhinton, Yoshua Bengio, @ylecun


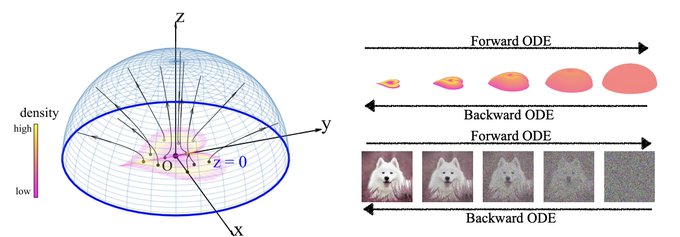

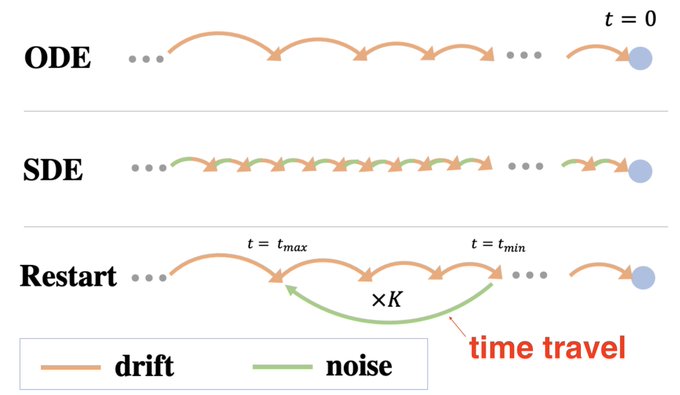

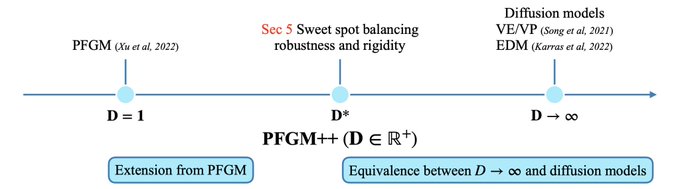

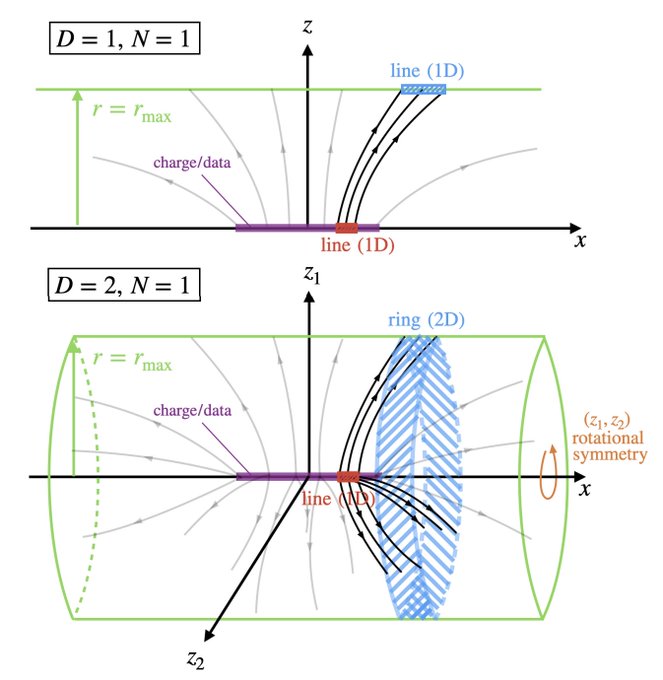

Tired of diffusion models being too slow, but don't know where to start with our new physics-inspired model, PFGM? This is the perfect blog post for you: very approachable, both for understanding the big picture and for getting hands-on experience!

Stable Diffusion runs on physics-inspired Deep Learning.

Researchers from MIT (first authors @ZimingLiu11 and @xuyilun2) have recently unveiled a new physics-inspired model that runs even faster!

This introduction has everything you need to know 👇


Paper link here in case you're interested😃:

Ziming Liu @ZimingLiu11 from @MIT discussing "A Neural Scaling Law from Lottery Ticket Ensembling" #MITAIScaling

@MITFutureTech


Really nice write-up on grokking from Google 👍

Do Machine Learning Models Memorize or Generalize?

An interactive introduction to grokking and mechanistic interpretability w/ @ghandeharioun, @nadamused_, @Nithum, @wattenberg and @iislucas


Advertisement alert🚨

I'll be presenting five projects at #NeurIPS2023, with topics including mechanistic interpretability, neural scaling laws, generative models, grokking, and neuroscience-inspired AI for neuroscience🧐. Everyone is welcome!


Also fun to collaborate (again) with my friend @RoyWang67103904, whom I've known since elementary school :)


@shaohua0116

Sad, but still better than what I got after my 600-word rebuttal addressing all the questions they asked: “after reading other reviewers’ comments, I decided to lower my score.” 😂


Next week I’ll be attending NeurIPS! Looking forward to meeting all of you! Reach out if you want to chat (topics include, but are not limited to: physics of deep learning, AI for science, mech interp, etc.).

Also don’t forget to come to our @AI_for_Science workshop on Saturday! 🤩


@aidan_mclau

Thanks for sharing our work! In case anyone's interested in digging more, here's my tweet 😃:


@arankomatsuzaki

Thanks for sharing our work! In case anyone's interested in digging more, here's my tweet:


Excited to attend #NeurIPS2022 from Tuesday to Saturday. If you are also a fan of AI + Physics, let's chat! DMs are welcome.


Following up on my previous tweet, I'll be at #NeurIPS2023 from Tuesday to Saturday! I've been thinking about these questions and would love to hear people's thoughts on them. In return, I'll also offer my two cents if you're interested :-). A thread🧵.


We present a simple effective theory and phase diagrams to demystify grokking, a generalization puzzle discovered by @OpenAI last year.

arXiv:


@kaufman35288

The examples in our paper are all reproducible in less than 10 minutes on a single CPU (except for the parameter sweeps). To be honest, the problem scales are obviously smaller than those of typical machine learning tasks, but they are typical for science-related tasks.


@_akhaliq

Thank you so much for sharing our work! In case anyone's interested in digging more, here's my tweet:
