Yilun Xu

@xuyilun2

Followers 2K · Following 1K · Media 23 · Statuses 98

World Sim @GoogleDeepMind. Prev: @NVIDIA, PhD @MIT_CSAIL, BS @PKU1898. Views are my own.

Joined September 2018

It's rewarding to see my work back in 2019 still in the conversation.

A new type of information theory. This paper is not super well-known but has changed my opinion of how deep learning works more than almost anything else. It says that we should measure the amount of information available in some representation based on how *extractable* it is,
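The quoted idea can be made concrete with a toy, assuming the 2019 work in question is the predictive V-information ("usable information") framework: fix a family V of decoders and count as information only the drop in best-achievable cross-entropy that a decoder in V gains from seeing the input, I_V(X → Y) = H_V(Y | ∅) − H_V(Y | X). This sketch takes V to be linear-softmax classifiers fit by plain gradient descent (our choice; all helper names are hypothetical) and uses XOR, where the label is fully determined by the input yet invisible to a linear decoder until the input is re-encoded.

```python
import numpy as np

def softmax_regression_loss(X, y, num_classes, steps=3000, lr=0.5):
    """Fit a linear-softmax classifier (weights + bias) by full-batch gradient descent
    and return the mean cross-entropy, in nats, that it reaches on (X, y)."""
    n = X.shape[0]
    Xb = np.hstack([X, np.ones((n, 1))])      # append a constant bias feature
    W = np.zeros((Xb.shape[1], num_classes))
    Y = np.eye(num_classes)[y]                 # one-hot labels

    def log_probs(W):
        logits = Xb @ W
        logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
        return logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    for _ in range(steps):
        P = np.exp(log_probs(W))
        W -= lr * Xb.T @ (P - Y) / n           # gradient of the mean cross-entropy
    return -log_probs(W)[np.arange(n), y].mean()

def label_entropy(y, num_classes):
    """Best cross-entropy a predictor can reach without seeing X: the label entropy."""
    p = np.bincount(y, minlength=num_classes) / len(y)
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())

def linear_usable_information(X, y, num_classes):
    """I_V(X -> Y) = H_V(Y | no input) - H_V(Y | X), with V = linear-softmax predictors."""
    return label_entropy(y, num_classes) - softmax_regression_loss(X, y, num_classes)

# XOR: the label is a deterministic function of (x1, x2), so Shannon I(X; Y) = ln 2 nats.
x = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 0])
raw = linear_usable_information(x, y, 2)

# Same information, re-encoded with an x1*x2 feature so a linear decoder can extract it.
x_with_product = np.hstack([x, x[:, :1] * x[:, 1:]])
lifted = linear_usable_information(x_with_product, y, 2)
print(f"raw: {raw:.3f} nats, with x1*x2 feature: {lifted:.3f} nats")
```

Shannon mutual information is ln 2 for both encodings, since each determines the label; the linear usable information is about 0 for the raw pair and close to ln 2 once the product feature is added. Both estimates are on the training points only.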


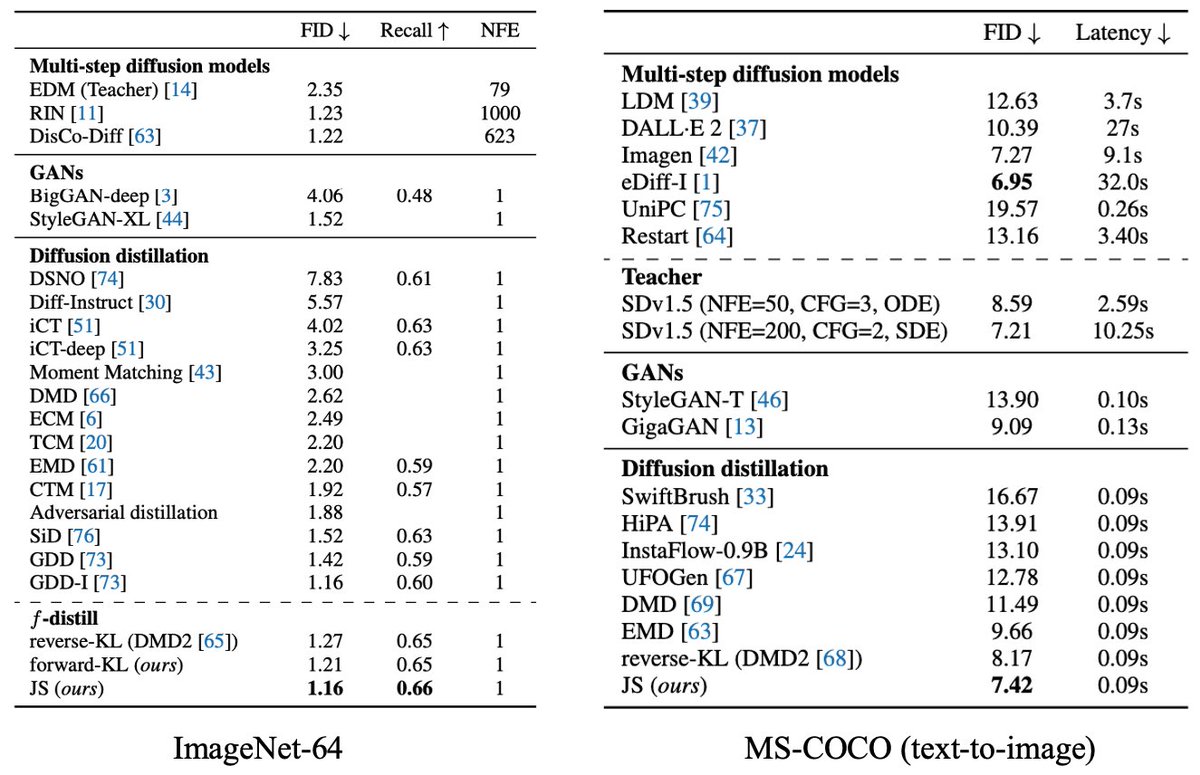

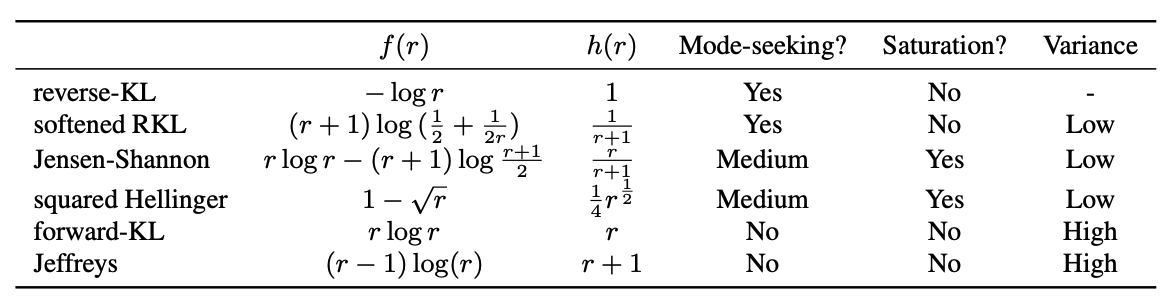

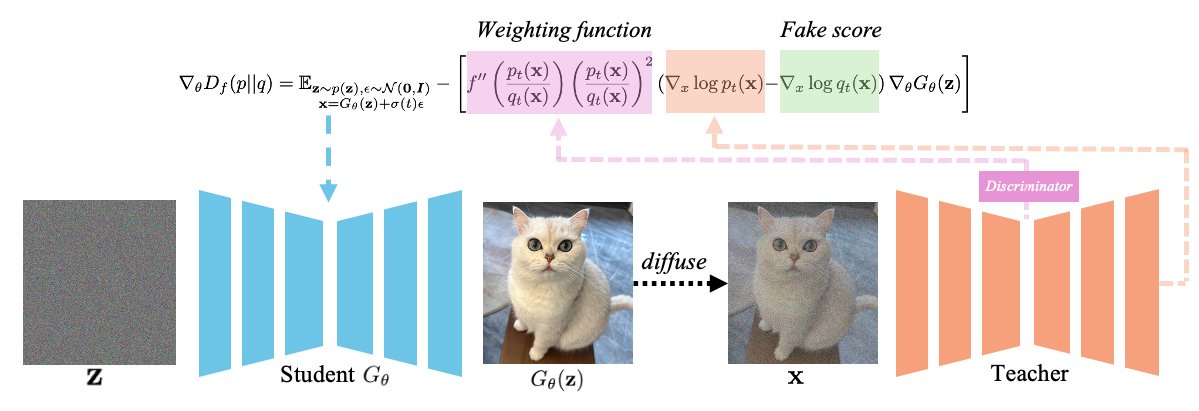

Tired of slow diffusion models? Our new paper introduces f-distill, which enables arbitrary f-divergences for one-step diffusion distillation. Jensen-Shannon (JS) divergence gives SOTA results on text-to-image generation! Choose the divergence that suits your needs. Joint work with @wn8_nie @ArashVahdat 1/N
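The "choose your divergence" point rests on the fact that every f-divergence has the form D_f(P‖Q) = Σ_x q(x) f(p(x)/q(x)) for a convex f with f(1) = 0, so changing the divergence means changing f. A minimal sketch for discrete distributions; the generator table and function names are ours, and f-distill's actual training objective (which works with samples from a teacher and a one-step student) is not reproduced here.

```python
import numpy as np

# Convex generators f with f(1) = 0; each choice of f gives a different divergence.
F_GENERATORS = {
    "forward_kl": lambda u: u * np.log(u),                  # KL(P || Q)
    "reverse_kl": lambda u: -np.log(u),                     # KL(Q || P)
    "jensen_shannon": lambda u: 0.5 * (u * np.log(u) - (u + 1) * np.log((u + 1) / 2)),
}

def f_divergence(p, q, name):
    """D_f(P || Q) = sum_x q(x) * f(p(x) / q(x)) for discrete P, Q with full support."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    return float(np.sum(q * F_GENERATORS[name](p / q)))

p = np.array([0.7, 0.2, 0.1])
q = np.array([0.2, 0.3, 0.5])
for name in F_GENERATORS:
    print(f"{name}: {f_divergence(p, q, name):.4f}")
```

The JS generator here is scaled so that the result equals ½ KL(P‖M) + ½ KL(Q‖M) with M = (P + Q)/2, which is bounded by ln 2.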


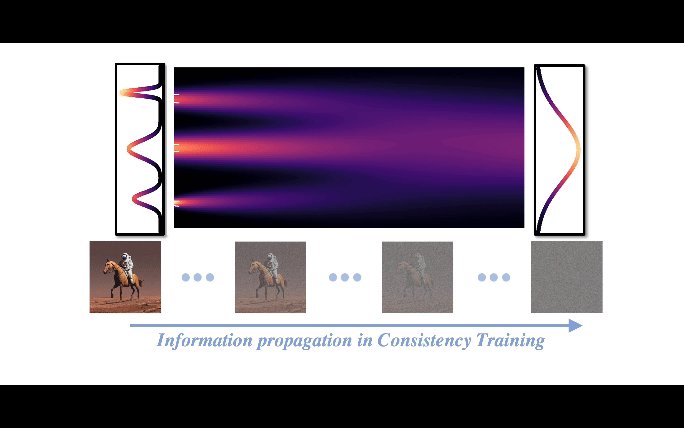

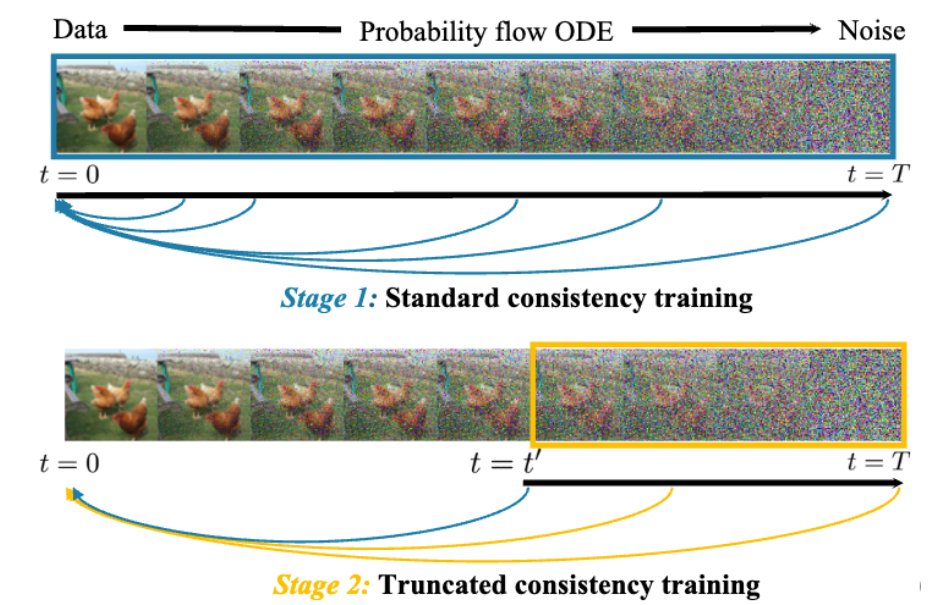

⚡️ New recipe for turning diffusion models into one-step generators! Intuition: in consistency training, information *propagates* from data to noise. This enables multi-stage training that allocates model capacity better. Excited to see a simple intuition give SOTA consistency models!

Time to make your diffusion models one step! Excited to share our recent work on Truncated Consistency Models (TCM), a new state-of-the-art consistency model. TCM outperforms a previous SOTA, iCT-deep, in both one-step and two-step FID while using networks more than 2x smaller. Joint work.
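The propagation intuition shows up in the common consistency-model parameterization, sketched here under assumptions: f(x, t) = c_skip(t)·x + c_out(t)·F(x, t) is forced to equal x at the smallest noise level ε, and training only compares outputs at adjacent noise levels, so the single exact anchor sits at the data end and is carried outward toward noise. SIGMA_DATA = 0.5, EPS = 0.002 and a maximum noise level of 80 are assumed defaults, `toy_net` is a hypothetical untrained stand-in, and TCM's truncated, multi-stage schedule is not implemented.

```python
import numpy as np

SIGMA_DATA = 0.5   # assumed data standard deviation
EPS = 0.002        # assumed smallest noise level (the data end of the trajectory)

def c_skip(t):
    return SIGMA_DATA**2 / ((t - EPS) ** 2 + SIGMA_DATA**2)

def c_out(t):
    return SIGMA_DATA * (t - EPS) / np.sqrt(SIGMA_DATA**2 + t**2)

def consistency_fn(net, x, t):
    """f(x, t) = c_skip(t) * x + c_out(t) * net(x, t).
    At t = EPS, c_skip = 1 and c_out = 0, so f(x, EPS) = x exactly: the data-end anchor."""
    return c_skip(t) * x + c_out(t) * net(x, t)

def consistency_training_pair(net, x0, t_lo, t_hi, rng):
    """Noise clean data to two adjacent levels with the SAME Gaussian draw.
    Training pulls f at t_hi (online) toward f at t_lo (a stop-gradient target in practice),
    so correctness can only spread outward from the anchor at EPS, one level at a time."""
    z = rng.standard_normal(x0.shape)
    online = consistency_fn(net, x0 + t_hi * z, t_hi)
    target = consistency_fn(net, x0 + t_lo * z, t_lo)
    return online, target

def one_step_sample(net, shape, t_max, rng):
    """One-step generation: map pure noise at t_max directly to a sample."""
    return consistency_fn(net, t_max * rng.standard_normal(shape), t_max)

# Usage with a hypothetical, untrained stand-in network.
toy_net = lambda x, t: np.tanh(x)
rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 3))
online, target = consistency_training_pair(toy_net, x0, t_lo=0.5, t_hi=0.6, rng=rng)
loss = np.mean((online - target) ** 2)   # squared-L2 consistency distance (one common choice)
sample = one_step_sample(toy_net, (2, 3), t_max=80.0, rng=rng)  # assumed max noise level
```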


Check out our new model for heavy-tailed data 🧐, built on a Student-t kernel. Through this project, I came to realize that PFGM++ uses the Student-t kernel too. Nature is magical! Thermodynamics gives us the Gaussian (diffusion models); electrostatics gives us the Student-t (PFGM++). What's next?

🌪️ Can Gaussian-based diffusion models handle heavy-tailed data, like extreme scientific events? The answer is NO. We’ve redesigned diffusion models with multivariate Student-t noise to tackle heavy tails! 📈 📝 Read more:
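The kernel claim can be checked directly. PFGM++ perturbs an N-dimensional data point y with p_r(x | y) ∝ (‖x − y‖² + r²)^(−(N+D)/2), where D is the number of augmented dimensions; that is a multivariate Student-t with ν = D degrees of freedom and scale r/√D, so it can be sampled as a Gaussian scale mixture. A sketch assuming NumPy; the function name and demo settings are ours, and the heavy-tailed diffusion paper's own noise schedule is not reproduced.

```python
import numpy as np

def pfgmpp_perturb(y, r, D, rng):
    """Sample x ~ p_r(x | y) ∝ (||x - y||^2 + r^2)^(-(N + D) / 2) for each row of y.

    This is a multivariate Student-t with nu = D degrees of freedom and scale r / sqrt(D),
    drawn as a Gaussian scale mixture: x = y + r * z / sqrt(u), z ~ N(0, I_N), u ~ chi^2_D.
    """
    z = rng.standard_normal(y.shape)
    u = rng.chisquare(D, size=(y.shape[0], 1))   # one mixing variable per sample (row)
    return y + r * z / np.sqrt(u)

rng = np.random.default_rng(0)
sigma = 1.0
y = np.zeros((100_000, 4))   # clean data at the origin, N = 4 dimensions

# r = sigma * sqrt(D) is the alignment under which large D matches Gaussian noise of std sigma.
heavy = pfgmpp_perturb(y, r=sigma * np.sqrt(4), D=4, rng=rng)            # small D: heavy tails
gauss_limit = pfgmpp_perturb(y, r=sigma * np.sqrt(1e6), D=1e6, rng=rng)  # large D: ~ N(0, sigma^2)

tail_t = np.mean(np.abs(heavy) > 4 * sigma)
tail_gauss = np.mean(np.abs(gauss_limit) > 4 * sigma)
print(f"P(|x_i| > 4 sigma): D=4 -> {tail_t:.4f}, D=1e6 -> {tail_gauss:.5f}")
```

Small D puts visibly more mass beyond 4σ, while D → ∞ recovers N(0, σ²) noise, the diffusion-model limit.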


The generation process of discrete diffusion models can be simplified by first using a *planner* to predict where the noisy tokens are, and then using a *denoiser* to refine them. An adaptive sampling scheme emerges naturally from the planner: more noisy tokens, more sampling steps.

Discrete generative models use denoisers for generation, but denoisers can slip up. What if generation *isn’t only* about denoising? 🤔 Introducing DDPD: Discrete Diffusion with Planned Denoising 🤗🧵 (1/11). w/ @junonam_ @AndrewC_ML @HannesStaerk @xuyilun2 Tommi Jaakkola @RGBLabMIT
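The planner/denoiser split and the adaptive step count can be sketched as a generic loop. This is an illustration under assumptions, not DDPD's exact sampler: `planner` and `denoiser` are hypothetical callables, the 0.5 stopping threshold is our choice, and the demo uses an oracle planner that knows the clean sequence, which a learned planner would only approximate.

```python
import numpy as np

def planned_denoising_sample(x, planner, denoiser, rng, threshold=0.5, max_steps=1000):
    """Planner-then-denoiser sampling loop (a sketch, not DDPD's exact sampler).
    planner(x)     -> per-position probability that each token is still noisy (hypothetical)
    denoiser(x, i) -> proposed clean token for position i (hypothetical)
    The loop ends when the planner flags no position, so the number of steps
    grows with the number of noisy tokens."""
    x = np.array(x)                      # work on a copy
    steps = 0
    while steps < max_steps:
        p_noisy = np.asarray(planner(x), dtype=float)
        if np.all(p_noisy < threshold):  # planner believes the sequence is clean
            break
        i = rng.choice(len(x), p=p_noisy / p_noisy.sum())  # plan: where to fix next
        x[i] = denoiser(x, i)                                # denoise: what to write there
        steps += 1
    return x, steps

# Demo with an ORACLE planner/denoiser that know the clean sequence (hypothetical).
target = np.array([3, 1, 4, 1, 5, 9, 2, 6])
planner = lambda x: (x != target).astype(float)
denoiser = lambda x, i: target[i]

rng = np.random.default_rng(0)
noisy = target.copy()
noisy[[1, 4, 6]] = (target[[1, 4, 6]] + 1) % 10   # corrupt three tokens with wrong values
out, steps = planned_denoising_sample(noisy, planner, denoiser, rng)
print(out, steps)
```

Each step fixes exactly one flagged position, so with three corrupted tokens the loop runs three steps, and with none it stops immediately.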


Does my PhD thesis title predict today's Nobel outcome? 😬 “On Physics-Inspired Generative Models”.

Officially passed my PhD thesis defense today! I'm deeply grateful to my collaborators and friends for their support throughout this journey. Huge thanks to my amazing thesis committee: Tommi Jaakkola (advisor), @karsten_kreis, and @phillip_isola! 🎓✨


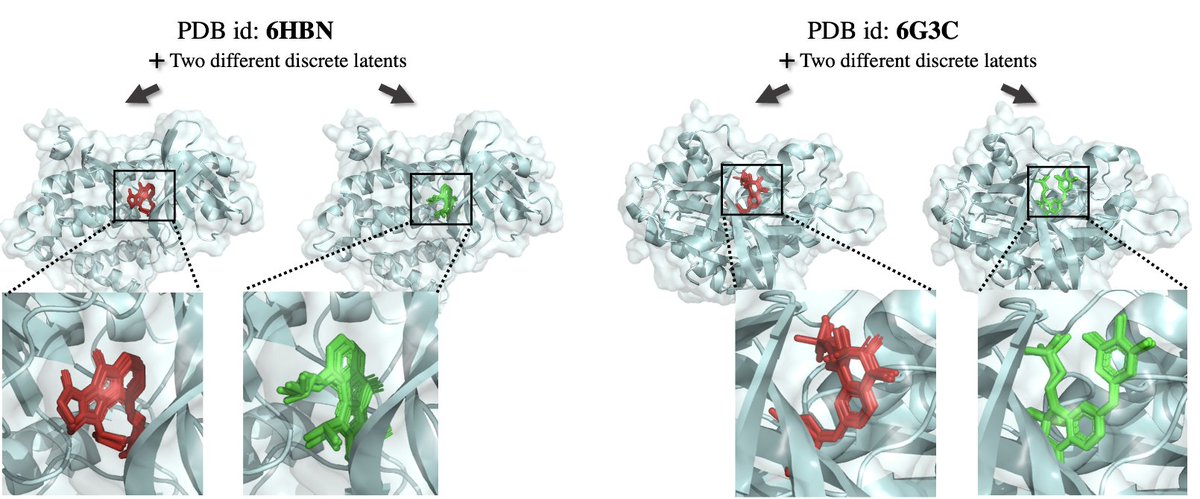

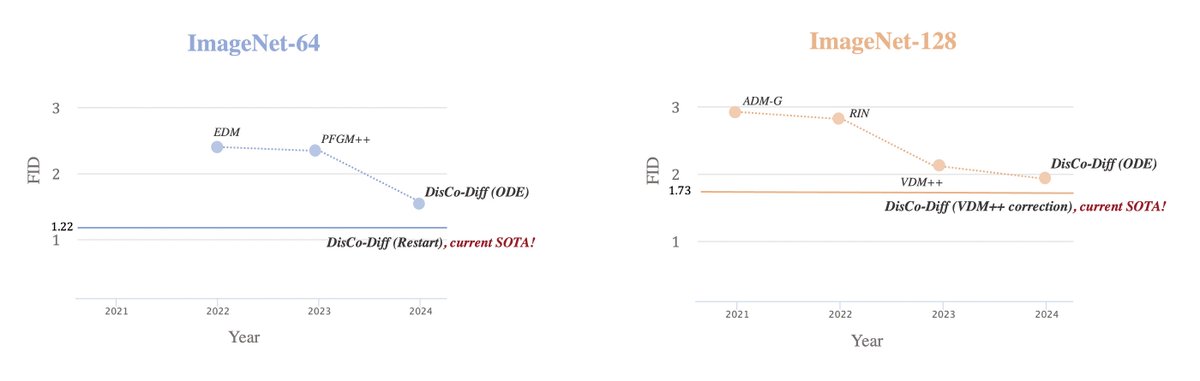

For more information, please visit our project page: . Shout-out to my awesome collaborators and advisors @GabriCorso, Tommi Jaakkola, @ArashVahdat, and @karsten_kreis (9/9).

research.nvidia.com

DisCo-Diff combines continuous diffusion models with learnable discrete latents, simplifying the ODE process and enhancing performance across various tasks.
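One way to see why discrete latents can simplify the ODE, in a toy of our own rather than DisCo-Diff's architecture: for 1-D data drawn from two narrow modes and noised as x_t = x_0 + t·ε, the ideal denoiser E[x_0 | x_t] is strongly nonlinear, but once the mode index k is given (an oracle stand-in for a learned discrete latent), E[x_0 | x_t, k] is exactly affine in x_t. In this noising convention the probability-flow ODE drift is (x − D(x; t))/t, so an affine denoiser makes the ODE linear. The mode positions, S = 0.1 and t = 0.5 are assumptions.

```python
import numpy as np

MODES = np.array([-1.0, 1.0])   # toy 1-D data: two equally likely modes (assumption)
S = 0.1                          # within-mode standard deviation (assumption)

def denoiser_given_mode(x_t, t, k):
    """E[x0 | x_t, k] for x0 ~ N(MODES[k], S^2) and x_t = x0 + t * eps: affine in x_t."""
    mu = MODES[k]
    return mu + S**2 / (S**2 + t**2) * (x_t - mu)

def denoiser_unconditional(x_t, t):
    """E[x0 | x_t] for the two-mode mixture: posterior-weighted average of per-mode denoisers."""
    var = S**2 + t**2                                           # variance of x_t given a mode
    logw = -((x_t[:, None] - MODES[None, :]) ** 2) / (2 * var)  # equal priors and variances
    w = np.exp(logw - logw.max(axis=1, keepdims=True))
    w /= w.sum(axis=1, keepdims=True)                           # posterior p(k | x_t)
    per_mode = np.stack([denoiser_given_mode(x_t, t, k) for k in range(len(MODES))], axis=1)
    return (w * per_mode).sum(axis=1)

def nonlinearity(values, x):
    """RMS residual of the best straight-line fit; 0 means the map is affine."""
    slope, intercept = np.polyfit(x, values, 1)
    return float(np.sqrt(np.mean((slope * x + intercept - values) ** 2)))

t = 0.5
x = np.linspace(-3.0, 3.0, 601)
cond_nl = nonlinearity(denoiser_given_mode(x, t, 0), x)
uncond_nl = nonlinearity(denoiser_unconditional(x, t), x)
print(f"with latent k=0: {cond_nl:.2e}, without latent: {uncond_nl:.3f}")
```

Without the latent, the denoiser behaves like a smoothed step between the modes; with it, the network only has to represent a straight line per mode.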
