Naman Goyal

@NamanGoyal21

Followers

2K

Following

3K

Media

2

Statuses

207

Research @thinkymachines, previously pretraining LLAMA at GenAI Meta

Joined November 2012

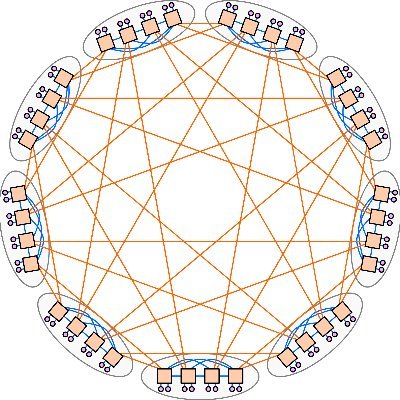

MiniMax M2 Tech Blog 3: Why Did M2 End Up as a Full Attention Model? On behalf of pre-training lead Haohai Sun. (https://t.co/WH4xOD9KrT) I. Introduction As the lead of MiniMax-M2 pretrain, I've been getting many queries from the community on "Why did you turn back the clock

We’re open-sourcing MiniMax M2 — Agent & Code Native, at 8% Claude Sonnet price, ~2x faster ⚡ Global FREE for a limited time via MiniMax Agent & API - Advanced Coding Capability: Engineered for end-to-end developer workflows. Strong capability on a wide-range of applications

23

121

802

Thanks @thinkymachines for supporting Tinker access for our CS329x students on Homework 2 😉

It's not even been a month since @thinkymachines released Tinker & Stanford already has an assignment on it

8

38

588

Tinker is cool. If you're a researcher/developer, Tinker dramatically simplifies LLM post-training. You retain 90% of algorithmic creative control (usually related to data, loss function, the algorithm) while Tinker handles the hard parts that you usually want to touch much less

Introducing Tinker: a flexible API for fine-tuning language models. Write training loops in Python on your laptop; we'll run them on distributed GPUs. Private beta starts today. We can't wait to see what researchers and developers build with cutting-edge open models!

110

651

6K

LoRA makes fine-tuning more accessible, but it's unclear how it compares to full fine-tuning. We find that the performance often matches closely---more often than you might expect. In our latest Connectionism post, we share our experimental results and recommendations for LoRA.

82

565

3K

At Thinking Machines, our work includes collaborating with the broader research community. Today we are excited to share that we are building a vLLM team at @thinkymachines to advance open-source vLLM and serve frontier models. If you are interested, please DM me or @barret_zoph!

39

81

1K

Apologies that I haven't written anything since joining Thinking Machines but I hope this blog post on a topic very near and dear to my heart (reproducible floating point numerics in LLM inference) will make up for it!

Today Thinking Machines Lab is launching our research blog, Connectionism. Our first blog post is “Defeating Nondeterminism in LLM Inference” We believe that science is better when shared. Connectionism will cover topics as varied as our research is: from kernel numerics to

75

206

3K

so apparently swe-bench doesn’t filter out future repo states (with the answers) and the agents sometimes figure this out… https://t.co/dCxr8EALhq

github.com

We've identified multiple loopholes with SWE Bench Verified where agents may look at future repository state (by querying it directly or through a variety of methods), and cases in which future...

7

19

299

The past 4 months have been among the most rewarding of my career—filled with learning and building alongside some of the most talented ML research and infra folks I know. I truly believe magic happens when driven, talented people are aligned on a shared mission.

Thinking Machines Lab exists to empower humanity through advancing collaborative general intelligence. We're building multimodal AI that works with how you naturally interact with the world - through conversation, through sight, through the messy way we collaborate. We're

0

3

121

🚨🔥 CUTLASS 4.0 is released 🔥🚨 `pip install nvidia-cutlass-dsl` 4.0 marks a major shift for CUTLASS: towards native GPU programming in Python https://t.co/pBLMpQAXHW

16

86

426

Congrats to my amazing friends and ex-colleagues on a killer release! Pushing the frontier of open-source models pushes the whole field forward!

Today is the start of a new era of natively multimodal AI innovation. Today, we’re introducing the first Llama 4 models: Llama 4 Scout and Llama 4 Maverick — our most advanced models yet and the best in their class for multimodality. Llama 4 Scout • 17B-active-parameter model

5

3

74

This is what we have been up to and much more! come join us!!! 🚀

Great to visit one of our data centers where we're training Llama 4 models on a cluster bigger than 100K H100’s! So proud of the incredible work we’re doing to advance our products, the AI field and the open source community. We’re hiring top researchers to work on reasoning,

1

0

16

Thousands of GPUs isn't cool. You know what's cool? Thousands of hosts

0

0

7

Does style matter over substance in Arena? Can models "game" human preference through lengthy and well-formatted responses? Today, we're launching style control in our regression model for Chatbot Arena — our first step in separating the impact of style from substance in

45

113

883

llama1: 2048 GPUs, llama2: 4096 GPUs, llama3: 16384 GPUs, llama4: ..... You see where we are headed! Gonna be an insane ride!

Starting today, open source is leading the way. Introducing Llama 3.1: Our most capable models yet. Today we’re releasing a collection of new Llama 3.1 models including our long awaited 405B. These models deliver improved reasoning capabilities, a larger 128K token context

2

4

97

Very excited to release the technical report and the model weights for all 3 sizes of llama3 models. It has been an exciting past 12 months. Really looking forward to the incredible research this will unlock from the community. Now on to llama4 🚀

Starting today, open source is leading the way. Introducing Llama 3.1: Our most capable models yet. Today we’re releasing a collection of new Llama 3.1 models including our long awaited 405B. These models deliver improved reasoning capabilities, a larger 128K token context

2

4

53

Pretty cool! Nice work. Really happy to see the amazing research that open-sourcing base model weights can enable.

The @AiEleuther interpretability team is releasing a set of top-k sparse autoencoders for every layer of Llama 3 8B: https://t.co/bATEFXH0sr We are working on an automated pipeline to explain the SAE features, and will start training SAEs for the 70B model shortly.

0

0

5

This is extremely exciting, looking forward to the impact it will have on biology. The team behind EvolutionaryScale is one of the most talented and passionate groups of people I have interacted with.

We have trained ESM3 and we're excited to introduce EvolutionaryScale. ESM3 is a generative language model for programming biology. In experiments, we found ESM3 can simulate 500M years of evolution to generate new fluorescent proteins. Read more: https://t.co/iAC3lkj0iV

0

1

26