Miles Cranmer

@MilesCranmer

Followers

13K

Following

5K

Media

570

Statuses

3K

Assistant Prof @Cambridge_Uni, works on AI for the physical sciences. Previously: Flatiron, DeepMind, Princeton, McGill

Joined September 2011

Here's a condensed version of the matplotlib cheatsheets so it can fit a desktop background. Full image: and vectorized .svg, with the non-standard fonts outlined: Thanks @NPRougier et al. for making it!

7

381

2K

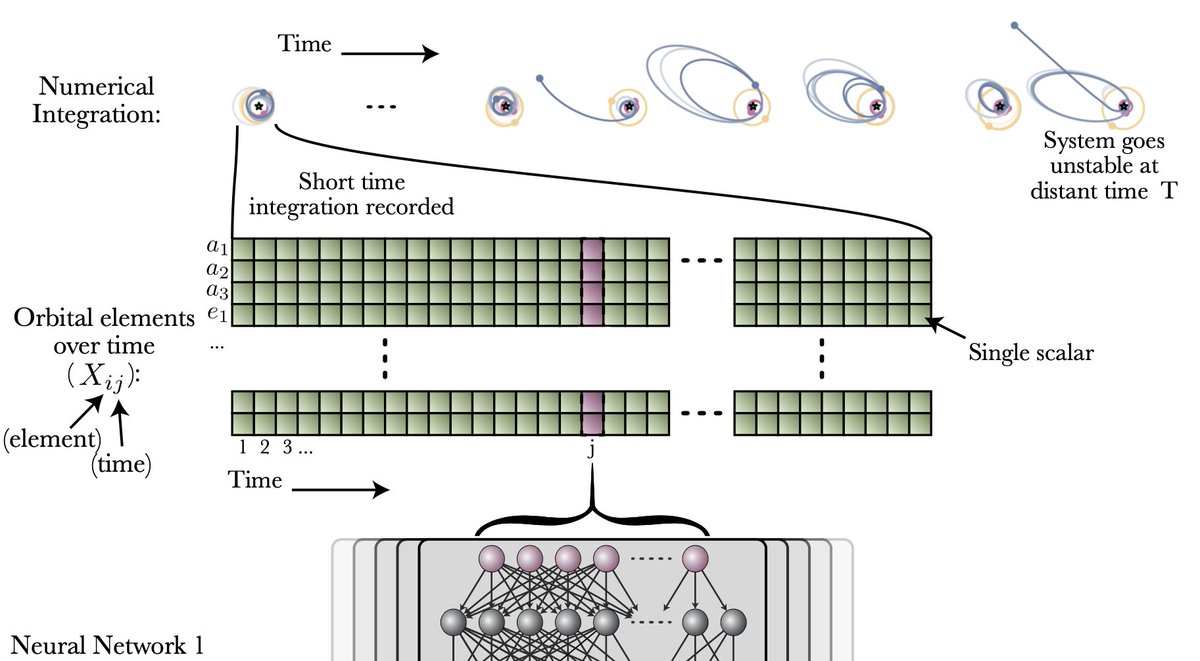

Could machine learning rediscover the law of gravitation simply by observing our solar system? With our new approach, the answer is *YES*. Led by: @PabloLemosP. With: @Niall_Jeffrey @cosmo_shirley @PeterWBattaglia. Paper: Blog:

23

308

1K

If you’ve never tried it, is the single best explanatory tool for neural networks. An essential demo for any deep learning course! I still notice improvements in my intuition just by tinkering with it. From @dsmilkov @shancarter.

9

262

1K

TabNine is awesome: It suggests code completions in real-time using deep learning conditioned on your existing code. Free plugins for Jupyter, vim, emacs, sublime, and VS. Really enjoying it so far. Thanks @ykilcher for pointing it out!

17

177

837

If you use PyTorch, I highly recommend checking out @huggingface's Accelerate: It's as minimal as it is powerful: multi-GPU/TPU training, while still preserving your original training loop! You can even run multi-device from a Jupyter notebook:

4

117

658

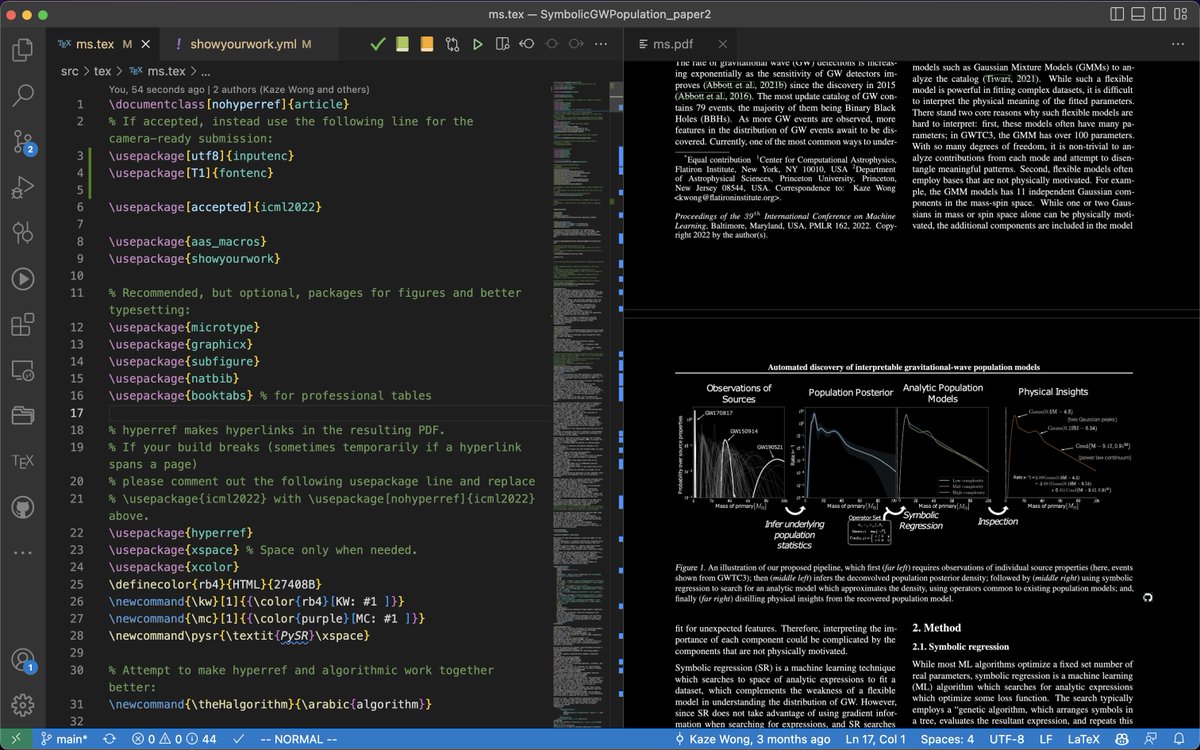

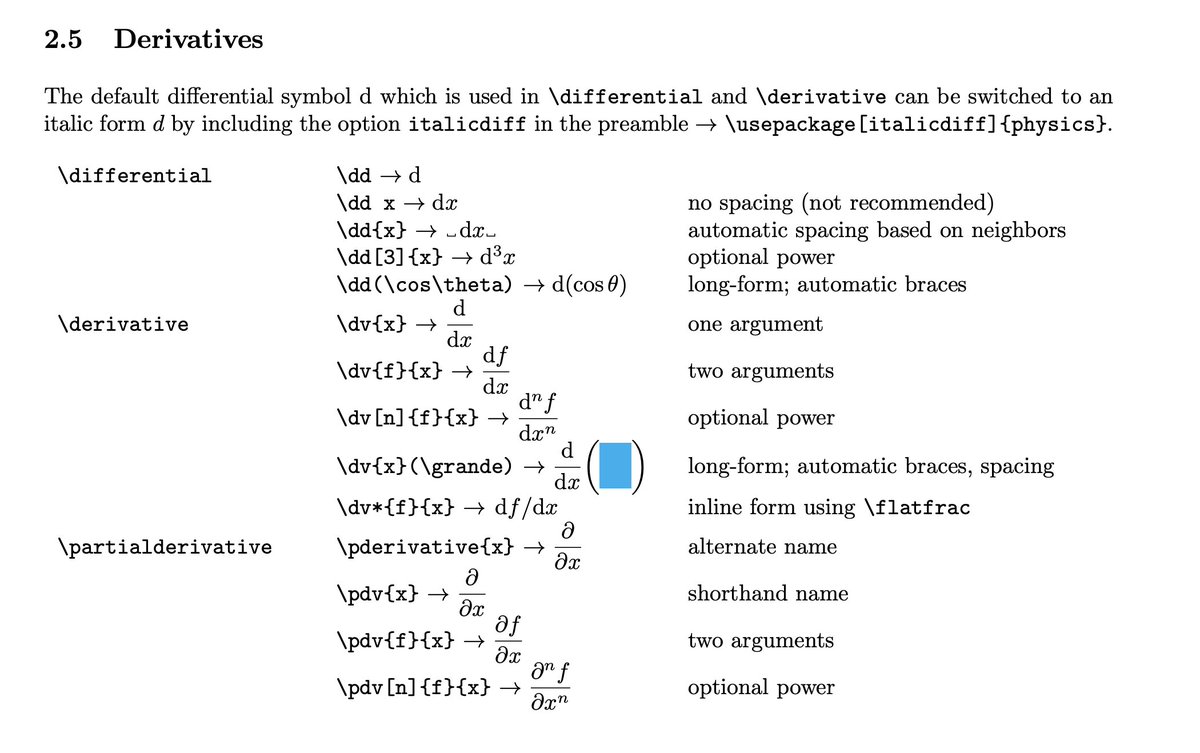

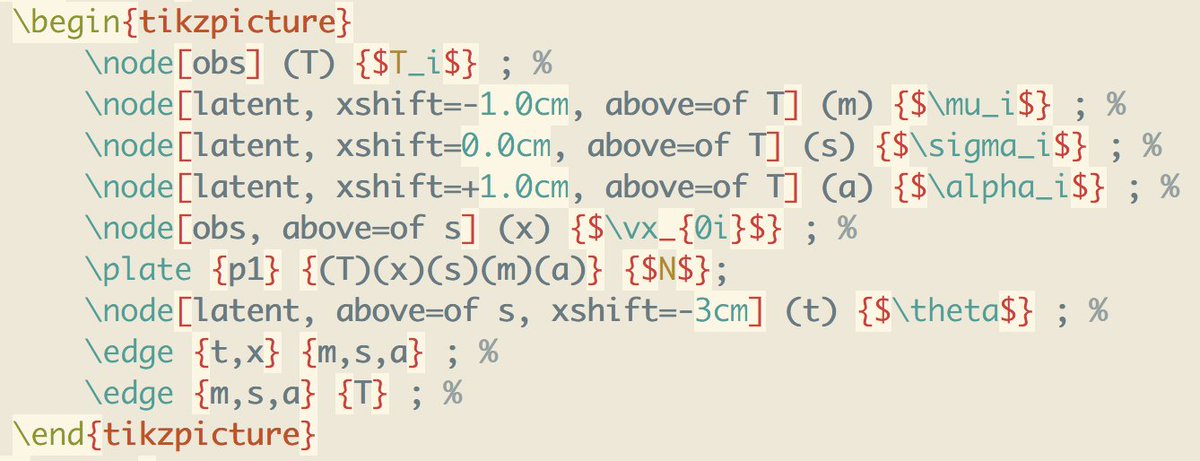

Wow, @sagemath's LaTeX package is amazing. It bridges the gap between symbolic math software and LaTeX presentation. Uses SymPy as the algebra backend and formats the output into the pdf. Wish I knew about this in undergrad!

12

105

521

Oh. My. God. Can someone please port this to PyTorch?

Excited to share Penzai, a JAX research toolkit from @GoogleDeepMind for building, editing, and visualizing neural networks! Penzai makes it easy to see model internals and lets you inject custom logic anywhere. Check it out on GitHub:

5

61

518

I think the best part of today's news is it will encourage more AI hires in physics departments.

BREAKING NEWS. The Royal Swedish Academy of Sciences has decided to award the 2024 #NobelPrize in Physics to John J. Hopfield and Geoffrey E. Hinton “for foundational discoveries and inventions that enable machine learning with artificial neural networks.”

32

47

503

Wow, JAX is amazing. Thanks for introducing me @shoyer. It's essentially numpy on steroids: parallel functions, GPU support, autodiff, JIT compilation, deep learning. #NeurIPS2019

6

97

477

So 1) Lagrangian/Hamiltonian NNs enforce time symmetry, 2) Graph Nets enforce translational symmetry, and 3) Group-CNNs enforce rotational symmetry. But are there any NNs that can enforce an arbitrary learned symmetry? @wellingmax @DaniloJRezende @KyleCranmer?

20

72

409

I love how @andrewwhite01 just casually released the greatest academic search tool ever created: Literature reviews on steroids?

I packed up a full-text paper scraper, vector database, and LLM into a CLI to answer questions from only highly-cited peer-reviewed papers. Feels unreal to be able to instantly get answers by an LLM "reading" dozens of papers. 1/2

4

37

360

Master's program opportunity – Apply to be a @GoogleDeepMind Scholar at Cambridge in our DIS program! I see this as an ideal program to launch a career in AI for the physical sciences, so am very excited that DeepMind have offered support for underrepresented students 🎉 More

8

60

360

1/10 Very excited to present Lagrangian Neural Networks, a new type of architecture that conserves energy in a learned simulator without requiring canonical coordinates. w/ @samgreydanus, @shoyer, @PeterWBattaglia, @DavidSpergel, @cosmo_shirley:

11

92

358

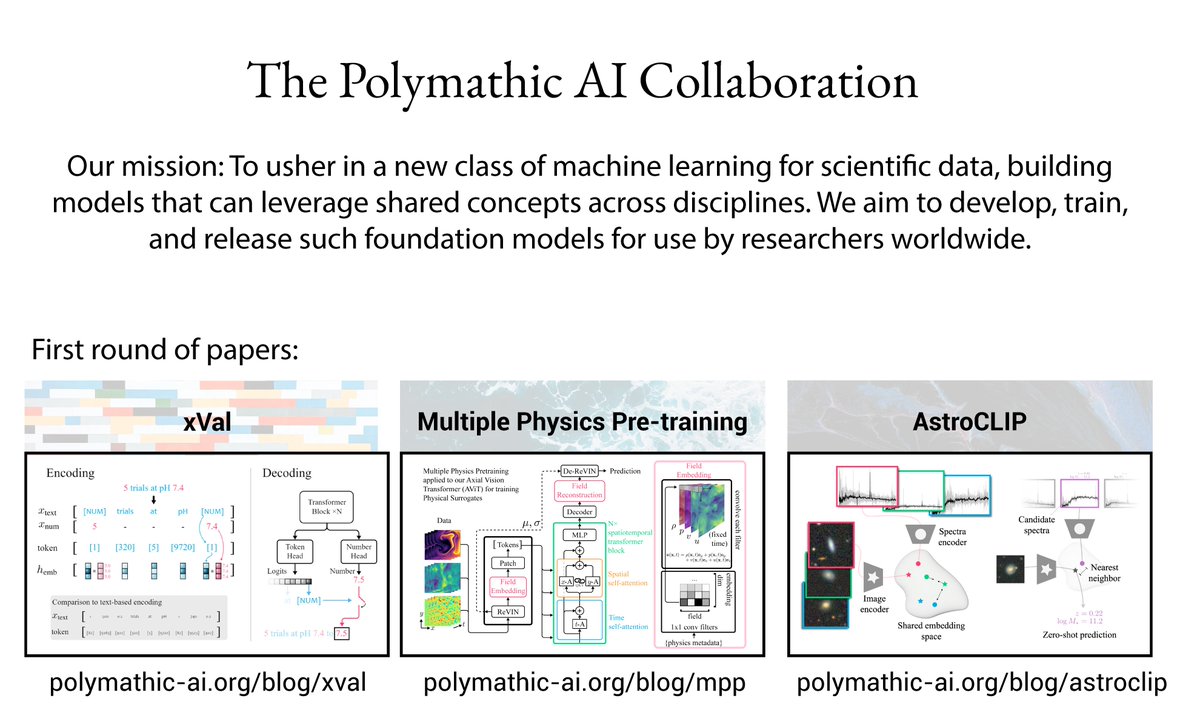

Job alert! 🚨 We are building a *Foundation Model for Science*. @SimonsFdn + @FlatironCCA are supporting PhD internships + faculty sabbaticals! w/ @cosmo_shirley @kchonyc @Tim_Dettmers @oharub ++. Interested in building "ScienceGPT" with us? Please apply! (links in 2nd tweet)

12

76

346

I'm late, but weight averaging seems like a great trick for improving DL generalization (@Pavel_Izmailov et al). Take a pretrained model, do SGD about the minima, and average the weights. Thanks @andrewgwils for the recommendation! Found a big improvement in my tuned model at zero cost:

3

52

338
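The weight-averaging recipe in the post above (start from a pretrained model, keep running SGD near the minimum, average the weights) can be sketched in plain Python. This is only an illustrative toy under stated assumptions, not the method from the cited work: the function names, the quadratic loss, the noise level, and the snapshot schedule are all hypothetical choices made for the example.

```python
import random


def update_running_average(avg, weights, n_averaged):
    """Fold one more weight snapshot into a running mean of n_averaged snapshots."""
    if avg is None:
        return list(weights)
    return [a + (w - a) / (n_averaged + 1) for a, w in zip(avg, weights)]


def sgd_with_weight_averaging(weights, grad_fn, lr=0.1, steps=200, average_every=10):
    """Run plain SGD from `weights`, averaging a snapshot every `average_every` steps.

    Returns (final_weights, averaged_weights). The average is None if no
    snapshot was taken (steps < average_every).
    """
    weights = list(weights)
    avg, n_averaged = None, 0
    for step in range(1, steps + 1):
        grads = grad_fn(weights)
        weights = [w - lr * g for w, g in zip(weights, grads)]
        if step % average_every == 0:
            avg = update_running_average(avg, weights, n_averaged)
            n_averaged += 1
    return weights, avg


# Hypothetical toy problem: noisy gradients of 0.5 * ||w - TARGET||^2.
TARGET = [1.0, -2.0]
_rng = random.Random(0)


def noisy_grad(weights):
    return [(w - t) + _rng.gauss(0.0, 0.5) for w, t in zip(weights, TARGET)]


# Start at the minimum to mimic a "pretrained" model, then keep doing SGD.
final_w, averaged_w = sgd_with_weight_averaging(TARGET, noisy_grad)
print("last SGD iterate:", final_w)
print("averaged weights:", averaged_w)
```

The individual SGD iterates keep bouncing around the minimum because of gradient noise, while the average of the snapshots smooths that noise out. In PyTorch, `torch.optim.swa_utils.AveragedModel` provides a ready-made version of the running average.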

🧵 Could this be the ImageNet moment for scientific AI? Today with @PolymathicAI and others we're releasing two massive datasets that span dozens of fields - from bacterial growth to supernova! We want this to enable multi-disciplinary foundation model research.

12

88

336

My lectures this week include 'Best practices' and I will be assigning @karpathy's neural net training blog for reading material :) Really an *essential* read for every practitioner!

Very excited to start teaching my deep learning course at Cambridge this week, as part of our Data Intensive Science MPhil! Teaching the first part from @SimonPrinceAI's "Understanding Deep Learning" book, which has quickly become one of my favorite textbooks in *any* field.

1

35

316

I'm really starting to like @michael_nielsen's strategy of reading papers. Write down a question about the background or results, find the answer, distill, repeat. It feels like test-driven development. Write a test, make it work, refactor, repeat.

7

35

320

Using paperqa, I fed GPT every paper in my Zotero library and asked: "What are some ways machine learning can be used in observational astronomy?" It generated the entire literature review below. Not bad at all! With @andrewwhite01's

13

45

313

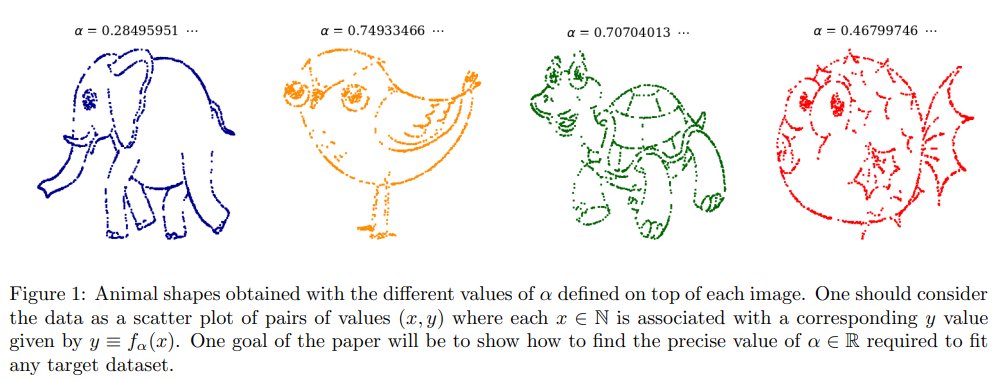

Happy to announce that our work on converting deep models to symbolic equations has been accepted to NeurIPS! 🍾 @PeterWBattaglia @cosmo_shirley @DavidSpergel @KyleCranmer

Very excited to share our new paper "Discovering Symbolic Models from Deep Learning with Inductive Biases"! We describe an approach to convert a deep model into an equivalent symbolic equation. Blog/code: Paper: Thread 👇 1/n

3

41

294

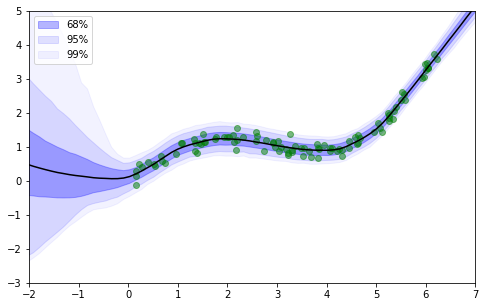

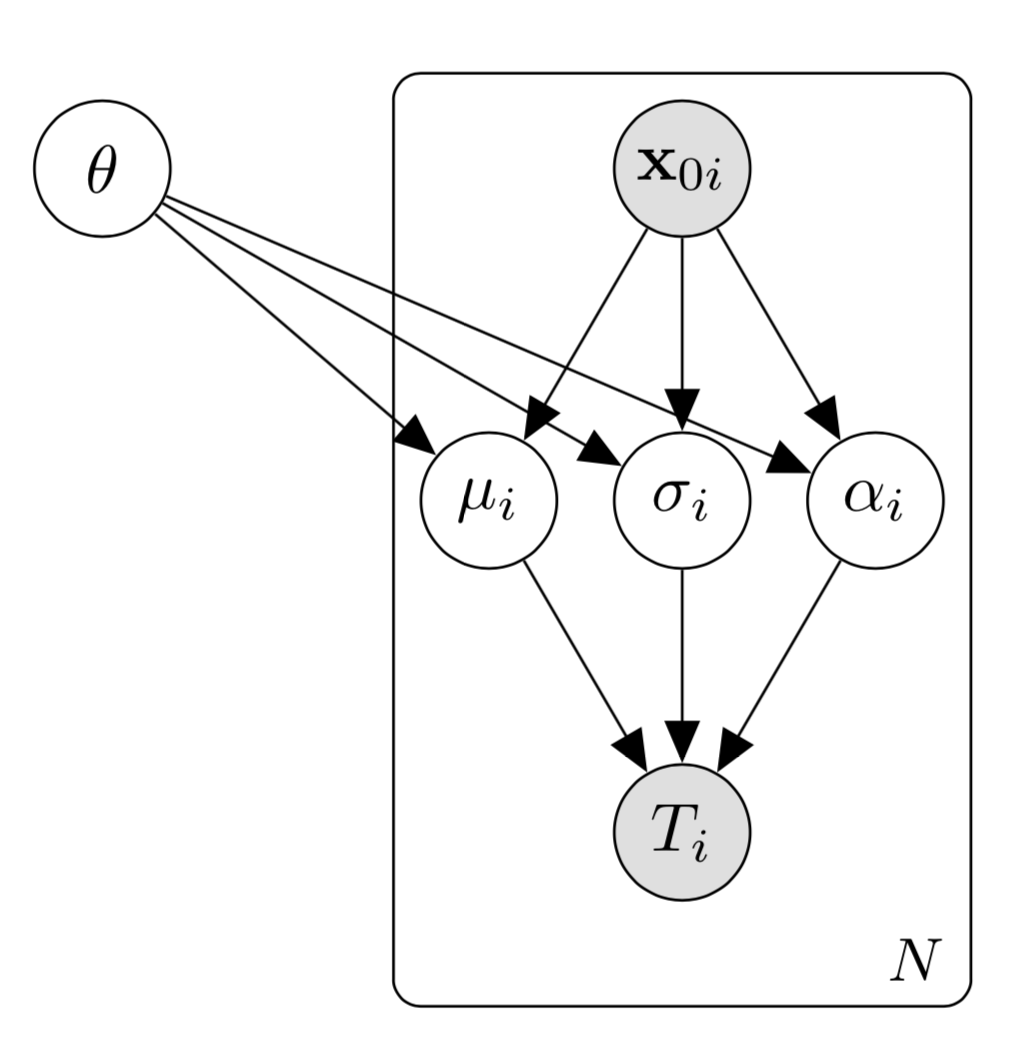

I made a tutorial on simulation-based/likelihood-free inference for scientists using PyTorch-based "sbi"! + colab notebook. Thanks for putting together this awesome set of libraries, @jakhmack, @deismic_, @janmatthis, @conormdurkan, @driainmurray, et al.

5

73

274

Made a functional SymPy->JAX converter equivalent :) Works with grad, vmap, jit, etc. PySR/SymbolicRegression.jl can automatically convert discovered expressions to vectorized JAX models now; will add PyTorch soon.

Put together a micro-library for turning SymPy expressions into PyTorch Modules. Symbols become inputs, and floats become trainable parameters. Train your SymPy expressions by gradient descent!

2

47

270

PyTorch Lightning’s greatest strength is that it implements a vast amount of deep learning tips and tricks which would typically take years to pick up. e.g., previously I'd never heard of gradient clipping. I turned it on and my model's NaNs vanished!

I have been playing around with @PyTorchLightnin and I am pleasantly surprised! Very good level of abstraction if you want full control over the model & some production-level tools, e.g., many loggers and quick debug iterations. Kudos to the team! Looking forward to 1.0. 💪

5

34

238
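Gradient clipping, the trick credited above with making the NaNs vanish, is simple enough to sketch in plain Python. The helper below is a hypothetical illustration of clipping by global L2 norm, not PyTorch Lightning's implementation: if the gradient vector is longer than `max_norm`, it is rescaled to have exactly that length, so a single exploding gradient cannot produce an arbitrarily large update.

```python
import math


def clip_grad_norm(grads, max_norm):
    """Rescale `grads` so its L2 norm is at most `max_norm`.

    Returns (clipped_grads, original_norm). Gradients already within the
    limit are returned unchanged.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm > max_norm:
        scale = max_norm / total_norm
        grads = [g * scale for g in grads]
    return grads, total_norm


# A gradient of norm 5 is shrunk to norm 1; its direction is preserved.
clipped, norm_before = clip_grad_norm([3.0, 4.0], max_norm=1.0)
print(norm_before, clipped)
```

In PyTorch the equivalent built-in is `torch.nn.utils.clip_grad_norm_`, which operates in place on a model's parameter gradients.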

Happy to share I will be doing a research internship at @DeepMind from July-November with @PeterWBattaglia and @DaniloJRezende. Excited to work on some new approaches to AI for Physics!

8

5

237

Very cool paper from @EmtiyazKhan: Relatedly, here's a great blog post that helped me with intuition about natural gradients:

Our new paper on "The Bayesian Learning Rule" is now on arXiv, where we provide a common learning-principle behind a variety of learning algorithms (optimization, deep learning, and graphical models). Guess what, the principle is Bayesian. A very long🧵

6

45

234

Giving the Presidential Lecture tomorrow at @SimonsFdn @FlatironInst: "The Next Great Scientific Theory is Hiding Inside a Neural Network". Will be in NYC until the 10th – please get in touch if you would like to chat!

8

29

188

Happy to share that the "Multiple Physics Pretraining" paper won the Best Paper Award at the AI for Science NeurIPS workshop! Congratulations to @mikemccabe210, @liamhparker, @BrunoRegaldo @oharub for leading the effort, and everybody in the @PolymathicAI team!

Honored to receive best paper for MPP at the @AI_for_Science @NeurIPSConf workshop with my teammates @PolymathicAI! Thanks to everyone who stopped by our poster for the great discussions and to the organizers for running such an interesting workshop! #AI4Science #NeurIPS2023

2

21

183

Words cannot express the perfection of @TuringLang for probabilistic inference. It's somehow both intuitive and concise without sacrificing any expressiveness. (Also blazingly fast, of course). Doing my first real project with it and having a blast.

4

19

183

1/10 This was a phenomenal discussion. I have many more questions than answers now but I think that's a good thing. Here's a list of some interesting papers mentioned.

So 1) Lagrangian/Hamiltonian NNs enforce time symmetry, 2) Graph Nets enforce translational symmetry, and 3) Group-CNNs enforce rotational symmetry. But are there any NNs that can enforce an arbitrary learned symmetry? @wellingmax @DaniloJRezende @KyleCranmer?

2

44

171

Are you a PhD student who is (1) interested in working on foundation models for science, and (2) experienced with deep learning software? There is a 1-year internship at Flatiron Institute (NYC) to work on @PolymathicAI! (deadline: Nov 30!)

2

35

167

Very excited to start teaching my deep learning course at Cambridge this week, as part of our Data Intensive Science MPhil! Teaching the first part from @SimonPrinceAI's "Understanding Deep Learning" book, which has quickly become one of my favorite textbooks in *any* field.

9

14

164

I am entering the faculty job market for 2023! Very eager to find a position at the intersection of astro/physics and machine learning/data science. If you happen to see something relevant, please forward to mcranmer@princeton.edu - thanks!

5

9

160

PyTorch-style deep learning in Julia! As a long-term PyTorch user I am really happy to see this is possible in @FluxML. The key advantage is that Julia *itself* is autodiff-ready, so you can compute gradients through a complex library without needing a rewrite in a DL framework.

3

17

155

It's amazing how Enzyme is this much faster than JAX for even simple operations! (Am I doing something wrong, or is differentiating through optimized assembly code really that much faster??)

The idea behind Enzyme differentiation is so cool. It literally performs autodiff through optimized assembly code*, which gives faster derivatives! Q: Would this let you differentiate in-place array operations? *(LLVM IR, not machine code)

10

17

154

Okay, here is a function for doing this (modulo shading) in LaTeX, without external illustration tools: This is what $$\labmat{2}{3}{X} \cdot \exp(\labmat{3}{2}{Y})$$ looks like: Thanks @AgolEric @rmpnegrinho for pointers!

That's a nice way of writing equations (I sometimes do this in lectures). From ICLR 2021 submission ("An attention free transformer"),

2

30

149

Happy to share our paper on AI for observational astronomy via our new resource allocation algorithm! "Unsupervised Resource Allocation with Graph Neural Networks". Blog/code: Paper: w/ @peter_melchior @iamstarnord. Thread 👇 1/n

5

24

143