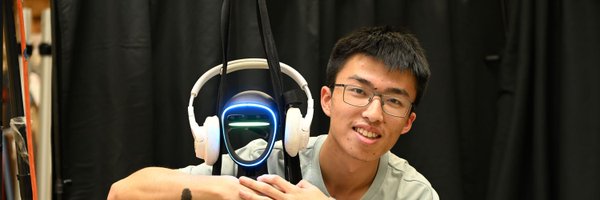

Haoyang Weng

@ElijahGalahad

Followers: 740 | Following: 646 | Media: 33 | Statuses: 193

Undergraduate @Tsinghua_IIIS | Intern @LeCARLab | Machine learning for robotics | Applying for PhD, fall 2026

Joined December 2021

We present HDMI, a simple and general framework for learning whole-body interaction skills directly from human videos — no manual reward engineering, no task-specific pipelines. 🤖 67 door traversals, 6 real-world tasks, 14 in simulation. 🔗 https://t.co/ll44sWTZF4

24

148

745

Wow, zero gap visually?!

MimicKit now supports #IsaacLab! After many years with IsaacGym, it's time to upgrade. MimicKit has a simple Engine API that allows you to easily swap between different simulator backends. Which simulator would you like to see next?

1

0

7

@ElijahGalahad please consider my manifold optimizations. I have recreated your spiral experiment with a 1,000,000-point cloud and made models that use geodesic topology instead of traditional 2D AI strategies https://t.co/2RFOpmZeeW

1

1

1

Loss type isn’t the key variable, parameterization is. With the same prediction space, v-, x-, and ε-losses merely reduce to different t-weightings. So the conclusion carries over to all loss types. Check out https://t.co/ohTZAoFlhr built on top of the amazing @ZhiSu22.

github.com

Unofficial implementation of the toy example in JiT https://arxiv.org/abs/2511.13720 - EGalahad/jit_toy_example

1

0

13
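The claim that the three losses differ only by a t-weighting can be checked with a short derivation. The sketch below assumes a linear interpolation z_t = t x + (1 - t) ε with velocity v = x - ε and a network that outputs x̂; this convention is an assumption for illustration and may differ from the one used in the linked repository.

```latex
% Assumed convention (not confirmed by the post):
%   z_t = t x + (1 - t) \epsilon, \quad v = x - \epsilon, \quad 0 \le t < 1
% With the prediction space fixed to \hat{x}, the other quantities are derived:
\begin{aligned}
\hat{\epsilon} &= \frac{z_t - t\,\hat{x}}{1 - t}, &
\hat{v} &= \frac{\hat{x} - z_t}{1 - t}, \\
\|\hat{\epsilon} - \epsilon\|^2 &= \frac{t^2}{(1 - t)^2}\,\|\hat{x} - x\|^2, &
\|\hat{v} - v\|^2 &= \frac{1}{(1 - t)^2}\,\|\hat{x} - x\|^2 .
\end{aligned}
```

Under these assumptions the ε-loss and v-loss are the x-loss multiplied by a factor that depends only on t.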

Residual parameterizations can 𝐜𝐡𝐚𝐧𝐠𝐞 𝐭𝐡𝐞 𝐞𝐟𝐟𝐞𝐜𝐭𝐢𝐯𝐞 𝐩𝐫𝐞𝐝𝐢𝐜𝐭𝐢𝐨𝐧 𝐭𝐚𝐫𝐠𝐞𝐭. They determine whether the model must carry high-dimensional noise through the network, or whether it can operate purely on the low-dimensional data manifold.

3

0

8

You cannot discuss optimization without considering architecture. Parameterization changes everything: same objective can behave very differently. With a “clever” residual, ε -prediction can match x -prediction by reparameterizing the output head. https://t.co/lGJ8aqECS3

@YouJiacheng Yeah, but I think the point is that you want the network to operate in a low-dim space like the manifold, instead of a high-dim space like the input. Learning an identity means carrying the input all along the network. That is inefficient and redundant given the discrepancy between input and manifold dimension.

1

1

11
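One possible reading of the "clever residual" idea above is an output head that forms the ε-prediction from the noisy input and a low-dimensional x̂ estimate, so the network body never has to carry the noise itself. The sketch below is an illustration under assumptions, not the author's implementation: it assumes z_t = t x + (1 - t) ε, and `eps_from_x_head` is a hypothetical helper name. A noisy copy of x stands in for the network output.

```python
import numpy as np

def eps_from_x_head(z_t, x_hat, t):
    """Hypothetical output head: turn an x-estimate into an eps-estimate.

    Assumes z_t = t * x + (1 - t) * eps, so eps = (z_t - t * x) / (1 - t).
    The noisy input z_t enters only here, as a residual connection.
    """
    return (z_t - t * x_hat) / (1.0 - t)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 16))      # clean data
eps = rng.normal(size=(4, 16))    # noise
t = 0.3
z_t = t * x + (1 - t) * eps       # noisy input under the assumed convention

# Stand-in for a network's x-prediction (true x plus a small error).
x_hat = x + 0.1 * rng.normal(size=x.shape)
eps_hat = eps_from_x_head(z_t, x_hat, t)

x_loss = np.mean((x_hat - x) ** 2)
eps_loss = np.mean((eps_hat - eps) ** 2)
ratio = eps_loss / x_loss         # equals (t / (1 - t)) ** 2 for this head
```

With this head, the ε-loss is the x-loss scaled by a factor that depends only on t, which is consistent with the statement that the two targets can match once the output is reparameterized.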

Many say ε-prediction and x-prediction are just reparameterizations and should behave the same. Actually… 𝐢𝐭 𝐝𝐨𝐞𝐬 𝐚𝐧𝐝 𝐢𝐭 𝐝𝐨𝐞𝐬𝐧’𝐭. In my extended toy experiment: • Vanilla MLP → x wins • Well-parameterized network → ε works fine as well

9

23

180

You're my spiritual leader.

Zero teleoperation. Zero real-world data. ➔ Autonomous humanoid loco-manipulation in reality. Introducing VIRAL: Visual Sim-to-Real at Scale. We achieved 54 autonomous cycles (walk, stand, place, pick, turn) using a simple recipe: 1. RL 2. Simulation 3. GPUs Website:

1

0

7

Impressive long horizon, whole-body, generalizable dexterity! Congrats @sundayrobotics Curious about: 1. how costly is this map building process? 2. is the visual alignment done with a diffusion model or retargeting -> rendering -> inpainting stuff?

Today, we present a step-change in robotic AI @sundayrobotics. Introducing ACT-1: A frontier robot foundation model trained on zero robot data. - Ultra long-horizon tasks - Zero-shot generalization - Advanced dexterity 🧵->

1

0

48

Introducing Gallant: Voxel Grid-based Humanoid Locomotion and Local-navigation across 3D Constrained Terrains 🤖 Project page: https://t.co/eC1ftH5ozx Arxiv: https://t.co/5K9sXDNQWv Gallant is, to our knowledge, the first system to run a single policy that handles full-space

1

33

186

A lot of people using the HDMI codebase have reported that it trains really fast, e.g. the suitcase motion in under one hour. These techniques are minimal but essential for its efficiency. A figure in the paper will never be as intuitive as these videos. https://t.co/D3dzfIDswt

github.com

Contribute to LeCAR-Lab/HDMI development by creating an account on GitHub.

0

0

0

This is a #freelunch if you use teacher-student training: just train the teacher with residual actions and behavior-clone a student without them.

1

0

0
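One way to picture the teacher-student step above: the teacher acts in the residual space (offset from the reference motion), while the student acts in the usual default-pose space, so the student's behavior-cloning label is whichever action reproduces the same joint target. This is a minimal sketch under that assumption; `student_action_label` and the example values are hypothetical and not taken from the HDMI codebase.

```python
import numpy as np

def student_action_label(motion_jpos, teacher_action, default_jpos):
    """Hypothetical conversion of a residual teacher action into a student label.

    Teacher target:  jpos_target = motion_jpos + teacher_action
    Student target:  jpos_target = default_jpos + student_action
    Solving for the student action that yields the same joint target.
    """
    jpos_target = motion_jpos + teacher_action
    return jpos_target - default_jpos

# Illustrative values only (radians), not from any real robot config.
default_jpos = np.array([0.0, 0.4, -0.8])
motion_jpos = np.array([0.2, 2.0, -1.5])
teacher_action = np.array([0.05, -0.1, 0.0])

label = student_action_label(motion_jpos, teacher_action, default_jpos)
```

The student then needs no reference-pose offset at deployment, because the label already folds it into the action.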

As these clips show, using residual actions (left), the policy explores 𝗹𝗼𝗰𝗮𝗹𝗹𝘆 𝗮𝗿𝗼𝘂𝗻𝗱 𝘁𝗵𝗲 𝗿𝗲𝗳𝗲𝗿𝗲𝗻𝗰𝗲. Without them (right), an episode initialized from kneeling will 𝗮𝗯𝗿𝘂𝗽𝘁𝗹𝘆 𝗽𝗼𝗽 𝘂𝗽, generating low-quality training samples.

1

0

0

#freelunch series 1: Residual Action Space for motion tracking Use 𝚓𝚙𝚘𝚜_𝚝𝚊𝚛𝚐𝚎𝚝 = 𝚖𝚘𝚝𝚒𝚘𝚗_𝚓𝚙𝚘𝚜 + 𝚊𝚌𝚝𝚒𝚘𝚗 instead of 𝚍𝚎𝚏𝚊𝚞𝚕𝚝_𝚓𝚙𝚘𝚜 + 𝚊𝚌𝚝𝚒𝚘𝚗 for exploration. This is especially useful for motion far from the default pose, e.g. kneeling.

1

1

6
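The two action spaces from the #freelunch post can be compared directly. The sketch below follows the formulas in the post (`jpos_target = motion_jpos + action` versus `default_jpos + action`); the function names and joint values are hypothetical and chosen only to mimic a kneeling reference far from the default pose.

```python
import numpy as np

def jpos_target_default(default_jpos, action):
    """Conventional action space: offsets are applied to the default pose."""
    return default_jpos + action

def jpos_target_residual(motion_jpos, action):
    """Residual action space: offsets are applied to the reference motion."""
    return motion_jpos + action

# Illustrative values only (radians): a standing default pose and a
# kneeling reference frame that is far from it.
default_jpos = np.array([0.0, 0.4, -0.8])
motion_jpos = np.array([0.2, 2.0, -1.5])

# Early in training the policy output is close to zero.
zero_action = np.zeros(3)

default_target = jpos_target_default(default_jpos, zero_action)
residual_target = jpos_target_residual(motion_jpos, zero_action)

# Largest per-joint distance between each target and the reference pose.
default_gap = np.abs(default_target - motion_jpos).max()
residual_gap = np.abs(residual_target - motion_jpos).max()
```

With a near-zero action, the residual target sits on the reference pose, while the default-pose target is pulled toward standing, which matches the "pop up" behavior described in the thread.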

I believe @physical_int chose these 3 demos on purpose to show everyone they are capable of all the iconic demos that other startups do: making coffee -> @sundayrobotics, folding laundry -> @DynaRobotics, building boxes -> @GeneralistAI. Now the burden’s on the rest.

11

10

177

Imagine moving a heavy object with a joystick—through a swarm of quadruped-arm robots. 🕹️ decPLM: decentralized RL for multi-robot pinch-lift-move. • No comms or rigid links • Hierarchical RL + constellation reward • 2→ N robots, sim→real 🔗 https://t.co/BPwqHV0ngE

15

110

610

Universal retargeting for dexterous hands, humanoid, grounded in physics!

🕸️ Introducing SPIDER — Scalable Physics-Informed Dexterous Retargeting! A dynamically feasible, cross-embodiment retargeting framework for BOTH humanoids 🤖 and dexterous hands ✋. From human motion → sim → real robots, at scale. 🔗 Website: https://t.co/ieZfG2Q4L0 🧵 1/n

1

2

31

'Progress in robotics often feels slow day to day, but zoom out — and it’s staggering.'

Jan 2024: one humanoid stood up in a CMU lab. 20 months later: a day in the life of a humanoid at NVIDIA. Neither @zhengyiluo nor I could’ve imagined where this journey would lead — but what a ride it’s been. Progress in robotics often feels slow day to day, but zoom out — and

1

0

8

Jan 2024: one humanoid stood up in a CMU lab. 20 months later: a day in the life of a humanoid at NVIDIA. Neither @zhengyiluo nor I could’ve imagined where this journey would lead — but what a ride it’s been. Progress in robotics often feels slow day to day, but zoom out — and

How do you give a humanoid the general motion capability? Not just single motions, but all motion? Introducing SONIC, our new work on supersizing motion tracking for natural humanoid control. We argue that motion tracking is the scalable foundation task for humanoids. So we

2

14

121

When @TairanHe99 accepted the PhD offer and I decided to work on humanoids in Aug 2023, I told him: learning-based humanoid whole-body control is one of the hardest control problems — “naive” sim2real just won’t work — you could spend your whole PhD on it. Yet @TairanHe99

Jan 2024: one humanoid stood up in a CMU lab. 20 months later: a day in the life of a humanoid at NVIDIA. Neither @zhengyiluo nor I could’ve imagined where this journey would lead — but what a ride it’s been. Progress in robotics often feels slow day to day, but zoom out — and

1

8

103