Andreas Tolias Lab @ Stanford University

@AToliasLab

Followers: 5K

Following: 4K

Media: 60

Statuses: 1K

to understand intelligence and develop technologies by combining neuroscience and AI

Palo Alto, CA

Joined May 2017

Andrej Karpathy literally shows how to build apps by prompting in 30 mins https://t.co/rkJOOraznO

@GoogleDeepMind has led the way in applying AI to science. Great to see the White House’s new Genesis Mission & excited to be collaborating with @ENERGY by giving National Lab scientists accelerated access to our frontier AI models & agentic tools, starting with AI co-scientist.

AI has the potential to compress the time needed for new discoveries from years to days. 📉 That’s why we’re supporting the US Dept. of @ENERGY's Genesis Mission – providing National Labs with access to AI tools to help accelerate research in physics, chemistry, and beyond. →

This paper from Harvard and MIT quietly answers the most important AI question nobody benchmarks properly: Can LLMs actually discover science, or are they just good at talking about it? The paper is called “Evaluating Large Language Models in Scientific Discovery”, and instead

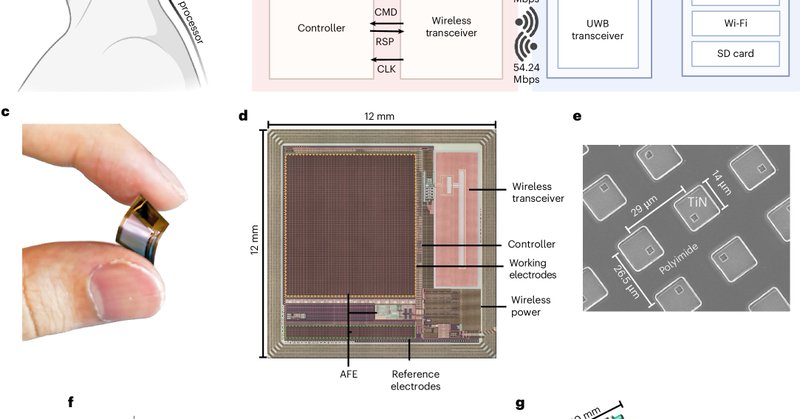

In Nature Electronics, we present BISC with Ken Shepard, @peabody124 & others: an ultrathin brain–computer interface chip with 65,536 electrodes, 1,024 channels, and ~100× higher bandwidth at 100 Mbit/s. A bidirectional platform toward future neocortex–AI 'exocortex' systems.

nature.com

Nature Electronics - A flexible micro-electrocorticography brain–computer interface that integrates a 256 × 256 array of electrodes, signal processing, data telemetry and...

I’ll be talking about this work at #NeurIPS2025 today at 3pm! Stop by Ballroom 6A if you’re interested; happy to discuss more during the poster session.

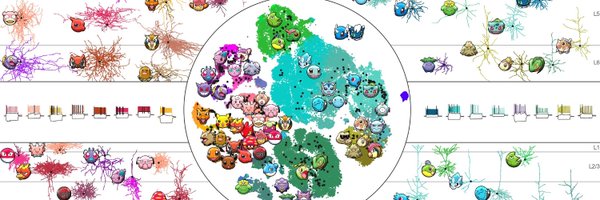

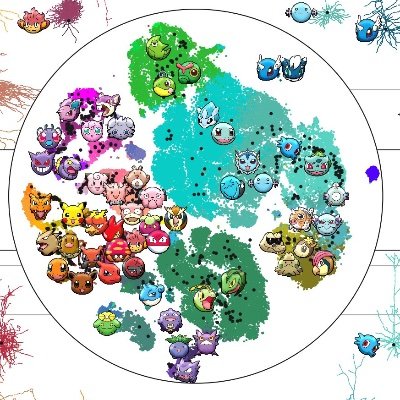

1/9 How does the brain encode what we see? 👁️ We introduce "dual feature selectivity" – many 🐒 🐭 cortical neurons encode a continuum b/w activating & suppressing features Blog✨ https://t.co/b4LCq8mg8N 🙏 co-lead Nikos Karantzas & @AToliasLab @naturecomputes @sinzlab & more

LAST CALL: Only a couple of days left to register for the Montreal AI & Neuroscience MAIN 2025 meeting, Dec 12-13 @ HEC Montreal! Don’t miss it! Register today! 👉 https://t.co/NvbBgVF8xJ

#Neuroscience #AI #MAIN2025

🚀Excited to be at #NeurIPS2025 🧠Working on NeuroAI—using AI to understand the brain 🎤Talk Sunday at @neur_reps on interpreting the neural code Share your work & reach out if interested in👇 🔬Enigma Project @Stanford w/ @AToliasLab & @naturecomputes

Great article from @jeremyakahn in Fortune on the huge impact of AlphaFold on biological and biomedical research.

⏰ Less than one week to the submission deadline for the AAAI 2026 Workshop on Neuro for AI & AI for Neuro. Abstracts (2pg) and papers (8pg) due October 30th AOE. Submission instructions here: https://t.co/vAdA8JYR3A We'd love to see your work!

GitLab 18.5 is one of our biggest releases ever! It comes with an AI agent catalog that allows you to use Claude, OpenAI Codex, Google Gemini CLI, Amazon Q Developer, and OpenCode, as well as GitLab native agents, for example, the ones for security testing.

GitLab 18.5 is live! 🚀 57 improvements across the platform, powered by 278 community contributions. From AI agents to security enhancements, this release has something for every team. New in GitLab 18.5: 🤖 AI agents that understand your workflow 🔒 Security tools that

We’re helping identify cancer cells that hide from the body’s immune system. 🧬 Built on our Gemma family of open models, C2S-Scale 27B has identified a new potential pathway for cancer therapy - a hypothesis we validated in the lab with scientists at @Yale University. 🧵

The return of the physicists: "CMT-Benchmark: A benchmark for condensed matter theory built by expert researchers." https://t.co/xMtJprbF9S A set of hard physics problems few AIs can solve. Avg performance across 17 models is 11%. Problems range across topics like: Hartree-Fock

There used to be a fountain at the center of the Civic Center Plaza in SF. It was removed in 2003 because: "It's become an intolerable situation, and we don't have additional resources to continually clean it," said Alex Mamak, a spokesman for the Department of Public Works.

Andrej Karpathy calls AI Agents slop "Overall, the models they are not there. And I feel like the industry [...] it's making too big of a jump and it's trying to pretend that this is amazing. And it's not—it's slop! And I think they are not coming to terms with it. And maybe

Announcing the AAAI 2026 Workshop on Neuro for AI & AI for Neuro. Uniting researchers in AI, comp neuro, and neuromorphic engineering to explore dialogue between biology and machine learning. The call for papers is out. Deadline: October 30. Submission link below 👇

Thank you to everyone who joined the Neurotechnology Workshop – BCI, WBE & AGI with us, this weekend! @eboyden3, @patrickmineault, @Andrew_C_Payne, @mardinly, @michaelandregg, @stardazed0, @EscolaSean70058, @JacquesCarolan, @halehf, @AToliasLab, @BobbyKasthuri, @gkreiman,

Work with me to transform how 10m+ children learn worldwide! UNICEF Learning Passport is a global digital education platform already reaching more than 10 million children. They are expanding rapidly toward a 50 million goal by 2030. We’re looking for a Founding Engineer at the

In NeuroAI there's been an assumption of a tradeoff between strictness and predictivity for brain-model mappings, with stricter methods better able to identify true mechanisms, and maximizing predictivity requiring too much flexibility. We show this is false. Basically, it

1/x Our new method, the Inter-Animal Transform Class (IATC), is a principled way to compare neural network models to the brain. It's the first to ensure both accurate brain activity predictions and specific identification of neural mechanisms. Preprint: https://t.co/hPqo5PrZoc

An exciting breakthrough for Huntington’s patients. The AAV5-miRNA requires intraparenchymal injection under MRI, which will limit broad utility, but this is an important day for patients and their families. Congratulations to Uniqure scientists.

🚨 The first disease-modifying therapy for Huntington's disease was just announced this morning. 75% reduction in symptoms over 36 months. This isn't hype. It's field-changing. And it uses RNA. Here's what you need to know about $QURE AMT-130 🧵
