Dayeon (Zoey) Ki

@zoeykii

Followers

357

Following

423

Media

20

Statuses

140

CS PhD @umdclip | MT, Multilingual, Cultural #NLProc | 🇰🇷🇨🇳🇨🇿🇺🇸

College Park, Maryland

Joined August 2022

🚀New dataset release: WildChat-4.8M 4.8M real user-ChatGPT conversations collected from our public chatbots: - 122K from reasoning models (o1-preview, o1-mini): represent real uses in the wild and very costly to collect - 2.5M from GPT-4o 🔗 https://t.co/gvBPEo4hqg (1/4)

huggingface.co

Thrilled to see WildChat featured by @_akhaliq, just as predicted by AKSelectionPredictor!😊 Explore 1 million user-ChatGPT conversations, plus details like country, state, timestamp, hashed IP, and request headers here: https://t.co/TW3vgk5jJ7

5

51

256

AI shows ingroup bias towards AI content! If we deploy LLMs in decision-making roles (e.g., purchasing goods, selecting academic submissions) they will favor LLM agents over ordinary humans https://t.co/M3ypv5gxlB

7

65

200

Today, we're releasing The Circuit Analysis Research Landscape: an interpretability post extending & open sourcing Anthropic's circuit tracing work, co-authored by @Anthropic, @GoogleDeepMind, @GoodfireAI @AiEleuther, and @decode_research. Here's a quick demo, details follow: ⤵️

7

66

332

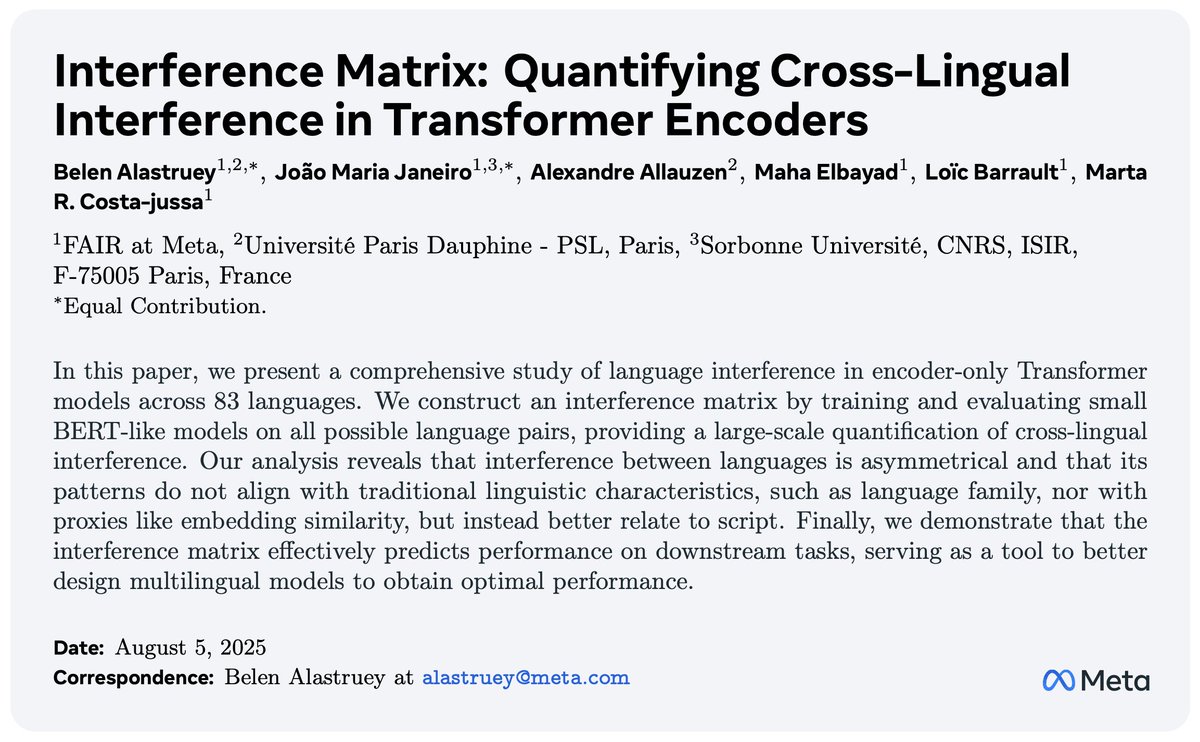

🚀New paper alert! 🚀 In our work @AIatMeta we dive into the struggles of mixing languages in largely multilingual Transformer encoders and use the analysis as a tool to better design multilingual models to obtain optimal performance. 📄: https://t.co/3qxUWDkoN5 🧵(1/n)

1

16

73

I'll also be presenting our paper on using question-answer pairs as a new signal for spotting translation errors 🕵️ Come to talk more about MT evaluation! 📍Poster session (Hall X4, X5) 📆Tuesday (7/29) 4-5:30pm 📝 https://t.co/vRUxI3rchW

aclanthology.org

Dayeon Ki, Kevin Duh, Marine Carpuat. Findings of the Association for Computational Linguistics: ACL 2025. 2025.

1/ How can a monolingual English speaker 🇺🇸 decide if a French translation 🇫🇷 is good enough to be shared? Introducing ❓AskQE❓, an #LLM-based Question Generation + Answering framework that detects critical MT errors and provides actionable feedback 🗣️ #ACL2025

1

5

35

I'm at #ACL2025 presenting our work on enhancing equitable cultural alignment through multi-agent debate ✨ Come visit our oral presentation! 📍Computational Social Science and Cultural Analytics session (Level 1 1.85) 📆Tuesday (7/29) 2-3:30pm 📝 https://t.co/1a3dVNb9pP

aclanthology.org

Dayeon Ki, Rachel Rudinger, Tianyi Zhou, Marine Carpuat. Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 2025.

1/ Are two #LLMs better than one for equitable cultural alignment? 🌍 We introduce a Multi-Agent Debate framework — where two LLM agents debate the cultural adaptability of a given scenario. #ACL2025 🧵👇

0

4

42

I will be presenting our work 𝗠𝗗𝗖𝘂𝗿𝗲 at #ACL2025NLP in Vienna this week! 🇦🇹 Come by if you’re interested in multi-doc reasoning and/or scalable creation of high-quality post-training data! 📍 Poster Session 4 @ Hall 4/5 🗓️ Wed, July 30 | 11-12:30 🔗

aclanthology.org

Gabrielle Kaili-May Liu, Bowen Shi, Avi Caciularu, Idan Szpektor, Arman Cohan. Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 2025.

🔥Thrilled to introduce MDCure: A Scalable Pipeline for Multi-Document Instruction-Following 🔥 How can we systematically and scalably improve LLMs' ability to handle complex multi-document tasks? Check out our new preprint to find out! Details in 🧵 (1/n):

1

4

26

Maybe don't use an LLM for _everything_? Last summer, I got to fiddle again with content diversity @AdobeResearch @Adobe and we showed that agentic pipelines that mix LLM-prompt steps with principled techniques can yield better, more personalized summaries

1

13

62

I'm excited to announce that my nonfiction book, "Lost in Automatic Translation: Navigating Life in English in the Age of Language Technologies", will be published this summer by Cambridge University Press. I can't wait to share it with you! 📖🤖 https://t.co/AchyRiseGN

9

27

166

(Repost due to mistaken deletion😢): Evaluating topic models (& doc clustering methods) is hard. In fact, since our paper critiquing standard eval practices 4 years ago, there hasn't been a good replacement metric That ends today! Our ACL paper introduces a new evaluation🧵

How do standard metrics work? Automated coherence computes how often the top n words in a topic appear together in some reference text (eg, Wikipedia) This fails to consider which *documents* are associated with each topic, and so doesn't transfer well to text clustering methods

0

5

33

You have a budget to human-evaluate 100 inputs to your models, but your dataset is 10,000 inputs. Do not just pick 100 randomly!🙅 We can do better. "How to Select Datapoints for Efficient Human Evaluation of NLG Models?" shows how.🕵️ (random is still a devilishly good baseline)

2

14

73

📣Thrilled to announce the drop of EXAONE 4.0, the next-generation hybrid AI. 🙌Prepare to be amazed by EXAONE’s capabilities. #EXAONE #LG_AI_Resrarch #HybridAI #AI

https://t.co/rOym0eio7J

lgresearch.ai

9

29

74

CLIPPER has been accepted to #COLM2025! In this work, we introduce a compression-based pipeline to generate synthetic data for long-context narrative reasoning tasks. Excited to be in Montreal this October🍁

⚠️ Current methods for generating instruction-following data fall short for long-range reasoning tasks like narrative claim verification. We present CLIPPER✂️, a compression-based pipeline that produces grounded instructions for ~$0.5 each, 34x cheaper than human annotations.

3

9

71

📢When LLMs solve tasks with a mid-to-low resource input/target language, their output quality is poor. We know that. But can we pin down what breaks inside the LLM? We introduce the 💥translation barrier hypothesis💥 for failed multilingual generation. https://t.co/VnrOWdNPr8

2

12

44

Why should you attend this talk? 🤔 A. Nishant put so much effort B. Learn the real limitations of MCQA C. Great takeaways for building better benchmarks D. All of the above ✔️

Our position paper was selected for an oral at #ACL2025! Definitely attend if you want to hear spicy takes on why MCQA benchmarks suck and how education researchers can teach us to solve these problems 👀

2

1

16

Super grateful to share that our work has been accepted as #ACL2025 oral presentation 🍀✨ See you in Vienna! 🇦🇹

1/ Are two #LLMs better than one for equitable cultural alignment? 🌍 We introduce a Multi-Agent Debate framework — where two LLM agents debate the cultural adaptability of a given scenario. #ACL2025 🧵👇

1

8

26

🚀 Tower+: our latest model in the Tower family — sets a new standard for open-weight multilingual models! We show how to go beyond sentence-level translation, striking a balance between translation quality and general multilingual capabilities. 1/5 https://t.co/WKQapk31c0

1

8

25

8/ 💌 Huge thanks to @MarineCarpuat, @rachelrudinger, and @zhoutianyi for their guidance — and special shoutout to the amazing @umdclip team! Check out our paper and code below 🚀 📄 Paper: https://t.co/Di5xgRewfW 🤖 Dataset:

arxiv.org

Large Language Models (LLMs) need to adapt their predictions to diverse cultural contexts to benefit diverse communities across the world. While previous efforts have focused on single-LLM,...

0

1

8

7/ 🌟 What’s next for Multi-Agent Debate? Some exciting future directions: 1️⃣ Assigning specific roles to represent cultural perspectives 2️⃣ Discovering optimal strategies for multi-LLM collaboration 3️⃣ Designing better adjudication methods to resolve disagreements fairly 🤝

1

0

3