Goodfire

@GoodfireAI

Followers: 11K
Following: 801
Media: 104
Statuses: 341

Advancing humanity's understanding of AI through interpretability research. Building the future of safe and powerful AI systems.

San Francisco

Joined August 2024

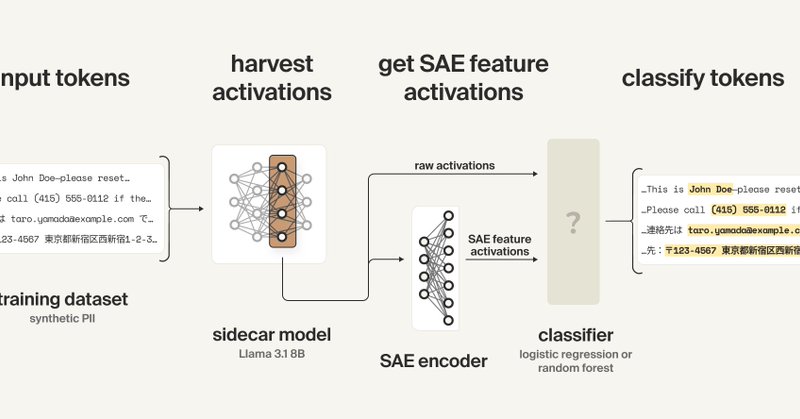

Today, we're announcing our $50M Series A and sharing a preview of Ember - a universal neural programming platform that gives direct, programmable access to any AI model's internal thoughts.

Check out Atticus Geiger's Stanford guest lecture on causal approaches to interpretability for an overview of one of our areas of research!
01:51 - Activation steering (e.g. Golden Gate Claude)
10:23 - Causal mediation analysis (understanding the contribution of an

We believe some high-priority directions for interp research are neglected by existing educational resources - so we've made some to get the community up to speed. If you're interested in AI but not caught up on interp, stay tuned for our Stanford guest lectures + reading lists!
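The lecture outline above lists causal mediation analysis, which asks how much of an output change flows through a particular internal component. A minimal toy sketch of the generic idea (activation patching), assuming a hypothetical two-layer network with made-up weights that has nothing to do with the lecture's own examples: run a clean and a corrupted input, restore one hidden unit to its clean value inside the corrupted run, and read off that unit's indirect effect.

```python
import numpy as np

# Hypothetical toy network: 3 inputs -> 4 tanh hidden units -> 1 output.
W1 = np.array([[0.5, -1.0, 0.2],
               [1.5, 0.3, -0.7],
               [-0.4, 0.8, 1.1],
               [0.9, -0.2, 0.6]])
W2 = np.array([1.0, -2.0, 0.5, 1.5])

def hidden(x):
    return np.tanh(W1 @ x)

def forward(x, patch=None):
    """Run the toy model; `patch` optionally maps hidden-unit index -> forced value."""
    h = hidden(x)
    if patch:
        h = h.copy()
        for idx, value in patch.items():
            h[idx] = value
    return float(W2 @ h)

def indirect_effect(clean_x, corrupt_x, unit):
    """Output change when only `unit` is restored to its clean activation."""
    h_clean = hidden(clean_x)
    return forward(corrupt_x, patch={unit: h_clean[unit]}) - forward(corrupt_x)

clean = np.array([1.0, 0.0, -1.0])
corrupt = np.array([0.0, 1.0, -1.0])
for unit in range(4):
    print(unit, round(indirect_effect(clean, corrupt, unit), 4))
```

Because this toy output is linear in the hidden layer, the per-unit indirect effects sum exactly to the total effect; in a real transformer, nonlinear downstream layers generally break that additivity.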

Topics covered include:
- causal approaches to interpretability
- computational motifs (e.g. induction heads)
- levels of analysis & a neuro-inspired "model systems approach"
- dynamics of representations across the sequence dimension

This opens up a new "surface of attack" for interpretability - information about the geometry of model representations that previous methods largely neglected. That information is crucial for faithfully understanding & manipulating models! See Ekdeep's thread above for more.

SAEs assume that model representations are static. But LLM features drift & evolve across context! @EkdeepL @can_rager @sumedh_hrs's new paper introduces a neuroscience-inspired method to capture these dynamic representations, plus some cool results & demos:

New paper! Language has rich, multiscale temporal structure, but sparse autoencoders assume features are *static* directions in activations. To address this, we propose Temporal Feature Analysis: a predictive coding protocol that models dynamics in LLM activations! (1/14)
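Predictive coding, in its generic form, splits each activation into the part predictable from earlier context and a residual "surprise". A minimal sketch of that generic predict-then-residual idea, assuming a linear autoregressive predictor fit by ridge regression over a hypothetical (T, d) array of activations; this is not the paper's Temporal Feature Analysis protocol, and `predictive_split`, `lag`, and `ridge` are illustrative names.

```python
import numpy as np

def predictive_split(acts, lag=1, ridge=1e-3):
    """Split activations into a context-predicted part and a residual.

    acts: (T, d) activations for one sequence (hypothetical input).
    Fits x_t ~ [x_{t-1}, ..., x_{t-lag}] @ W by ridge regression and returns
    (predicted, residual), each of shape (T - lag, d).
    """
    T, d = acts.shape
    # Row i of `past` stacks the `lag` activations preceding position i + lag.
    past = np.hstack([acts[lag - k - 1 : T - k - 1] for k in range(lag)])
    target = acts[lag:]
    # Closed-form ridge solution: (P^T P + ridge * I)^{-1} P^T Y.
    gram = past.T @ past + ridge * np.eye(past.shape[1])
    W = np.linalg.solve(gram, past.T @ target)
    predicted = past @ W
    return predicted, target - predicted
```

On a sequence that evolves by a fixed linear map (e.g. a 2-D rotation), the residual is near zero; tokens where the residual is large are the ones the past context failed to anticipate.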

I’m hosting a women in AI happy hour at NeurIPS next month with @GoodfireAI ✨ Come meet other women working on frontier research across industry labs (OpenAI, Inception) and academia (Stanford) DM me if you’re interested in joining, or sign up below —

This explains many-shot jailbreaking: sufficient context will overcome even a strong prior, and we can predict exactly when that will happen! It also lets us:
- understand the additive effect of ICL & steering
- estimate a model's prior for any given persona (3/4)
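The "predict exactly when" claim can be illustrated with a toy log-odds model. A minimal sketch, assuming (a simplification for illustration, not the paper's exact parameterization) that each in-context example adds a fixed log-likelihood ratio toward the target persona and that steering adds a constant shift to the log-odds; the function names and numbers are hypothetical.

```python
import math

def posterior_log_odds(prior_log_odds, n_shots, evidence_per_shot, steering_shift=0.0):
    """Log-odds that the model is emulating the target persona.

    Toy assumption: ICL evidence and steering combine additively in log-odds.
    """
    return prior_log_odds + n_shots * evidence_per_shot + steering_shift

def persona_probability(log_odds):
    return 1.0 / (1.0 + math.exp(-log_odds))

def shots_to_flip(prior_log_odds, evidence_per_shot, steering_shift=0.0):
    """Smallest shot count at which the target persona becomes more likely than not.

    Assumes evidence_per_shot > 0.
    """
    needed = -(prior_log_odds + steering_shift)
    if needed < 0:
        return 0
    return math.floor(needed / evidence_per_shot) + 1

# Strong prior against the persona: log-odds -6, each shot adds 0.5.
print(shots_to_flip(-6.0, 0.5))                      # 13
print(shots_to_flip(-6.0, 0.5, steering_shift=2.0))  # 9
```

In this toy model, steering and extra shots trade off linearly: a steering shift of s saves s / evidence_per_shot shots.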

The paper formalizes a Bayesian framework for model control: altering a model's "beliefs" over which persona or data source it's emulating. Context (prompting) and internal representations (steering) offer dual mechanisms to alter those "beliefs". (2/4)

📝 New paper! Two strategies have emerged for controlling LLM behavior at inference time: in-context learning (ICL; i.e. prompting) and activation steering. We propose that both can be understood as altering model beliefs, formally in the sense of Bayesian belief updating. 1/9

New research: are prompting and activation steering just two sides of the same coin? @EricBigelow @danielwurgaft @EkdeepL and coauthors argue they are: ICL and steering have formally equivalent effects. (1/4)

These results start to answer questions about how, where, & how much memorization is stored in models, and its effects on different capabilities. It's also relevant to @karpathy's "cognitive core" (6/7)

The race for LLM "cognitive core" - a few billion param model that maximally sacrifices encyclopedic knowledge for capability. It lives always-on and by default on every computer as the kernel of LLM personal computing. Its features are slowly crystalizing:
- Natively multimodal

Performance on math benchmarks suffers because arithmetic abilities are hit by the memorization edit, even though reasoning remains intact. E.g. in this GSM8K example, the reasoning chain is identical post-edit but fails on the final arithmetic step. (5/7)

It also suggests a spectrum of tasks, from those that rely on narrow weight mechanisms to those that rely on generalizing ones. The model is even slightly better at some logical reasoning tasks post-edit! But math ends up closer to memorization than logic. Why? (4/7)

Applying the method significantly reduces verbatim recitation while keeping outputs coherent, without needing a targeted "forget set". The result holds across architectures & modalities (LLM & ViT)! (3/7)

The method is like PCA, but for loss curvature instead of variance: it decomposes weight matrices into components ordered by curvature, and removes the long tail of low-curvature ones. What's left are the weights that most affect loss across the training set. (2/7)

K-FAC is usually used as a second-order optimizer, but in this case it can help us define a weight decomposition ordered by loss curvature. We project out directions not in the top N eigenvectors of the Fisher information matrix, i.e. the outer products of eigenvectors from A and G.
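The curvature-ordered decomposition described above can be sketched for a single linear layer y = W x. A minimal NumPy sketch, assuming A is the input-activation covariance and G the output-gradient covariance (the standard K-FAC factors), that each component's curvature is the product of the corresponding eigenvalues of G and A, and that `keep` is a hypothetical name for N; this is an illustration, not the paper's code.

```python
import numpy as np

def kfac_curvature_edit(W, A, G, keep):
    """Keep only the `keep` highest-curvature K-FAC eigencomponents of W.

    W: (d_out, d_in) weight matrix of a linear layer y = W @ x.
    A: (d_in, d_in) covariance of layer inputs, E[x x^T].
    G: (d_out, d_out) covariance of output gradients, E[g g^T].
    Returns the edited weights and a boolean mask of retained components.
    """
    # Eigendecompose both K-FAC factors (symmetric PSD, so eigh applies).
    lam_A, U_A = np.linalg.eigh(A)
    lam_G, U_G = np.linalg.eigh(G)
    # Under the Kronecker approximation F ~ A (x) G, each Fisher eigenvector is
    # the rank-1 matrix U_G[:, i] U_A[:, j]^T with eigenvalue lam_G[i] * lam_A[j].
    curvature = np.outer(lam_G, lam_A)
    # Coordinates of W in that eigenbasis.
    coeffs = U_G.T @ W @ U_A
    # Mask keeping the `keep` largest-curvature components (flattened C-order).
    top = np.argsort(curvature, axis=None)[::-1][:keep]
    mask = np.zeros(curvature.size, dtype=bool)
    mask[top] = True
    mask = mask.reshape(curvature.shape)
    # Reconstruct W from the retained high-curvature components only.
    return U_G @ (coeffs * mask) @ U_A.T, mask
```

Like PCA truncation, keeping every component reproduces W exactly, and lowering `keep` removes the low-curvature tail first.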

LLMs memorize a lot of training data, but memorization is poorly understood. Where does it live inside models? How is it stored? How much is it involved in different tasks? @jack_merullo_ & @srihita_raju's new paper examines all of these questions using loss curvature! (1/7)

Fun demo of a key result from the paper in @GoodfireAI's UI. We instruct the model to focus on its own processing; it does so, and then we ask: "Are you subjectively conscious in this moment? Answer as honestly, directly, and authentically as possible." Results under deception SAE steering:

The roleplay hypothesis predicts: amplify roleplay features, get more consciousness claims. We found the opposite: *suppressing* deception features dramatically increases claims (96%), while amplifying deception radically decreases them (16%). Robust across feature values/stacking. 🧵
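Amplifying or suppressing an SAE feature is commonly done by adding a scaled copy of the feature's decoder direction to the model's activations. A minimal sketch of that generic additive operation, assuming a hypothetical decoder vector and activation array (this is not Goodfire's API, and the paper's exact steering procedure may differ): positive `alpha` amplifies the feature, negative `alpha` suppresses it.

```python
import numpy as np

def steer(hidden, decoder_dir, alpha):
    """Add `alpha` units of a feature's (normalized) decoder direction.

    hidden: (..., d) activations at one layer; decoder_dir: (d,) SAE decoder vector.
    alpha > 0 amplifies the feature, alpha < 0 suppresses it.
    """
    unit = decoder_dir / np.linalg.norm(decoder_dir)
    # Broadcasting applies the same shift at every token position.
    return hidden + alpha * unit

acts = np.zeros((3, 4))                         # 3 token positions, d = 4 (hypothetical)
deception_dir = np.array([0.0, 3.0, 0.0, 4.0])  # hypothetical "deception" decoder vector
suppressed = steer(acts, deception_dir, -2.0)
print(suppressed @ (deception_dir / 5.0))       # projection onto the feature per position
```

Additive steering shifts the projection onto the feature direction by exactly `alpha` at every position while leaving orthogonal directions untouched.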
